A Guide to Real-Time Data Integration

Explore real-time data integration, how it works, and why it's crucial for modern business. Learn key patterns, challenges, and best practices in our guide.

Real-time data integration is all about getting data from Point A to Point B the very moment it's born. Instead of waiting around for scheduled updates, this approach creates a constant, live stream of information from all your sources.

Moving Data at the Speed of Business

Think about navigating a busy city. You could use a paper map printed that morning. It’s useful, but by lunchtime, it's already out of date. It won't show you the new traffic jam on the freeway or the surprise road closure downtown. This is exactly how traditional batch processing works. It gathers data into big chunks and moves it on a schedule, maybe once a night or once a week. This creates a delay, known as latency, between something happening and you actually knowing about it.

Now, imagine using a live GPS app instead. It gives you instant updates, rerouting you around that traffic jam the second it forms. That’s real-time data integration. It turns data from a stale, historical record into a living, breathing asset you can act on right now. It means businesses can react to what's happening in seconds, not sit around waiting for a report that's already hours old.

Why Immediate Data Access Matters

The hunger for instant information is growing fast. In fact, the real-time segment of the data integration market is blowing past older methods with a massive 28.3% compound annual growth rate. This isn't just a trend; it's a fundamental shift in how businesses have to operate to keep up.

This need for speed is critical everywhere:

- E-commerce: Imagine a flash sale. Real-time integration means as soon as a product is sold on your website, the inventory is updated on your app, in-store, and everywhere else. No more overselling.

- Finance: A fraudulent credit card swipe can be detected and blocked in milliseconds, before the transaction even goes through. This protects everyone involved.

- Logistics: You can track a delivery truck’s location live, giving customers pinpoint-accurate ETAs and allowing you to reroute drivers around unexpected delays on the fly.

At its core, real-time data integration is about closing the gap between an event and the decision it influences. The smaller that gap, the more agile and competitive an organization becomes.

To give you a clearer picture, let's break down the key differences between the old way and the new way.

Batch Processing vs Real-Time Integration At a Glance

This table provides a quick comparison of the core differences between traditional batch data processing and modern real-time data integration across key operational attributes.

| Attribute | Batch Processing | Real-Time Integration |
| --- | --- | --- |
| Data Flow | Moves data in large, scheduled chunks | Processes a continuous stream of events |
| Latency | High (minutes, hours, or days) | Ultra-low (milliseconds to seconds) |
| Data Freshness | Stale, reflecting a past point in time | Always current and up-to-the-second |
| Use Cases | End-of-day reporting, archival | Live dashboards, fraud detection, personalization |
| Business Impact | Reactive, historical analysis | Proactive, in-the-moment decision-making |

Ultimately, batch processing gives you a rear-view mirror look at your business, while real-time integration gives you a live dashboard of what's happening right now.

So, how does this near-instant data movement actually work? It's powered by clever technologies like Change Data Capture (CDC). Think of CDC as a vigilant reporter sitting inside your database, noting every single insert, update, and delete the moment it happens.

By treating data not as static files but as a continuous flow of events—also known as streaming data—organizations unlock a whole new level of operational intelligence. This is the foundation for building the kind of responsive, smart systems that define modern business.

How Real-Time Data Pipelines Actually Work

To really get what makes real-time data integration tick, you need to look at its core engine: Change Data Capture (CDC). The best way to think about CDC is like a stenographer for your database. Instead of constantly asking the entire database, "Hey, what's new?", it just listens in on the database's transaction log—a running tally of every single change.

This approach is very efficient. It picks up every INSERT, UPDATE, and DELETE event as soon as it is written to the log, without running extra queries against your tables. So, whether you're dealing with a high-traffic PostgreSQL database or a dynamic MongoDB cluster, CDC works quietly in the background with minimal impact on operational performance.
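To make the log-reading idea concrete, here's a minimal Python sketch. It assumes a simulated in-memory log rather than a real PostgreSQL write-ahead log or MongoDB oplog. The event shape (`op`, `before`, `after`) is only loosely modeled on Debezium's change-event envelope. `LogEntry`, `read_changes`, and the `lsn` checkpoint are hypothetical names for illustration.

```python
from dataclasses import dataclass
from typing import Any, Dict, Iterator, List, Optional


@dataclass
class LogEntry:
    """One record in a simulated (hypothetical) database transaction log."""
    lsn: int                            # log sequence number: position in the log
    table: str
    op: str                             # "c" = insert, "u" = update, "d" = delete
    before: Optional[Dict[str, Any]]    # row state before the change (None for inserts)
    after: Optional[Dict[str, Any]]     # row state after the change (None for deletes)


def read_changes(log: List[LogEntry], from_lsn: int) -> Iterator[Dict[str, Any]]:
    """Yield a change event for every log entry written after `from_lsn`.

    The reader only walks the log and never queries the tables themselves,
    which is why log-based CDC adds little load to the source database.
    """
    for entry in log:
        if entry.lsn > from_lsn:
            yield {
                "lsn": entry.lsn,
                "table": entry.table,
                "op": entry.op,
                "before": entry.before,
                "after": entry.after,
            }


if __name__ == "__main__":
    log = [
        LogEntry(1, "customers", "c", None, {"id": 7, "city": "Austin"}),
        LogEntry(2, "customers", "u", {"id": 7, "city": "Austin"}, {"id": 7, "city": "Denver"}),
        LogEntry(3, "customers", "d", {"id": 7, "city": "Denver"}, None),
    ]
    checkpoint = 1  # the reader already processed LSN 1 before a restart
    for event in read_changes(log, checkpoint):
        print(event["op"], event["before"], "->", event["after"])
        checkpoint = event["lsn"]  # persist this so a restart resumes from here
```

Saving the last position you processed lets a CDC reader resume after a restart without re-reading or skipping changes.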

The Journey of a Single Data Event

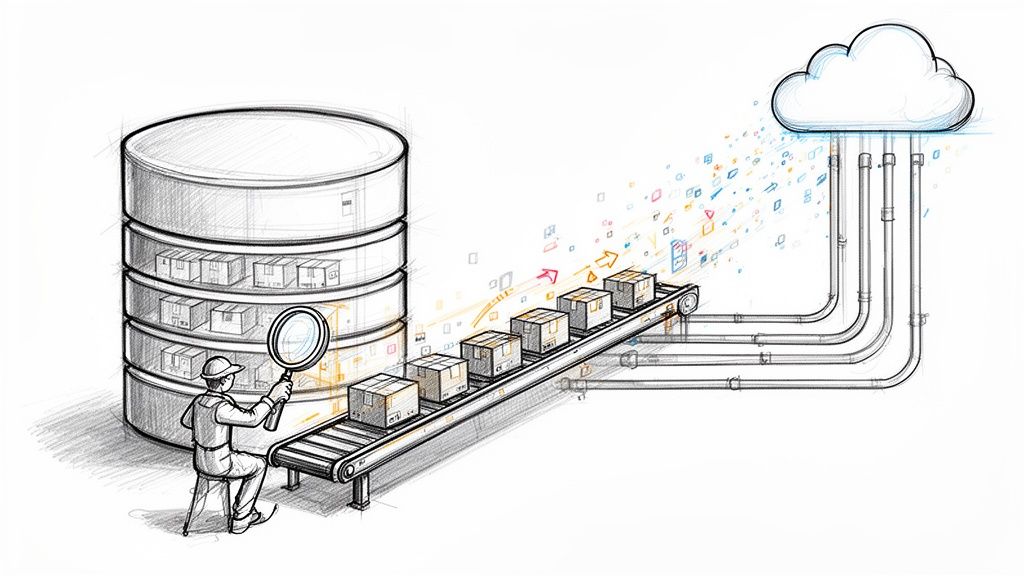

Once CDC nabs a change event, the real-time pipeline kicks into gear. We're not talking about shipping big, clunky files around. This is about escorting a tiny, individual packet of data on a very fast trip.

Here's how that journey usually plays out:

- Capture: A change event is spotted in the source database's log. Let's say a customer updates their shipping address on your e-commerce site.

- Streaming: That event is instantly fired off to a streaming platform, like Apache Kafka. Think of Kafka as a central nervous system for your data, built to handle millions of these tiny events per second and route them to the right places.

- Transformation: While the data is on the move, it can be cleaned up, enriched with other information, or reshaped. This makes sure it arrives at its destination ready for immediate use.

- Delivery: The processed event lands at its destination (a data warehouse like Snowflake for analysis, a real-time dashboard, or another app), typically within milliseconds to a few seconds of the original change.

This infographic paints a clear picture of the difference between sluggish, scheduled batch updates and the constant flow of real-time integration.

You can see how batch processing creates information delays (the hourglass), while real-time integration is a continuous, lightning-fast stream.
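The capture, streaming, transformation, and delivery steps listed above can be sketched in a few lines of Python. This is a single-process illustration under simplifying assumptions. A `deque` stands in for a Kafka topic. The transform only trims a postal code and tags a hypothetical customer tier. The "sinks" are plain functions, not real warehouse or dashboard connectors. All names here are made up for the example.

```python
from collections import deque
from typing import Any, Callable, Deque, Dict, Iterable, List

Event = Dict[str, Any]


def capture(changes: Iterable[Event], topic: Deque[Event]) -> None:
    """Capture + streaming: append each change event to the topic as it arrives."""
    for event in changes:
        topic.append(event)


def transform(event: Event, tiers: Dict[int, str]) -> Event:
    """Transformation: clean and enrich the event in flight without mutating the input."""
    after = dict(event["after"])
    after["postal_code"] = after["postal_code"].strip().upper()
    after["tier"] = tiers.get(after["customer_id"], "standard")
    return {**event, "after": after}


def deliver(topic: Deque[Event], sinks: List[Callable[[Event], None]], tiers: Dict[int, str]) -> None:
    """Delivery: drain the topic, transform each event, and hand it to every sink."""
    while topic:
        event = transform(topic.popleft(), tiers)
        for sink in sinks:
            sink(event)


if __name__ == "__main__":
    topic: Deque[Event] = deque()
    warehouse: List[Event] = []   # stand-in for a Snowflake table
    dashboard: List[Event] = []   # stand-in for a live dashboard feed
    capture([{"op": "u", "after": {"customer_id": 42, "postal_code": " sw1a 1aa "}}], topic)
    deliver(topic, [warehouse.append, dashboard.append], tiers={42: "gold"})
    print(warehouse[0]["after"])
```

A real pipeline runs these stages concurrently on separate machines, with the topic persisted in between, but the flow of a single event is the same.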

An E-Commerce Example in Action

Let's ground this in a real-world e-commerce situation. Your online store has 100 pairs of a hot new sneaker in stock. A customer buys one.

In a world without real-time integration, your inventory count might not get updated for an hour. In that time, your marketing emails are still pushing those sneakers, and other customers are trying to buy a product that's already gone. That leads to overselling and a lot of unhappy people.

With a CDC-powered real-time pipeline, the whole process happens almost instantly:

- CDC captures the UPDATE event in the inventory table (stock changes from 100 to 99).

- The event zips through Kafka to your inventory system, website, and mobile app all at once.

- Within seconds, the product page shows the correct stock level: 99 units.

This smooth, immediate flow prevents major operational headaches and keeps the customer experience positive. If you want to get into the nuts and bolts of setting this up, our guide on how to build data pipelines walks through the architecture in much more detail.

The core idea is simple but incredibly powerful: treat data changes as events to act on now, not as records to check on later. This shift from a passive to an active data mindset is what allows modern businesses to be so nimble. By reacting to things as they happen, companies can automate workflows, create personalized experiences, and make decisions with information that's truly up-to-the-second. That's a serious competitive edge.

Exploring Key Architectural Patterns

Once you understand how real-time pipelines move data from A to B, the next step is to look at the blueprints—the architectural patterns—that make it all happen. These aren't rigid, one-size-fits-all rules. Think of them as fundamental designs you can mix and match to solve a specific business problem. The right choice always comes down to what you’re trying to accomplish.

Let's walk through three of the most important patterns for real-time data integration: data streaming, data replication, and event-driven architecture. Each one tackles the continuous flow of information in a completely different way.

The Power of Data Streaming

Imagine a river. That’s data streaming. Data doesn't show up in big, scheduled batches; it flows constantly, as an unending stream of tiny events straight from the source. This pattern is tailor-made for any situation where you need to analyze, monitor, or react to things as they happen.

This is exactly what powers live analytics dashboards and real-time monitoring systems. For instance, an e-commerce site could stream click data to a dashboard during a Black Friday sale. This gives them a live view of user behavior, letting them see which promotions are hitting the mark and which ones need a quick tweak.

The whole point of data streaming is to process data while it's in motion. Instead of parking the data in a database and then asking it questions, you run your logic directly on the live stream itself. This is what unlocks those immediate insights and actions.
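Here's a minimal sketch of processing data in motion. It counts clicks per promotion in one-minute tumbling windows and emits each window's totals as soon as the window closes, so nothing has to be queried from a table afterwards. It assumes events arrive in timestamp order. Production stream processors such as Apache Flink also handle late and out-of-order events (for example, with watermarks), and this sketch ignores that. `TumblingWindowCounter` is a hypothetical name.

```python
from collections import Counter
from typing import Dict, Optional, Tuple


class TumblingWindowCounter:
    """Counts clicks per promotion in fixed, non-overlapping time windows.

    Assumes events arrive in timestamp order (a simplification).
    """

    def __init__(self, window_seconds: float) -> None:
        self.window_seconds = window_seconds
        self.current_window: Optional[int] = None
        self.counts: Counter = Counter()

    def add(self, ts: float, promo: str) -> Optional[Tuple[int, Dict[str, int]]]:
        """Record one click; return (window_id, totals) when a window closes, else None."""
        window = int(ts // self.window_seconds)
        closed = None
        if self.current_window is not None and window != self.current_window:
            # The first click of a new window closes the previous one.
            closed = (self.current_window, dict(self.counts))
            self.counts = Counter()
        self.current_window = window
        self.counts[promo] += 1
        return closed


if __name__ == "__main__":
    counter = TumblingWindowCounter(window_seconds=60)
    clicks = [(0, "free-shipping"), (12, "20-off"), (45, "free-shipping"), (61, "20-off")]
    for ts, promo in clicks:
        result = counter.add(ts, promo)
        if result is not None:
            print("window", result[0], "totals:", result[1])
```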

Ensuring Consistency with Data Replication

Data replication is all about creating a perfect, up-to-the-second clone of your data somewhere else. The main goal is to keep an identical copy of a source database in a target system, synchronized in real time. It's a critical strategy for building high-availability systems and for taking the pressure off your production databases.

Using a technique called Change Data Capture (CDC), this pattern sniffs out every single change at the source—a new order in a production database, an updated customer record—and instantly applies that same change to the replica. The copy is always an exact mirror of the original.

So, why would you do this?

- Disaster Recovery: If your primary database suddenly goes offline, you can switch over to the replicated copy with virtually no downtime or data loss.

- Analytics Offloading: Running massive analytical queries on your main operational database can bring it to its knees. By replicating that data to a dedicated data warehouse like Snowflake, analysts can go wild with complex queries without affecting the app's performance one bit.

- Geographic Distribution: Companies with a global user base can replicate data to servers closer to their customers, which cuts down latency and makes for a much faster experience.

Platforms like Streamkap are built for this. They provide a rock-solid way to replicate data from sources like PostgreSQL or MySQL directly into cloud data warehouses, guaranteeing the two systems stay perfectly in sync. For a deeper dive, check out our guide on different data pipeline architectures.
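The apply side of CDC-based replication can be sketched as a small function that replays change events onto a replica. This assumes a simplified event with an `op` code plus `before` and `after` row images, and it uses a Python dict as the target table. `apply_change` is a hypothetical helper, not Streamkap's API or any library's API.

```python
from typing import Any, Dict

Row = Dict[str, Any]


def apply_change(replica: Dict[Any, Row], event: Dict[str, Any], key: str = "id") -> None:
    """Apply one change event to an in-memory replica keyed by primary key."""
    op = event["op"]
    if op in ("c", "u", "r"):          # insert, update, or snapshot read: upsert the new row
        row = event["after"]
        replica[row[key]] = dict(row)
    elif op == "d":                    # delete: remove the row if it is present
        replica.pop(event["before"][key], None)
    else:
        raise ValueError(f"unknown op: {op!r}")


if __name__ == "__main__":
    replica: Dict[Any, Row] = {}
    events = [
        {"op": "c", "before": None, "after": {"id": 1, "status": "new"}},
        {"op": "c", "before": None, "after": {"id": 2, "status": "new"}},
        {"op": "u", "before": {"id": 1, "status": "new"}, "after": {"id": 1, "status": "shipped"}},
        {"op": "d", "before": {"id": 2, "status": "new"}, "after": None},
    ]
    for e in events:
        apply_change(replica, e)
    print(replica)
```

Inserts and updates are applied as upserts, and deletes tolerate rows that are already gone. As a result, replaying the same sequence of events ends in the same final state. That matters when a pipeline retries after a failure.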

Responding to Moments with Event-Driven Architecture

If data streaming is a river, an event-driven architecture (EDA) is more like a chain of dominoes. The fall of one domino (an "event") triggers the next, which triggers another, setting off a cascade of automated actions across completely different systems. Here, an "event" is just a fancy term for any significant change of state, like a customer placing an order or a sensor reading going over a set limit.

EDA is designed to decouple your systems. Instead of having applications tangled up in direct conversations with each other, they simply publish events to a central message broker. Other services can then subscribe to the events they care about and react accordingly.

Take a ride-sharing app. When you request a ride (that’s the event), several different services spring into action on their own:

- The dispatch service starts looking for the nearest driver.

- The payments service pre-authorizes your card.

- The notification service pings your phone with an update.

Each service does its job without needing to know the others even exist; they only need to know that the "ride requested" event happened. This makes the whole system more resilient, easier to scale, and simpler to update. It’s no surprise that this pattern is the backbone of modern microservices, allowing incredibly complex workflows to run like clockwork in real time.
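The decoupling described above can be sketched with a tiny in-process publish/subscribe broker. It's a simplified, synchronous stand-in for a real message broker such as Kafka or RabbitMQ, which deliver events asynchronously and durably. `MessageBroker` and the three service handlers are hypothetical.

```python
from collections import defaultdict
from typing import Any, Callable, DefaultDict, Dict, List

Event = Dict[str, Any]
Handler = Callable[[Event], None]


class MessageBroker:
    """Routes each published event to every handler subscribed to its type."""

    def __init__(self) -> None:
        self._subscribers: DefaultDict[str, List[Handler]] = defaultdict(list)

    def subscribe(self, event_type: str, handler: Handler) -> None:
        self._subscribers[event_type].append(handler)

    def publish(self, event_type: str, payload: Event) -> int:
        """Deliver the event to all subscribers; the publisher never knows who they are."""
        handlers = self._subscribers.get(event_type, [])
        for handler in handlers:
            handler(payload)
        return len(handlers)


if __name__ == "__main__":
    broker = MessageBroker()
    # Each hypothetical service subscribes on its own; none of them calls the others.
    broker.subscribe("ride_requested", lambda e: print("dispatch: find driver near", e["pickup"]))
    broker.subscribe("ride_requested", lambda e: print("payments: pre-authorize card for", e["rider_id"]))
    broker.subscribe("ride_requested", lambda e: print("notifications: ping", e["rider_id"]))
    broker.publish("ride_requested", {"rider_id": "r-123", "pickup": "5th & Main"})
```

Adding a new service, such as a surge-pricing calculator, means adding one more `subscribe` call. The rider app that publishes the event stays untouched.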

What Business Value Does Real-Time Data Actually Unlock?

It’s one thing to understand the technical side of real-time data integration, but it's another to see how it directly fuels business growth. Swapping out batch processing for live data streams isn’t just an IT project; it’s a fundamental shift that impacts revenue, customer loyalty, and operational speed. It’s about making decisions based on what’s happening right now, not on a report from last week.

When data flows continuously, you can finally get ahead of the curve. Instead of analyzing last quarter’s sales report to figure out what happened, you can see what customers are buying this very minute and adjust on the fly. That kind of immediacy is a serious competitive edge.

Driving Revenue in E-commerce

In the hyper-competitive e-commerce space, real-time data is the engine. It’s what powers the dynamic, responsive experiences customers have come to demand, touching everything from the warehouse floor to marketing campaigns.

Think about these everyday e-commerce scenarios powered by live data:

- Dynamic Pricing: Prices can shift automatically based on a competitor’s stock, current demand, or inventory levels. A competitor runs out of a popular item? Your system can instantly nudge the price up to capitalize on the moment.

- Real-Time Inventory Sync: A product sells in a brick-and-mortar store, and the online inventory updates in milliseconds. This simple sync is what prevents overselling and customer frustration during huge sales events like Black Friday.

- Personalized Recommendations: A shopper adds a new camera to their cart. Before they even think about it, your site recommends the exact memory card and camera bag that similar customers bought, boosting the average order value.

By closing the gap between a customer's action and the business's reaction, real-time integration turns simple transactions into intelligent, personalized interactions that boost sales and build loyalty.

The e-commerce market is betting big on real-time analytics for fraud detection and inventory management. This trend also taps into the massive world of unstructured data—things like emails and IoT sensor feeds—which now makes up over 80% of all enterprise data. Industries like healthcare are also set for huge growth, driven by digital health records and real-time patient monitoring. You can dig into more of the numbers behind this data integration market growth in recent industry analysis.

Mitigating Risk in Financial Services

For the finance industry, speed isn't a luxury; it’s a core requirement for security and compliance. A few milliseconds can be the difference between blocking a fraudulent transaction and absorbing a costly fraud loss.

Real-time data pipelines are the backbone of modern financial security. When a credit card is swiped, the data streams through a fraud detection engine that analyzes dozens of variables instantly—location, purchase amount, historical spending—to assign a risk score before the transaction is even approved. This happens in the blink of an eye, stopping threats without slowing down legitimate customers.

It's the same story on trading platforms. They depend on real-time market data to execute trades at the perfect moment. Even a few seconds of delay could translate into significant financial loss.
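As a rough illustration of the card-swipe check described earlier in this section, here's a rule-based sketch that scores a swipe against the card's history before approval. The two rules are both assumptions: an unusually large amount, and "impossible travel" since the last swipe. Their thresholds and weights are also made up. Real fraud engines typically combine many more signals, often with machine-learning models.

```python
import math
from dataclasses import dataclass


@dataclass
class Swipe:
    amount: float
    lat: float
    lon: float
    ts: float          # Unix timestamp, seconds


@dataclass
class CardHistory:
    avg_amount: float  # typical spend for this card
    last_lat: float
    last_lon: float
    last_ts: float     # time of the previous swipe, seconds


def haversine_km(lat1: float, lon1: float, lat2: float, lon2: float) -> float:
    """Great-circle distance between two points on Earth, in kilometres."""
    p1, p2 = math.radians(lat1), math.radians(lat2)
    dp, dl = p2 - p1, math.radians(lon2 - lon1)
    a = math.sin(dp / 2) ** 2 + math.cos(p1) * math.cos(p2) * math.sin(dl / 2) ** 2
    return 2 * 6371.0 * math.asin(min(1.0, math.sqrt(a)))


def risk_score(swipe: Swipe, history: CardHistory) -> float:
    """Return a score in [0, 1]; higher means more suspicious. Weights are illustrative."""
    score = 0.0
    # Rule 1: amount far above this card's usual spend (5x is an assumed threshold).
    if history.avg_amount > 0 and swipe.amount > 5 * history.avg_amount:
        score += 0.5
    # Rule 2: "impossible travel", an implied speed faster than an airliner (~900 km/h).
    hours = max((swipe.ts - history.last_ts) / 3600, 1e-6)
    distance = haversine_km(history.last_lat, history.last_lon, swipe.lat, swipe.lon)
    if distance / hours > 900:
        score += 0.4
    return min(score, 1.0)
```

Because both rules only need the incoming event plus a small per-card state, the check can run inside the stream before the authorization response is sent.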

Optimizing Operations Across Industries

The operational wins from real-time data aren’t just for e-commerce and finance. Logistics companies use it to track their fleets, rerouting drivers around traffic jams and giving customers pinpoint-accurate delivery times. In manufacturing, data streaming from factory sensors makes predictive maintenance possible, alerting teams to potential equipment failure before it causes an expensive shutdown.

This immediate view into day-to-day processes helps businesses spot bottlenecks, cut waste, and make their workflows leaner. With real-time data integration, companies aren't just hoarding data; they're putting it to work to build smarter, faster, and more resilient operations.

Navigating the Common Stumbling Blocks

Switching to real-time data integration is a game-changer, but let's be honest—it opens up a whole new can of engineering worms that batch processing just doesn't have. The upside is huge, but getting there means you need to anticipate the roadblocks and have a solid plan to get around them. It's not just about speed; it's about moving data reliably and correctly, every single time.

Most of these headaches boil down to keeping your data clean and managing the sheer complexity of a constantly running, distributed system. When you're building real-time data pipelines, you have to think hard about the non-functional requirements. As laid out in A Founder's Guide to Non Functional Requirements, things like reliability and consistency are just as important as the raw speed of your data flow.

Let's walk through three of the most common hurdles you’re likely to face.

Guaranteeing Message Ordering

One of the trickiest parts of real-time data integration is making sure events are processed in the right sequence. Think about financial transactions: a deposit absolutely has to be processed before the withdrawal that comes after it. If those events get flipped, your system might bounce a perfectly valid payment.

This is a classic problem in distributed systems. When you have high volumes of data, individual events can take different network paths or hit random delays, getting them all jumbled up before they reach their destination.

To fix this, streaming platforms like Apache Kafka rely on key-based partitioning. Each event carries a key, such as a customer's bank account ID, and all events with the same key are routed to the same partition. Kafka preserves order within a partition, so every transaction for that account is consumed in the order it was written. Events for different accounts may interleave, but that rarely matters. The result is strict ordering where it counts and no nasty logical errors downstream.
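Here's a minimal simulation of key-based partitioning. It hashes each account ID to a partition and appends events in arrival order. It uses `zlib.crc32` as a stand-in hash; Kafka's default partitioner hashes key bytes with murmur2 instead, but any deterministic hash gives the same per-key guarantee. `partition_for` and `produce` are hypothetical helpers, not Kafka client APIs.

```python
import zlib
from collections import defaultdict
from typing import Dict, List, Tuple

Event = Tuple[str, str]  # (account_id, action)


def partition_for(key: str, num_partitions: int) -> int:
    """Map a key to a partition with a stable hash (crc32 stands in for murmur2)."""
    return zlib.crc32(key.encode("utf-8")) % num_partitions


def produce(events: List[Event], num_partitions: int) -> Dict[int, List[Event]]:
    """Append each event to its key's partition, preserving arrival order."""
    partitions: Dict[int, List[Event]] = defaultdict(list)
    for account_id, action in events:
        partitions[partition_for(account_id, num_partitions)].append((account_id, action))
    return dict(partitions)


if __name__ == "__main__":
    events = [
        ("acct-1", "deposit 100"),
        ("acct-2", "deposit 50"),
        ("acct-1", "withdraw 80"),
        ("acct-2", "withdraw 20"),
    ]
    for partition, log in sorted(produce(events, num_partitions=6).items()):
        print(partition, log)
```

However the accounts land, the deposit for `acct-1` always sits ahead of its withdrawal in the same partition.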

Managing Schema Evolution

Data models are never set in stone. Sooner or later, a developer will add a new field to a user profile or change a data type from an integer to a string. This is called schema evolution, and if you don't handle it properly in a real-time pipeline, it can cause a total system meltdown. An unexpected change can easily break downstream applications, bringing your entire pipeline to a screeching halt.

Trying to manage these changes by hand is a recipe for disaster. It’s tedious, error-prone, and just doesn't scale. This is exactly why a schema registry is a must-have.

A schema registry is basically a centralized rulebook for your data. It stores every version of a schema and enforces compatibility rules. Incompatible changes are rejected before they reach downstream consumers, so they can't cause an outage.
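Here's a simplified sketch of how a registry can enforce compatibility. The two rules below are a deliberately reduced, assumed rule set: existing fields can't change type, and new fields must declare a default. They are not the full compatibility modes of a real product such as Confluent Schema Registry. `SchemaRegistry`, `compatibility_errors`, and the dict-based schema format are all hypothetical.

```python
from typing import Any, Dict, List

# Simplified, hypothetical schema format: field name -> {"type": ..., optional "default": ...}
Schema = Dict[str, Dict[str, Any]]


def compatibility_errors(old: Schema, new: Schema) -> List[str]:
    """List the reasons `new` would break consumers of data written with `old`."""
    errors = []
    for name, spec in old.items():
        if name in new and new[name]["type"] != spec["type"]:
            errors.append(f"{name}: type changed from {spec['type']} to {new[name]['type']}")
    for name, spec in new.items():
        if name not in old and "default" not in spec:
            errors.append(f"{name}: new field has no default")
    return errors


class SchemaRegistry:
    """Stores schema versions per subject and rejects incompatible updates."""

    def __init__(self) -> None:
        self._versions: Dict[str, List[Schema]] = {}

    def register(self, subject: str, schema: Schema) -> int:
        """Register a schema and return its version number (1-based)."""
        history = self._versions.setdefault(subject, [])
        if history:
            errors = compatibility_errors(history[-1], schema)
            if errors:
                raise ValueError("incompatible schema: " + "; ".join(errors))
        history.append(schema)
        return len(history)
```

Under these rules, adding an optional `nickname` field to a user profile is accepted. Changing `id` from an integer to a string is rejected when it is registered, before any producer writes data in the new shape.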

Platforms like Streamkap take this a step further by automating the entire process. They can detect a schema change in your source database—like a new column being added—and automatically apply that same change to the destination, like adding the new column in Snowflake. This turns a massive operational risk into a simple, hands-off task.
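The detect-and-apply step can be sketched as a diff between source and destination columns that emits `ALTER TABLE ... ADD COLUMN` statements. This is a hypothetical illustration, not how Streamkap implements it. The PostgreSQL-to-Snowflake type map is an assumed, partial mapping, and unknown types fall back to Snowflake's `VARIANT`. Identifiers aren't quoted or validated.

```python
from typing import Dict, List

# Assumed, partial mapping from PostgreSQL column types to Snowflake types.
PG_TO_SNOWFLAKE = {
    "integer": "NUMBER",
    "bigint": "NUMBER",
    "numeric": "NUMBER",
    "text": "VARCHAR",
    "boolean": "BOOLEAN",
    "timestamp": "TIMESTAMP_NTZ",
    "jsonb": "VARIANT",
}


def add_column_statements(table: str, source_cols: Dict[str, str], dest_cols: Dict[str, str]) -> List[str]:
    """Return DDL that adds every source column the destination table lacks.

    Only handles added columns; renames, drops, and type changes need more care.
    """
    statements = []
    for column, source_type in source_cols.items():
        if column not in dest_cols:
            target_type = PG_TO_SNOWFLAKE.get(source_type.lower(), "VARIANT")
            statements.append(f"ALTER TABLE {table} ADD COLUMN {column} {target_type}")
    return statements


if __name__ == "__main__":
    source = {"id": "integer", "email": "text", "loyalty_tier": "text"}  # new column in Postgres
    destination = {"id": "NUMBER", "email": "VARCHAR"}                  # current Snowflake table
    for stmt in add_column_statements("customers", source, destination):
        print(stmt)
```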

Taming Operational Complexity

Finally, let’s talk about the elephant in the room: building and maintaining a resilient real-time system is complex. A DIY approach often means stitching together a bunch of open-source tools like Debezium for change data capture, Kafka for streaming, and Apache Flink for processing. Every single one of these components has to be configured, monitored, and scaled on its own.

This creates a ton of operational overhead. What happens if a connector fails in the middle of the night? How do you guarantee exactly-once processing so you don't lose or duplicate data? Answering these questions requires deep engineering expertise and a team that’s always on watch.
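One common way to get exactly-once results on top of at-least-once delivery is an idempotent sink. It records the last offset applied for each partition and skips anything at or below it. The sketch below assumes offsets only increase within a partition, as they do in Kafka, and it uses in-memory dicts. In a real sink, the data write and the offset update should commit in the same transaction. `IdempotentSink` is a hypothetical class.

```python
from typing import Dict


class IdempotentSink:
    """Applies balance changes at most once per (partition, offset)."""

    def __init__(self) -> None:
        self.balances: Dict[str, int] = {}
        self.applied: Dict[int, int] = {}  # partition -> highest offset already applied

    def apply(self, partition: int, offset: int, account: str, delta: int) -> bool:
        """Apply one event; return False if it is a redelivered duplicate."""
        if offset <= self.applied.get(partition, -1):
            return False  # already applied before a retry or restart: skip it
        # In a real database, these two writes belong in a single transaction.
        self.balances[account] = self.balances.get(account, 0) + delta
        self.applied[partition] = offset
        return True


if __name__ == "__main__":
    sink = IdempotentSink()
    # Offset 1 is delivered twice, as can happen when a consumer retries after a crash.
    deliveries = [(0, 0, "acct-1", 100), (0, 1, "acct-1", -30), (0, 1, "acct-1", -30), (0, 2, "acct-1", 5)]
    for d in deliveries:
        sink.apply(*d)
    print(sink.balances)
```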

This is where managed platforms really shine. They absorb all that complexity for you, offering a single, unified solution that handles the tough stuff:

- Automated Failover: Keeping the system running smoothly even when parts of the infrastructure have issues.

- Scalability: Automatically adjusting resources to handle traffic spikes without anyone having to lift a finger.

- Monitoring and Alerting: Giving you a single dashboard to see what's going on and flagging problems before they become catastrophes.

By offloading these operational burdens, your team can stop worrying about keeping the lights on and start focusing on what really matters: getting value from your data.

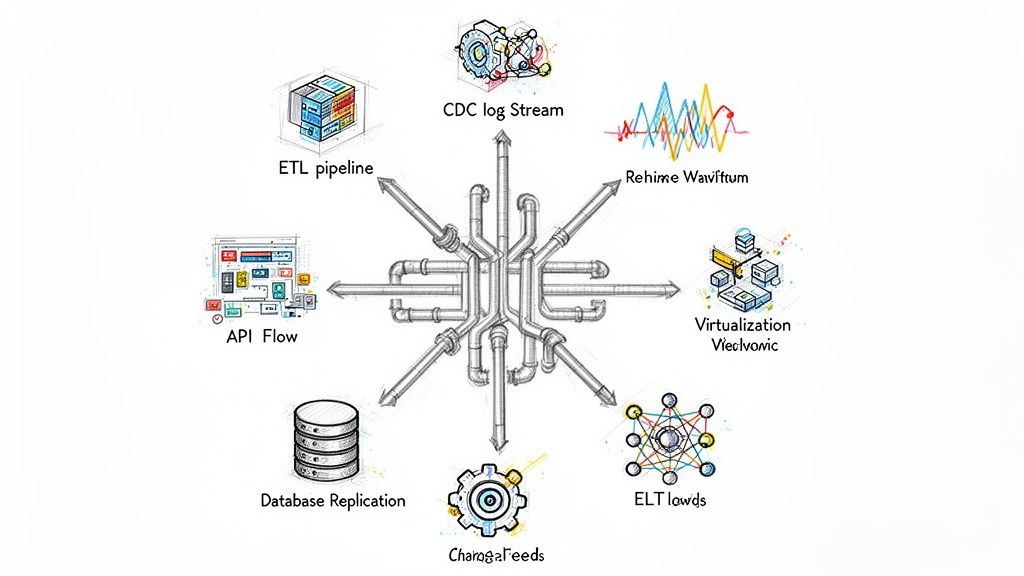

Choosing the Right Integration Strategy

Once you’ve decided to embrace real-time data, you’ll hit a critical fork in the road. Do you build a solution from the ground up, or do you partner with a managed platform? This is a huge decision, and it’s about far more than just technology—it's a strategic choice that balances cost, effort, and your team's focus.

The first path is the classic "Do-It-Yourself" (DIY) approach. This is where your team assembles a custom pipeline using some seriously powerful open-source tools. Think of it like building a high-performance race car from scratch; you're hand-picking every part. You'd likely use Debezium for Change Data Capture (CDC), Apache Kafka as the streaming backbone, and maybe Apache Flink to process it all.

This route gives you ultimate control and the ability to customize every single detail. The trade-off? It demands a massive investment in specialized engineers who can build, scale, and, most importantly, maintain this complex system. Your team is on the hook for everything, from connector stability and data integrity to the underlying infrastructure.

The alternative is to go with a managed platform like Streamkap. This is more like leasing that high-performance race car. You get all the speed and reliability without needing to be the mechanic who built it. These platforms bundle all the necessary components into a single, cohesive service, abstracting away the brutal complexity so your team can focus on what to do with the data, not just how to move it.

Making the Right Choice for Your Team

To figure out which path makes sense, you have to look beyond the initial software price tag and consider the total cost of ownership. This includes the often-hidden costs of engineering salaries, ongoing maintenance, and the operational headaches that come with running critical infrastructure.

Ask yourself these tough but crucial questions:

- Expertise: Do we have engineers with deep, hands-on experience in distributed systems, Kafka, and real-time processing? And can we afford to hire more if they leave?

- Time to Value: How fast do we need these real-time pipelines up and running to start seeing a return? Are we talking weeks or months?

- Maintenance: Who gets the call when a connector fails at 3 AM or a schema change breaks the pipeline? Is my team prepared for that 24/7 responsibility?

The build-versus-buy decision is fundamentally about resource allocation. You're choosing where your most valuable asset—your engineering team's time and talent—will make the biggest impact on the business.

Let’s get practical and compare these two approaches head-to-head.

DIY vs Managed Real-Time Integration Platform

When you lay it all out, the trade-offs between building it yourself and using a managed service become crystal clear. It’s not just about cost; it’s about time, risk, and focus.

| Factor | DIY (Open Source) | Managed Platform (e.g., Streamkap) |
| --- | --- | --- |
| Initial Cost | Low software cost (it's open-source), but infrastructure and specialized engineering setup costs are very high. | Higher subscription fee upfront, but it bundles infrastructure, support, and maintenance. |
| Implementation Time | Months. It takes a long time to build, test, and stabilize a truly production-ready pipeline. | Days, sometimes hours. You can configure connectors and start streaming data almost immediately. |
| In-House Expertise | Requires a dedicated team of specialized data engineers who live and breathe complex tools like Kafka and Debezium. | Minimal expertise needed. The platform is designed to hide the underlying complexity from the user. |
| Ongoing Maintenance | High. Your team is fully responsible for all updates, security patches, scaling, and 3 AM troubleshooting. | Low. The provider handles all maintenance, monitoring, and guarantees platform uptime. |
| Total Cost of Ownership (TCO) | Often much higher once you factor in engineering salaries, operational burdens, and infrastructure spend. | Typically 60-80% lower TCO because the engineering and operational load is lifted from your team. |

So, what's the verdict? A DIY approach can make sense for massive enterprises with dedicated platform engineering teams and truly unique, edge-case requirements. For almost everyone else, a managed platform offers a faster, more reliable, and far more cost-effective path to getting real-time data integration done right.

Got Questions? We've Got Answers

Even when you've got the concepts down, a few practical questions always come up when you start digging into real-time data integration. Let's tackle some of the most common ones to clear up any confusion.

We'll cover everything from how it handles huge data loads to the classic "what's the difference between this and my old ETL job?" question.

What's the Real Difference Between Real-Time Integration and ETL?

It all comes down to timing. Think of traditional ETL (Extract, Transform, Load) as a batch process. It's like developing a roll of film—you collect a bunch of data over time, process it all in one big scheduled job (maybe overnight), and then finally load it.

Real-time integration, on the other hand, is a continuous flow. Using technologies like Change Data Capture (CDC), it processes data one event at a time, right as it happens. It’s more like a live video feed than a developed photo.

How Can Real-Time Data Integration Keep Up With High Volumes?

This is where modern platforms really shine. They're built from the ground up to handle massive scale. The secret sauce is often a distributed system like Apache Kafka; its largest deployments process trillions of events every day.

These systems scale horizontally: to handle more data, you add more machines (brokers, partitions, and consumers) rather than replacing one server with a bigger one. Throughput grows with the cluster instead of hitting the ceiling of a single machine.

This kind of architecture means that even during a huge traffic spike (think Black Friday levels of activity), a well-provisioned pipeline keeps delivering data with low latency instead of buckling or losing information.
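Horizontal scaling works partly because a topic's partitions are spread across the consumers in a group, so adding consumers splits the work. Here's a minimal round-robin sketch of that idea. Kafka ships several real assignment strategies (range, round-robin, and sticky variants), and this function is a simplified stand-in, not Kafka's implementation. It also shows a real limit: within a group, each partition goes to exactly one consumer, so consumers beyond the partition count sit idle. `assign_round_robin` is a hypothetical name.

```python
from typing import Dict, List


def assign_round_robin(partitions: List[int], consumers: List[str]) -> Dict[str, List[int]]:
    """Spread partitions across consumers one at a time, like dealing cards."""
    if not consumers:
        raise ValueError("need at least one consumer")
    assignment: Dict[str, List[int]] = {c: [] for c in consumers}
    for i, partition in enumerate(sorted(partitions)):
        assignment[consumers[i % len(consumers)]].append(partition)
    return assignment


if __name__ == "__main__":
    partitions = list(range(12))
    for group_size in (2, 4, 16):
        consumers = [f"consumer-{n}" for n in range(group_size)]
        loads = [len(p) for p in assign_round_robin(partitions, consumers).values()]
        print(f"{group_size} consumers -> max {max(loads)} partitions each, {loads.count(0)} idle")
```

This is why teams usually create topics with more partitions than they need today: partitions cap how far the consumer side can scale out.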

Is It Expensive to Implement a Real-Time System?

The cost really depends on whether you build it yourself or use a managed service. A DIY approach with open-source tools might look cheap upfront, but it requires a significant and ongoing investment in specialized engineers just to build and maintain it.

That's why managed platforms like Streamkap often have a much lower Total Cost of Ownership (TCO)—we're talking 60-80% less. You pay a subscription, but you completely sidestep the need for a dedicated team to manage the complex plumbing. Your engineers can get back to building things that make your business money, not just keeping the data flowing.

Ready to stop wrestling with complex pipelines and start streaming data in minutes? Streamkap offers a fully managed, real-time data integration solution that automates the hard parts—like schema evolution and failover—so you can focus on insights, not infrastructure. Start your free trial today.