<--- Back to all resources

What Is Streaming Data and How Does It Work

Discover what is streaming data with this simple guide. Learn how real-time data streams power modern business, from analytics to instant customer experiences.

At its core, streaming data is a continuous, never-ending flow of information coming from thousands of different sources. Think of it as data that’s always in motion. This is a fundamental shift from how we used to handle data, which involved collecting it in big chunks, or batches, and then processing it all at once. Streaming data is different—it’s processed the moment it’s created.

Understanding Streaming Data vs Batch Data

To really get a feel for this, let’s use a simple analogy. Picture data as water.

Traditional batch data is like collecting rainwater in a giant barrel. You have to wait for the barrel to fill up before you can do anything with the water. Once it’s full, you take it away and analyze its contents. It’s a stop-and-start process that works with a large, fixed amount of data.

Streaming data, however, is like a river. The water is constantly flowing, and you don’t wait for it to collect anywhere. Instead, you dip your tools right into the river to analyze the water as it rushes past. This is the essence of data in motion—an endless sequence of events that gives you insights right now, not later. In a world that moves as fast as ours, that real-time feedback is everything.

The market certainly reflects this shift. The global streaming analytics market is already valued at around USD 23.19 billion and is expected to explode to USD 157.72 billion by 2035, growing at a blistering 21.1% each year. You can see the full streaming analytics market forecast on futuremarketinsights.com.

Streaming data isn’t just about speed; it’s about relevance. It allows businesses to react to events as they unfold, not hours or days later when the opportunity has passed.

Streaming Data vs Batch Data at a Glance

The difference between these two approaches is more than just technical—it changes what’s possible. Batch processing is perfectly fine for jobs where you can afford to wait, like putting together a monthly sales report. But streaming is absolutely essential when you need to act now, like flagging a fraudulent credit card transaction the second it happens.

For a deeper look into the nuts and bolts, our guide on batch vs stream processing breaks down their specific use cases and architectures.

To make the comparison crystal clear, here’s a simple table highlighting their core differences.

CharacteristicStreaming DataBatch DataData ScopeUnbounded, continuous eventsFinite, large volumesProcessing TimeReal-time (milliseconds/seconds)Delayed (minutes/hours/days)Data SizeSmall, individual records (kilobytes)Large blocks of data (gigabytes/terabytes)Analysis ModelEvent-driven, immediate responseQuery-based on stored data

Ultimately, one isn’t “better” than the other; they’re built for entirely different jobs. Batch processing looks at the big picture over time, while streaming focuses on what’s happening this very instant.

How a Streaming Data Pipeline Actually Works

It’s one thing to picture streaming data as a constantly flowing river, but it’s another to understand how you actually analyze that river as it rushes by. That’s where a streaming data pipeline comes in. This is the system that makes real-time analysis possible, moving data from where it’s created to a point of insight almost instantly.

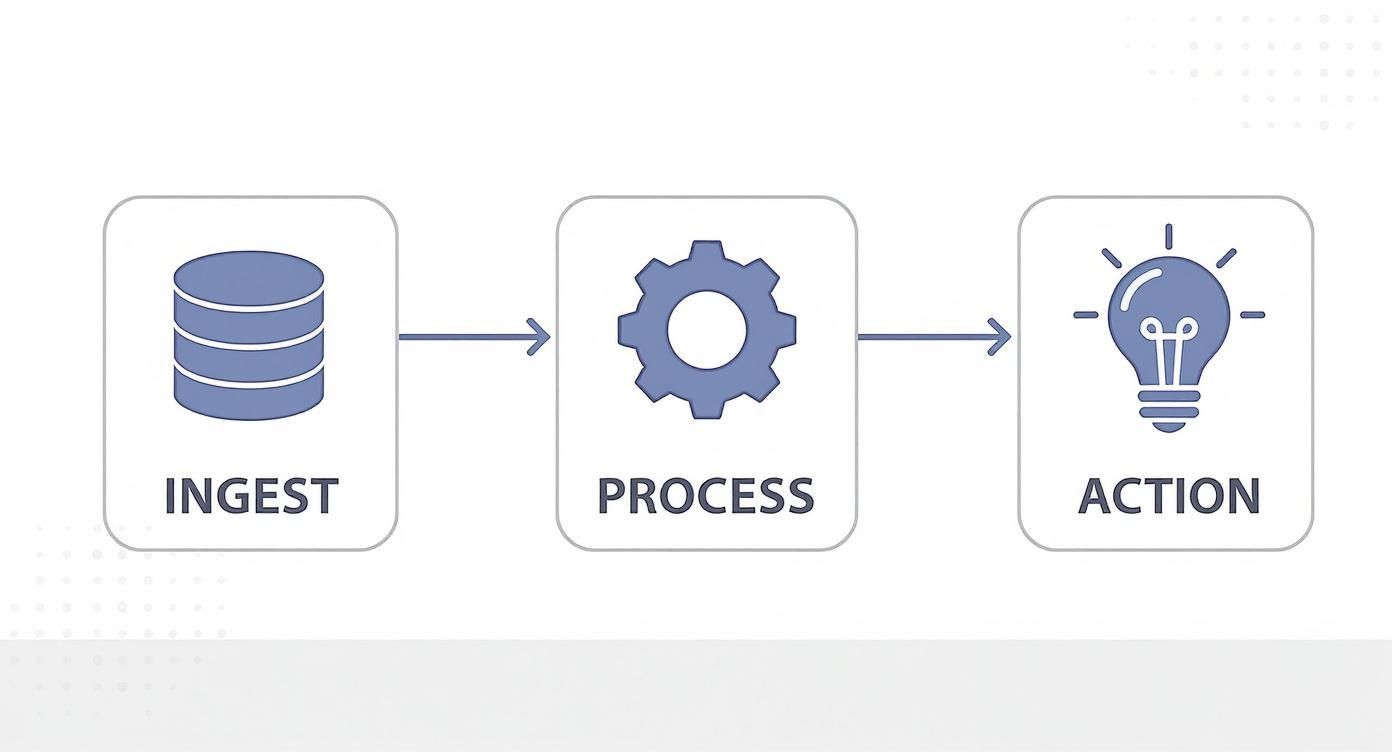

Think of the pipeline as a sophisticated, three-stage assembly line for information. Each stage has a very specific job, and they all work in perfect sync to turn a raw event into a valuable, actionable insight.

Stage 1: Ingestion

First, you have to actually capture the data. This is the ingestion stage, where raw events from all over the place are collected and funneled into the pipeline. This could be anything—a customer clicking “add to cart” on your website, a sensor on a factory floor reporting a temperature spike, or a new post hitting a social media feed.

The big challenge here is reliability. You need a system that can handle a massive, often unpredictable, flood of tiny data packets without dropping a single one. This is where a message broker like Apache Kafka really shines.

A message broker acts like the central post office for your data. It accepts every piece of mail (each data event) from every sender, sorts it into organized queues (called topics), and holds it securely until the next stage is ready to process it. This setup ensures no data gets lost, even if there’s a sudden, massive surge.

Stage 2: Processing

Once the data is safely queued up, it moves into the processing stage. This is the real brain of the operation, where raw data gets transformed, analyzed, and enriched on the fly. A stream processing engine, like Apache Flink or Apache Spark, is the powerhouse here.

This engine runs continuous queries or applies complex logic to the data as it streams through. For instance, it might perform calculations, filter for specific types of events, or even combine multiple data streams to spot emerging patterns.

If you want to dive deeper into the mechanics of this, check out our complete guide to data stream processing.

Stage 3: Action and Storage

Finally, after the data has been processed, it’s ready for the action stage. The insights generated in the processing stage are either used to trigger an immediate response or sent to a final destination for storage and future analysis.

This is where the business value really comes to life, and the possibilities are endless:

- Trigger an Alert: A fraud detection system could instantly block a suspicious credit card transaction and text the user.

- Update a Dashboard: A business intelligence tool could display live sales figures as orders are placed.

- Personalize an Experience: An e-commerce site could update product recommendations based on what a user is clicking on right now.

- Store for Analysis: The processed data can be loaded into a cloud data warehouse like Snowflake or Google BigQuery for deeper, long-term trend analysis.

From the moment an event happens to the final action, this entire journey takes just milliseconds. It allows businesses to operate with a level of awareness and responsiveness that just wasn’t possible before.

Why Streaming Data Is a Game Changer for Business

It’s one thing to understand the nuts and bolts of a streaming pipeline, but the real magic happens when you see what it can actually do for a business. Shifting from old-school batch processing to real-time streams isn’t just a technical tweak; it’s a fundamental change that opens up entirely new ways of operating and gives you a serious edge over the competition. The power to analyze, decide, and act in milliseconds is what separates the leaders from the laggards.

Think about it this way: instead of poring over reports to figure out what happened yesterday, companies can now react to events the second they happen. This shift to a proactive stance changes everything. It turns data from a historical archive into a live, strategic asset that drives results right now.

From Hindsight to Foresight

The true value of streaming data lies in its immediacy. It lets companies graduate from making reactive decisions based on old information to running proactive, and even predictive, operations. This capability creates real, measurable benefits across all sorts of industries.

Just look at these transformations in action:

- E-commerce Personalization: An online retailer can track a shopper’s clicks in real time. Rather than waiting hours to refresh recommendations, it can instantly suggest products based on what that person is looking at at this very moment, massively boosting the odds of a sale.

- Financial Fraud Detection: A bank can scrutinize transaction streams as they flow in. A suspicious purchase can be flagged and blocked before the transaction is even complete, saving millions in losses and protecting its customers.

- Supply Chain Optimization: A logistics firm can use live GPS data from its delivery trucks to reroute them around sudden traffic jams or bad weather, ensuring packages arrive on time while cutting down on fuel costs.

This simple diagram breaks down the flow from capturing raw data to taking a valuable action.

This visual shows how raw events get pulled in, processed, and turned into the kind of immediate, actionable insights that create real business value.

By processing data in motion, organizations gain the ability to create superior customer experiences, monitor operations proactively, and make critical decisions with up-to-the-second information. It’s about seizing opportunities that exist for only a fleeting moment.

As more businesses get on board with these systems, it becomes crucial to explain their value clearly. Effective strategies in content marketing for technology companies are essential for showcasing these capabilities and proving a clear return on investment.

At the end of the day, moving to streaming data isn’t just about adopting new technology. It’s about building a smarter, faster, and more responsive organization from the ground up.

Real-World Examples of Streaming Data in Action

The best way to really grasp what streaming data is all about is to see it in action. Let’s move past the theory and look at how the products and services we use every day rely on it. These examples show just how valuable processing data on the fly can be, creating smarter and more responsive experiences across different industries.

Think about the last time you used a ride-sharing app. From the moment you open it, your phone is sending a constant stream of your location data to the platform’s servers.

This live feed is what makes the whole service tick. It’s how the system finds the closest driver, figures out your fare based on current traffic and demand—that’s dynamic pricing in action—and gives you a pretty accurate ETA. Without that constant flow of GPS data, the app would be useless.

Powering Personalized Entertainment

The media world is a huge consumer of streaming data. Every time you binge a show on a service like Netflix or Hulu, you’re not just watching a stream; you’re creating one. The platform is tracking every single play, pause, rewind, and search you make, all in real time.

That viewership data gets fed straight into recommendation algorithms. What’s the result? The second you finish an episode, the service has already crunched the numbers on your behavior to suggest what you might like next. It’s a loop of constant analysis that creates a super personalized and sticky experience, and it’s a big deal in a market expected to reach USD 108.73 billion globally. You can read more about the media streaming market’s growth here.

Streaming data lets businesses shift from simply looking at what a customer did yesterday to influencing what they do in the next second. It’s the difference between passive observation and active engagement.

Enabling the Smart Factory

In manufacturing, streaming data is the key to dodging costly disasters and fine-tuning operations. This is the heart of what’s often called the Industrial Internet of Things (IIoT).

Modern factories are packed with thousands of sensors embedded in their machinery. Each one generates a continuous stream of data on things like temperature, vibration, pressure, and overall performance. This torrent of information is monitored constantly to spot tiny deviations that could signal a big problem on the horizon.

This strategy is known as predictive maintenance, and it allows companies to schedule repairs before a machine breaks down. It’s a game-changer for avoiding unplanned downtime and boosting safety. Here’s a quick breakdown of how it works:

- Data Source: Live sensor readings from a piece of factory equipment.

- Real-Time Insight: An algorithm flags a vibration pattern that’s slightly off from the normal baseline.

- Powerful Outcome: An alert is automatically fired off to a maintenance team to check the machine, potentially preventing a multi-million dollar production halt.

From hailing a ride and watching a movie to building the products we buy, streaming data has become the invisible engine driving a more efficient, personalized, and innovative world.

Choosing The Right Streaming Data Architecture

When you’re building a system to handle a constant flow of data, there’s no one-size-fits-all solution. Think of it like a building architect choosing a blueprint; a data engineer needs to pick the right architecture to structure their data pipelines. For years, two classic blueprints have shaped how we think about this: the Lambda and Kappa architectures.

Getting a handle on these two patterns is a great way to understand the trade-offs that come with designing any real-time system. They really just represent two different philosophies for balancing the need for speed with the demand for accuracy.

The Two Foundational Blueprints

So, what are these foundational patterns all about?

- The Lambda architecture is the original “belt-and-suspenders” approach. It processes data along two separate paths at the same time: a “hot” path for real-time speed and a “cold” path for batch-processing accuracy. The idea is simple: get fast, good-enough answers right now from the streaming layer, then correct or enrich them later with the more thorough batch layer.

- The Kappa architecture came along and simplified things by championing a single, all-streaming path. The argument here is that if your streaming system is powerful and reliable enough, you can handle both real-time processing and historical reprocessing without needing a completely separate batch pipeline. It’s a much sleeker, more unified approach, but it puts all its faith (and a heavy workload) on the stream processing engine.

Both architectures aim to solve the same problem: providing accurate, timely insights from a continuous flow of data. The choice often comes down to balancing system complexity against your specific business needs.

To help you see the differences more clearly, here’s a quick comparison of the two approaches.

Lambda vs Kappa Architecture Comparison

AspectLambda ArchitectureKappa ArchitectureComplexityHigh. Manages two separate data paths and codebases.Lower. Uses a single, unified stream processing path.Data PathsTwo paths: a “hot” real-time path and a “cold” batch path.One path: a single stream handles everything.LatencyLow latency for real-time views, high latency for batch views.Consistently low latency for all processing.CostCan be higher due to redundant data storage and processing.Generally more cost-effective with less infrastructure.Use CaseIdeal for systems where historical accuracy is paramount.Great for real-time applications where simplicity is key.

Ultimately, both have their place, but they represent a more traditional way of thinking about the problem.

Modernizing The Approach

While Lambda and Kappa provide a great theoretical foundation, the real world of data engineering has moved on a bit. The sheer complexity of maintaining two codebases for Lambda, or the heavy reprocessing demands of Kappa, often created a massive amount of engineering overhead.

For a deeper dive into these traditional models and how they stack up against newer methods, check out our guide on modern data pipeline architectures.

Today, the game has changed. Modern real-time data platforms like Streamkap are making these architectural choices far simpler. By using Change Data Capture (CDC), these tools create a single, reliable stream of data straight from sources like your production databases. This completely sidesteps the need for those complex, dual-path systems.

This modern approach offers some serious advantages:

- Reduced Complexity: You get one unified pipeline for both real-time and historical data, without the headache of managing separate systems.

- Lower Engineering Overhead: Teams can build and manage sophisticated pipelines without needing to be experts in complex tools like Kafka or Flink.

- Real-Time Analytics: You can connect databases like PostgreSQL or MongoDB directly to a data warehouse like Snowflake for instant analytics. This setup gives you the best of both worlds—the speed of Lambda’s hot path and the accuracy of its cold path, but with far less effort.

Of course. Here’s the rewritten section, designed to sound natural and human-written, as if from an experienced expert.

The Hard Parts of Streaming Data (And How to Solve Them)

Let’s be honest: moving to real-time data processing isn’t a walk in the park. While the payoff is huge, it brings its own set of technical headaches. With traditional batch systems, you can stop a job, fix a bug, and just rerun it. But a streaming system is always on, constantly handling an endless river of data. That requires a completely different way of thinking.

This push for instant insight is what’s behind some staggering market growth. The streaming analytics market is currently valued at USD 27.84 billion and is expected to rocket to USD 176.29 billion by 2032. This isn’t just hype; it’s being driven by the real-world demands of IoT devices and AI models that need fresh data to function. You can dig into the complete streaming analytics market report on Fortune Business Insights for the full picture.

To successfully manage this constant flow, you have to get ahead of some tough, but common, problems.

The biggest challenge with streaming data isn’t just about speed—it’s about correctness. If you can’t guarantee that every single event is processed exactly once, without any loss or duplication, you can’t build a reliable system that people can trust.

Tackling the Key Hurdles

When you’re building a streaming pipeline, you’ll inevitably run into a few classic issues. Here’s what to look out for:

- Exactly-Once Processing: What happens if a server reboots mid-process? You have to make sure an event isn’t counted twice. The solution lies in building idempotent systems, which are designed to safely re-process the same data without messing up the results.

- Out-of-Order Events: Data rarely arrives in a neat, chronological line. Imagine a user’s phone going offline for a minute—it will send a batch of older events once it reconnects. We use techniques like watermarking to tell the system to wait a bit for late-arriving data before finalizing calculations.

- Maintaining State: How does a system remember things over time, like the running total in a user’s shopping cart? This is where stateful processing comes in. It allows your application to hold onto and update information as new events arrive, giving you a much richer and more accurate analysis.

These challenges might sound daunting, but they are well-understood problems in the world of data engineering. Modern tools and established patterns are specifically designed to handle them. The key is to go in with a clear strategy for building a system that’s not just fast, but also incredibly resilient.

Got Questions About Streaming Data?

As you dive deeper into the world of streaming data, it’s natural for a few questions to surface. It’s a complex topic, so let’s walk through some of the most common ones to help sharpen your understanding.

Are Streaming Data and Real-Time Data the Same Thing?

This is a great question, and one that trips a lot of people up. While they’re often used in the same breath, they aren’t exactly the same.

Think of streaming data as the continuous flow of information itself—a constant river of events. Real-time data, on the other hand, is all about the speed at which you process that information.

You could have a data stream that you only process in batches once an hour. That’s still streaming data, but it’s certainly not real-time. True real-time processing means you’re acting on that stream the moment it arrives, typically within milliseconds. The two concepts go hand-in-hand, but they describe different parts of the puzzle.

What Are the Best Programming Languages for Stream Processing?

A few languages have really become the go-to choices for building stream processing applications, mostly because of their powerful libraries and proven performance.

Here are the big three:

- Java and Scala: These are the languages that power some of the most fundamental stream processing frameworks out there, like Apache Flink and Apache Kafka Streams. If you’re building from the ground up, this is where you’ll likely start.

- Python: Thanks to its simplicity and incredible ecosystem of data science libraries, Python is a fantastic choice for building streaming applications. It’s especially popular for tasks that involve applying machine learning models to live data feeds.

- SQL: This one might be a surprise, but it’s becoming a game-changer. Modern platforms are increasingly letting people run SQL queries directly on data streams. This has opened up the world of stream processing to data analysts and other folks who aren’t specialized engineers.

How Can a Small Business Start Using Streaming Data?

Getting started is probably easier than you think. You don’t need a huge team or a massive budget. The secret is to start small and focus on a single, high-impact problem.

Don’t try to boil the ocean by overhauling your entire infrastructure. Instead, pick one specific pain point where real-time insights could make a real difference. Maybe that’s monitoring live website activity to catch errors or tracking inventory changes the second they happen.

Cloud services have democratized this technology, making the tools much more affordable and manageable. You can start with a small, well-defined project, show its value, and then build from there as your business grows.

Ready to build powerful, real-time data pipelines without all the usual headaches? Streamkap uses Change Data Capture (CDC) to stream data from your databases to your warehouse in milliseconds. See how easy real-time can be and get started.