<--- Back to all resources

Batch vs Stream Processing: Which Data Method Is Right for You?

Learn the key differences between batch vs stream processing to choose the best data approach for your needs. Find out more now!

When you get down to it, the real difference between batch vs stream processing is time. Think of it this way: batch processing is like collecting all your mail for the week and opening it on Saturday. Stream processing is like reading each letter the moment it arrives. Your choice boils down to a simple question: do you need historical accuracy or the ability to act right now?

Understanding Core Data Processing Models

Picking the right data processing model isn’t about finding a one-size-fits-all “best” option. It’s about matching the architecture to what your business actually needs to accomplish. This decision has a ripple effect on everything from your operational efficiency to the experience you can offer customers. Getting a handle on the fundamental distinctions is the first step toward building a data pipeline that works.

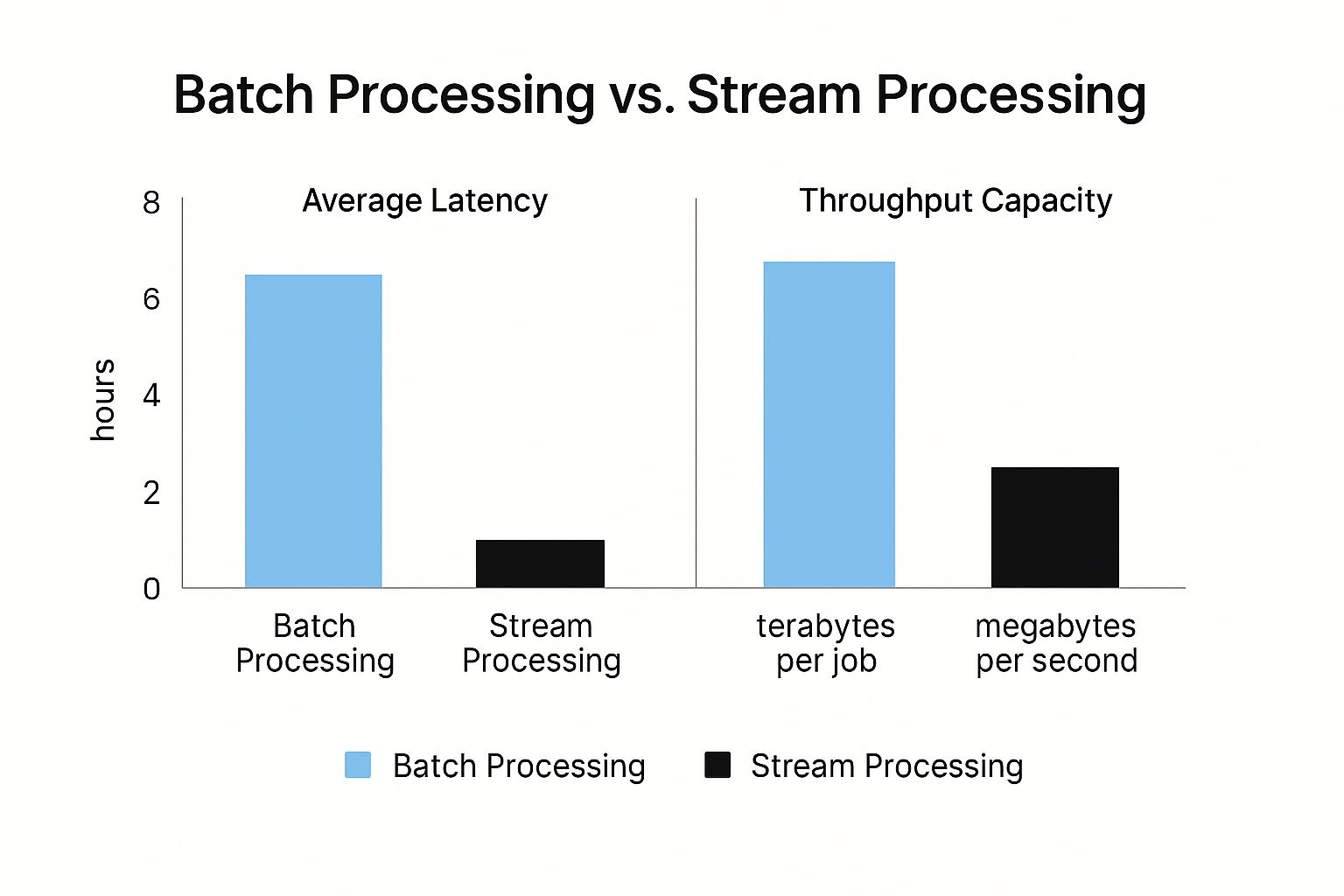

This chart really helps visualize the trade-offs, pitting average latency against throughput capacity for each model.

As you can see, batch processing is designed to chew through massive volumes of data—think terabytes per job. On the other hand, stream processing is all about speed, optimized for ultra-low latency measured in milliseconds.

Batch vs Stream Processing At a Glance

To make the comparison even clearer, let’s break down the core characteristics of each approach. The table below is a quick cheat sheet for understanding how they handle data, what to expect from their performance, and where they truly shine.

CharacteristicBatch ProcessingStream ProcessingData ScopeLarge, finite datasets (bounded)Infinite, continuous data streams (unbounded)LatencyHigh (minutes, hours, or even days)Low (milliseconds to seconds)ThroughputHigh (built for data volume)Varies (built for data velocity)Data InputCollected and stored over a periodProcessed event-by-event as it arrivesIdeal Use CasesPayroll, billing, ETL jobs, reportingFraud detection, IoT monitoring, personalization

This table lays out the essential trade-off: batch processing prioritizes chewing through enormous datasets efficiently, while stream processing prioritizes speed and immediate insight. Neither is inherently better; their value is all about the problem you’re trying to solve.

Ultimately, the architecture you build will be shaped by these factors. For a deeper dive into how these models work within larger systems, it’s worth exploring common data pipeline architectures that often combine both batch and streaming principles. This foundational knowledge is crucial before you get into the weeds of implementation.

How Batch Processing Architecture Delivers Throughput

The real power of batch processing is baked right into its architecture, a system designed with one primary goal in mind: maximum data throughput. Instead of chasing speed, this model is all about handling massive datasets efficiently and cost-effectively. It’s a deliberate, methodical approach built on collecting data, storing it, and then processing it all at once in large, scheduled chunks.

The workflow usually starts with raw data pooling in a central spot, like a data lake or cloud storage. This collection happens over a set period—maybe an entire day or even a week. Once that window closes, a scheduled Extract, Transform, Load (ETL) job fires up. This is the heart of the batch process, where all that raw information gets cleaned, structured, and made ready for analysis.

Core Components and Frameworks

To pull off this kind of large-scale operation, batch architectures lean on powerful distributed computing frameworks. These systems are brilliant at breaking down a gigantic processing job into smaller, more manageable tasks that can run in parallel across a whole cluster of machines.

A couple of key technologies make this possible:

- Apache Hadoop: This is the foundational framework for distributed processing of huge datasets. Its classic MapReduce programming model is the textbook example of batch-style data crunching.

- Apache Spark: While Spark can also handle streaming data, its core engine is an absolute beast for large-scale batch jobs. By performing operations in memory, it often runs circles around Hadoop for many ETL tasks.

These frameworks are the heavy lifters, the workhorses that make processing terabytes or even petabytes of data a reality. They’re built to squeeze every ounce of performance out of the available hardware during the processing window. If you’re just getting started, learning how to build a data pipeline will give you a great overview of how these pieces fit together.

The high latency in batch processing isn’t a bug; it’s a feature. By waiting to process until a large volume of data is collected, systems can optimize resource use, slash operational costs, and guarantee a complete, comprehensive analysis of the entire dataset.

This trade-off is precisely what makes batch processing perfect for jobs where completeness and accuracy trump immediacy. Think about generating monthly financial reports—you need the full month’s data to be correct. Training a machine learning model on historical customer data is another great example; the more data you have, the better. In these cases, the built-in delay isn’t just acceptable, it’s essential to getting the job done right.

Exploring Real-Time Stream Processing Architecture

While batch processing operates on a set schedule, stream processing is always on, ready to act the instant data is born. This approach completely inverts the old model of collecting data first and processing it later. Instead, it ingests and analyzes a continuous, unbounded flow of events in real-time, enabling actions in milliseconds.

This architecture is all about speed. It doesn’t treat data as a static collection to be queried later; it sees it as a live pulse of information. Naturally, this requires a completely different set of tools and a different way of thinking compared to batch systems.

Core Components for Instant Analysis

At the core of any stream processing system, you’ll find technologies built for incredibly low latency and high availability. These platforms are engineered to handle data as it flows, ensuring insights are generated without delay.

A couple of key players make this possible:

- Apache Kafka: Think of Kafka as the central nervous system. It’s a distributed event streaming platform that ingests massive volumes of data from countless sources in real-time. It acts as a durable, ordered log, making data immediately available for other systems to consume.

- Apache Flink: This is a powerful stream processing framework that runs computations on these unbounded data streams. Flink is where the magic happens—performing complex operations like aggregations, joins, and pattern detection on live data as it pours in.

To manage these endless flows, we rely on a concept called windowing. Since a data stream is technically infinite, windowing gives us a way to group events into logical chunks. For example, you can tell the system to “calculate the average transaction value over the last 60 seconds.”

Stream processing isn’t just about going faster; it’s about unlocking a new class of operations. By analyzing events as they happen, businesses can shift from reactive reporting to proactive, automated decisions that influence outcomes in real time.

This immediate feedback loop is critical for so many modern applications. A fraud detection system has to block a suspicious transaction before it’s complete, not hours later. An e-commerce site needs to adjust recommendations based on a user’s last click, not their session from yesterday.

This level of responsiveness is only possible with a solid architecture for real-time data streaming. From live monitoring dashboards to dynamic pricing models, stream processing provides the foundation for a truly event-driven business.

Latency vs. Throughput: A Tale of Two Priorities

When we talk about performance in batch vs. stream processing, it’s easy to fall into the “slow vs. fast” trap. But that’s not the whole story. The reality is much more interesting. Both approaches are built for high performance, but they optimize for completely different things: throughput and latency. Getting this trade-off right is the single most important decision you’ll make when choosing an architecture.

Batch processing is all about brute-force throughput. Its entire design philosophy revolves around gathering massive datasets—we’re talking terabytes or even petabytes—and processing them all in one go. This maximizes the efficiency of your computational resources and seriously brings down the cost-per-record.

The high latency you see in batch systems isn’t a bug; it’s a feature. It’s a deliberate design choice that shines when data completeness and deep historical analysis are more important than getting an instant answer.

Throughput in the Batch World

In batch systems, throughput is the name of the game. We measure it in terms of data volume over time, like terabytes per hour. The mission is simple: get the biggest possible chunk of data from point A to point B as efficiently as you can.

Here’s where that high-throughput model is essential:

- Nightly Data Warehousing: Imagine an e-commerce giant processing 20 terabytes of daily transaction logs. The goal isn’t to see each sale as it happens, but to get the entire day’s data processed and loaded into their analytics warehouse before the next business day starts.

- Genomic Sequencing: A research lab working on a human genome is dealing with colossal datasets that can take days to analyze. Here, throughput is the only metric that matters.

- Monthly Billing Cycles: A telecom company has to generate millions of customer bills at the end of each month. The system is built to crush this predictable, massive workload in a cost-effective way.

Latency in the Streaming World

Stream processing, on the other hand, is built for one thing: speed. It’s obsessed with minimizing latency. Here, latency is the time it takes for a single event to travel through the pipeline—from ingestion to processing to action. The goal is to get this down to mere milliseconds.

This relentless focus on speed is what makes stream processing indispensable for businesses that need to make decisions right now. Batch jobs often run on schedules measured in hours, with nightly runs of 6–24 hours being typical. In sharp contrast, a well-tuned streaming system can chew through millions of events per second with latencies well under 100 milliseconds.

Real-World Benchmarks

The difference in scale is stark. A Databricks survey found that 65% of enterprises process over 100 TB of data monthly using batch ETL. On the streaming side, platforms like Apache Kafka power systems at LinkedIn to process 2 million messages per second, enabling fraud detection with 99.99% accuracy by raising alerts within 100ms.

Netflix’s migration from batch to Apache Flink is a compelling case study. They cut personalization latency from hours to under a minute, boosting user engagement by 20% and slashing storage costs by 50% by eliminating the need to persist batch data. Flink now processes over a trillion events daily for Netflix with less than a 0.01% duplication rate.

The core trade-off is this: Batch processing is about maximizing how much data you can process. Stream processing is about minimizing how long it takes to process a single piece of that data. Your choice boils down to which of those two metrics creates more value for your business.

Think about it this way: a credit card company can’t afford to wait hours to flag a fraudulent transaction. That decision has to happen in the split second between the card swipe and the terminal’s response. Likewise, a logistics firm needs to track its delivery fleet in real time to reroute drivers around traffic, not get a summary report at day’s end. It all comes down to whether you need comprehensive historical insight or immediate operational intelligence.

When to Use Batch vs. Stream Processing

Deciding between batch and stream processing isn’t just a technical exercise; it’s a fundamental business decision. The right answer really boils down to one question: how fast do you need your insights? Your choice will shape your entire data architecture, so understanding where each model shines is crucial.

Some jobs just don’t need to happen right now. They’re better handled with a methodical, scheduled approach where having a complete dataset is far more important than speed. This is the natural home of batch processing.

Where Batch Processing Is the Optimal Choice

Batch processing is your workhorse for operations that run on a predictable clock and demand a full, historical view of the data. It’s built for chewing through massive datasets efficiently, not for knee-jerk reactions.

Think about these classic scenarios:

- Large-Scale Payroll Systems: Most companies run payroll on a fixed schedule—every two weeks or once a month. The system needs to collect all employee hours, calculate deductions, and process payments in one massive, scheduled run. There’s zero benefit to doing this in real-time; it would just be inefficient.

- Customer Billing and Invoicing: Your power company doesn’t send you a bill for every watt of electricity you use the second you use it. Instead, they collect a month’s worth of data, process it all at once, and generate a single, accurate invoice. That’s a textbook batch job.

- Comprehensive Inventory Management: A retailer needs to know exactly what’s on the shelves at the end of the day. An overnight batch job is perfect for this—it can reconcile all of the day’s sales against inventory levels to produce a definitive report for the morning.

The rule of thumb for batch processing is straightforward: if the business value comes from analyzing a complete, historical dataset on a regular schedule, batch is almost always the most reliable and cost-effective way to go.

When Stream Processing Is Indispensable

Stream processing, on the other hand, is a must-have when the value of your data has a short shelf life and you need to act on it now. This model is built for the never-ending, unbounded flow of data where insights are needed in milliseconds, not hours. Timeliness is so critical in some situations; for example, look at how a Fortune 500 detected internal fraud in 48 hours, which highlights the kind of operational demands that make real-time processing a necessity.

Here are a few places where stream processing isn’t just better—it’s the only option:

- Real-Time Fraud Detection: The moment a credit card is swiped, the transaction has to be checked for signs of fraud before it’s approved. That decision needs to happen in under a second. A batch system would be completely useless here.

- Live IoT Sensor Monitoring: Imagine a factory floor with sensors monitoring machine temperature and vibration. A stream processing system can analyze that data as it arrives, flagging anomalies to predict equipment failure before it happens and preventing costly downtime.

- Dynamic E-commerce Pricing: Online stores constantly tweak prices based on what competitors are doing, current demand, and their own inventory levels. This requires a streaming setup that can ingest all that market data and push out new prices instantly to stay competitive.

Choosing Your Modern Data Strategy

When it comes to batch vs. stream processing, the conversation isn’t really about picking a winner. It’s about building a data architecture that actually works for your business. The smartest strategies don’t force a choice; they blend both models to match specific operational needs and long-term goals. A modern data stack needs both historical depth and real-time reflexes.

To figure out the right mix, you need to ask a few honest questions:

- How fast do you need to act? What’s the absolute maximum delay you can tolerate between an event happening and your system responding? If the answer is seconds, you’re in streaming territory.

- How fast is your data coming in? Think about high-velocity sources like IoT sensors or real-time user clicks. This kind of firehose almost always demands a streaming-first approach.

- Can your team handle the complexity? Let’s be real—managing real-time systems can be a heavy lift. The operational overhead for stream processing is often higher than traditional batch jobs.

The Rise of Hybrid and Unified Architectures

Because most businesses need both speed and depth, the industry is naturally shifting toward hybrid models. These architectures give you the best of both worlds. You can use stream processing for immediate tasks like fraud detection while still relying on batch processing for deep, cost-effective historical analysis, like end-of-day financial reports.

The future of data isn’t about picking a side. It’s about building a fluid system that can handle real-time events and large-scale historical analysis, so you always have the right insight at the right time.

Thinking about how these models are applied in the real world can be eye-opening. For instance, consider the underlying data processing strategy of popular social media algorithms. Understanding these applications helps you build a scalable strategy that serves both today’s real-time demands and tomorrow’s analytical challenges.

Common Questions About Batch and Stream Processing

When you’re deciding between batch and stream processing, a few key questions always come up. Let’s break down the most common ones to help you make the right call for your data architecture.

Can I Use Both Batch and Stream Processing in the Same System?

Absolutely. In fact, many of the most effective data systems do exactly this. This hybrid model, often seen in architectures like Lambda or Kappa, gives you the best of both worlds.

Think about an e-commerce platform. They could use stream processing to power real-time fraud detection and instantly update inventory levels as sales happen. At the same time, they’d likely use batch processing overnight to run complex sales reports and analyze historical customer behavior. This way, they can react in the moment while still getting the deep, comprehensive insights needed for long-term strategy.

Is Stream Processing Always the More Expensive Option?

It can be, but it’s not a universal rule. Stream processing often requires “always-on” infrastructure to handle data as it arrives, which can lead to higher operational costs and more complex management. Batch processing, on the other hand, lets you schedule massive jobs during off-peak hours when compute resources are cheaper, making it very cost-effective for tasks that aren’t time-sensitive.

That said, modern streaming platforms are becoming much more efficient and accessible, closing the cost gap.

When you look at the total cost, you have to factor in the business value. The initial spend on streaming infrastructure might seem higher, but the return from preventing even a single major fraudulent transaction can easily justify the expense.

Which One Is Better for Machine Learning?

This is a classic “it depends” scenario because both are crucial, just at different stages of the machine learning lifecycle.

- Batch processing is the workhorse for training models. You need to feed massive historical datasets to an algorithm to teach it patterns, and batch is perfect for that.

- Stream processing comes into play for real-time inference. Once a model is trained and deployed, you use streaming data to make instant predictions, like flagging a suspicious transaction or recommending a product to a user browsing your site.

The two are really two sides of the same coin in a modern MLOps workflow.

Ready to build flexible data pipelines that support both real-time and scheduled workloads without the complexity? Streamkap provides a unified platform for real-time data movement, letting you replace brittle batch ETL with modern, event-driven architecture. Get started with Streamkap today.