
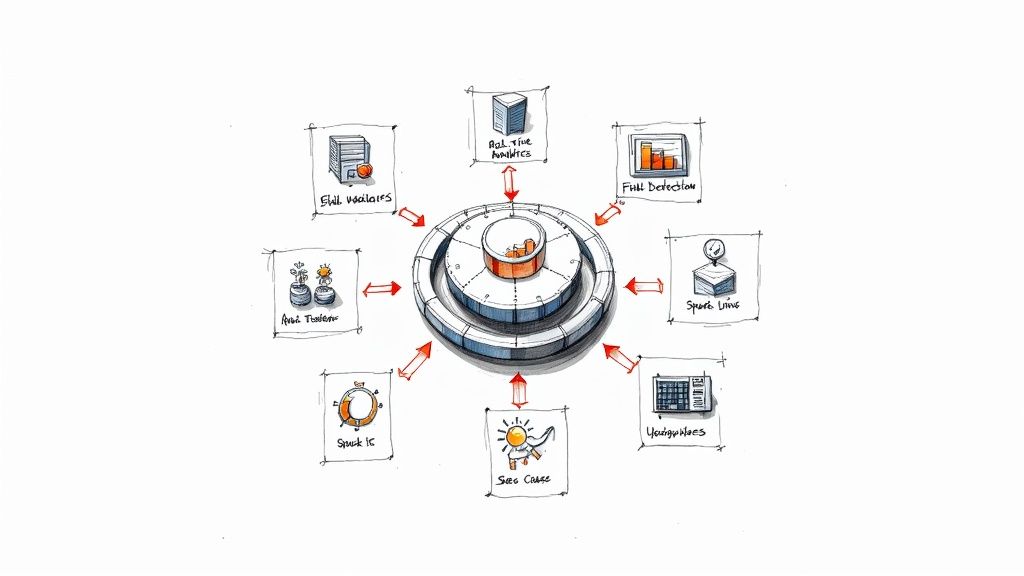

10 Powerful Real Time Analytics Use Cases for 2025

Explore 10 powerful real time analytics use cases revolutionizing industries. See practical examples, tech stacks, and how to implement them today.

In a business environment where decisions are made in minutes, not months, relying on yesterday’s data is a competitive liability. The winners are those who can act on insights the moment they emerge. This is the fundamental shift from historical batch reporting to real-time analytics: the ability to process, analyze, and act on data as it is generated, not hours or days later. This capability is no longer a niche advantage for Silicon Valley giants; it’s a critical tool for any organization aiming to optimize operations, enhance customer experiences, and mitigate risks instantly.

The core challenge has always been the complexity of building and maintaining the necessary data infrastructure. Constructing reliable, low-latency pipelines to capture and stream data from operational databases and applications often requires significant, specialized engineering effort. However, modern approaches like Change Data Capture (CDC) combined with managed streaming platforms have democratized this power, making real-time data integration accessible and efficient. This combination allows businesses to continuously synchronize data from sources like PostgreSQL, MySQL, and MongoDB directly into real-time destinations without complex custom code.

This article moves beyond theory to provide a practical, replicable blueprint for implementation. We will dissect 10 powerful real time analytics use cases, offering a comprehensive breakdown for each one. You will learn the specific business problems they solve, the required data sources, proven streaming architecture patterns, and key performance metrics to track. For each use case, we provide actionable implementation strategies, example technology stacks featuring integrations with platforms like Snowflake, BigQuery, and Databricks, and crucial considerations for cost, performance, and monitoring. This guide is designed to equip you with the strategic and tactical knowledge to build your own competitive edge with real-time data.

1. Fraud Detection in Financial Services

Real-time fraud detection is one of the most critical and high-stakes real time analytics use cases in the financial industry. It involves analyzing streams of transaction data as they happen to identify and block fraudulent activities before they cause financial loss. These systems ingest multiple data sources simultaneously, including transaction details, user location, device information, and historical spending habits, to create a holistic view of each event.

The core principle is to score the risk of each transaction in milliseconds. By comparing incoming data against established patterns and machine learning models, the system can flag anomalies, such as a purchase made in a different country from the user’s last known location. Industry leaders like Visa and Mastercard process thousands of transactions per second, relying on sophisticated models to approve legitimate purchases while stopping fraud in its tracks.
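As a rough illustration of per-transaction scoring, the sketch below combines three hand-written rules, including the "different country from the last known location" check described above. The `Transaction` and `UserProfile` types, the weights, and the thresholds are all hypothetical; a production system would typically replace the rules with trained models.

```python
from dataclasses import dataclass


@dataclass
class Transaction:
    user_id: str
    amount: float
    country: str
    device_id: str


@dataclass
class UserProfile:
    last_country: str
    avg_amount: float
    known_devices: frozenset


def risk_score(tx: Transaction, profile: UserProfile) -> float:
    """Return a score in [0, 1]. Weights are illustrative, not tuned."""
    score = 0.0
    if tx.country != profile.last_country:
        score += 0.5  # purchase from a different country than last seen
    if tx.amount > 5 * profile.avg_amount:
        score += 0.3  # amount far above the user's typical spend
    if tx.device_id not in profile.known_devices:
        score += 0.2  # device not previously associated with the account
    return min(score, 1.0)


def decide(score: float, block_at: float = 0.7, review_at: float = 0.4) -> str:
    """Map a score to an action; both thresholds are assumed values."""
    if score >= block_at:
        return "block"
    if score >= review_at:
        return "review"
    return "approve"
```

Raising `block_at` trades fewer false positives for more missed fraud, which is the balance the metrics in this section are meant to track.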

Strategic Breakdown

- Business Problem: The primary goal is to minimize financial losses from unauthorized transactions and reduce the number of “false positives” that frustrate legitimate customers. A secondary goal is to adapt quickly to new fraud patterns as they emerge.

- Data Sources: Transaction streams from payment gateways (e.g., Kafka, Kinesis), user account databases (e.g., Postgres, MySQL), device fingerprinting data, and third-party risk intelligence feeds.

- Key Metrics: Focus on fraud detection rate, false positive rate, and decision latency (under 100ms is a common goal).

Actionable Takeaways

To effectively implement real-time fraud detection, consider the following tactics:

- Use Ensemble Models: Combine multiple algorithms (e.g., decision trees, neural networks) to improve accuracy and resilience against diverse fraud tactics. No single model is perfect.

- Implement Feedback Loops: Use a Change Data Capture (CDC) tool like Streamkap to feed confirmed fraud and false positive outcomes back into the model for continuous training. This ensures the system adapts to evolving threats.

- Leverage Graph Databases: For sophisticated fraud rings, analyzing relationships between accounts, devices, and IP addresses is crucial. You can learn more about how graph databases power real-time fraud detection.

- Balance Security and Experience: Fine-tune risk thresholds to avoid aggressively blocking legitimate transactions, which can harm customer trust and lead to churn.

2. Website and Application Performance Monitoring (APM)

Application Performance Monitoring (APM) is a quintessential real time analytics use case, providing immediate visibility into the health and performance of software applications. These systems ingest and analyze high-velocity streams of telemetry data, including logs, metrics, and traces, to detect issues like slow response times, high error rates, or resource bottlenecks as they occur. This allows engineering teams to diagnose and resolve problems before they escalate into service outages that impact customer experience.

The core objective is to move from reactive troubleshooting to proactive optimization. By correlating performance data with user behavior and business outcomes in real time, organizations can pinpoint exactly how a spike in API latency affects a checkout process or how a database query impacts page load times. Industry leaders like Datadog, New Relic, and Dynatrace provide sophisticated platforms that turn terabytes of raw machine data into actionable dashboards and alerts within seconds.

Strategic Breakdown

- Business Problem: The primary goal is to minimize application downtime and performance degradation, which directly impact user satisfaction, conversion rates, and revenue. A secondary goal is to improve developer productivity by reducing the mean time to resolution (MTTR) for incidents.

- Data Sources: Application logs (e.g., from Fluentd or Logstash), system metrics (CPU, memory), distributed traces from instrumentation libraries (e.g., OpenTelemetry), and user interaction data from front-end agents.

- Key Metrics: Focus on the “Golden Signals”: latency, traffic, errors, and saturation. Apdex scores, uptime percentage, and MTTR are also critical performance indicators.
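For reference, an Apdex score can be computed from raw response times as follows. The function name and sample values are illustrative; the formula is the standard one, in which requests at or below the target threshold T count as satisfied, those up to 4T count as tolerating (at half weight), and the rest count as frustrated.

```python
def apdex(latencies_ms, threshold_ms):
    """Apdex = (satisfied + tolerating / 2) / total samples."""
    if not latencies_ms:
        raise ValueError("need at least one sample")
    satisfied = sum(1 for t in latencies_ms if t <= threshold_ms)
    tolerating = sum(
        1 for t in latencies_ms if threshold_ms < t <= 4 * threshold_ms
    )
    return (satisfied + tolerating / 2) / len(latencies_ms)


# Two satisfied, one tolerating, one frustrated request against T = 500 ms.
score = apdex([100, 200, 600, 2500], threshold_ms=500)
```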

Actionable Takeaways

To implement a powerful real-time APM strategy, focus on these tactics:

- Implement Distributed Tracing: In modern microservices architectures, a single user request can traverse dozens of services. Use distributed tracing to visualize the entire request path, identify the specific service causing a delay, and dramatically speed up root cause analysis.

- Establish Dynamic Baselines: Instead of static alert thresholds, use machine learning to establish dynamic baselines for normal performance. This helps identify true anomalies and significantly reduces alert fatigue from noisy or predictable fluctuations.

- Correlate Performance with Business KPIs: Stream performance data into a real-time analytics platform to join it with business metrics. You can then answer critical questions like, “How does a 200ms increase in API latency affect our add-to-cart rate?”

- Focus on Reducing Latency: Proactively monitor and optimize system performance to ensure a smooth user experience. You can learn how to effectively reduce latency in your systems to improve application responsiveness.
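The dynamic-baseline idea can be sketched with a rolling z-score, used here as a simple statistical stand-in for the machine learning approach mentioned above. The class name, window size, and threshold are assumptions chosen for illustration.

```python
from collections import deque
from statistics import mean, stdev


class DynamicBaseline:
    """Flag values that deviate sharply from a trailing window of samples."""

    def __init__(self, window=60, z_threshold=3.0, min_samples=10):
        self.samples = deque(maxlen=window)
        self.z_threshold = z_threshold
        self.min_samples = min_samples

    def observe(self, value):
        """Return True if value is anomalous versus the window, then record it."""
        is_anomaly = False
        if len(self.samples) >= self.min_samples:
            mu = mean(self.samples)
            sigma = stdev(self.samples)
            if sigma > 0 and abs(value - mu) / sigma > self.z_threshold:
                is_anomaly = True
        # Note: anomalous values are still recorded, so a sustained shift
        # gradually becomes the new baseline.
        self.samples.append(value)
        return is_anomaly
```

Because the baseline follows the data, a latency level that is normal at peak traffic does not page anyone, while the same level at 3 a.m. would.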

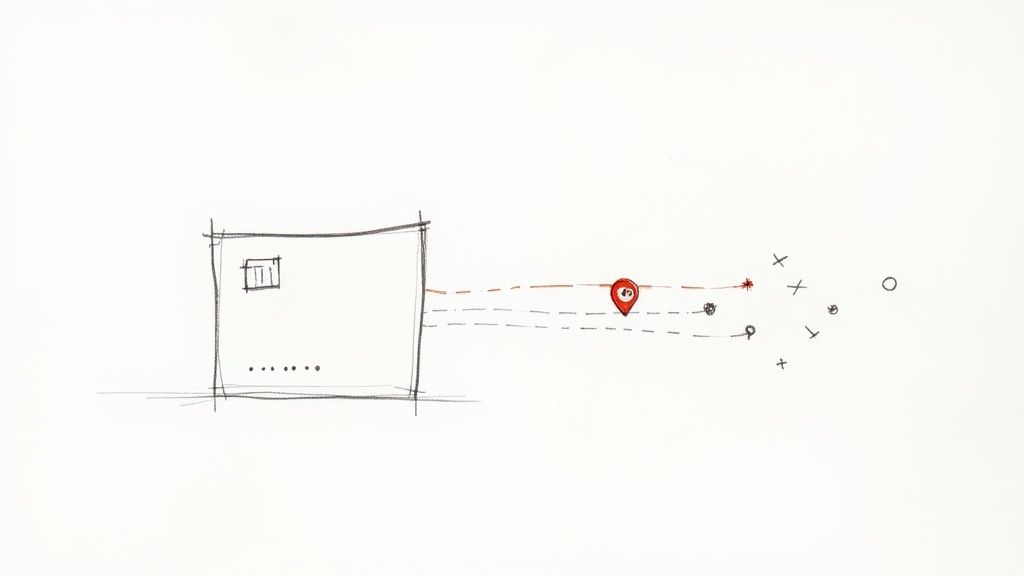

3. Real-Time Traffic and Location Analytics

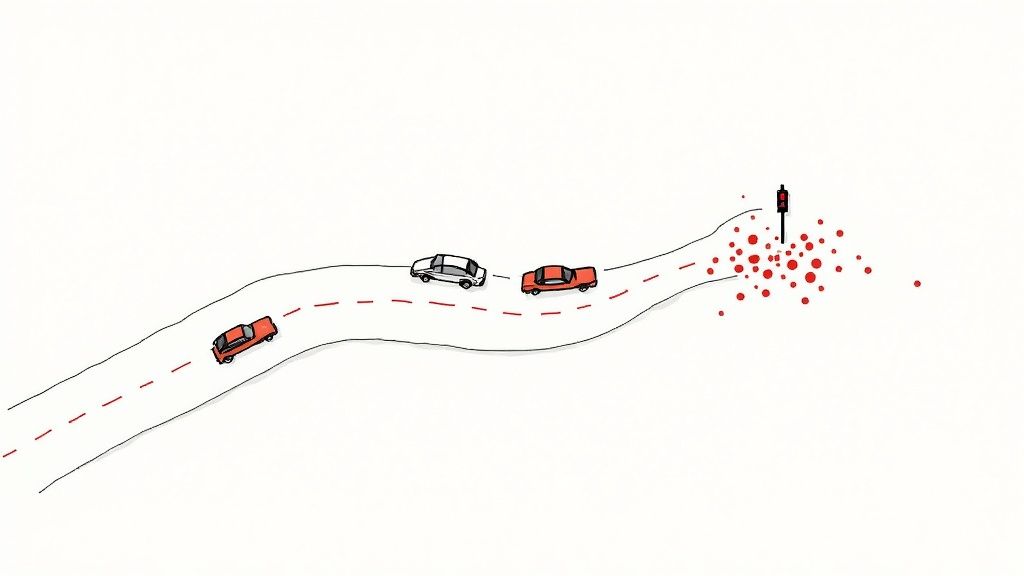

Real-time traffic and location analytics process vast streams of geospatial data from GPS devices, mobile signals, and IoT sensors to monitor vehicle movements and optimize traffic flow. This is one of the most visible real time analytics use cases, powering everything from navigation apps to smart city infrastructure. By ingesting and analyzing location data in real time, these systems can identify congestion, predict traffic patterns, and offer dynamic routing recommendations to drivers and logistics companies.

The underlying principle is to build a live, dynamic map of a transportation network. By aggregating millions of data points per second, platforms like Google Maps and Waze can visualize traffic conditions, estimate travel times with high accuracy, and automatically reroute users to avoid delays. This immediate feedback loop between data collection, analysis, and user recommendation is what makes these services indispensable for modern transit.

Strategic Breakdown

- Business Problem: The main goal is to reduce travel time, minimize fuel consumption, and improve road safety by providing accurate, up-to-the-minute traffic information. For cities, the objective is to optimize traffic light timing and manage urban congestion.

- Data Sources: GPS streams from vehicles and mobile apps (e.g., via MQTT or WebSockets), IoT sensor data from roadside units, municipal traffic camera feeds, and user-reported incident data.

- Key Metrics: Focus on route calculation latency, ETA (Estimated Time of Arrival) accuracy, and data freshness (how quickly new traffic conditions are reflected).

Actionable Takeaways

To implement a powerful real-time location analytics system, focus on these strategies:

- Aggregate Diverse Data: Combine data from multiple sources like vehicle GPS, road sensors, and weather feeds to create a more accurate and resilient traffic model. No single data stream tells the whole story.

- Use Geospatial Indexing: Employ specialized databases or indexes (like Quadtrees or R-trees) designed for high-speed spatial queries to quickly find nearby vehicles or points of interest.

- Build Robust Pipelines: Ensure your infrastructure can handle high-volume, high-velocity data streams without bottlenecks. Explore how to build resilient streaming data pipelines to support these demanding workloads.

- Implement Edge Computing: For use cases like connected vehicle communication, process data closer to the source (e.g., in roadside units) to reduce latency and provide sub-second incident alerts.
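To make the geospatial indexing point concrete, here is a minimal sketch that uses a uniform grid rather than a Quadtree or R-tree; the grid is a simpler technique with the same goal of narrowing a "what is nearby" query to a few buckets. `GridIndex` and its parameters are hypothetical, and the sketch ignores longitude wrap-around and the fact that cells narrow toward the poles.

```python
import math
from collections import defaultdict


class GridIndex:
    """Bucket moving objects into fixed-size latitude/longitude cells."""

    def __init__(self, cell_deg=0.01):
        self.cell_deg = cell_deg
        self.cells = defaultdict(dict)  # (row, col) -> {vehicle_id: (lat, lon)}
        self.where = {}                 # vehicle_id -> current cell key

    def _key(self, lat, lon):
        return (math.floor(lat / self.cell_deg), math.floor(lon / self.cell_deg))

    def update(self, vehicle_id, lat, lon):
        """Record a new position, moving the vehicle between cells if needed."""
        old = self.where.get(vehicle_id)
        new = self._key(lat, lon)
        if old is not None and old != new:
            del self.cells[old][vehicle_id]
        self.cells[new][vehicle_id] = (lat, lon)
        self.where[vehicle_id] = new

    def nearby(self, lat, lon):
        """Return vehicle ids in the 3x3 block of cells around the point."""
        row, col = self._key(lat, lon)
        found = []
        for d_row in (-1, 0, 1):
            for d_col in (-1, 0, 1):
                found.extend(self.cells.get((row + d_row, col + d_col), {}))
        return sorted(found)
```

Each position update touches at most two cells, so the index keeps pace with a high-rate GPS stream; a tree-based index becomes preferable when object density varies widely across the map.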

4. Real-Time Business Intelligence and Sales Dashboards

Moving beyond historical reporting, real-time business intelligence provides a live, dynamic view of business operations. This is one of the most transformative real time analytics use cases for modern enterprises, enabling leaders to monitor key metrics like sales performance, inventory levels, and customer behavior as they change. Instead of making decisions based on last week’s or yesterday’s data, teams can react to current conditions immediately.

These systems stream data from transactional databases and operational systems directly into analytical platforms. This continuous flow of information powers live dashboards that visualize business health, helping companies like major retailers track sales per minute on Black Friday or logistics firms monitor supply chain movements in transit. The goal is to close the gap between an event happening and the insight being available for decision-making.
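A "sales per minute" tile like the one described above is, at its core, a tumbling-window aggregation. The sketch below shows the idea over an in-memory list; the event shape `(epoch_seconds, amount)` is an assumption, and a real deployment would run the equivalent logic in a stream processor or as a warehouse query.

```python
from collections import defaultdict


def sales_per_minute(events):
    """Sum amounts into one-minute tumbling windows.

    events: iterable of (epoch_seconds, amount) pairs.
    Returns {window_start_epoch_seconds: total_amount}.
    """
    totals = defaultdict(float)
    for ts, amount in events:
        window_start = int(ts) - int(ts) % 60  # floor to the minute boundary
        totals[window_start] += amount
    return dict(totals)


# Three windows: [0, 60), [60, 120), [120, 180).
totals = sales_per_minute([(0, 10.0), (59, 5.0), (60, 2.5), (125, 1.0)])
```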

Strategic Breakdown

- Business Problem: The core challenge is overcoming the “data lag” inherent in traditional batch reporting, which can lead to missed opportunities or delayed responses to problems. The objective is to provide an up-to-the-minute operational view for faster, more accurate decision-making.

- Data Sources: Operational databases (e.g., Postgres, MySQL, SQL Server), CRM systems (e.g., Salesforce), ERP systems (e.g., SAP), and web analytics streams (e.g., Segment, Snowplow).

- Key Metrics: Focus on data freshness (how current the data is, aiming for seconds or minutes), query latency for dashboards, and dashboard adoption rate by business users.

Actionable Takeaways

To build effective real-time dashboards, focus on the following strategies:

- Design for Decision-Making: Tailor each dashboard to a specific role or function. A sales leader needs to see live deal closures, while an operations manager needs to monitor inventory levels. Avoid creating one-size-fits-all visualizations.

- Use CDC for Low-Latency Replication: Implement Change Data Capture (CDC) with a tool like Streamkap to move data from operational databases to your data warehouse (e.g., Snowflake, BigQuery) with low latency and minimal performance impact on source systems.

- Enable Drill-Downs: A top-level KPI is useful, but its value multiplies when users can click into it to explore the underlying data. This contextual analysis is key for understanding the “why” behind the numbers.

- Implement Smart Alerting: Don’t rely on users constantly watching a dashboard. Set up automated push notifications or alerts for when critical metrics cross predefined thresholds, allowing teams to act proactively.

5. Real-Time Customer Experience Monitoring

Understanding and improving the customer journey is a top priority for modern businesses, making this one of the most impactful real time analytics use cases. This practice involves continuously tracking customer interactions across all touchpoints like websites, mobile apps, social media, and support chats. By analyzing this data as it is generated, companies can gauge satisfaction, detect friction points, and understand sentiment in real time.

The goal is to move from reactive to proactive customer service. Instead of waiting for a negative survey, a system can flag a user struggling with a checkout process or detect rising frustration in a chat conversation. Companies like Salesforce and Zendesk use this to empower support teams to intervene immediately, turning a potentially negative experience into a positive one and significantly boosting loyalty.

Strategic Breakdown

- Business Problem: The objective is to increase customer satisfaction and retention by identifying and resolving issues as they happen. A key secondary goal is to gather live feedback to improve products and services.

- Data Sources: Clickstream data from web/mobile apps (e.g., Segment, Snowplow), CRM interaction logs (e.g., Salesforce), support chat transcripts (e.g., Intercom), and social media mentions (e.g., Brandwatch).

- Key Metrics: Focus on Customer Satisfaction (CSAT) scores, Net Promoter Score (NPS), Customer Effort Score (CES), and time to resolution for identified issues.

Actionable Takeaways

To build an effective real-time customer monitoring system, focus on these strategies:

- Combine Behavioral and Sentiment Data: Track what customers do (e.g., rage clicks) and what they say (e.g., chat sentiment) for a complete picture. Use natural language processing (NLP) to analyze unstructured text from support tickets and social media.

- Create Automated Alerting Workflows: Set up triggers that notify the right team when a high-value customer shows signs of distress or when a widespread issue is detected. This enables immediate, targeted intervention.

- Unify Data for an Omnichannel View: Use a real-time pipeline with a tool like Streamkap to consolidate data from disparate sources into a single customer view in your data warehouse. This prevents siloed insights and ensures a cohesive understanding of the customer journey.

- Empower Teams with Actionable Dashboards: Provide customer service and marketing teams with live dashboards (e.g., in Tableau, Looker) that visualize customer health scores and highlight urgent issues, enabling them to act on insights effectively.
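As one example of a behavioral signal, rage clicks can be detected from a clickstream with a small sliding window per session and element. There is no single standard definition; the "three clicks within two seconds" rule and the `RageClickDetector` name below are assumptions for illustration.

```python
from collections import defaultdict, deque


class RageClickDetector:
    """Flag repeated clicks on the same element within a short time span."""

    def __init__(self, min_clicks=3, within_seconds=2.0):
        self.min_clicks = min_clicks
        self.within_seconds = within_seconds
        self.recent = defaultdict(deque)  # (session_id, element_id) -> timestamps

    def on_click(self, session_id, element_id, ts):
        """Record a click; return True if it completes a rage-click burst.

        Assumes timestamps arrive in non-decreasing order per session.
        """
        window = self.recent[(session_id, element_id)]
        window.append(ts)
        # Drop clicks that fell out of the time window.
        while window and ts - window[0] > self.within_seconds:
            window.popleft()
        return len(window) >= self.min_clicks
```

A `True` result could feed the alerting workflow described above, for example by opening a proactive chat for the affected session.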

6. Real-Time Supply Chain and IoT Tracking

In modern logistics, real-time supply chain tracking is one of the most transformative real time analytics use cases. This approach uses data streams from sensors, GPS, RFID tags, and other IoT devices to monitor assets, shipments, and inventory as they move. By processing this location and condition data instantly, companies gain unprecedented visibility, allowing them to optimize routes, manage inventory, and prevent costly disruptions before they escalate.

These systems are the backbone of global logistics, enabling companies like Amazon and Maersk to orchestrate complex global movements with precision. For instance, cold chain monitoring for pharmaceuticals uses real-time temperature data to ensure vaccine integrity, while retail giants like Walmart use it to maintain optimal stock levels and prevent out-of-stock situations. The ability to react in seconds to a delayed shipment or a temperature anomaly is what separates market leaders from the competition.

Strategic Breakdown

- Business Problem: The core challenge is to overcome the lack of visibility in complex supply chains, which leads to inefficiencies, delays, spoilage, and theft. The goal is to create a transparent, predictable, and resilient logistics network.

- Data Sources: IoT sensor data (GPS, temperature, humidity) from devices, RFID tag readers, vehicle telematics systems (e.g., via MQTT or AMQP), and shipping manifests from ERP systems (e.g., SAP, Oracle).

- Key Metrics: Focus on on-time delivery rate, order accuracy, inventory turnover, and asset utilization. Latency targets for critical alerts (e.g., temperature deviation) are often in the low seconds.

Actionable Takeaways

To build a robust real-time tracking system, focus on these strategies:

- Leverage Edge Computing: Process telemetry data on or near the IoT devices themselves to reduce latency and data transmission costs. This is crucial for making immediate decisions, like rerouting a truck based on traffic data.

- Establish Data Quality Checks: Implement validation rules at the point of ingestion to filter out erroneous sensor readings. Inaccurate data can lead to false alarms and poor operational decisions.

- Build a Unified Data Stream: Use a CDC tool like Streamkap to integrate IoT data streams with transactional data from your ERP or warehouse management systems. This creates a single, real-time view of inventory both in transit and in storage.

- Understand Logistical Nuances: The operational complexity of these systems is immense. To gain a deeper understanding of how these complex systems operate, explore guides on essential aspects like freight forwarding services.
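The ingestion-time quality checks and the cold chain alerting mentioned in this section can be sketched as two small functions. The reading format (`device_id`, `temp_c`), the plausibility bounds, and the default 2 to 8 °C band are assumptions for illustration; actual limits depend on the product being shipped.

```python
import math


def validate_reading(reading, min_c=-90.0, max_c=60.0):
    """Return (ok, reason); reject readings that cannot be real measurements."""
    if not reading.get("device_id"):
        return (False, "missing device_id")
    temp = reading.get("temp_c")
    if isinstance(temp, bool) or not isinstance(temp, (int, float)):
        return (False, "temp_c missing or non-numeric")
    if math.isnan(temp) or not (min_c <= temp <= max_c):
        return (False, "temp_c outside plausible range")
    return (True, "ok")


def cold_chain_breach(reading, low_c=2.0, high_c=8.0):
    """True if a validated reading falls outside the allowed storage band."""
    return not (low_c <= reading["temp_c"] <= high_c)
```

Running validation first means a faulty sensor reporting 999 °C is dropped as bad data instead of raising a spoilage alarm.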

7. Real-Time Cybersecurity Threat Detection

In the high-stakes world of cybersecurity, real-time threat detection has become a non-negotiable component of modern defense strategies. This is one of the most critical real time analytics use cases, involving the continuous analysis of security information and event management (SIEM) data. Systems ingest and correlate logs, network traffic, and endpoint data as it is generated to identify intrusions, malware, and anomalous behavior before significant damage occurs.

The core objective is to reduce the “dwell time” of an attacker, which is the period between initial compromise and detection. By processing terabytes of event data in milliseconds, security teams can spot the faint signals of an attack, such as unusual login patterns or data exfiltration attempts. Companies like Palo Alto Networks and Microsoft use these systems to correlate billions of events from diverse sources, enabling security operations centers (SOCs) to respond to threats in minutes rather than days or weeks.

Strategic Breakdown

- Business Problem: The primary goal is to detect and respond to security incidents before they escalate into data breaches, financial loss, or reputational damage. It’s also crucial to minimize analyst fatigue by reducing false positives and automating incident triage.

- Data Sources: Log files from servers and applications (e.g., syslog, Windows Events), network flow data (e.g., NetFlow, IPFIX), endpoint detection and response (EDR) agent data, and external threat intelligence feeds.

- Key Metrics: Focus on Mean Time to Detect (MTTD), Mean Time to Respond (MTTR), and the false positive rate. Reducing MTTD is the ultimate measure of success for real-time analytics in this domain.

Actionable Takeaways

To build a robust real-time cybersecurity detection capability, focus on these tactics:

- Establish Behavioral Baselines: Use machine learning models to understand normal patterns of activity for users and systems. This makes it far easier to spot deviations that could indicate a compromise.

- Correlate Across Silos: True security insights come from combining data. An unusual login (authentication log) paired with abnormal network traffic (firewall log) from the same source is a much stronger signal than either event alone.

- Automate Tier-1 Response: For well-understood, high-confidence alerts (e.g., a known malicious IP), automate responses like blocking the IP address. This frees up human analysts to investigate more complex, nuanced threats.

- Enrich Data with Threat Intelligence: Use a Change Data Capture (CDC) pipeline with a tool like Streamkap to continuously enrich incoming security events with data from threat intelligence feeds. This adds crucial context, helping to validate potential threats in real time.
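The "correlate across silos" tactic amounts to a time-bounded join on a shared key. A minimal sketch, assuming both logs have already been filtered down to suspicious events and normalized to dictionaries with hypothetical `ts`, `src_ip`, and `user` fields:

```python
from collections import defaultdict


def correlate(auth_events, firewall_events, window_seconds=300):
    """Pair unusual logins with firewall anomalies from the same source IP
    that occurred within window_seconds of each other."""
    by_ip = defaultdict(list)
    for fw in firewall_events:
        by_ip[fw["src_ip"]].append(fw)

    incidents = []
    for auth in auth_events:
        for fw in by_ip.get(auth["src_ip"], []):
            if abs(fw["ts"] - auth["ts"]) <= window_seconds:
                incidents.append({
                    "src_ip": auth["src_ip"],
                    "user": auth["user"],
                    "auth_ts": auth["ts"],
                    "firewall_ts": fw["ts"],
                })
    return incidents
```

In a streaming engine the same logic would be expressed as a windowed join, with state expiring once events age past the window.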

8. Real-Time Healthcare Patient Monitoring

In healthcare, real-time patient monitoring represents one of the most impactful real time analytics use cases, transforming reactive care into proactive intervention. This approach involves the continuous analysis of data streams from medical devices, wearables, and electronic health records to detect subtle, critical changes in a patient’s condition. By processing vital signs like heart rate, blood pressure, and oxygen saturation in milliseconds, these systems can predict adverse events like sepsis or cardiac arrest before they become life-threatening.

The primary goal is to provide clinical teams with immediate, actionable alerts that enable faster and more effective treatment. Leading hospital systems and telehealth platforms like Teladoc use these analytics to monitor patients both in ICUs and remotely at home. This constant vigilance improves patient outcomes, reduces hospital readmissions, and helps manage chronic conditions more effectively, marking a significant shift in modern medicine.

Strategic Breakdown

- Business Problem: The objective is to improve patient safety by detecting clinical deterioration early, reduce the workload on healthcare staff through intelligent alerting, and enable scalable remote patient monitoring programs.

- Data Sources: High-frequency data streams from bedside monitors (e.g., ECG, SpO2), IoT medical devices, wearables, and real-time updates from electronic health records (EHRs).

- Key Metrics: Critical metrics include alert latency (time from event to notification), predictive accuracy for adverse events, and alert fatigue rate (minimizing non-actionable alarms).

Actionable Takeaways

To build a robust real-time patient monitoring system, focus on these strategies:

- Personalize Alert Thresholds: Use machine learning models to create dynamic, patient-specific alert thresholds based on their baseline vitals and medical history, reducing false alarms.

- Ensure Data Integrity and Compliance: Support HIPAA compliance by encrypting data in transit and at rest, alongside access controls and audit logging. Use Change Data Capture (CDC) to reliably stream sensitive updates from databases to the analytics engine without direct queries.

- Integrate with Clinical Workflows: For efficient and accurate patient data management, real-time analytics can be integrated with advanced patient charting software. This ensures alerts are delivered directly within the systems clinicians already use, preventing context switching.

- Build Redundant Alerting Systems: For life-critical monitoring, implement failover mechanisms and redundant data pathways to ensure alerts are never missed due to a single point of failure in the tech stack.
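One simple way to combine patient-specific thresholds with lower alert fatigue is to alert only when a vital sign stays outside the patient's own band for several consecutive readings. The sketch below uses a fixed baseline and tolerance in place of a learned model; the class name and all numeric values are illustrative assumptions, not clinical thresholds.

```python
class VitalMonitor:
    """Alert when a vital sign stays outside a per-patient band."""

    def __init__(self, baseline, tolerance, consecutive=3):
        self.baseline = baseline        # this patient's typical value
        self.tolerance = tolerance      # allowed deviation from the baseline
        self.consecutive = consecutive  # readings required before alerting
        self.breaches = 0

    def observe(self, value):
        """Return True once the value has been out of band for
        `consecutive` readings in a row; a normal reading resets the count."""
        if abs(value - self.baseline) > self.tolerance:
            self.breaches += 1
        else:
            self.breaches = 0
        return self.breaches >= self.consecutive
```

A single spike, such as a sensor briefly losing contact, resets without paging anyone, while a sustained deviation still triggers within a few readings.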

9. Real-Time Marketing and Personalization

Delivering personalized customer experiences at the right moment is a cornerstone of modern digital strategy, making this one of the most impactful real time analytics use cases for B2C companies. This approach involves analyzing a continuous stream of user interactions, such as clicks, views, and searches, to dynamically tailor content, offers, and recommendations. The goal is to move beyond static, segment-based marketing to individual-level personalization that responds instantly to user behavior.

The core principle is to capture user intent in real time and act on it immediately. When a user browses a specific product category on an e-commerce site, a real-time system can instantly update the homepage banner, trigger a relevant push notification, or populate recommendation carousels with similar items. Industry giants like Netflix and Amazon have built their empires on this capability, using sophisticated algorithms to suggest content and products that feel uniquely curated for each user, dramatically increasing engagement and sales.

Strategic Breakdown

- Business Problem: The primary challenge is to increase key conversion metrics like click-through rates, add-to-cart rates, and purchase value by making every interaction relevant. A secondary goal is to improve customer loyalty and lifetime value by creating a more engaging and responsive user experience.

- Data Sources: Clickstream data from web and mobile apps (e.g., Kafka, Segment), customer profiles from a CRM (e.g., Salesforce), product catalog databases (e.g., MongoDB), and contextual data like geolocation and device type.

- Key Metrics: Focus on click-through rate (CTR), conversion rate, session duration, and revenue per user. Latency for recommendation generation should be low, typically under 200ms, to avoid disrupting the user experience.

Actionable Takeaways

To build an effective real-time personalization engine, focus on these strategies:

- Combine Behavioral and Contextual Data: Don’t just rely on a user’s past purchase history. Incorporate real-time contextual signals like their current location, time of day, and device to deliver more relevant recommendations.

- Create a Real-Time Customer 360 View: Use a Change Data Capture (CDC) tool like Streamkap to unify data from operational databases (e.g., Postgres, MySQL) and streaming event sources into a central analytics warehouse. This provides a complete, continuously updated view of the customer to power personalization models.

- Implement Continuous A/B Testing: Personalization is not a set-it-and-forget-it task. Continuously test different recommendation algorithms, UI placements, and messaging strategies to optimize for key business metrics.

- Balance Personalization and Privacy: Be transparent with users about how their data is being used and provide them with clear controls. Overly aggressive or “creepy” personalization can erode trust and lead to customer churn.
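To illustrate capturing intent from in-session behavior, the sketch below keeps a per-session affinity score for each product category and decays older views exponentially, so recent browsing dominates. `SessionAffinity` and the five-minute half-life are hypothetical; real recommendation engines use far richer models.

```python
import math
from collections import defaultdict


class SessionAffinity:
    """Track which categories a session is currently interested in."""

    def __init__(self, half_life_seconds=300.0):
        self.decay_rate = math.log(2) / half_life_seconds
        self.scores = defaultdict(float)
        self.last_ts = None

    def on_view(self, category, ts):
        """Decay existing scores to time ts, then credit the viewed category."""
        if self.last_ts is not None:
            factor = math.exp(-self.decay_rate * (ts - self.last_ts))
            for key in self.scores:
                self.scores[key] *= factor
        self.last_ts = ts
        self.scores[category] += 1.0

    def top(self, n=3):
        """Return up to n categories, strongest current interest first."""
        ranked = sorted(self.scores.items(), key=lambda kv: kv[1], reverse=True)
        return [category for category, _ in ranked[:n]]
```

The output of `top()` could drive the homepage banner or recommendation carousel described above, and it updates on every event rather than on a nightly batch.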

10. Real-Time IoT and Smart Building Management

Real-time IoT analytics for smart buildings is a transformative use case that leverages data from sensors to optimize facility operations. This involves continuously collecting and analyzing data from devices monitoring everything from HVAC and lighting to occupancy and security. By processing this information as it streams in, systems can make automated adjustments that improve energy efficiency, enhance occupant comfort, and bolster security.

These systems are a core application of real-time analytics in the physical world. For instance, sensors might detect that a meeting room is empty and automatically dim the lights and adjust the thermostat, saving energy without human intervention. Corporate real estate leaders and facilities teams use these insights to manage resources proactively. Companies like Siemens with its Desigo platform and Johnson Controls have pioneered comprehensive building automation systems that rely on this immediate data processing.
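The empty-meeting-room example can be sketched as a small state machine that consumes motion sensor readings and emits a command only when the room's state changes. The `RoomController` name, the ten-minute vacancy timeout, and the command fields are assumptions for illustration.

```python
class RoomController:
    """Switch a room between occupied and vacant modes from motion readings."""

    def __init__(self, vacancy_timeout=600):
        self.vacancy_timeout = vacancy_timeout  # seconds without motion
        self.last_motion_ts = None
        self.state = "vacant"

    def on_event(self, ts, motion):
        """Process one reading; return a command dict on a state change,
        otherwise None so downstream systems are not flooded."""
        if motion:
            self.last_motion_ts = ts
        idle = (
            self.last_motion_ts is None
            or ts - self.last_motion_ts >= self.vacancy_timeout
        )
        new_state = "vacant" if idle else "occupied"
        if new_state == self.state:
            return None
        self.state = new_state
        if new_state == "occupied":
            return {"lights_pct": 100, "hvac_mode": "comfort"}
        return {"lights_pct": 10, "hvac_mode": "setback"}
```

The timeout prevents the lights from dimming the moment someone sits still, at the cost of a few minutes of wasted energy after the room empties.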

Strategic Breakdown

- Business Problem: The primary objectives are to reduce operational costs, primarily energy consumption, and improve the building environment for occupants. A secondary goal is to enable predictive maintenance by identifying equipment anomalies before they lead to failure.

- Data Sources: IoT sensor streams (e.g., MQTT, CoAP) for temperature, light, motion, and air quality; building management system (BMS) logs; access control systems; and external data like weather forecasts.

- Key Metrics: Focus on Energy Usage Intensity (EUI), occupancy rates, equipment uptime, and occupant comfort scores. Decision latency for automated adjustments (e.g., lighting) should be in the sub-second to few-seconds range.

Actionable Takeaways

To effectively implement real-time building management, consider the following tactics:

- Start with High-Impact Areas: Prioritize analytics for HVAC and lighting systems, as they typically account for the largest portion of a commercial building’s energy consumption and offer the quickest return on investment.

- Predictive Occupancy Modeling: Use machine learning models to analyze historical access and motion data to predict future occupancy patterns. This allows HVAC systems to pre-cool or pre-heat spaces just in time, rather than running on a fixed schedule.

- Integrate Diverse Data Streams: Use a real-time data pipeline to combine IoT sensor data with operational data from your existing BMS. Capturing changes from systems like access logs with a CDC tool like Streamkap can enrich your analytics for more accurate decision-making.

- Choose Open Standards: Opt for systems and sensors that use open communication protocols. This prevents vendor lock-in and ensures you can integrate new technologies and data sources as your smart building strategy evolves.

10 Real-Time Analytics Use Cases Compared

| Use case | Implementation complexity | Resource requirements | Expected outcomes | Ideal use cases | Key advantages |
| --- | --- | --- | --- | --- | --- |
| Fraud Detection in Financial Services | High: real-time models + rule engines | High compute, low-latency integration, ML data | Immediate fraud prevention, reduced chargebacks | Payment processors, banks, card networks | Prevents losses, regulatory support, customer trust |
| Website and Application Performance Monitoring (APM) | Medium–High: distributed tracing, instrumentation | High data ingestion, monitoring agents, observability tools | Faster detection and resolution of performance issues | SaaS, web apps, microservices environments | Improves uptime, MTTD reduction, capacity planning |
| Real-Time Traffic and Location Analytics | Medium: geospatial processing, routing logic | GPS feeds, IoT sensors, map data, edge compute | Optimized routing, reduced congestion, faster response | Navigation apps, cities, logistics providers | Cuts commute time, improves emergency response |
| Real-Time Business Intelligence and Sales Dashboards | Medium: streaming ETL and dashboarding | Streaming pipelines, BI tools, governed data | Live KPIs, faster business decisions, trend spotting | Sales ops, exec dashboards, retail analytics | Enables rapid decisions, sales forecasting, transparency |
| Real-Time Customer Experience Monitoring | Medium: multi-channel integration, NLP | Omnichannel data, sentiment models, storage | Immediate issue detection, improved retention | Support teams, e-commerce, hospitality | Boosts satisfaction, proactive support, churn reduction |
| Real-Time Supply Chain and IoT Tracking | High: hardware + systems integration | IoT devices, RFID/GPS, robust networking | Visibility into shipments, reduced losses, better cadence | Logistics, manufacturing, cold chain management | Minimizes theft, condition monitoring, faster recalls |
| Real-Time Cybersecurity Threat Detection | High: SIEM, correlation, automation | Massive log volumes, threat feeds, security analysts | Rapid detection/response, reduced dwell time | Enterprise networks, SOCs, cloud environments | Detects intrusions, supports forensics, compliance |
| Real-Time Healthcare Patient Monitoring | High: regulated, clinical integration | Medical devices, secure networks, EHR integration | Early intervention, reduced adverse events | Hospitals, ICUs, remote patient monitoring | Improves outcomes, enables remote care, alerting |
| Real-Time Marketing and Personalization | Medium: real-time segmentation + delivery | Behavioral data, recommendation engines, privacy controls | Higher conversions, tailored customer journeys | E-commerce, streaming services, ad platforms | Increases engagement, optimizes spend, personalization |
| Real-Time IoT and Smart Building Management | Medium–High: sensor networks + automation | Sensors, BMS integration, edge/cloud compute | Energy savings, comfort optimization, predictive maintenance | Commercial buildings, data centers, campuses | Cuts energy costs, improves comfort, predictive upkeep |

From Data Streams to Business Value: Your Next Steps

We have walked through ten powerful real time analytics use cases, spanning industries from finance and e-commerce to healthcare and logistics. Each example, from detecting fraudulent transactions in milliseconds to personalizing customer marketing on the fly, shows the same shift: decisions move from after-the-fact reporting to the moment an event occurs. For workloads like these, waiting hours or days for batch processing to deliver insights is no longer good enough. The competitive edge belongs to those who can act on data the moment it is created.

The core lesson from these diverse applications is not just the “what” but the “how.” A clear, repeatable architectural pattern has emerged as the gold standard for building robust real-time systems. This pattern forms the backbone of modern data strategy and is the key to unlocking the value we’ve discussed.

The Unifying Architectural Blueprint

Across all the use cases, from IoT sensor monitoring to real-time sales dashboards, a consistent three-stage blueprint for success appears:

- Capture at the Source: The journey begins with capturing data changes from operational systems like PostgreSQL, MySQL, or MongoDB as they are committed. Change Data Capture (CDC) has become the de facto method for this: log-based CDC reads the database's own transaction log, so it provides a low-impact, high-fidelity stream of events without requiring invasive application-level changes.

- Process in Motion: Raw data streams are then processed, enriched, and transformed in real time using stream processing engines like Apache Flink or Kafka Streams. This is where business logic is applied, such as identifying fraudulent patterns, aggregating metrics for a dashboard, or triggering alerts.

- Deliver for Impact: Finally, the processed, analysis-ready data is delivered to a destination system where it can be acted upon. This could be a modern data warehouse like Snowflake or BigQuery for immediate dashboarding, a data lakehouse like Databricks for complex analytics, or even another operational system to trigger an automated response.

This Capture-Process-Deliver model is the engine that drives every one of the real time analytics use cases detailed in this article. Its power lies in its flexibility and scalability, allowing organizations to start with one use case and expand their real-time capabilities over time.
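To make the three stages concrete, here is a minimal, in-memory sketch in Python. It is an illustration under stated assumptions, not how any particular platform implements the pattern: the `ChangeEvent` envelope, the `capture`, `process`, and `deliver` functions, the sample orders, and the dictionary standing in for a warehouse table are all hypothetical. The processing step computes net revenue change per region in one-minute tumbling windows over a finite replay; a real stream processor would emit each window incrementally as events arrive.

```python
from collections import defaultdict
from dataclasses import dataclass
from typing import Dict, Iterable, Iterator, Optional, Tuple


@dataclass(frozen=True)
class ChangeEvent:
    """Hypothetical CDC envelope: op is 'c' (insert), 'u' (update) or 'd' (delete)."""
    op: str
    ts_ms: int                # commit time at the source, in milliseconds
    before: Optional[dict]    # row image before the change (None for inserts)
    after: Optional[dict]     # row image after the change (None for deletes)


def capture() -> Iterator[ChangeEvent]:
    """Stage 1 stand-in: replay a fixed list instead of reading a database log."""
    yield ChangeEvent("c", 1_000, None, {"order_id": 1, "region": "EU", "amount": 40.0})
    yield ChangeEvent("c", 2_500, None, {"order_id": 2, "region": "US", "amount": 15.0})
    yield ChangeEvent("u", 4_000,
                      {"order_id": 1, "region": "EU", "amount": 40.0},
                      {"order_id": 1, "region": "EU", "amount": 55.0})
    yield ChangeEvent("c", 61_000, None, {"order_id": 3, "region": "EU", "amount": 20.0})
    yield ChangeEvent("d", 62_000, {"order_id": 2, "region": "US", "amount": 15.0}, None)


def process(events: Iterable[ChangeEvent],
            window_ms: int = 60_000) -> Dict[Tuple[int, str], float]:
    """Stage 2: net revenue change per (window start, region)."""
    totals: Dict[Tuple[int, str], float] = defaultdict(float)
    for ev in events:
        window_start = ev.ts_ms - ev.ts_ms % window_ms
        # Retract the old row image, then add the new one, so updates and
        # deletes adjust the total instead of double counting.
        if ev.before is not None:
            totals[(window_start, ev.before["region"])] -= ev.before["amount"]
        if ev.after is not None:
            totals[(window_start, ev.after["region"])] += ev.after["amount"]
    return dict(totals)


def deliver(aggregates: Dict[Tuple[int, str], float], sink: dict) -> None:
    """Stage 3 stand-in: upsert rows into a dict keyed like a table's primary key."""
    for (window_start, region), net in sorted(aggregates.items()):
        sink[(window_start, region)] = {
            "window_start_ms": window_start,
            "region": region,
            "net_revenue": net,
        }


warehouse: dict = {}  # hypothetical destination table
deliver(process(capture()), warehouse)
for row in warehouse.values():
    print(row)
```

In this replay, the update to order 1 raises the EU total for the first minute from 40.0 to 55.0, and the delete of order 2 appears as a change of -15.0 for the US in the second minute. Handling the `before` and `after` row images separately is what lets a downstream aggregate stay correct when source rows are changed or removed, not just inserted.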

Overcoming the Implementation Barrier

Historically, the primary obstacle to adopting this powerful architecture has been operational complexity. Building, managing, and scaling the underlying infrastructure, particularly distributed systems like Kafka and Flink, requires a specialized skill set and significant engineering overhead. This burden often diverts valuable resources from the actual goal: building applications that create business value.

This is precisely the gap the modern data stack has evolved to close. The complexity of the streaming infrastructure can now be abstracted away. Solutions like Streamkap are designed to handle the heavy lifting of CDC, data transformation, and reliable delivery, making real-time data pipelines accessible to teams without dedicated streaming engineers. Instead of spending months building and debugging infrastructure, data teams can focus on defining business logic and delivering insights. This shift from managing infrastructure to delivering value is the single most important catalyst for making real-time analytics accessible to a wider range of organizations.

Strategic Takeaway: The goal is not to become an expert in managing Kafka clusters. The goal is to leverage real-time data to solve business problems. By abstracting the infrastructure layer, you free your team to focus on high-impact activities that directly contribute to revenue, efficiency, and customer satisfaction.

Your Path Forward

The journey to real-time analytics is an iterative one. You don’t need to boil the ocean. Start by identifying the single most pressing business problem in your organization that could be solved with fresher data.

- Is it reducing credit card fraud?

- Is it understanding customer churn before it happens?

- Is it optimizing your supply chain with up-to-the-second inventory data?

Pick one of the real time analytics use cases from this article that resonates most strongly. Use the provided architectural patterns, technology stacks, and strategic considerations as a blueprint. Begin a small-scale pilot project to demonstrate the value and build momentum. By taking this first, focused step, you can begin your organization’s transformation into a truly data-driven enterprise, one that operates not on yesterday’s information, but on the intelligence of now.

Ready to stop managing complex infrastructure and start building valuable real-time applications? Streamkap provides a fully managed, serverless platform that combines CDC and stream processing to deliver analysis-ready data to your warehouse or lakehouse in seconds. Explore how you can build your first real-time pipeline in minutes by visiting Streamkap.