
What Is Event-Driven Architecture? Explained

What is event-driven architecture? This guide explains how it works, with real-world examples, core patterns, and the benefits it brings to scalable, modern systems.

Ever wonder how modern applications handle millions of user actions at once without grinding to a halt? The secret often lies in a design approach called event-driven architecture (EDA).

Instead of services directly calling each other and waiting for a response, EDA flips the script. In an event-driven system, different parts of an application react to events, which are simply records of something significant that happened, like a user clicking “buy” or a sensor reporting a new temperature. Communication becomes asynchronous, so each part of the system can scale on its own, and a failure in one part is far less likely to stall the rest.

From Restaurant Kitchens to Software Systems

To really get a feel for this, let’s forget about code for a minute and think about a busy restaurant.

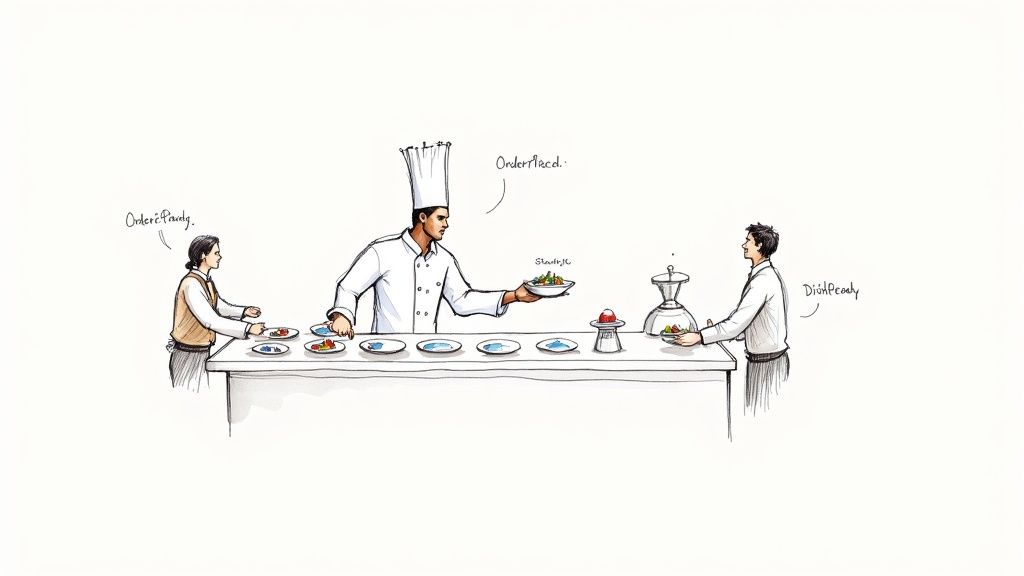

Imagine a traditional software model—what we call request-response—as a waiter who has to constantly walk back to the kitchen and ask, “Is the order for table five ready yet?” Over and over. This constant checking, or polling, is terribly inefficient. The waiter is stuck, unable to do much else, and is tightly coupled to the chef’s immediate progress.

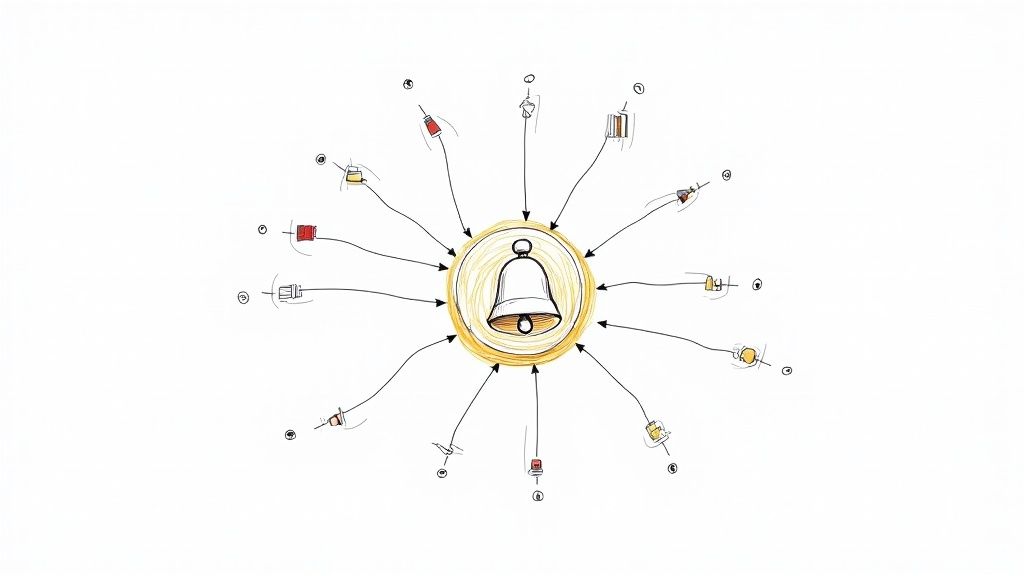

Now, let’s picture an event-driven kitchen. The chef finishes a dish and puts it on the pass. Instead of waiting for the waiter to ask, the chef simply rings a bell. That bell is the event. The sound is a notification: “Order Is Ready.” Any waiter who is free can hear that bell, grab the right dish, and deliver it.

This simple analogy gets right to the heart of EDA. The chef (the producer of the event) doesn’t need to know which specific waiter (a consumer) will pick up the food. They just announce that something important has happened, and the rest of the system responds. This shift from actively asking for updates to passively reacting to them is what makes event-driven systems so dynamic.

The Decoupled Advantage

In the restaurant model, the chef and the waiters are decoupled. The chef can stay focused on cooking, and the waiters can manage their own tasks—serving guests, clearing tables, or taking new orders. If one waiter gets held up, another can easily step in to grab the food. The whole operation is more efficient and far less fragile.

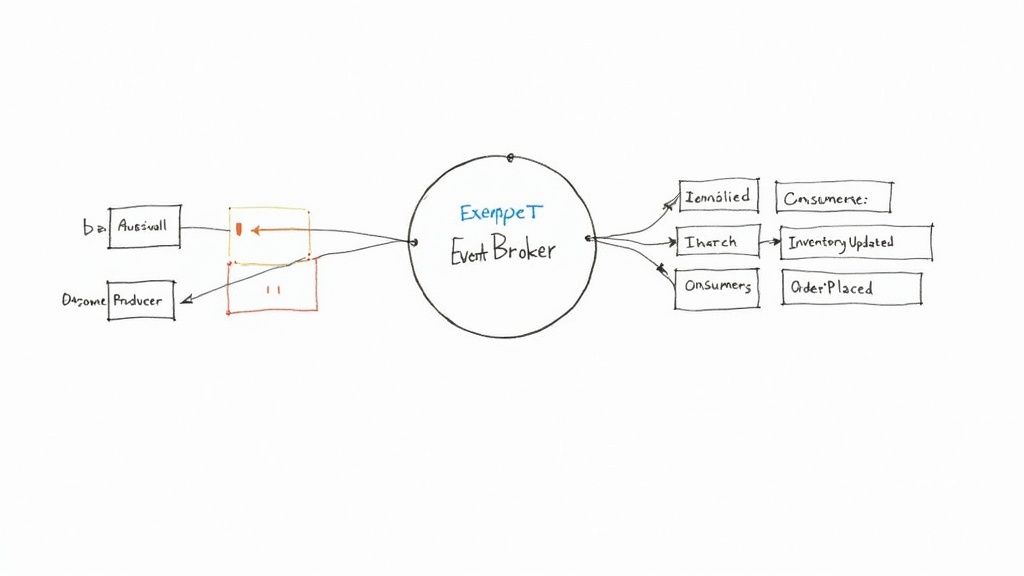

Software built with EDA works exactly the same way. When a customer completes a purchase, the order service doesn’t have to boss around every other part of the system. It simply publishes an “OrderPlaced” event. From there, several independent services can spring into action:

- The Inventory Service sees the event and immediately deducts the items from stock.

- The Shipping Service listens for the same event and kicks off the fulfillment process.

- A Notification Service picks up on it and sends the customer a confirmation email.

Notice that the order service never directly commands these other systems. It just broadcasts a fact—an order was placed—and trusts that the right components will do their jobs. This loose coupling is foundational for building complex, distributed applications. If this is a new concept for you, getting familiar with the fundamentals of event-driven programming can be a great starting point.

At its heart, event-driven architecture is about building systems that communicate through observed facts rather than direct commands. This allows individual components to evolve, scale, and even fail independently without bringing down the entire application.

Event-Driven vs. Request-Response at a Glance

To make the distinction crystal clear, it helps to see the two models side-by-side. The traditional request-response approach has its place, but EDA opens up a new world of possibilities for complex, real-time systems.

| Characteristic | Event-Driven Architecture | Request-Response Architecture |
|---|---|---|
| Coupling | Loosely coupled. Services don’t need to know about each other. | Tightly coupled. The requester must know the responder’s location and API. |
| Communication | Asynchronous. Producers fire events and move on immediately. | Synchronous. The client sends a request and blocks, waiting for a response. |
| Scalability | High. Services can be scaled independently to handle load. | Limited. Scaling often requires scaling the entire monolithic application. |
| Resilience | High. Failure of one consumer service doesn’t impact others. | Low. Failure of a downstream service can cause cascading failures. |
| Use Cases | Real-time analytics, microservices, IoT, e-commerce platforms. | Simple web services, CRUD operations, client-server interactions. |

Ultimately, choosing between them depends on what you’re building. For systems that need to react instantly to a high volume of changes, EDA is often the clear winner.

Exploring the Core Components of EDA

To really get a handle on event-driven architecture, you have to understand its core building blocks. Think of them as a well-oiled team, where each member has a specific job, all working together to create a system that’s responsive, scalable, and loosely coupled.

Let’s break down the four key players that make any event-driven system tick.

The Event: A Significant State Change

Everything starts with the event. An event isn’t just some random message; it’s an immutable record of a business fact. It’s a signal that something important just happened and the state of the system has changed.

Think of it like a newspaper headline. “Stock Market Reaches All-Time High” isn’t just a vague update; it’s a specific, factual report of an occurrence. Events in software serve the same purpose.

Here are a few classic examples you’d see in the wild:

- OrderPlaced: A customer just finished checking out.

- InventoryUpdated: The stock count for a specific product just changed.

- UserRegistered: Someone new signed up for an account.

- PaymentFailed: A credit card transaction didn’t go through.

Each event carries a payload of data related to what happened—the order ID, the new stock level, the user’s email. This context is what allows other parts of the system to react intelligently.
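To make “immutable record with a payload” concrete, here is a minimal sketch in Python. The `Event` class and its field names (`event_type`, `event_id`, `occurred_at`) are hypothetical choices for illustration, not a standard format; real systems often use a schema format such as Avro, Protobuf, or JSON Schema instead.

```python
import uuid
from dataclasses import dataclass, field
from datetime import datetime, timezone
from types import MappingProxyType
from typing import Any, Mapping


@dataclass(frozen=True)
class Event:
    """An immutable record of a business fact that has already happened."""

    event_type: str                 # named in the past tense, e.g. "OrderPlaced"
    payload: Mapping[str, Any]      # the context consumers need in order to react
    event_id: str = field(default_factory=lambda: str(uuid.uuid4()))
    occurred_at: datetime = field(default_factory=lambda: datetime.now(timezone.utc))

    def __post_init__(self) -> None:
        # frozen=True blocks attribute reassignment; wrapping a copy of the payload
        # in a read-only view also blocks top-level key changes. Nested values
        # (such as the list below) are not deep-frozen in this sketch.
        object.__setattr__(self, "payload", MappingProxyType(dict(self.payload)))


order_placed = Event("OrderPlaced", {"order_id": "123", "items": ["sku-1"], "total_cents": 4250})
```

The unique `event_id` is worth including from day one: consumers can later use it to spot duplicate deliveries.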

Event Producers: The Source of Truth

An event producer (you’ll also hear it called a publisher) is any part of your system that detects a state change and fires off an event to announce it. Its one and only job is to create and broadcast these facts.

In our e-commerce world, when a customer hits the “Confirm Purchase” button, the checkout service becomes an event producer. It bundles up all the relevant details and publishes an OrderPlaced event.

Here’s the critical part: the producer has absolutely no idea who is listening. It just shouts its news into the system, trusting something is there to catch it. This “fire-and-forget” mentality is the secret sauce to decoupling.

Event Consumers: The Responders to Action

On the receiving end, you have event consumers (or subscribers). These are the services that are listening for specific events and are ready to take action when one comes through. A single event might have one consumer, dozens, or none at all.

When that OrderPlaced event gets published, several different consumers can spring into action, each completely unaware of the others:

- The Shipping Service grabs the event to kick off the fulfillment process.

- The Inventory Service listens in to decrement the stock count.

- The Notifications Service sees it and sends a confirmation email to the customer.

Each consumer lives in its own world, only caring about the events relevant to its job. This separation allows development teams to work on their services without stepping on each other’s toes. A similar idea, an ordered chain of recorded events, is also at the heart of blockchain technology.

Decoupling in Action: The service that places an order doesn’t know or care about the service that sends the confirmation email. If the email service goes down, orders can still be placed and shipped without a problem.

Event Brokers: The Central Nervous System

The final piece holding this all together is the event broker, sometimes called an event bus. This middleware sits between producers and consumers and makes sure events reach the right consumers, reliably. It’s the central distribution hub. To get a deeper technical understanding, exploring concepts in data stream processing can provide valuable context on how this works under the hood.

Popular brokers like Apache Kafka, RabbitMQ, or Amazon SQS are the workhorses here. They handle all the messy details of message queuing, persistence, and delivery guarantees so your individual services don’t have to. By managing this communication backbone, the broker lets your producers and consumers stay simple, focused, and truly independent.
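The toy broker below is a minimal sketch of the idea, loosely inspired by log-based brokers such as Kafka but not modeled on any real broker’s API: each topic is an append-only list, and each consumer group keeps its own read position (offset). The class and method names are hypothetical, and a real broker adds durability, partitioning, replication, and retention on top.

```python
from collections import defaultdict
from typing import Any


class InMemoryLogBroker:
    """Toy broker: each topic is an append-only log, and each consumer
    group tracks its own offset into that log independently."""

    def __init__(self) -> None:
        self._logs: dict[str, list[Any]] = defaultdict(list)
        self._offsets: dict[tuple[str, str], int] = defaultdict(int)

    def publish(self, topic: str, event: Any) -> None:
        # The producer only appends; it never learns who will read the event.
        self._logs[topic].append(event)

    def poll(self, topic: str, group: str, max_events: int = 10) -> list[Any]:
        # Return events this group has not committed yet, without advancing.
        start = self._offsets[(topic, group)]
        return self._logs[topic][start:start + max_events]

    def commit(self, topic: str, group: str, count: int) -> None:
        # Advance the group's offset only after it has processed `count` events.
        self._offsets[(topic, group)] += count


broker = InMemoryLogBroker()
broker.publish("orders", {"type": "OrderPlaced", "order_id": "123"})
broker.publish("orders", {"type": "OrderPlaced", "order_id": "124"})

inventory_batch = broker.poll("orders", group="inventory")
broker.commit("orders", "inventory", len(inventory_batch))

# The email service was offline; its events are still waiting in the log.
email_batch = broker.poll("orders", group="email")
```

Because a group commits only after processing, a consumer that crashes mid-batch simply polls the same events again when it restarts, which is the at-least-once behavior discussed later.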

Key Patterns for Building Event-Driven Systems

Knowing the core components of an event-driven architecture (EDA) is one thing. Actually building a powerful, resilient system with them is another. That’s where design patterns come in—they are the proven blueprints architects use to bring these concepts to life and solve common problems in distributed systems.

We’re going to walk through three of the most essential patterns you’ll see in the wild: Publish/Subscribe (Pub/Sub), Event Sourcing, and Command Query Responsibility Segregation (CQRS). Each one provides a different set of tools for creating applications that are scalable, easy to maintain, and transparent.

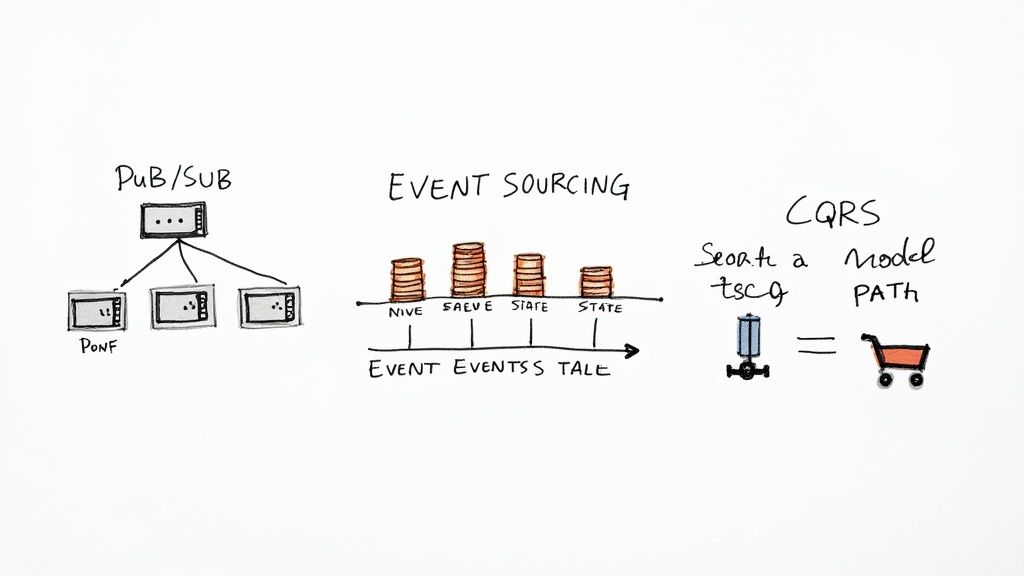

The Publish/Subscribe Pattern

The Publish/Subscribe pattern, or Pub/Sub for short, is the absolute bedrock of EDA. It’s the engine that makes decoupling between services possible. In this model, a producer doesn’t care who gets its messages. It just publishes an event to a named channel, or topic, kind of like a radio station broadcasting on a specific frequency.

Consumers then “tune in” by subscribing to the topics they’re interested in, and they’ll receive every event published on that channel. This simple idea is incredibly powerful because a single event can now trigger multiple, completely independent actions across the entire system at the same time.

Let’s jump back to our e-commerce example:

- An Order Service publishes an `OrderPlaced` event to the `orders` topic. It’s done.

- An Inventory Service, subscribed to `orders`, hears the event and immediately updates stock levels.

- The Shipping Service, also listening to `orders`, kicks off the fulfillment process.

- Meanwhile, an Analytics Service logs the sale for its business intelligence dashboards.

The original Order Service has no idea these other services even exist. It just shouts what happened into the void. This freedom is what allows development teams to add brand new features—like a fraud detection service—just by creating a new subscriber, without ever having to change a single line of code in the original producer.
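Here is the same flow as a minimal, in-process Pub/Sub sketch. The `PubSub` class is hypothetical and delivers synchronously for simplicity; a real broker delivers asynchronously over the network. It shows two properties from the text: a new subscriber (fraud detection) is added without touching the producer, and one failing subscriber (a hypothetical flaky email handler) does not block the others.

```python
from collections import defaultdict
from typing import Any, Callable

Handler = Callable[[dict[str, Any]], None]


class PubSub:
    def __init__(self) -> None:
        self._subscribers: dict[str, list[Handler]] = defaultdict(list)

    def subscribe(self, topic: str, handler: Handler) -> None:
        self._subscribers[topic].append(handler)

    def publish(self, topic: str, event: dict[str, Any]) -> list[Exception]:
        """Deliver the event to every subscriber of the topic. A failing
        handler is recorded, and delivery continues to the rest."""
        errors: list[Exception] = []
        for handler in list(self._subscribers[topic]):
            try:
                handler(event)
            except Exception as exc:  # isolate failures per subscriber
                errors.append(exc)
        return errors


log: list[str] = []


def flaky_email(event: dict[str, Any]) -> None:
    raise ConnectionError("SMTP server unavailable")  # simulated outage


bus = PubSub()
bus.subscribe("orders", lambda e: log.append(f"inventory: reserve {e['order_id']}"))
bus.subscribe("orders", lambda e: log.append(f"shipping: fulfill {e['order_id']}"))
bus.subscribe("orders", flaky_email)
bus.subscribe("orders", lambda e: log.append(f"analytics: record {e['order_id']}"))

# Added later: just another subscription; the producer's code is unchanged.
bus.subscribe("orders", lambda e: log.append(f"fraud: score {e['order_id']}"))

errors = bus.publish("orders", {"type": "OrderPlaced", "order_id": "123"})
```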

Event Sourcing

Event Sourcing is a more advanced pattern that completely flips how you think about data. Instead of storing the current state of something in a database (like a customer’s current address), you store the entire, ordered sequence of events that ever happened to it. The current state is then simply a projection, calculated by replaying those events.

It’s a lot like an accountant’s ledger. A good accountant doesn’t just write down the final bank balance. They meticulously record every single transaction—every deposit, every withdrawal. The final balance is just the result of adding up that complete history.

With Event Sourcing, the event stream itself becomes the authoritative source of truth. This gives you a complete, append-only audit log of everything that has ever happened, which is a lifesaver for debugging, compliance, and deep analytics.

If a bug ever corrupts the current state, it’s not a catastrophe. You can fix the code, discard the bad state, and rebuild the correct one simply by replaying the events from the log. This pattern offers a level of system recovery and historical insight that’s hard to achieve any other way.
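The accountant’s-ledger idea maps directly onto a fold over the event log. This is a minimal sketch with hypothetical event kinds (`Deposited`, `Withdrawn`) and amounts in integer cents; it stores no balance at all and derives it by replaying events.

```python
from dataclasses import dataclass
from functools import reduce


@dataclass(frozen=True)
class AccountEvent:
    kind: str      # "Deposited" or "Withdrawn"
    amount: int    # in cents, to avoid floating-point rounding


def apply_event(balance: int, event: AccountEvent) -> int:
    """Fold one event into the current state."""
    if event.kind == "Deposited":
        return balance + event.amount
    if event.kind == "Withdrawn":
        return balance - event.amount
    raise ValueError(f"unknown event kind: {event.kind}")


def replay(events: list[AccountEvent]) -> int:
    # The balance is a projection derived from the log, never stored as truth.
    return reduce(apply_event, events, 0)


ledger = [
    AccountEvent("Deposited", 10_000),
    AccountEvent("Withdrawn", 2_500),
    AccountEvent("Deposited", 1_200),
]
balance = replay(ledger)            # current state
balance_after_two = replay(ledger[:2])  # state as of an earlier point in time
```

If `apply_event` had a bug, recovery would mean fixing the function and calling `replay` again over the unchanged `ledger`; the events themselves never need repairing.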

Command Query Responsibility Segregation (CQRS)

While you don’t have to use it with EDA, Command Query Responsibility Segregation (CQRS) is a pattern that fits like a glove, especially when you’re already using Event Sourcing. The idea behind CQRS is simple but profound: completely separate the models you use for writing data (Commands) from the models you use for reading data (Queries).

In a typical application, you often use the same data model for both updating and fetching information. This gets complicated fast, as that one model has to juggle the competing needs of transactional consistency for writes and high performance for reads.

CQRS solves this by creating two distinct pathways:

- The Command Side: This handles all requests that change the system’s state, like `CreateUser` or `UpdateProductPrice`. These commands are what generate the events that other services consume.

- The Query Side: This side is all about providing optimized, often denormalized “read models” specifically tailored for what a user or service needs to see. These read models are updated in the background by listening to the events flying out of the command side.

For instance, when a user updates their profile (a command), a UserProfileUpdated event is published. A dedicated consumer then listens for that event and updates several different read models—one for the user’s main account page, another for a company admin dashboard, and maybe a third for a site-wide search index. This separation lets you optimize each path independently, keeping writes safe and consistent while making reads blazingly fast.
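The profile example can be sketched in a few dozen lines. Everything here is hypothetical: the command handler validates and writes to its own model, then emits `UserProfileUpdated`; a projector function updates three read models shaped for the account page, an admin dashboard, and search. The two sides are wired directly for brevity, where a real system would route the event through a broker.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass(frozen=True)
class UserProfileUpdated:
    user_id: str
    name: str
    company: str


class UserCommandHandler:
    """Command side: validates the request, updates the write model, emits an event."""

    def __init__(self, publish: Callable[[UserProfileUpdated], None]) -> None:
        self._users: dict[str, dict[str, str]] = {}   # write model
        self._publish = publish

    def update_profile(self, user_id: str, name: str, company: str) -> None:
        if not name.strip():
            raise ValueError("name must not be empty")
        self._users[user_id] = {"name": name, "company": company}
        self._publish(UserProfileUpdated(user_id, name, company))


# Query side: denormalized read models, each shaped for one screen or lookup.
account_page: dict[str, str] = {}            # user_id -> display name
admin_dashboard: dict[str, set[str]] = {}    # company -> user_ids
search_index: dict[str, set[str]] = {}       # lowercase name token -> user_ids


def project(event: UserProfileUpdated) -> None:
    """Keep every read model in sync with the event. For brevity this sketch
    only adds entries; a real projector would also remove stale ones."""
    account_page[event.user_id] = event.name
    admin_dashboard.setdefault(event.company, set()).add(event.user_id)
    for token in event.name.lower().split():
        search_index.setdefault(token, set()).add(event.user_id)


commands = UserCommandHandler(publish=project)
commands.update_profile("u1", "Ada Lovelace", "Analytical Engines")
```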

Taken together, these patterns create a robust toolkit for building modern, distributed applications. They elevate the simple concept of “reacting to events” into a structured, scalable, and genuinely powerful architectural strategy.

The Business Case for Adopting EDA

So, we’ve walked through the nuts and bolts of event-driven architecture. But the big question on everyone’s mind is always, “Why should we do this?” It’s a fair question. The answer isn’t just about technical cool points; it’s about real, measurable business impact. Moving to an EDA is a strategic call that directly affects how well you can scale, how resilient your systems are, and how fast your teams can actually build and ship new things.

The companies leading the charge aren’t just modernizing for the sake of it. They’re building systems that don’t fall over during unpredictable demand and can bounce back from failures on their own. At the same time, they’re giving their development teams the freedom to move faster than ever before.

Unlocking Unprecedented Scalability

Think about a traditional, tightly coupled system. A flash sale kicks off, traffic spikes in the order processing service, and suddenly, the whole thing grinds to a halt. The overloaded order service ends up locking resources needed by user authentication and inventory, and boom: a site-wide outage.

Event-driven architecture flips this script entirely. Since services are decoupled, you can scale them one by one. If the OrderPlaced event stream is suddenly flooded with a million events a minute, you just spin up more inventory and shipping consumers to keep up. The best part? This massive surge has zero direct impact on totally unrelated services like user sign-ups or product reviews.
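“Spinning up more consumers” is often called the competing-consumers pattern: several identical workers pull from the same stream, and each event goes to exactly one of them. Below is a minimal sketch using Python’s standard `queue` and `threading` modules; the worker function and `NUM_WORKERS` are hypothetical stand-ins for an inventory service and its replica count.

```python
import queue
import threading
from collections import Counter

events = queue.Queue()
processed = Counter()          # worker name -> events handled
lock = threading.Lock()


def inventory_worker(name: str) -> None:
    while True:
        order_id = events.get()
        if order_id is None:              # sentinel: no more work for this worker
            events.task_done()
            return
        with lock:
            processed[name] += 1          # stand-in for "deduct stock for order_id"
        events.task_done()


NUM_WORKERS = 4  # raise or lower to match load; the producer never changes
workers = [
    threading.Thread(target=inventory_worker, args=(f"worker-{i}",))
    for i in range(NUM_WORKERS)
]
for w in workers:
    w.start()

for order_id in range(1_000):             # a burst of OrderPlaced events
    events.put(order_id)
for _ in workers:
    events.put(None)                      # one sentinel per worker
for w in workers:
    w.join()
```

With a real broker the mechanics differ. In Kafka, for example, consumers in the same group split a topic’s partitions between them, so the partition count caps how many consumers can usefully share the load, and ordering is only preserved within a partition.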

This kind of elasticity means you can absorb huge, unexpected traffic spikes without having to over-provision your entire infrastructure just in case. That translates to serious cost savings and a much better customer experience when it matters most.

Building Highly Resilient Systems

Let’s face it: things break. A service will eventually crash, or a third-party API will go down. In a classic request-response world, this often triggers a domino effect, where one failing service takes its neighbors down with it.

EDA is built for resilience. If the service that sends confirmation emails suddenly dies, it just stops pulling from the OrderPlaced event stream. Meanwhile, the core business of processing orders, updating inventory, and starting the shipping process hums along perfectly because those services aren’t stuck waiting on a response from the email service.

An event-driven system is built to tolerate faults. When a failed service comes back online, it can pick up processing from the last event it acknowledged, so no events are lost as long as the broker still retains them, and the system recovers without manual intervention.

This design prevents a small fire in one corner of your system from turning into a five-alarm blaze that takes down the entire operation, which is absolutely critical for keeping the business running and customers happy.

Accelerating Innovation and Development

This might be the most powerful long-term benefit of all: the massive boost to developer speed and innovation. When your services are decoupled, so are the teams that build them. The team working on a new fraud detection feature doesn’t have to schedule a meeting or coordinate a risky deployment with the checkout team.

Instead, they can just build a new consumer that listens to the existing OrderPlaced event stream, test it in its own little world, and ship it. This kind of freedom opens up a new way of working:

- Parallel Development: Multiple teams can build different things at the same time without stepping on each other’s toes.

- Faster Deployments: Small, independent services can be updated and pushed to production way faster than a massive, monolithic application.

- Safer Experimentation: You can add new services that listen to existing events and try out new ideas with little risk to your core business logic, because the existing producers and consumers stay untouched.

And the data backs this up. A recent analysis of real-world EDA implementations found a 62% reduction in system latency and a 58% improvement in throughput. It also led to a 76.9% decrease in direct API dependencies, which is a huge win for modularity. One e-commerce platform was seen processing 8.7 million events per minute at its peak, automatically scaling to handle an 870% traffic surge while maintaining 99.98% availability. You can explore the full findings and see exactly how EDA is delivering these kinds of results.

Navigating Implementation and Common Challenges

Making the switch to an event-driven architecture is a big step, but it’s one that brings a whole new set of design questions to the table. This isn’t your classic request-response world anymore. To build a system that’s truly scalable, reliable, and easy to maintain, you have to get the details right from the start.

When you successfully adopt EDA, you’re really shifting your mindset from giving direct commands to embracing asynchronous communication and eventual consistency. It’s about building a system that can grow, heal itself, and move fast.

This shift is what unlocks major improvements in scale, resilience, and speed for your entire operation. It’s a natural progression: building for scale and resilience is what ultimately lets you operate with greater speed and agility.

Ensuring Reliable Event Delivery

In any distributed system, things will fail. It’s not a question of if, but when. Networks drop, services restart. So, what happens if a consumer grabs an event, processes it, but crashes right before it can say “I’m done”? The event broker, thinking the job failed, will likely send that same event again. Now you’ve got a duplicate.

This is where you have to think about delivery guarantees:

- At-Least-Once Delivery: The system promises every event gets delivered at least one time. This is the most common and practical approach, but it means your downstream services must be ready to see the same event more than once.

- Exactly-Once Delivery: The holy grail. This guarantees an event is processed precisely one time. It sounds perfect, but achieving it in the real world is incredibly complex and often comes with a steep performance penalty.

For the vast majority of use cases, designing your system for at-least-once delivery is the way to go. It just puts the responsibility on the consumer to handle any duplicates that might show up.

Designing for Idempotency

So, how do you safely handle at-least-once delivery? The answer is idempotency. It’s a crucial concept in EDA, and it just means that processing the same event multiple times has the exact same result as processing it only once.

An idempotent operation can be repeated over and over without changing the outcome beyond the initial application. For example, charging a credit card for orderId: 123 is not idempotent, but setting the status of orderId: 123 to PAID is.
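The contrast between the two operations is easy to see when the same event is handled three times. In this minimal sketch, `charge_card` and `mark_paid` are hypothetical handlers, and the in-memory list and dict stand in for a payment system and an orders table.

```python
order_status = {"123": "PENDING"}
charges: list[tuple[str, int]] = []


def charge_card(order_id: str, amount_cents: int) -> None:
    # NOT idempotent: every call records another charge.
    charges.append((order_id, amount_cents))


def mark_paid(order_id: str) -> None:
    # Idempotent: the end state is the same no matter how often it runs.
    order_status[order_id] = "PAID"


for _ in range(3):          # the same event, delivered three times
    charge_card("123", 4_250)
    mark_paid("123")
```

After the loop the order is simply `PAID`, but the customer has been charged three times.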

Achieving this usually involves keeping track of the events you’ve already processed. When a new event comes in, the consumer first checks its ID against a log of processed events. If it’s a repeat, the consumer simply acknowledges it and moves on, preventing messy situations like sending a customer the same order confirmation email three times.
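A minimal sketch of that deduplication check follows. The consumer class and event fields are hypothetical, and the in-memory `set` stands in for what would be durable storage in production.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class OrderPlaced:
    event_id: str
    order_id: str
    email: str


class ConfirmationEmailConsumer:
    def __init__(self) -> None:
        # In production, processed IDs belong in durable storage; a set is
        # enough to show the idea.
        self._processed_ids: set[str] = set()
        self.sent: list[str] = []

    def handle(self, event: OrderPlaced) -> None:
        if event.event_id in self._processed_ids:
            return  # duplicate delivery: acknowledge and move on
        self.sent.append(f"Order {event.order_id} confirmed for {event.email}")
        # A crash between the send above and this line could still cause one
        # resend; closing that gap fully requires an idempotent or
        # transactional side effect.
        self._processed_ids.add(event.event_id)


consumer = ConfirmationEmailConsumer()
event = OrderPlaced(event_id="evt-1", order_id="123", email="ada@example.com")
for _ in range(3):          # at-least-once delivery redelivers the same event
    consumer.handle(event)
```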

Managing Schema Evolution

Your business isn’t static, and neither are your events. Today, your UserSignedUp event might just have a userId and email. Six months from now, you might need to add a signupSource to track where new users are coming from. How do you make that change without breaking every service that listens for that event?

This is where a schema registry becomes your best friend. A schema registry is a central, authoritative source for all your event structures. It can enforce compatibility rules on every change (for example, requiring new versions to be backward compatible, forward compatible, or both), acting as a critical guardrail that prevents producers from publishing data that consumers can’t understand.
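To show what a compatibility rule actually checks, here is a deliberately simplified sketch loosely modeled on Avro-style schema resolution: a reader can decode a writer’s data if every field the reader expects is either present in the writer with the same type or has a default. The schema representation and function names are hypothetical, not any registry’s API, and real rules also cover type promotion, nested types, and unions.

```python
from typing import Any

Schema = dict[str, dict[str, Any]]  # field name -> {"type": ..., optional "default": ...}


def can_read(reader: Schema, writer: Schema) -> bool:
    """Simplified rule: each reader field must exist in the writer with the
    same type, or carry a default. Writer-only fields are ignored."""
    for name, spec in reader.items():
        if name in writer:
            if writer[name]["type"] != spec["type"]:
                return False
        elif "default" not in spec:
            return False
    return True


def backward_compatible(new: Schema, old: Schema) -> bool:
    return can_read(reader=new, writer=old)   # new consumers, old events


def forward_compatible(new: Schema, old: Schema) -> bool:
    return can_read(reader=old, writer=new)   # old consumers, new events


user_signed_up_v1: Schema = {"userId": {"type": "string"}, "email": {"type": "string"}}
user_signed_up_v2: Schema = {
    **user_signed_up_v1,
    "signupSource": {"type": "string", "default": "unknown"},
}
user_signed_up_no_default: Schema = {**user_signed_up_v1, "signupSource": {"type": "string"}}
```

Adding `signupSource` with a default passes in both directions. Without a default, new consumers could not read old `UserSignedUp` events, so a registry configured for backward compatibility would reject that version.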

Bridging the Gap with Change Data Capture

One of the biggest roadblocks to adopting EDA is the “first mile” problem: how do you get events out of your big, monolithic databases in the first place? Rewriting your entire legacy application to publish events is rarely practical.

Luckily, Change Data Capture (CDC) offers a brilliant path forward.

CDC is a technique that plugs directly into a database’s internal transaction log. It watches for every row-level change (every insert, update, and delete) and streams those changes out as events in real time. This lets you turn your database into a live event source without touching a single line of your application’s code. To get the full picture, you can learn more about what Change Data Capture is and its foundational role in modern data architecture.
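Raw CDC output describes rows, not business facts, so a common next step is translating row changes into domain events. The sketch below assumes a simplified change record with `op`, `before`, and `after` fields, loosely resembling the before/after envelopes that log-based CDC tools commonly emit; the exact field names and the `orders` table are assumptions for illustration, not any particular tool’s output format.

```python
from typing import Any, Optional

ChangeRecord = dict[str, Any]


def to_domain_event(change: ChangeRecord) -> Optional[dict[str, Any]]:
    """Translate a row-level change on a hypothetical `orders` table into a business event."""
    op, before, after = change["op"], change.get("before"), change.get("after")
    if op == "insert":
        return {"type": "OrderPlaced", "order_id": after["id"], "total": after["total"]}
    if op == "update" and before["status"] != after["status"]:
        return {"type": "OrderStatusChanged", "order_id": after["id"],
                "from": before["status"], "to": after["status"]}
    if op == "delete":
        return {"type": "OrderDeleted", "order_id": before["id"]}
    return None  # e.g. an update that touched no field we care about


changes = [
    {"op": "insert", "before": None,
     "after": {"id": 7, "status": "NEW", "total": 4250}},
    {"op": "update", "before": {"id": 7, "status": "NEW", "total": 4250},
     "after": {"id": 7, "status": "PAID", "total": 4250}},
    {"op": "update", "before": {"id": 7, "status": "PAID", "total": 4250},
     "after": {"id": 7, "status": "PAID", "total": 4250}},
]
events = [e for e in map(to_domain_event, changes) if e is not None]
```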

With a CDC platform like Streamkap, you can connect to databases like PostgreSQL or MySQL and stream those change events directly to an event broker like Apache Kafka. This creates a low-risk, practical on-ramp for migrating from a monolith to a nimble microservices architecture, one piece at a time.

Where EDA Is Headed: A Look at the Market

Event-driven architecture isn’t just a niche tactic for Silicon Valley giants anymore. It’s quickly becoming the standard way to build modern, responsive applications. This shift is happening for one simple reason: an insatiable demand for real-time data across nearly every industry.

Think about it. A financial service needs to spot fraud the instant it happens, not hours later. A logistics company has to track a package in real time, down to the second. The ability to react to events as they happen is a massive competitive advantage, and it’s fueling huge growth in the tools that make EDA possible.

An Ecosystem in Full Swing

The technology behind EDA is evolving fast. Powerhouses like Apache Kafka and Apache Pulsar have long set the gold standard for high-volume data streaming. But now, a new generation of platforms is cropping up, designed to take the headache out of building and managing these incredibly powerful, but complex, systems.

This growing toolkit is making event-driven architecture a real possibility for companies of all sizes, not just those with massive engineering departments. The focus is moving from just providing raw infrastructure to offering managed services and developer-friendly tools. This means more teams can build sophisticated, data-driven applications without needing a platoon of specialists just to keep the lights on. The barrier to entry for building an event-driven system is lower than ever.

The global market for EDA platforms was valued at around USD 3.7 billion in 2024 and is expected to grow significantly. This boom is a direct result of the increasing need for real-time data and scalable microservices.

By 2025, it’s estimated that 72% of companies worldwide had adopted EDA in some capacity. But here’s the reality check: only about 13% had managed a full, company-wide implementation. This tells us that while many are starting the journey, achieving deep, mature integration is still a work in progress. You can discover more insights about the EDA platform market to get the full picture of this trend.

Frequently Asked Questions About EDA

As you start digging into event-driven architecture, a few common questions always seem to pop up. Getting clear answers to these is key to understanding where EDA shines and where another approach might be a better fit. Let’s tackle some of the most common ones I hear from developers and architects.

What Is the Main Difference Between EDA and Microservices?

This is a big one, and it’s easy to get them tangled up. The simplest way to think about it is that one describes how you build your application, and the other describes how its parts talk to each other.

Microservices is an architectural style. You break down a big application into a collection of small, independent services. In contrast, Event-Driven Architecture (EDA) is a communication pattern—it’s one of the ways those services can communicate.

They actually work together beautifully. You could have your microservices call each other directly with synchronous API requests, but that creates tight coupling. Using EDA lets them communicate asynchronously through events, which makes your whole system more resilient, scalable, and loosely coupled. It’s no surprise it’s a popular pairing for modern systems.

When Should You Not Use Event-Driven Architecture?

EDA is powerful, but it’s definitely not the right tool for every job. For simple applications, the complexity of setting up an event broker and handling asynchronous logic is often just overkill.

It’s also not the best choice for workflows that absolutely need an immediate, synchronous response. Imagine a user action that has to get an instant confirmation that a complex, multi-step process is complete. In that scenario, a classic request-response model is far simpler and more direct.

Eventual consistency, a cornerstone of EDA, can also be a deal-breaker. If your business logic requires iron-clad, immediate transactional consistency across different services, trying to force that into an EDA model can introduce a world of complexity you might be better off avoiding.

How Is Data Consistency Handled in EDA?

In the world of EDA, data consistency is typically handled through a model called eventual consistency. Because every service is decoupled and just reacting to events on its own schedule, there isn’t a single, all-or-nothing transaction that keeps the whole system in sync at every instant.

Instead, the system becomes consistent over time as all the different services process the relevant events. This might just be milliseconds, but it’s not instantaneous. For workflows that need stronger guarantees, architects often turn to patterns like the Saga pattern. A Saga coordinates a sequence of local transactions across services and, crucially, defines compensating actions to “undo” previous steps if something fails along the way. It’s a way to maintain data integrity across a distributed system without a traditional, blocking transaction.
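Here is a minimal, single-process sketch of an orchestrated Saga: steps run in order, and if one fails, the steps that already succeeded are compensated in reverse order. The `SagaStep` and `run_saga` names are hypothetical. In a real system each step is a local transaction in a different service, triggered by events or commands, and compensations must themselves be retryable and idempotent.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass
class SagaStep:
    name: str
    action: Callable[[], None]
    compensate: Callable[[], None]   # semantically undoes `action`


def run_saga(steps: list[SagaStep]) -> bool:
    """Run steps in order; on failure, compensate completed steps in reverse."""
    completed: list[SagaStep] = []
    for step in steps:
        try:
            step.action()
        except Exception:
            for done in reversed(completed):
                done.compensate()
            return False
        completed.append(step)
    return True


journal: list[str] = []


def charge_payment() -> None:
    raise RuntimeError("card declined")   # simulate a failure in the last step


steps = [
    SagaStep("create order", lambda: journal.append("order created"),
             lambda: journal.append("order cancelled")),
    SagaStep("reserve stock", lambda: journal.append("stock reserved"),
             lambda: journal.append("stock released")),
    SagaStep("charge payment", charge_payment,
             lambda: journal.append("payment refunded")),
]
ok = run_saga(steps)
```

The failed payment step is never compensated, because it never took effect; only the order and the stock reservation are undone, newest first.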

Ready to bridge your existing databases to a real-time event-driven architecture without rewriting your applications? Streamkap uses Change Data Capture (CDC) to turn your database into a streaming event source, seamlessly integrating with Kafka and other platforms. Explore how you can modernize your data infrastructure at Streamkap.