Streaming CDC Data into MotherDuck: A Step-by-Step Guide

September 4, 2025

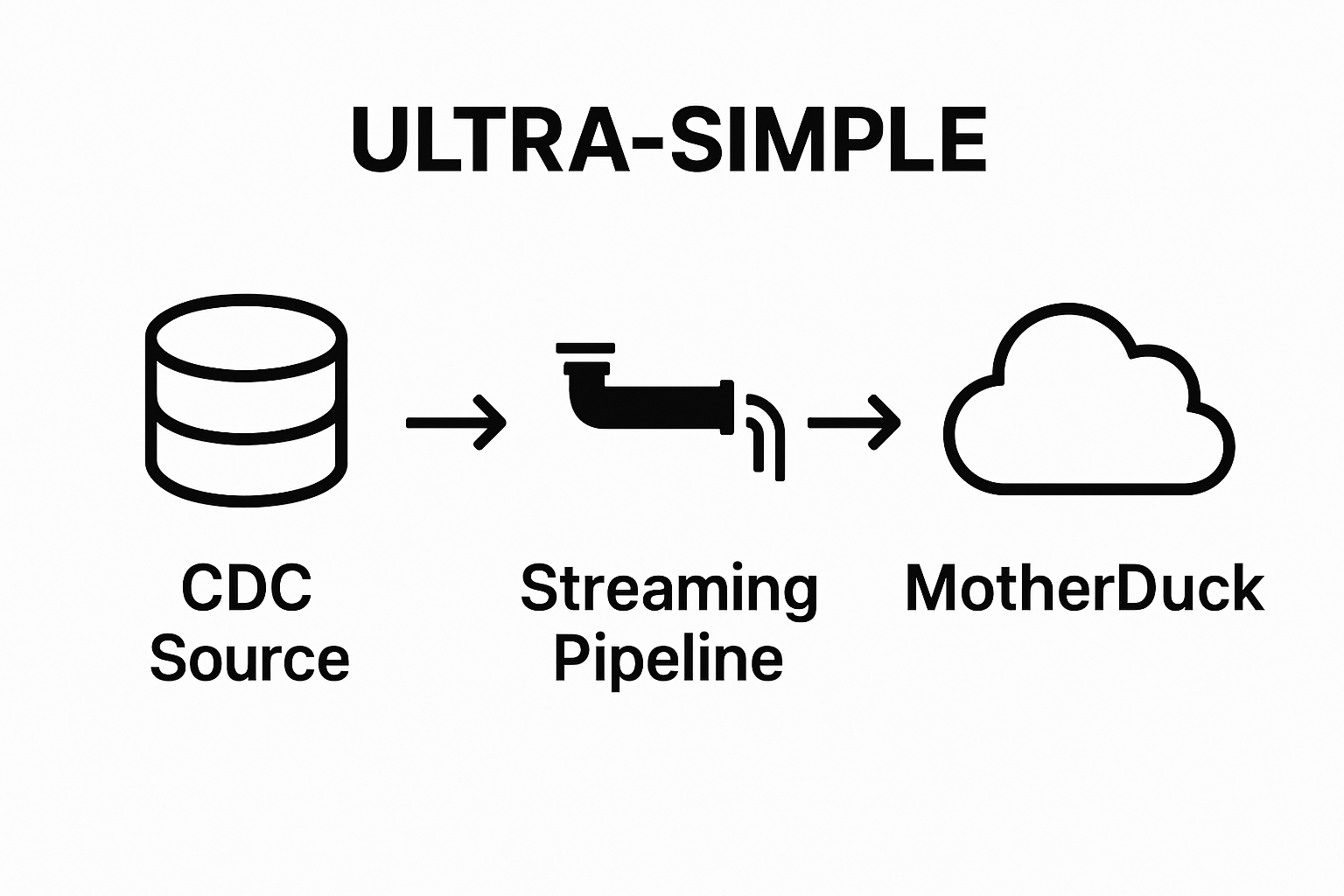

Streaming real-time change data into MotherDuck is becoming the new standard for data teams who want instant analytics. Over 70 percent of organizations now rely on CDC (Change Data Capture) to keep their data in sync accurately and quickly. Many people assume the process is complicated or fragile. In practice, a handful of careful setup steps make the workflow both simple and reliable.

Table of Contents

- Step 1: Prepare Your Environment for Streaming

- Step 2: Set Up the Data Source for CDC

- Step 3: Configure MotherDuck for Data Ingestion

- Step 4: Stream CDC Data into MotherDuck

- Step 5: Verify Data Integrity and Flow

- Step 6: Optimize and Monitor the Streaming Process

Quick Summary

| Key Point | Explanation |
|---|---|
| 1. Verify system compatibility for CDC | Ensure your data source supports change data capture and verify administrative access for configuration. |
| 2. Configure database for change tracking | Activate CDC features within your database to enable accurate tracking of data modifications. |
| 3. Establish secure connection to MotherDuck | Generate authentication credentials and set precise connection parameters for successful data ingestion. |
| 4. Monitor data integrity during streaming | Run verification queries to ensure all changes are accurately captured and reflected in MotherDuck. |
| 5. Optimize monitoring and performance | Implement real-time monitoring tools and dynamic resource scaling to maintain a high-performance data pipeline. |

Step 1: Prepare Your Environment for Streaming

Before you stream CDC data into MotherDuck, your integration environment has to be ready: the source must be able to capture changes, and the infrastructure between the source and MotherDuck must be able to move them reliably. Getting this setup right is what makes the rest of the pipeline dependable.

Begin by verifying that your systems support the streaming technologies involved. The pipeline needs a source database that exposes change data capture (CDC); typical sources include relational databases like PostgreSQL (via logical decoding), MySQL (via the binary log), and SQL Server (via its built-in CDC feature). You'll need administrative access to your source database and permission to configure replication or change tracking.

Next, install and configure the required software components. This typically means setting up a streaming platform like Apache Kafka or a specialized CDC connector. Our comprehensive guide on data streaming covers how to select the right tools for your specific use case.
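If you choose Kafka Connect with the open-source Debezium connector (one common option; the article does not prescribe a specific tool), registering a PostgreSQL source comes down to POSTing a JSON configuration to the Kafka Connect REST API. The Python sketch below uses only the standard library. The Connect URL `http://localhost:8083`, the connector name, credentials, and table names are placeholder assumptions, and the key names follow Debezium 2.x, so check them against the version you run.

```python
import json
import urllib.request


def debezium_postgres_config(name: str, host: str, dbname: str, user: str,
                             password: str, tables: list) -> dict:
    """Build a Kafka Connect payload for a Debezium PostgreSQL connector.

    All argument values are placeholders; key names follow Debezium 2.x.
    """
    return {
        "name": name,
        "config": {
            "connector.class": "io.debezium.connector.postgresql.PostgresConnector",
            "database.hostname": host,
            "database.port": "5432",
            "database.user": user,
            "database.password": password,
            "database.dbname": dbname,
            "topic.prefix": name,                    # prefix for the Kafka topic names
            "plugin.name": "pgoutput",               # PostgreSQL's built-in logical decoding plugin
            "table.include.list": ",".join(tables),  # capture only these tables
        },
    }


def register_connector(payload: dict, connect_url: str = "http://localhost:8083") -> int:
    """POST the payload to Kafka Connect's /connectors endpoint and return the HTTP status."""
    request = urllib.request.Request(
        f"{connect_url}/connectors",
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )
    with urllib.request.urlopen(request, timeout=10) as response:
        return response.status
```

For example, `register_connector(debezium_postgres_config("orders-cdc", "db.internal", "shop", "cdc_user", password, ["public.orders"]))` would register a connector that captures only `public.orders`; every value shown is hypothetical.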

Key prerequisites for your environment include:

- Stable network connectivity with low latency between your source database and MotherDuck

- Sufficient compute resources to handle real-time data transformation

- Configured firewall rules allowing secure data transmission

- Appropriate authentication credentials for both source and destination systems

Validate your network and security configuration carefully to prevent interruptions once data is flowing. Test the connection with a small-scale proof of concept before scaling to full production; this surfaces integration problems early, when they are cheapest to fix.
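A quick first check for the proof of concept is whether the pipeline host can reach the source database through the firewall, and how long a TCP handshake takes. The sketch below is a minimal, standard-library-only probe; it does not test authentication or TLS, and the host name in the usage note is a placeholder.

```python
import socket
import statistics
import time


def tcp_connect_latency_ms(host: str, port: int, attempts: int = 5,
                           timeout: float = 3.0) -> dict:
    """Open `attempts` TCP connections and report handshake times in milliseconds.

    Raises OSError if the host is unreachable or a firewall blocks the port.
    """
    samples = []
    for _ in range(attempts):
        start = time.perf_counter()
        with socket.create_connection((host, port), timeout=timeout):
            pass  # connection established; close it immediately
        samples.append((time.perf_counter() - start) * 1000)
    return {
        "min_ms": min(samples),
        "median_ms": statistics.median(samples),
        "max_ms": max(samples),
    }
```

Running `tcp_connect_latency_ms("db.internal", 5432)` from the machine that will run the connector (5432 is PostgreSQL's default port) either returns timings or raises `OSError`, which usually points to DNS, routing, or firewall rules.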

Below is a checklist table to help validate your CDC streaming pipeline setup, ensuring all requirements and verification steps are completed before moving to production.

| Step | Verification Item | Completion Criteria |
|---|---|---|
| 1 | System Compatibility | Data source supports CDC and administrative access is available |
| 2 | Network Configuration | Stable, low-latency connectivity and secure firewall rules in place |
| 3 | CDC Enabled | Change tracking or replication features activated in source database |
| 4 | Permissions | All necessary permissions for CDC extraction verified |
| 5 | Connection Setup | Authentication credentials generated and connection parameters configured for MotherDuck |
| 6 | Sample Streaming Test | Sample data modifications successfully streamed into MotherDuck |
| 7 | Data Integrity Check | Comparison queries confirm data completeness and accuracy |

Step 2: Set Up the Data Source for CDC

Configuring your data source for Change Data Capture (CDC) is what makes a streaming pipeline into MotherDuck possible. In this stage you prepare the source database to record every data modification so that it can be read and transmitted accurately, which means configuring the database's replication and change-tracking mechanisms carefully.

The process begins with identifying and enabling CDC capabilities within your specific database management system. For most relational databases like PostgreSQL, MySQL, and SQL Server, this involves activating built-in change tracking features. Administrators will need to modify database settings to log transactional changes, creating a reliable stream of data modifications that can be captured and replicated.
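Which settings need to change depends on the engine. As a rough sketch, assuming PostgreSQL logical decoding or MySQL row-based binary logging (the values below are typical minimums for log-based connectors such as Debezium, but your connector's documentation is authoritative), you can read the current values with the queries shown and check them in Python. SQL Server works differently: CDC is switched on per database and per table with the `sys.sp_cdc_enable_db` and `sys.sp_cdc_enable_table` stored procedures, so it is not covered here.

```python
# Read-only queries that return (name, value) rows for the relevant settings.
POSTGRES_SETTINGS_QUERY = (
    "SELECT name, setting FROM pg_settings "
    "WHERE name IN ('wal_level', 'max_replication_slots', 'max_wal_senders')"
)
MYSQL_SETTINGS_QUERY = (
    "SHOW VARIABLES WHERE Variable_name IN "
    "('log_bin', 'binlog_format', 'binlog_row_image')"
)


def cdc_readiness_problems(engine: str, settings: dict) -> list:
    """Return a list of problems; an empty list means the checks passed.

    The required values are typical for log-based CDC connectors (assumption);
    confirm them against your connector's documentation.
    """
    problems = []
    if engine == "postgresql":
        if settings.get("wal_level") != "logical":
            problems.append("wal_level must be 'logical' (changing it requires a restart)")
        for name in ("max_replication_slots", "max_wal_senders"):
            if int(settings.get(name, 0)) < 1:
                problems.append(f"{name} must be at least 1")
    elif engine == "mysql":
        if str(settings.get("log_bin", "")).upper() != "ON":
            problems.append("binary logging (log_bin) must be enabled")
        if str(settings.get("binlog_format", "")).upper() != "ROW":
            problems.append("binlog_format must be ROW")
        if str(settings.get("binlog_row_image", "")).upper() != "FULL":
            problems.append("binlog_row_image should be FULL so updates carry whole rows")
    else:
        raise ValueError(f"unsupported engine: {engine!r}")
    return problems
```

After running `POSTGRES_SETTINGS_QUERY` through any DB-API cursor, `cdc_readiness_problems("postgresql", dict(cursor.fetchall()))` lists what still needs to change.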

Learn more about optimizing database streaming to ensure your data source is configured for maximum efficiency. When configuring CDC, pay special attention to the following critical parameters:

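- Enabling supplemental logging (or its equivalent, such as the replica identity setting in PostgreSQL) so that updates and deletes carry enough row data for tracking

- Configuring appropriate user permissions for change data extraction

- Applying the minimum required database-level replication settings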

- Defining precise capture scopes for relevant tables and schemas
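For PostgreSQL, these parameters translate into a handful of statements: a login role with the REPLICATION attribute, read access for the initial snapshot, a replica identity setting for full old-row images, and a publication that limits capture to specific tables. The sketch below only generates the SQL, to be run as a superuser or equivalent. The role, publication, schema, and table names are hypothetical, and managed database services may grant replication through their own roles rather than the REPLICATION attribute.

```python
import re

_IDENT = re.compile(r"^[a-z_][a-z0-9_]*$")


def _check(ident: str) -> str:
    # Only allow simple lowercase identifiers so they can be interpolated safely.
    if not _IDENT.match(ident):
        raise ValueError(f"unsafe identifier: {ident!r}")
    return ident


def postgres_cdc_setup_sql(user: str, publication: str, schema: str, tables: list) -> list:
    """Return the statements that scope CDC to specific tables."""
    user, publication, schema = _check(user), _check(publication), _check(schema)
    qualified = [f"{schema}.{_check(t)}" for t in tables]
    statements = [
        # REPLICATION lets the connector open a logical replication slot.
        # Set the password separately (e.g. ALTER ROLE ... PASSWORD) so it stays out of code.
        f"CREATE ROLE {user} WITH LOGIN REPLICATION",
        f"GRANT USAGE ON SCHEMA {schema} TO {user}",
    ]
    for table in qualified:
        statements.append(f"GRANT SELECT ON {table} TO {user}")  # needed for the initial snapshot
        # Write full old-row images for UPDATE/DELETE, PostgreSQL's closest
        # analogue to supplemental logging.
        statements.append(f"ALTER TABLE {table} REPLICA IDENTITY FULL")
    # The publication defines exactly which tables are streamed.
    statements.append(f"CREATE PUBLICATION {publication} FOR TABLE {', '.join(qualified)}")
    return statements
```

REPLICA IDENTITY FULL increases WAL volume; the default (primary key only) is often sufficient if downstream consumers only need keys to apply deletes.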

Verify your CDC configuration by running test queries that demonstrate successful change tracking. This typically involves creating sample data modifications and confirming that these changes are correctly logged and can be retrieved by your streaming mechanism. Careful validation at this stage prevents potential data synchronization issues downstream, ensuring a smooth and reliable data streaming experience into MotherDuck. Remember that each database system has unique CDC implementation methods, so consult your specific database documentation for precise configuration instructions.
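On PostgreSQL, one way to run this test without external tooling is a temporary logical replication slot using the test_decoding plugin from PostgreSQL's contrib modules. The statements below (run with autocommit on, against a hypothetical orders table) create a slot, make a no-op change, read the decoded changes, and drop the slot again; the small parser counts changes per table and operation so you can compare them with what you modified.

```python
import re

# Run one at a time with autocommit on. Requires wal_level = logical and the
# test_decoding plugin. public.orders and id = 1 are hypothetical; use a
# table and row you can safely touch.
SMOKE_TEST_SQL = [
    "SELECT pg_create_logical_replication_slot('cdc_smoke_test', 'test_decoding')",
    "UPDATE public.orders SET status = status WHERE id = 1",  # no-op change, still logged
    "SELECT data FROM pg_logical_slot_get_changes('cdc_smoke_test', NULL, NULL)",
    # Always drop test slots: an abandoned slot makes the server retain WAL.
    "SELECT pg_drop_replication_slot('cdc_smoke_test')",
]

# test_decoding emits lines such as:
#   table public.orders: UPDATE: id[integer]:1 status[text]:'paid'
_CHANGE_LINE = re.compile(r"^table (?P<table>\S+): (?P<op>INSERT|UPDATE|DELETE|TRUNCATE):")


def summarize_changes(lines: list) -> dict:
    """Count (table, operation) pairs in test_decoding output, skipping BEGIN/COMMIT."""
    counts = {}
    for line in lines:
        match = _CHANGE_LINE.match(line)
        if match:
            key = (match.group("table"), match.group("op"))
            counts[key] = counts.get(key, 0) + 1
    return counts
```

Feed the parser the `data` column returned by the third statement, for example `summarize_changes([row[0] for row in cursor.fetchall()])`.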

Step 3: Configure MotherDuck for Data Ingestion

Configuring MotherDuck for ingestion is where captured changes become analytics-ready data. It requires precise connection parameters and authentication so that data travels over a secure, reliable path.

Start by generating authentication credentials in your MotherDuck account, such as an access token or service account with the permissions needed to receive and write incoming data. Manage these credentials carefully: scope their access to match your data governance requirements, and keep them out of source code.
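In Python, MotherDuck is reached through the DuckDB client with an `md:` connection string, and the MotherDuck extension can read the access token from the `motherduck_token` environment variable. The sketch below keeps the token out of the connection string and out of source code; the database name is a placeholder, and `duckdb` is only imported when you actually connect.

```python
import os


def motherduck_dsn(database: str) -> str:
    """Return an md: connection string without embedding the token.

    Assumes the token was exported beforehand (e.g. injected from a secrets
    manager as motherduck_token), so it never appears in code or logs.
    """
    if not os.environ.get("motherduck_token"):
        raise RuntimeError("motherduck_token is not set; export it before connecting")
    return f"md:{database}"


def connect_motherduck(database: str):
    import duckdb  # imported lazily; install with `pip install duckdb`

    return duckdb.connect(motherduck_dsn(database))
```

Once the token is set, `connect_motherduck("analytics").execute("SELECT 1").fetchall()` makes a reasonable first smoke test.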

Explore advanced data pipeline strategies to understand the nuances of seamless data integration. When setting up your MotherDuck environment, focus on connection parameters that support real-time streaming. This includes configuring network settings, defining data transformation rules, and creating schema mappings that translate your source database's structure into MotherDuck's analytical (DuckDB) tables.

Key configuration considerations include:

- Specifying precise data schema and type mappings

- Configuring network firewall rules for secure data transmission

- Setting up appropriate data retention and archival policies

- Defining real-time transformation rules for incoming data streams
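Schema and type mapping is easiest to reason about as an explicit table. The sketch below maps a few common PostgreSQL types to DuckDB types (MotherDuck uses DuckDB's type system) and generates a CREATE TABLE statement with a primary key. The mapping is an assumption to adapt, not a complete list: unconstrained numeric and any decimal wider than DuckDB's 38-digit limit are mapped to VARCHAR so no digits are silently lost, though DOUBLE is a reasonable alternative if approximate values are acceptable.

```python
import re

# Assumed PostgreSQL -> DuckDB mapping; extend it for the types you use.
PG_TO_DUCKDB = {
    "smallint": "SMALLINT",
    "integer": "INTEGER",
    "bigint": "BIGINT",
    "boolean": "BOOLEAN",
    "real": "FLOAT",
    "double precision": "DOUBLE",
    "text": "VARCHAR",
    "character varying": "VARCHAR",
    "uuid": "UUID",
    "date": "DATE",
    "timestamp without time zone": "TIMESTAMP",
    "timestamp with time zone": "TIMESTAMPTZ",
    "json": "JSON",
    "jsonb": "JSON",
    "bytea": "BLOB",
}


def duckdb_type(pg_type: str) -> str:
    t = pg_type.strip().lower()
    match = re.fullmatch(r"numeric\((\d+),\s*(\d+)\)", t)
    if match:
        precision, scale = int(match.group(1)), int(match.group(2))
        if precision <= 38:  # DuckDB DECIMAL supports up to 38 digits
            return f"DECIMAL({precision},{scale})"
        return "VARCHAR"  # keep exact digits rather than losing precision
    if t == "numeric":
        return "VARCHAR"  # unconstrained numeric has no fixed precision
    if t not in PG_TO_DUCKDB:
        raise ValueError(f"no mapping for PostgreSQL type {pg_type!r}")
    return PG_TO_DUCKDB[t]


def create_table_sql(table: str, columns: list, primary_key: list) -> str:
    """columns is a list of (name, postgres_type) pairs."""
    cols = ",\n  ".join(f"{name} {duckdb_type(pg)}" for name, pg in columns)
    return f"CREATE TABLE IF NOT EXISTS {table} (\n  {cols},\n  PRIMARY KEY ({', '.join(primary_key)})\n)"
```

Declaring the primary key up front matters because key-based upserts in the destination depend on it.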

Verify the configuration with a small-scale test ingestion. Run a sample data stream and confirm that the data is processed, transformed, and stored correctly in MotherDuck. Pay close attention to data integrity, checking that every captured change arrives without loss or corruption. A correct configuration here is what lets raw transactional data flow reliably into your analytics.

Step 4: Stream CDC Data into MotherDuck

Streaming Change Data Capture (CDC) data into MotherDuck is where the preceding steps come together. This step establishes a continuous data flow that captures database modifications and replicates them accurately and reliably.

Initiate the streaming process by configuring your chosen data streaming platform to create a persistent connection between your source database and MotherDuck. This typically involves setting up a streaming connector that can translate raw CDC logs into a format compatible with MotherDuck’s ingestion mechanisms. Carefully map your source database schema to ensure accurate data representation, paying close attention to data type conversions and potential precision limitations.
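If your connector emits Debezium-style change events, the translation step can be as small as turning each event into one parameterized statement. The sketch assumes the JSON converter with schemas enabled (so fields sit under `payload`), the `op` codes c, r, u, and d, tombstone messages already filtered out, and a target table with a primary key. It uses DuckDB's `INSERT OR REPLACE`, so replaying an event leaves the row unchanged; events for the same key must still be applied in order, which Kafka preserves within a partition when messages are keyed by primary key.

```python
def event_to_sql(table: str, event: dict, key_columns: list) -> tuple:
    """Translate one Debezium-style change event into (sql, params) for DuckDB.

    op codes: "c" create, "r" snapshot read, "u" update, "d" delete.
    """
    payload = event["payload"]
    op = payload["op"]
    if op in ("c", "r", "u"):
        row = payload["after"]
        cols = list(row)
        placeholders = ", ".join("?" for _ in cols)
        sql = f"INSERT OR REPLACE INTO {table} ({', '.join(cols)}) VALUES ({placeholders})"
        return sql, [row[c] for c in cols]
    if op == "d":
        row = payload["before"]  # deletes only carry the old row image
        where = " AND ".join(f"{c} = ?" for c in key_columns)
        return f"DELETE FROM {table} WHERE {where}", [row[c] for c in key_columns]
    raise ValueError(f"unsupported op: {op!r}")
```

With a DuckDB or MotherDuck connection `con`, each pair is applied with `con.execute(sql, params)`.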

Explore advanced real-time data pipeline techniques to optimize your streaming configuration. When implementing the data stream, focus on creating a robust, fault-tolerant pipeline that can handle potential network interruptions or temporary system unavailability. This requires configuring appropriate retry mechanisms, error handling protocols, and checkpoint tracking to ensure no data modifications are lost during transmission.
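Retries and checkpoints work together: retry transient failures with exponential backoff, and record the last source offset only after a batch has been written. A crash then replays at most the last unrecorded batch (at-least-once delivery), which is harmless when writes are idempotent upserts. The sketch below uses a local JSON file as the checkpoint store purely for illustration; Kafka consumer offsets or a table in the destination are common alternatives, and the function names are hypothetical.

```python
import json
import os
import time


def apply_with_retry(apply_batch, batch, attempts: int = 5, base_delay: float = 0.5,
                     sleep=time.sleep):
    """Call apply_batch(batch), retrying with exponential backoff on any exception."""
    for attempt in range(attempts):
        try:
            return apply_batch(batch)
        except Exception:
            if attempt == attempts - 1:
                raise  # out of retries: surface the error to monitoring
            sleep(base_delay * 2 ** attempt)  # 0.5s, 1s, 2s, ...


def save_checkpoint(path: str, offset: int) -> None:
    """Persist the last applied offset: write a temp file, then rename it over the old one."""
    tmp = f"{path}.tmp"
    with open(tmp, "w") as f:
        json.dump({"offset": offset}, f)
        f.flush()
        os.fsync(f.fileno())
    os.replace(tmp, path)  # atomic rename on POSIX filesystems


def load_checkpoint(path: str, default: int = -1) -> int:
    """Return the saved offset, or `default` if no checkpoint exists yet."""
    try:
        with open(path) as f:
            return json.load(f)["offset"]
    except FileNotFoundError:
        return default
```

On restart, skip events at or below `load_checkpoint(path)`, then call `save_checkpoint` only after each successful `apply_with_retry`.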

Key streaming implementation considerations include:

- Establishing secure, encrypted data transmission channels

- Configuring real-time data transformation rules

- Implementing comprehensive error logging and monitoring

- Setting up automatic data reconciliation mechanisms

Verify the streaming implementation by closely monitoring the initial data transfer. Observe the first complete CDC cycle to confirm that all database modifications are captured, transmitted, and reflected in MotherDuck. Check for data completeness, validate timestamp accuracy, and make sure that both the initial full-table load and subsequent incremental changes are processed correctly. Once this works, your source data is available in MotherDuck for real-time analytics.

Step 5: Verify Data Integrity and Flow

Verifying data integrity and flow is the checkpoint that confirms every captured change is accurately transmitted and stored in MotherDuck. It acts as a quality assurance layer that protects your analytics from data corruption and transmission errors.

Begin the verification process by executing comprehensive comparison queries between your source database and MotherDuck. These queries should systematically cross-reference record counts, timestamp sequences, and specific data transformations to confirm that each captured change has been precisely replicated. Pay special attention to edge cases such as high-volume transactions, complex data types, and time-sensitive modifications that might introduce subtle inconsistencies during streaming.
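A simple automated version of these comparison queries runs the same aggregate on both sides, for example `SELECT count(*), max(updated_at) FROM orders`, and compares the results. The sketch assumes each table has an `updated_at` column (a hypothetical name) and that you compare at a quiet moment or tolerate some lag, since counts legitimately differ while changes are in flight.

```python
from datetime import datetime


def compare_table_stats(table: str, source: tuple[int, datetime],
                        dest: tuple[int, datetime]) -> list:
    """Compare (row_count, max_updated_at) results from the source and MotherDuck."""
    issues = []
    (src_count, src_max), (dst_count, dst_max) = source, dest
    if src_count != dst_count:
        issues.append(f"{table}: row count differs (source={src_count}, dest={dst_count})")
    if src_max is not None:  # an empty source table has no max(updated_at)
        if dst_max is None:
            issues.append(f"{table}: destination has no updated_at values yet")
        elif dst_max < src_max:
            lag_s = (src_max - dst_max).total_seconds()
            issues.append(f"{table}: destination is {lag_s:.0f}s behind on updated_at")
    return issues
```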

Learn more about optimizing data workflow reliability to enhance your data integrity checks. Implement a multi-layered verification strategy that combines automated validation scripts with manual sampling techniques. This approach allows you to detect potential discrepancies at both macro and micro levels, providing a comprehensive view of your data streaming performance.
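For the manual-sampling layer, pick a reproducible random sample of primary keys, fetch those rows from both databases, and compare row fingerprints. The sketch renders every value with `str()` before hashing, which is an assumption: different drivers can format decimals and timestamps differently, so you may need to normalize types before comparing.

```python
import hashlib
import json
import random


def row_fingerprint(row: dict) -> str:
    """SHA-256 of a row with keys sorted and values rendered via str()."""
    canonical = json.dumps({key: str(value) for key, value in sorted(row.items())})
    return hashlib.sha256(canonical.encode("utf-8")).hexdigest()


def sample_keys(keys: list, sample_size: int, seed: int = 0) -> list:
    """Reproducible random sample of primary keys (same seed, same sample)."""
    rng = random.Random(seed)
    return rng.sample(sorted(keys), min(sample_size, len(keys)))


def mismatched_keys(source_rows: dict, dest_rows: dict) -> list:
    """Keys missing from the destination or whose row contents differ.

    Both arguments map primary key -> row dict, fetched for the sampled keys.
    """
    return sorted(
        key for key, row in source_rows.items()
        if key not in dest_rows or row_fingerprint(row) != row_fingerprint(dest_rows[key])
    )
```

The fixed seed means a failing sample can be re-fetched and inspected by hand.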

Key verification considerations include:

- Executing row-count comparisons between source and destination

- Validating timestamp accuracy and sequence preservation

- Checking data type consistency and transformation accuracy

- Monitoring streaming latency and transmission performance metrics
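Streaming latency is usually reported as percentiles rather than an average. Assuming you record, for each change, the source commit time (Debezium events carry it as `source.ts_ms`) and the time it was written to MotherDuck, nearest-rank percentiles can be computed as below. Clock skew between the source database and the pipeline host adds directly to these numbers, so keep both clocks synchronized.

```python
def latency_percentiles(pairs: list, percentiles=(50, 95, 99)) -> dict:
    """Nearest-rank latency percentiles in milliseconds.

    `pairs` holds (source_commit_ms, applied_ms) tuples; percentiles must be ints.
    """
    if not pairs:
        raise ValueError("no latency samples")
    latencies = sorted(applied - committed for committed, applied in pairs)
    n = len(latencies)
    result = {}
    for p in percentiles:
        rank = max(1, (p * n + 99) // 100)  # ceil(p * n / 100), as a 1-based rank
        result[f"p{p}"] = latencies[rank - 1]
    return result
```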

Complete the verification by generating a detailed integrity report that documents streaming performance and highlights any anomalies or areas for improvement. Beyond validation, this report becomes a baseline for improving the pipeline over time. Successful verification confirms that your CDC configuration delivers reliable, accurate, real-time data from your source systems into MotherDuck.

Step 6: Optimize and Monitor the Streaming Process

Optimizing and monitoring your CDC streaming process is essential for maintaining a high-performance, reliable data pipeline between your source database and MotherDuck. This final stage transforms your initial configuration into a robust, self-sustaining data integration system that adapts to changing workloads and potential performance bottlenecks.

Begin the optimization process by implementing comprehensive monitoring tools that provide real-time visibility into your streaming performance. Configure detailed logging mechanisms that track key metrics such as data transmission latency, change capture rates, and system resource utilization. These insights will help you identify potential performance constraints and proactively adjust your streaming configuration to maintain optimal data flow.
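On a PostgreSQL source, one of the most useful capture-side metrics is replication slot lag: how much WAL each logical slot has not yet confirmed. The query below uses catalog functions available since PostgreSQL 10, and the helper turns its rows into alert messages. The 1 GiB threshold is an arbitrary assumption; set it from your own WAL volume and disk headroom.

```python
# Unconfirmed WAL is retained on the source, so steadily growing lag is both
# a latency signal and a disk-space risk.
SLOT_LAG_SQL = """
SELECT slot_name,
       active,
       pg_wal_lsn_diff(pg_current_wal_lsn(), confirmed_flush_lsn) AS lag_bytes
FROM pg_replication_slots
WHERE slot_type = 'logical'
"""


def slot_alerts(rows: list, max_lag_bytes: int = 1 << 30) -> list:
    """Turn (slot_name, active, lag_bytes) rows from SLOT_LAG_SQL into alert messages."""
    alerts = []
    for slot_name, active, lag_bytes in rows:
        if not active:
            alerts.append(f"slot {slot_name} has no active consumer")
        if lag_bytes is not None and lag_bytes > max_lag_bytes:
            alerts.append(f"slot {slot_name} is {lag_bytes / (1 << 20):.0f} MiB behind")
    return alerts
```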

Explore advanced real-time analytics workflow strategies to enhance your monitoring approach. Focus on developing adaptive streaming parameters that can automatically scale resources based on incoming data volume and complexity. This might involve implementing dynamic buffering strategies, adjusting connection pool sizes, or configuring intelligent retry mechanisms that can handle temporary network interruptions without losing data integrity.
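One simple form of dynamic buffering is a micro-batcher that flushes when either a row limit or a time limit is reached, so throughput improves under load while latency stays bounded when traffic is light. The sketch below is a hypothetical helper, not part of any MotherDuck API; batched writes generally suit DuckDB's columnar storage better than row-at-a-time inserts, and `max_rows` and `max_age_s` are the knobs to tune.

```python
import time


class MicroBatcher:
    """Buffer change events and flush on size or age, whichever comes first."""

    def __init__(self, flush, max_rows: int = 5000, max_age_s: float = 2.0,
                 clock=time.monotonic):
        self.flush_fn = flush
        self.max_rows = max_rows
        self.max_age_s = max_age_s
        self.clock = clock
        self.buffer = []
        self.first_at = None

    def add(self, event) -> None:
        if not self.buffer:
            self.first_at = self.clock()  # age is measured from the oldest buffered event
        self.buffer.append(event)
        if len(self.buffer) >= self.max_rows:
            self.flush()
        else:
            self.tick()

    def tick(self) -> None:
        """Call periodically (e.g. after an empty poll) so a quiet stream still flushes."""
        if self.buffer and self.clock() - self.first_at >= self.max_age_s:
            self.flush()

    def flush(self) -> None:
        if self.buffer:
            self.flush_fn(self.buffer)  # if this raises, the buffer is kept for a retry
            self.buffer = []
```

Because the buffer is cleared only after the flush callback succeeds, a failed write can be retried with backoff without losing events.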

Key optimization considerations include:

- Implementing automated performance alert systems

- Configuring dynamic resource scaling mechanisms

- Establishing comprehensive error tracking and recovery protocols

- Creating periodic performance review checkpoints

Complete the optimization process with a continuous improvement routine. Schedule regular performance reviews in which you analyze streaming metrics, identify bottlenecks, and refine your configuration. Done well, optimization keeps your CDC pipeline efficient and resilient, delivering real-time data with minimal manual intervention.

The table below provides an overview of the critical considerations for optimizing and monitoring your CDC streaming process, helping ensure a robust, high-performance pipeline.

| Optimization Area | Purpose | Key Actions |
|---|---|---|
| Monitoring Tools | Provide real-time visibility | Implement logging for data latency, capture rates, resource usage |
| Automated Alerts | Rapid issue detection | Set up alerting for performance and errors |
| Scaling Mechanisms | Handle variable workloads | Configure dynamic resource scaling and buffering |
| Error Recovery | Minimize data loss | Establish error tracking and recovery protocols |
| Performance Review | Continuous improvement | Schedule regular checkpoint reviews and analysis |

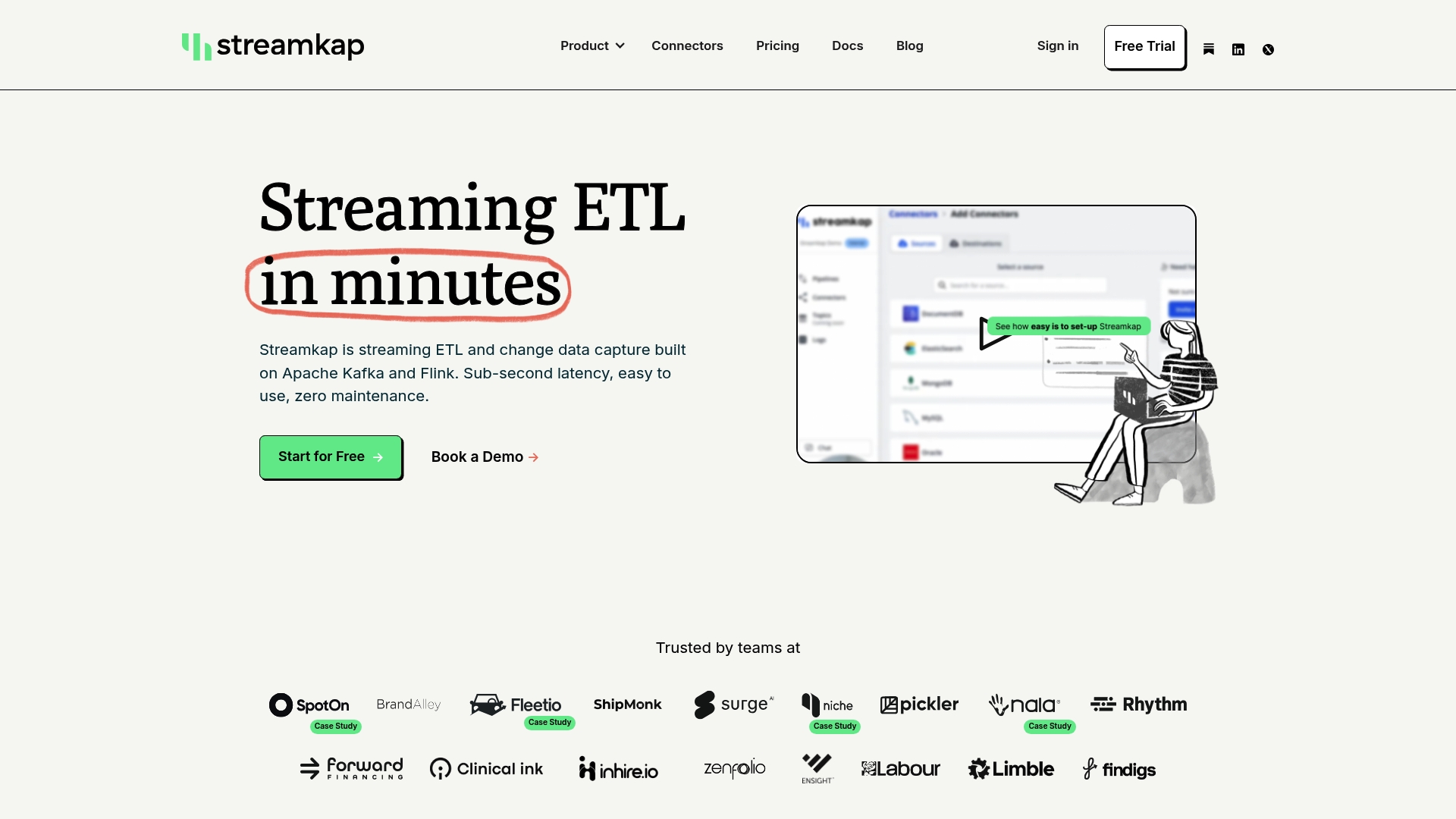

Ready to Transform Your CDC Streaming to MotherDuck?

Unreliable CDC streaming or complex pipeline configuration can slow down your real-time analytics initiatives. This article outlined why preparing your environment, configuring data sources, and setting up automated change data capture are all essential steps. But when you face data integrity issues, delayed pipelines, or complicated transformation logic, even following best practices can feel overwhelming. Streamkap directly addresses these pain points. Our platform offers automated schema management, no-code connectors for sources like PostgreSQL and MySQL, and a robust real-time transformation engine, so you can stream changes to destinations like MotherDuck without the operational headache. Move beyond patchwork solutions and start building resilient pipelines today.

Don’t let technical bottlenecks or data consistency concerns hold you back. Visit Streamkap to see how our real-time CDC and streaming ETL features can power your MotherDuck workflows. Get started now to accelerate your streaming architecture and enjoy flexible, low-latency integration—request a demo or learn more on our homepage today.

Frequently Asked Questions

What is Change Data Capture (CDC) and why is it important for streaming data?

Change Data Capture (CDC) is a technique used to identify and capture changes made to data in a database. It is important for streaming data as it allows for real-time tracking of changes, ensuring that analytics platforms, like MotherDuck, receive the most current data for accurate insights.

How do I prepare my environment for streaming CDC data into MotherDuck?

To prepare your environment, ensure that your system is compatible with CDC technologies, install necessary software components such as a streaming platform or CDC connector, and configure your network and firewall settings to support secure data transmission.

What are the key steps to configure my data source for CDC?

Key steps to configure your data source for CDC include enabling built-in change tracking features, modifying database settings to log changes, and verifying permissions for data extraction. Additionally, you should run test queries to confirm that changes are being correctly tracked and logged.

How can I verify the integrity of data streamed into MotherDuck?

To verify data integrity, execute comparison queries between your source database and MotherDuck. Look for consistency in record counts, timestamp accuracy, and data transformations. Implement automated validation scripts along with manual checks to ensure robust integrity verification.
