Top 12 Database Synchronization Tools for 2025

Explore the best database synchronization tools for real-time data movement. Our 2025 guide covers features, pros, cons, and use cases to help you choose.

Published: November 17, 2025

TL;DR

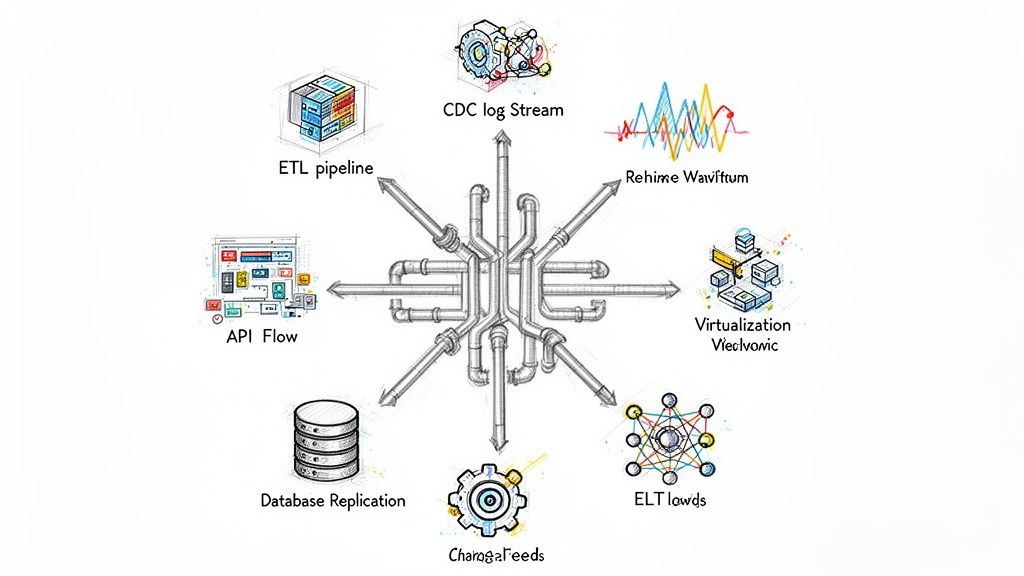

• Batch ETL leaves downstream data stale between loads, which creates costly information gaps; sync powered by change data capture (CDC) delivers changes as they occur.

• This guide analyzes 12 tools, ranging from managed SaaS (Fivetran, Streamkap) to open source (Debezium).

• Evaluate each tool on its architecture, pricing model, and performance characteristics against your specific requirements.