Master PostgreSQL to Snowflake Streaming Efficiently

August 31, 2025

Streaming data from PostgreSQL to Snowflake can cut reporting delays from hours to minutes and instantly fuel business decisions. Most teams expect connecting these powerhouse platforms to be a nightmare of custom code and endless bugs. But with the right setup, you can unlock real-time insights by streaming billions of rows with reliability not possible just a few years ago.

Table of Contents

- Step 1: Prepare Your PostgreSQL Database For Streaming

- Step 2: Set Up Your Snowflake Environment For Data Ingestion

- Step 3: Configure A Streaming Tool For Data Transfer

- Step 4: Execute The Data Streaming Process

- Step 5: Verify Data Consistency And Integrity In Snowflake

Quick Summary

| Key Point | Explanation |
|---|---|
| 1. Enable Logical Replication | Set wal_level to logical (a server restart is required) to allow capturing detailed data changes in PostgreSQL. |
| 2. Create a Dedicated Streaming User | Use CREATE ROLE (or ALTER ROLE for an existing user) to set up a secure user with the REPLICATION and LOGIN attributes for streaming data. |
| 3. Configure Snowflake Warehouse for Streaming | Set up a Snowflake warehouse that matches your expected data volume and performance for effective ingestion. |
| 4. Ensure Accurate Data Mapping | Carefully translate schema and data types between PostgreSQL and Snowflake to maintain data integrity during streaming. |
| 5. Perform Comprehensive Data Validation | Execute thorough verification between source and destination to confirm data consistency post-migration. |

Step 1: Prepare Your PostgreSQL Database for Streaming

Successful PostgreSQL to Snowflake streaming begins with meticulous database preparation. This critical first step ensures your PostgreSQL environment is configured to capture and transmit data changes efficiently and reliably. By implementing the right configuration settings, you establish a robust foundation for seamless real-time data replication.

Logical Replication Configuration

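To enable streaming, you must modify your PostgreSQL configuration to support logical replication. Start by accessing your postgresql.conf file and adjusting several key parameters. First, set wal_level to logical, which allows the database to generate write-ahead logs (WAL) with sufficient detail for capturing row-level changes. Because wal_level is only read at server start, restart PostgreSQL after changing it. Then confirm that max_replication_slots and max_wal_senders are high enough to cover every slot and connection your streaming tool will open. Managed PostgreSQL services usually expose these settings through their own parameter configuration rather than direct file access.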
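
As a minimal sketch of checking these parameters, the helper below takes the values you would read from pg_settings and reports anything that would block logical replication. The function name `check_replication_settings` and the `slots_needed` parameter are illustrative assumptions, not part of PostgreSQL or any streaming tool.

```python
from __future__ import annotations

from typing import Mapping

# Query whose (name, setting) rows would populate the `settings` mapping.
SETTINGS_QUERY = """
SELECT name, setting
FROM pg_settings
WHERE name IN ('wal_level', 'max_replication_slots', 'max_wal_senders')
"""


def check_replication_settings(
    settings: Mapping[str, str], slots_needed: int = 1
) -> list[str]:
    """Return a list of problems; an empty list means the settings look usable."""
    problems = []
    if settings.get("wal_level") != "logical":
        problems.append("wal_level must be 'logical' (restart required)")
    for name in ("max_replication_slots", "max_wal_senders"):
        value = int(settings.get(name, "0"))
        if value < slots_needed:
            problems.append(f"{name}={value} is below the {slots_needed} needed")
    return problems
```

Running the query with any PostgreSQL client and passing the rows in as a dict keeps the check itself free of driver dependencies.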

Next, create a dedicated replication user with only the privileges that streaming requires. Use the CREATE ROLE command to create the user with the REPLICATION and LOGIN attributes, or ALTER ROLE to add those attributes to an existing role. The user also needs SELECT on the tables it will stream so that the initial snapshot can read them. Together, these settings ensure secure and controlled access to database change streams.
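
The statements involved can be sketched as follows. This is an illustrative generator, not a prescribed setup: the role, schema, and table names are placeholders, and the password is deliberately left out so that it can be set separately (for example with psql's \password command) instead of appearing in a script.

```python
from __future__ import annotations


def quote_ident(name: str) -> str:
    """Quote a PostgreSQL identifier, doubling any embedded double quotes."""
    return '"' + name.replace('"', '""') + '"'


def replication_role_sql(role: str, schema: str, tables: list[str]) -> list[str]:
    """Build the statements for a least-privilege streaming role."""
    role_q = quote_ident(role)
    schema_q = quote_ident(schema)
    statements = [
        # LOGIN lets the role connect; REPLICATION lets it use replication slots.
        f"CREATE ROLE {role_q} WITH LOGIN REPLICATION",
        f"GRANT USAGE ON SCHEMA {schema_q} TO {role_q}",
    ]
    for table in tables:
        # SELECT is needed so the initial snapshot can read existing rows.
        statements.append(
            f"GRANT SELECT ON {schema_q}.{quote_ident(table)} TO {role_q}"
        )
    return statements
```

Granting SELECT table by table, rather than on the whole schema, keeps the role's reach aligned with the tables you actually stream.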

Verify your configuration by checking key settings:

- wal_level is set to logical

- Replication slot is created

- Dedicated streaming user with appropriate permissions exists

For databases running in production environments, carefully plan your replication strategy. Consider potential performance impacts and monitor WAL growth: a replication slot retains WAL until its consumer confirms receipt, so a stalled or abandoned slot can keep accumulating log files until the disk fills. PostgreSQL’s official documentation provides comprehensive guidance on managing these configurations.
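
One way to watch WAL retention is to compare each slot's restart_lsn with the server's current WAL position. The sketch below does the arithmetic in Python; the two queries use the pg_replication_slots view and pg_current_wal_lsn() function (PostgreSQL 10 or later), while the helper names and the byte limit are assumptions for illustration.

```python
from __future__ import annotations

from typing import Mapping, Optional

SLOT_QUERY = "SELECT slot_name, restart_lsn FROM pg_replication_slots"
CURRENT_LSN_QUERY = "SELECT pg_current_wal_lsn()"


def lsn_to_int(lsn: str) -> int:
    """Convert an LSN such as '16/B374D848' (two hex halves) to a byte position."""
    high, low = lsn.split("/")
    return (int(high, 16) << 32) + int(low, 16)


def slots_over_limit(
    current_lsn: str, slots: Mapping[str, Optional[str]], limit_bytes: int
) -> list[str]:
    """Return names of slots holding back more than limit_bytes of WAL."""
    current = lsn_to_int(current_lsn)
    flagged = []
    for name, restart_lsn in slots.items():
        if restart_lsn is None:
            continue  # the slot has not reserved any WAL yet
        if current - lsn_to_int(restart_lsn) > limit_bytes:
            flagged.append(name)
    return sorted(flagged)
```

A scheduled job could run both queries, call `slots_over_limit`, and alert when the list is not empty.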

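Your next step involves configuring data change capture to identify which tables and schemas will participate in the streaming pipeline. With PostgreSQL’s built-in logical decoding output (pgoutput), this selection is expressed as a publication that lists the tables to stream. Thoughtful selection ensures you stream only relevant data, minimizing unnecessary resource consumption and maintaining optimal performance.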
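
Assuming your streaming tool reads from a PostgreSQL publication, the table selection can be sketched as a single CREATE PUBLICATION statement. The publication and table names below are placeholders, and the helper functions are illustrative only.

```python
from __future__ import annotations


def quote_ident(name: str) -> str:
    """Quote a PostgreSQL identifier, doubling any embedded double quotes."""
    return '"' + name.replace('"', '""') + '"'


def publication_sql(name: str, tables: list[tuple[str, str]]) -> str:
    """Build CREATE PUBLICATION for an explicit list of (schema, table) pairs."""
    if not tables:
        raise ValueError("list at least one table to publish")
    table_list = ", ".join(
        f"{quote_ident(schema)}.{quote_ident(table)}" for schema, table in tables
    )
    return f"CREATE PUBLICATION {quote_ident(name)} FOR TABLE {table_list}"
```

Listing tables explicitly, instead of publishing every table, is what keeps unrelated data out of the stream.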

Successful configuration transforms your PostgreSQL database from a static data store into a dynamic, real-time data source ready for continuous streaming to Snowflake. By investing time in precise configuration, you create a reliable, efficient data integration pathway that supports immediate, accurate data movement.

Below is a checklist summarizing the essential configuration tasks and checkpoints for preparing PostgreSQL for streaming to Snowflake.

| Configuration Step | Description | Verification/Checkpoint |
|---|---|---|
| Set wal_level to logical | Allows capturing detailed row-level changes for logical replication | Verify wal_level is set to logical |
| Create replication user | Ensures secure, dedicated streaming user | User has REPLICATION and LOGIN permissions |
| Create replication slot | Maintains the replication state for streaming | Confirm replication slot exists |
| Adjust postgresql.conf & permissions | Updates config for streaming and security | Review all parameter and role modifications |
| Select tables/schemas for streaming | Optimizes streaming by including only relevant data | Scope of streamed tables and schemas is defined |
| Monitor WAL growth and resource use | Prevents performance issues and excessive log volume | WAL size is monitored and within safe limits |

Step 2: Set Up Your Snowflake Environment for Data Ingestion

Preparing your Snowflake environment represents a critical bridge between your PostgreSQL data source and your ultimate analytics destination. This step transforms your cloud data warehouse into a receptive, secure, and optimized platform ready to consume streaming data with precision and efficiency.

Account and Warehouse Configuration

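Begin by establishing a dedicated Snowflake account with the appropriate compute resources. Create a warehouse specifically designed for your PostgreSQL streaming workload, selecting an initial size that matches your expected data volume and performance requirements. A warehouse can be resized at any time, so start with a medium-sized warehouse and adjust it once you have observed real load. Note that a warehouse does not change size on its own: auto-suspend and auto-resume control when it runs, and multi-cluster warehouses (where your edition supports them) add clusters under concurrent load, but changing the size is an explicit operation.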
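
A warehouse definition along these lines can be sketched as below. The warehouse name, the 60-second auto-suspend value, and the helper functions are assumptions for illustration; the size list is a subset of the sizes Snowflake offers.

```python
import re

_IDENT = re.compile(r"^[A-Za-z_][A-Za-z0-9_$]*$")
SIZES = ("XSMALL", "SMALL", "MEDIUM", "LARGE", "XLARGE")  # subset of valid sizes


def _check_ident(name: str) -> str:
    """Accept only plain unquoted identifiers to keep the generated SQL safe."""
    if not _IDENT.match(name):
        raise ValueError(f"not a plain unquoted identifier: {name!r}")
    return name


def _check_size(size: str) -> str:
    if size not in SIZES:
        raise ValueError(f"unsupported size in this sketch: {size!r}")
    return size


def create_warehouse_sql(name: str, size: str = "MEDIUM", auto_suspend_s: int = 60) -> str:
    """Build a CREATE WAREHOUSE statement that suspends when idle."""
    return (
        f"CREATE WAREHOUSE IF NOT EXISTS {_check_ident(name)} "
        f"WAREHOUSE_SIZE = '{_check_size(size)}' "
        f"AUTO_SUSPEND = {int(auto_suspend_s)} "
        "AUTO_RESUME = TRUE INITIALLY_SUSPENDED = TRUE"
    )


def resize_warehouse_sql(name: str, size: str) -> str:
    """Build the explicit resize statement; Snowflake does not resize on its own."""
    return f"ALTER WAREHOUSE {_check_ident(name)} SET WAREHOUSE_SIZE = '{_check_size(size)}'"
```

Keeping the resize as a separate statement makes the sizing decision an explicit, reviewable step.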

Establish robust security protocols by generating a dedicated service user with granular access permissions. This user will manage the data ingestion process, requiring specific grants for creating schemas, tables, and executing streaming operations. Use Snowflake’s role-based access control to limit this user’s permissions strictly to the necessary streaming and transformation activities.
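
The role-based setup described above might look like the following sketch. All object names are placeholders, the helper is hypothetical, and authentication for the service user (for example key-pair authentication) is assumed to be configured separately.

```python
from __future__ import annotations

import re

_IDENT = re.compile(r"^[A-Za-z_][A-Za-z0-9_$]*$")


def _check_ident(name: str) -> str:
    """Accept only plain unquoted identifiers to keep the generated SQL safe."""
    if not _IDENT.match(name):
        raise ValueError(f"not a plain unquoted identifier: {name!r}")
    return name


def service_user_sql(user: str, role: str, warehouse: str, database: str) -> list[str]:
    """Build statements for a service user limited to one warehouse and database."""
    user, role = _check_ident(user), _check_ident(role)
    warehouse, database = _check_ident(warehouse), _check_ident(database)
    return [
        f"CREATE ROLE IF NOT EXISTS {role}",
        f"GRANT USAGE ON WAREHOUSE {warehouse} TO ROLE {role}",
        f"GRANT USAGE ON DATABASE {database} TO ROLE {role}",
        # Lets the pipeline create its own schemas (and the tables inside them).
        f"GRANT CREATE SCHEMA ON DATABASE {database} TO ROLE {role}",
        f"CREATE USER IF NOT EXISTS {user} DEFAULT_ROLE = {role} DEFAULT_WAREHOUSE = {warehouse}",
        f"GRANT ROLE {role} TO USER {user}",
    ]
```

Attaching grants to the role rather than the user means the user can be rotated or replaced without redoing the permissions.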

Key configuration checkpoints include:

- Warehouse size matches expected data throughput

- Service user created with minimal required permissions

- Network access rules configured for secure connectivity

The final preparatory step involves designing your target schema and table structures. Map your PostgreSQL source schema to Snowflake, paying close attention to data type translations and precision requirements: Snowflake’s NUMBER type holds at most 38 digits, for example, while PostgreSQL’s numeric type can hold far more. Snowflake supports semi-structured types (VARIANT, ARRAY, OBJECT) that suit JSON and array columns, and it provides flexible schema evolution, allowing you to adapt your target schema as source data structures change.
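
To make the type translation concrete, here is one possible mapping expressed as a lookup table. The specific choices (such as storing uuid as VARCHAR(36), or falling back to VARCHAR for numeric columns that exceed 38 digits or declare no precision) are assumptions for illustration; your streaming tool may map types differently.

```python
from __future__ import annotations

from typing import Optional

# One possible mapping, keyed by the type name PostgreSQL reports.
SIMPLE_TYPES = {
    "smallint": "NUMBER(38,0)",
    "integer": "NUMBER(38,0)",
    "bigint": "NUMBER(38,0)",
    "boolean": "BOOLEAN",
    "text": "VARCHAR",
    "character varying": "VARCHAR",
    "uuid": "VARCHAR(36)",
    "date": "DATE",
    "timestamp without time zone": "TIMESTAMP_NTZ",
    "timestamp with time zone": "TIMESTAMP_TZ",
    "real": "FLOAT",
    "double precision": "FLOAT",
    "json": "VARIANT",
    "jsonb": "VARIANT",
    "bytea": "BINARY",
}


def map_column(
    pg_type: str, precision: Optional[int] = None, scale: Optional[int] = None
) -> str:
    """Return a Snowflake type for a PostgreSQL column type."""
    pg_type = pg_type.lower()
    if pg_type in ("numeric", "decimal"):
        if precision is not None and precision <= 38:
            return f"NUMBER({precision},{scale or 0})"
        # Too wide (or unconstrained) for NUMBER: keep the exact digits as text.
        return "VARCHAR"
    if pg_type not in SIMPLE_TYPES:
        raise ValueError(f"no mapping defined for {pg_type!r}; review it manually")
    return SIMPLE_TYPES[pg_type]
```

Raising on unknown types, instead of silently defaulting, forces each unusual column to be reviewed before the pipeline goes live.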

By meticulously configuring your Snowflake environment, you create a robust, scalable platform capable of ingesting real-time data with minimal friction. Your careful preparation sets the stage for seamless, efficient PostgreSQL to Snowflake streaming.

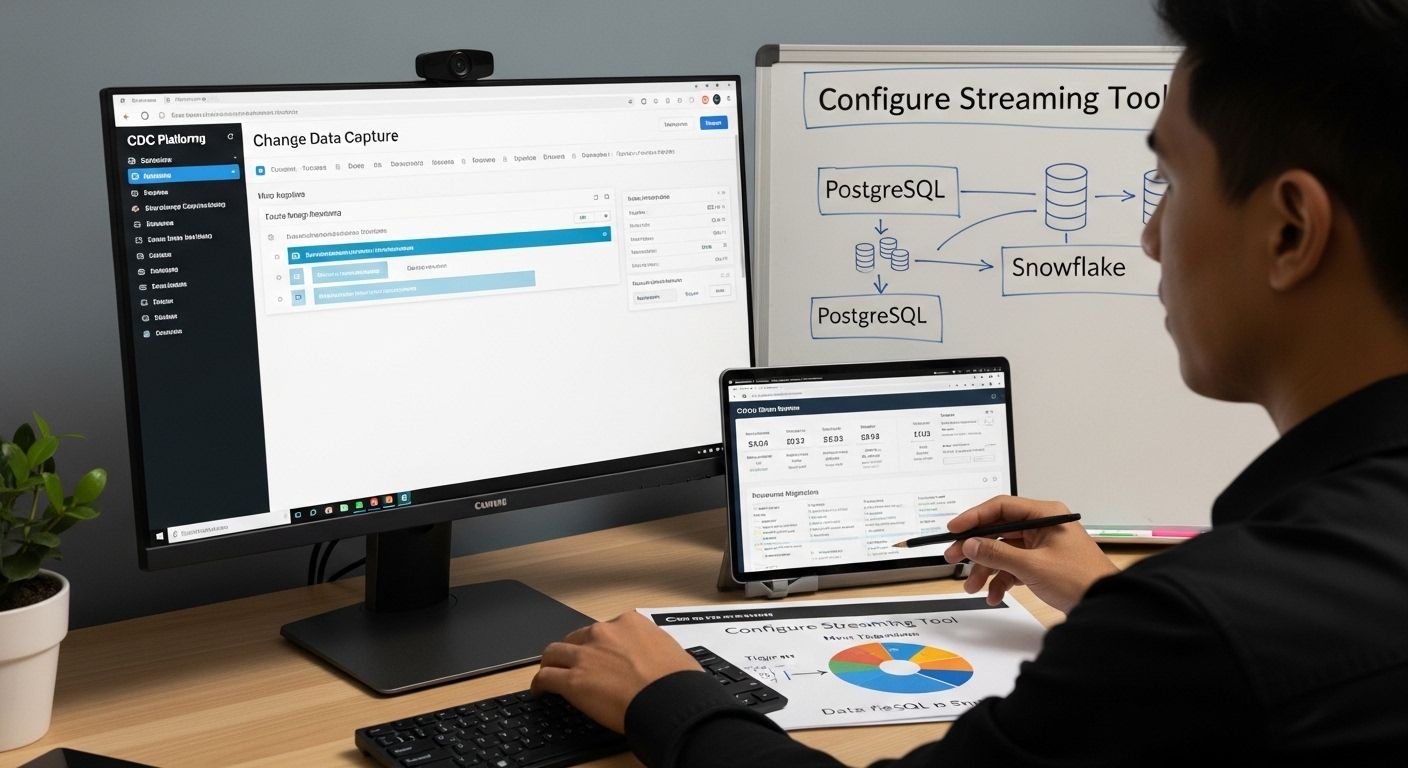

Step 3: Configure a Streaming Tool for Data Transfer

Configuring the right streaming tool transforms your data migration from a complex challenge into a streamlined, predictable process. This critical step bridges your PostgreSQL database and Snowflake environment, establishing a reliable channel for continuous data movement that minimizes latency and ensures data integrity.

Streaming Architecture Selection

Choose a streaming tool that supports change data capture (CDC) and provides robust connectivity between PostgreSQL and Snowflake. Look for solutions that offer native support for logical replication, enabling real-time tracking of data modifications. The ideal tool will handle schema evolution automatically, reducing manual intervention and potential synchronization errors.

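Implement your streaming architecture with careful consideration of performance and scalability. Configure the tool to capture primary key and before/after image data, which allows precise tracking of record changes. By default, PostgreSQL records only the old values of the primary key columns for updates and deletes; set REPLICA IDENTITY FULL on a table if your tool needs the complete before image. This approach ensures that each data modification is accurately represented in your Snowflake destination. Keep in mind that Snowflake records primary and foreign key constraints on standard tables but does not enforce them, so consistency between related tables depends on the pipeline applying every change correctly.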
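
To illustrate why the key and the before/after images matter, the sketch below replays change events against an in-memory copy of a table. The event shape (`op`, `before`, `after`) is a hypothetical format chosen for this example, not the wire format of any particular tool.

```python
from __future__ import annotations

from typing import Any


def apply_change(table: dict[Any, dict], event: dict, key: str = "id") -> None:
    """Apply one insert/update/delete event to a table keyed by primary key."""
    op = event["op"]
    if op == "insert":
        table[event["after"][key]] = event["after"]
    elif op == "update":
        before, after = event.get("before"), event["after"]
        # If the primary key itself changed, the old row must be removed.
        if before is not None and before[key] != after[key]:
            table.pop(before[key], None)
        table[after[key]] = after
    elif op == "delete":
        # Only the key from the before image is needed to find the row.
        table.pop(event["before"][key], None)
    else:
        raise ValueError(f"unknown operation: {op!r}")
```

Without the key in the before image, the update that changes a primary key and the delete would have no way to locate the row they refer to.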

Key configuration requirements include:

- Logical replication support

- Real-time change tracking capabilities

- Automatic schema evolution handling

- Secure, encrypted data transfer

Carefully map your source database schema to the target Snowflake schema, paying close attention to data type translations and potential precision requirements. Some streaming tools offer automatic schema mapping, which can significantly reduce configuration complexity. Verify that your chosen solution handles complex data types and supports incremental schema updates without requiring complete pipeline redeployment.
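
A simple way to check that the mapping stays in sync is to compare column names on both sides. The sketch below compares them case-insensitively, because PostgreSQL folds unquoted identifiers to lowercase while Snowflake folds them to uppercase. The function name and return shape are assumptions for illustration.

```python
from __future__ import annotations

from typing import Iterable


def schema_drift(
    source_columns: Iterable[str], target_columns: Iterable[str]
) -> dict[str, list[str]]:
    """Report columns present on one side only, ignoring identifier case."""
    src = {column.lower() for column in source_columns}
    dst = {column.lower() for column in target_columns}
    return {
        "missing_in_target": sorted(src - dst),
        "extra_in_target": sorted(dst - src),
    }
```

Extra columns in the target are not necessarily a problem, since many CDC tools add their own metadata columns; missing columns usually deserve attention.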

Establish monitoring and alerting mechanisms to track streaming performance and quickly identify potential issues. Configure your streaming tool to generate detailed logs and metrics, enabling proactive management of your data transfer pipeline. By investing time in precise configuration and monitoring, you create a resilient, efficient data streaming solution that transforms your PostgreSQL database into a real-time, actionable data resource.

Step 4: Execute the Data Streaming Process

The data streaming process represents the moment where theoretical preparation transforms into real-world data movement. This critical stage combines all previous configurations into a synchronized, continuous data transfer mechanism that bridges your PostgreSQL source with Snowflake destination.

Initial Streaming Activation

Initiate your streaming process by establishing a secure connection between your PostgreSQL logical replication slot and the designated streaming tool. Verify your connection parameters meticulously, ensuring that authentication credentials, network routes, and encryption protocols align perfectly. The initial connection serves as a critical validation point, confirming that your carefully configured environment is prepared for live data transfer.

Trigger the streaming process with a controlled, incremental data load. Start by selecting a subset of tables or a specific schema to validate the entire data movement pipeline. This strategic approach allows you to monitor performance, verify data integrity, and identify potential transformation or connectivity issues before executing a full-scale migration.

Key streaming activation checkpoints include:

- Successful logical replication connection establishment

- Initial data transfer with zero data loss

- Consistent schema translation between source and destination

- Minimal latency in data movement

Implement robust monitoring mechanisms to track the streaming process in real-time. Configure detailed logging and performance metrics that capture every aspect of data transfer, from initial connection to final record ingestion. Pay close attention to data transformation accuracy, ensuring that each record maintains its original integrity while adapting to Snowflake’s specific schema requirements.
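
One metric worth tracking is end-to-end lag: the time between a change committing in PostgreSQL and the row becoming available in Snowflake. The sketch below summarizes lag from pairs of timestamps using a nearest-rank percentile. How you obtain the two timestamps depends on your tool, so the input format here is an assumption.

```python
from __future__ import annotations

import math
from datetime import datetime, timedelta
from typing import Iterable


def lag_seconds(pairs: Iterable[tuple[datetime, datetime]]) -> list[float]:
    """Return sorted lags in seconds for (committed_at, loaded_at) pairs."""
    return sorted((loaded - committed).total_seconds() for committed, loaded in pairs)


def percentile(sorted_values: list[float], pct: float) -> float:
    """Nearest-rank percentile of an already sorted, non-empty list."""
    if not sorted_values:
        raise ValueError("no values to summarize")
    rank = max(1, math.ceil(pct / 100 * len(sorted_values)))
    return sorted_values[rank - 1]
```

Alerting on a high percentile rather than the average helps surface the occasional slow batch that an average would hide.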

As your streaming process stabilizes, gradually increase the data volume and complexity. Monitor system performance, watching for potential bottlenecks in network bandwidth, compute resources, or data transformation processes. By maintaining a vigilant, incremental approach, you transform your PostgreSQL to Snowflake streaming from a technical challenge into a seamless, reliable data integration solution.

Step 5: Verify Data Consistency and Integrity in Snowflake

Data integrity verification represents the critical final checkpoint in your PostgreSQL to Snowflake streaming journey. This comprehensive validation ensures that every single record transferred maintains its original structure, value, and relational context through the complex migration process.

Comprehensive Validation Strategy

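Begin your verification process by executing a series of systematic cross-referencing queries between your source PostgreSQL database and the Snowflake destination. Calculate and compare total record counts across all migrated tables. Because the source keeps changing while the stream runs, take both counts as of the same cutoff (for example, rows committed before a fixed timestamp) or pause writes briefly; otherwise small differences may simply reflect changes still in flight. This initial count-based verification provides an immediate high-level assessment of data transfer completeness.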
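
Once you have collected row counts from both systems, comparing them is straightforward. The sketch below assumes the counts arrive as dictionaries keyed by table name; the function name is illustrative.

```python
from __future__ import annotations

from typing import Mapping, Optional


def compare_counts(
    source: Mapping[str, int], target: Mapping[str, int]
) -> dict[str, tuple[Optional[int], Optional[int]]]:
    """Return {table: (source_count, target_count)} for every mismatch."""
    mismatches = {}
    for table in sorted(set(source) | set(target)):
        src, dst = source.get(table), target.get(table)
        if src != dst:
            # None means the table was not counted on that side at all.
            mismatches[table] = (src, dst)
    return mismatches
```

An empty result means every table was counted on both sides and the counts agree.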

Dive deeper into data validation by performing granular record-level comparisons. Utilize Snowflake’s powerful SQL capabilities to construct detailed comparison queries that examine primary key integrity, data type preservation, and precise value matching. Focus particularly on complex data types that often present challenges during streaming, such as timestamps, JSON objects, and nested structures.
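
A record-level comparison can be sketched by fingerprinting each row and comparing fingerprints by primary key. The example below hashes a canonical JSON form of each row; the helper names and the `id` key column are assumptions, and values such as decimals or timestamps would need to be normalized to the same representation on both sides before hashing.

```python
from __future__ import annotations

import hashlib
import json
from typing import Iterable


def row_fingerprint(row: dict) -> str:
    """Hash a row's canonical JSON form (sorted keys, non-JSON values as text)."""
    canonical = json.dumps(row, sort_keys=True, default=str, separators=(",", ":"))
    return hashlib.sha256(canonical.encode("utf-8")).hexdigest()


def diff_rows(
    source_rows: Iterable[dict], target_rows: Iterable[dict], key: str = "id"
) -> dict[str, list]:
    """Classify primary keys as missing, unexpected, or changed."""
    src = {row[key]: row_fingerprint(row) for row in source_rows}
    dst = {row[key]: row_fingerprint(row) for row in target_rows}
    return {
        "missing_in_target": sorted(src.keys() - dst.keys()),
        "unexpected_in_target": sorted(dst.keys() - src.keys()),
        "changed": sorted(k for k in src.keys() & dst.keys() if src[k] != dst[k]),
    }
```

Note that a NULL and an empty string produce different fingerprints, so a pipeline that converts one into the other shows up under "changed".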

Key verification checkpoints include:

- Total record count matches between source and destination

- Zero data type translation errors

- Preserved primary and foreign key relationships

- Accurate representation of NULL and default values

- Consistent timestamp and timezone handling
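
For the timestamp checkpoint in particular, it helps to convert values from both systems to UTC before comparing them. The sketch below assumes that timestamps without a zone should be read as UTC; that default is an assumption you should replace with whatever your source data actually means.

```python
from datetime import datetime, timezone, tzinfo


def to_utc(value: datetime, assume_tz: tzinfo = timezone.utc) -> datetime:
    """Return the same instant expressed in UTC.

    Naive values (no zone attached) are interpreted in `assume_tz`.
    """
    if value.tzinfo is None:
        value = value.replace(tzinfo=assume_tz)
    return value.astimezone(timezone.utc)


def same_instant(source_value: datetime, target_value: datetime) -> bool:
    """Compare two timestamps after normalizing both to UTC."""
    return to_utc(source_value) == to_utc(target_value)
```

For example, `same_instant` treats 12:00 at UTC+02:00 and 10:00 UTC as equal, which is the comparison you want when one system stores the offset and the other stores UTC.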

Implement automated validation scripts that can be repeatedly executed to ensure ongoing data consistency. Configure these scripts to generate comprehensive reports highlighting any detected anomalies, providing clear documentation of the streaming process’s reliability. Consider creating permanent comparison views in Snowflake that allow continuous monitoring of data synchronization quality.

Finalize your verification by stress-testing the streaming pipeline with incremental updates. Introduce deliberate changes in your source PostgreSQL database and meticulously track how these modifications propagate through the streaming process. This dynamic testing approach validates not just initial data transfer, but the entire ongoing synchronization mechanism, ensuring your PostgreSQL to Snowflake streaming solution remains robust and dependable.
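
Such a propagation test can be sketched as a polling loop: change a value in PostgreSQL, then repeatedly read it from Snowflake until it appears or a timeout expires. In the sketch below, `fetch` is a caller-supplied function that reads the current value from the destination; it and the other parameter names are assumptions for illustration.

```python
import time
from typing import Any, Callable, Optional


def wait_for_propagation(
    fetch: Callable[[], Any],
    expected: Any,
    timeout_s: float = 60.0,
    poll_s: float = 1.0,
    sleep: Callable[[float], None] = time.sleep,
    clock: Callable[[], float] = time.monotonic,
) -> Optional[float]:
    """Poll until fetch() returns `expected`.

    Returns the elapsed seconds on success, or None if the timeout expires.
    """
    start = clock()
    while True:
        if fetch() == expected:
            return clock() - start
        if clock() - start >= timeout_s:
            return None
        sleep(poll_s)
```

The elapsed time it returns doubles as a rough end-to-end latency measurement for that single change.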

Use the table below to cross-reference key validation criteria during data verification to ensure your streaming process is complete and accurate.

| Verification Checkpoint | What to Validate | Method/Notes |
|---|---|---|
| Total record count matches | Source and destination table row counts | Run count(*) queries on both systems as of the same cutoff |
| No data type translation errors | Data types preserved between platforms | Check for conversion mismatches/errors |
| Primary and foreign key relationships | Relational integrity intact | Query for duplicate keys and orphaned child rows, since Snowflake does not enforce these constraints |
| NULL and default values accuracy | Proper handling of special values | Compare NULLs and defaults in representative data |
| Timestamps and timezones consistency | Accurate time data migration | Validate with test records across time fields |
| Incremental update propagation | Changes in source appear in destination | Make test edits and monitor streaming results |

Turn PostgreSQL to Snowflake Streaming Into an Advantage with Streamkap

Struggling with complex configuration steps, latency issues, and inconsistent data when setting up PostgreSQL to Snowflake streaming? You are not alone. Many teams face roadblocks with logical replication, real-time change data capture, and precise schema transformation. Streamkap eliminates these challenges by offering a fully managed, no-code solution built for speed and reliability. With automated schema handling, resilient CDC pipelines, and instant integration to Snowflake, you gain real-time insight without the hassles of manual setup or maintenance.

Why wait for batch ETL cycles or risk data mismatch? Experience sub-second streaming, real-time data enrichment, and seamless validation from the very start of your data journey. Take control of your pipeline with streaming for PostgreSQL to Snowflake and see how easy modern data workflows can be. Visit Streamkap now to schedule a personalized demo and unlock your streaming potential today.

Frequently Asked Questions

What are the key configuration steps for preparing PostgreSQL for streaming to Snowflake?

To prepare PostgreSQL for streaming to Snowflake, modify the postgresql.conf file to set wal_level to logical, create a replication user with necessary permissions, and ensure the replication slot is created. Additionally, plan your replication strategy to monitor WAL growth and resource impacts.

How do I set up my Snowflake environment for data ingestion from PostgreSQL?

To set up your Snowflake environment, create a dedicated warehouse for incoming PostgreSQL streams, generate a service user with necessary permissions, and map your PostgreSQL schema to Snowflake’s schema, ensuring attention to data type translations and precision requirements.

What streaming tools can I use for effective data transfer between PostgreSQL and Snowflake?

Select a streaming tool that supports Change Data Capture (CDC) and logical replication for real-time data tracking. Ensure the tool automates schema evolution and supports secure, encrypted data transfer to maintain data integrity during migration.

How can I verify the integrity and consistency of data after streaming from PostgreSQL to Snowflake?

To verify data integrity, compare total record counts and perform detailed record-level comparisons between the PostgreSQL source and Snowflake destination. Focus on primary key integrity, data type preservation, and ensure consistent handling of NULL values and timestamps.
