Optimizing Snowflake for Lower Costs with the Snowpipe Streaming API

Snowflake — it’s scalable, flexible, and easy to use. But Snowflake can also become increasingly expensive as your data (and data needs) grow.

In data, performance and cost are closely entwined. Warehouse size, query frequency, data loading patterns, and pipeline design all affect both, and there is a delicate balance between a system that performs well and one that costs too much.

Luckily, for teams already working with Snowflake, switching data loading to Snowpipe Streaming is one of the easiest and lightest lifts available.

Snowpipe Streaming is a relatively new, low-latency feature that uses the Snowpipe Streaming API to write rows of data directly into Snowflake tables. This is a significant change from earlier methods, which bulk-load data from files.

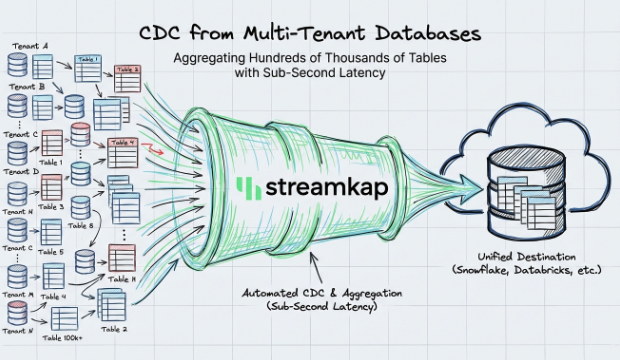

With Snowpipe Streaming, Streamkap can provide cost-effective real-time streaming connectivity and performance at a fraction of the competition’s cost — interoperable and Snowflake-ready.

Improving Snowflake performance and cost with Snowpipe Streaming API

There are multiple ways to load data into Snowflake. The most common approach, which most batch ETL tools take, is to load data with a SQL statement like COPY INTO. Snowpipe is another option: data files are written to cloud storage such as Amazon S3, and Snowpipe then loads them into Snowflake in micro-batches. A relatively new option called Snowpipe Streaming allows low-latency streaming of data into Snowflake at much lower cost than either of the above options.

With Streamkap and Snowpipe Streaming, you can snapshot/backfill historical data before moving to streaming mode, include or exclude tables and columns from sources, support schema evolution, and send real-time data to Snowflake.

When it comes to data, and especially real-time streaming data, the compute spent loading, transforming, and consuming it is generally the greatest cost, while storage-related costs tend to be minimal. To reduce your costs, focus on how you load and transform data. Snowpipe Streaming enables real-time data processing and analytics, capturing changes as they occur and making data available for immediate consumption without complex ETL processes.

Dynamic Tables with Snowpipe Streaming

In addition to Snowpipe Streaming, you can use Snowflake’s Dynamic Tables to increase the efficiency of your data operations.

In Snowflake, dynamic tables are a table type that lets teams define the results of a pipeline step with a SQL query. Snowflake refreshes a dynamic table automatically as its source data changes, processing only the changes since the last refresh where it can (an incremental refresh), and keeps the results available much like a custom view. Snowflake manages the scheduling and orchestration, which simplifies the process and makes it easier to pull the data you need.
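To make "operating solely on new changes since the last update" concrete, here is a toy Python model of incremental maintenance. It keeps a running total per key and, on each refresh, folds in only the rows that arrived after the previous refresh, instead of recomputing from every row. This is a sketch of the general technique under the assumption of an append-only source; the class and field names are hypothetical, and it is not how Snowflake implements dynamic tables. In Snowflake itself you declare the query with CREATE DYNAMIC TABLE and a TARGET_LAG, and Snowflake schedules the refreshes.

```python
from collections import defaultdict


class IncrementalSum:
    """Toy incrementally refreshed aggregate: total amount per key.

    Assumes an append-only source list of (key, amount) rows, so every row
    past the stored offset is a change that arrived after the last refresh.
    """

    def __init__(self):
        self.offset = 0                   # rows already folded into the totals
        self.totals = defaultdict(float)  # the materialized result
        self.rows_processed_last_refresh = 0

    def refresh(self, source_rows):
        new_rows = source_rows[self.offset:]  # only the delta since last refresh
        for key, amount in new_rows:
            self.totals[key] += amount
        self.offset = len(source_rows)
        self.rows_processed_last_refresh = len(new_rows)
        return dict(self.totals)


source = [("eu", 10.0), ("us", 5.0)]
view = IncrementalSum()
print(view.refresh(source))              # {'eu': 10.0, 'us': 5.0}

source.append(("eu", 2.5))               # a new change arrives
print(view.refresh(source))              # {'eu': 12.5, 'us': 5.0}
print(view.rows_processed_last_refresh)  # 1
```

The second refresh touches one row rather than three; a full refresh would re-read the whole source every time, which is where the compute savings of incremental refresh come from.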

Reduced Staging Costs

Traditional Snowpipe relies on a staging area in cloud storage to temporarily hold data before loading it into Snowflake tables. This additional storage layer incurs costs for the storage itself and for the operations needed to move data from staging into Snowflake. Snowpipe Streaming removes this staging step by streaming rows directly into tables, reducing overall storage costs.

Reduced Latency and Operational Costs

Snowpipe Streaming reduces data ingestion latency compared to traditional Snowpipe, improving the performance of real-time applications and minimizing the processing time and resources required for data ingestion. For businesses relying on real-time data insights, this lower latency, combined with leaner resource usage, can translate into significant cost savings.

Snowpipe Streaming costs

Snowpipe Streaming costs have two components: ingestion time and migration cost.

- Ingestion Time: The streaming insert time, billed at 0.01 credits per hour of runtime for each client. Client runtime is collected in seconds, so 3,600 seconds of client runtime equals one hour.

- Migration Cost: Snowflake runs an automated background process that migrates streamed data into optimized table storage. This costs approximately 3 to 10 credits per terabyte of data, varying with the number of files generated, which in turn depends on the insert rate.
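The two cost components above combine into a simple estimate. The sketch below uses the rates quoted in this list (0.01 credits per client-hour and roughly 3 to 10 credits per terabyte for migration) as assumptions; actual rates can differ by account and change over time, the function name is hypothetical, and it ignores any warehouse compute you spend transforming the data afterward.

```python
# Rates quoted in the list above; treat them as assumptions and check your own
# Snowflake pricing before relying on the numbers.
CLIENT_CREDITS_PER_HOUR = 0.01
MIGRATION_CREDITS_PER_TB = (3.0, 10.0)  # approximate low/high range


def estimate_streaming_credits(client_runtime_seconds, terabytes_ingested):
    """Return a (low, high) credit estimate for Snowpipe Streaming.

    client_runtime_seconds: one runtime per client, in seconds.
    terabytes_ingested: total data streamed in the period, in TB.
    """
    client_hours = sum(client_runtime_seconds) / 3600  # 3,600 s = 1 hour
    ingestion = client_hours * CLIENT_CREDITS_PER_HOUR
    low = ingestion + terabytes_ingested * MIGRATION_CREDITS_PER_TB[0]
    high = ingestion + terabytes_ingested * MIGRATION_CREDITS_PER_TB[1]
    return low, high


# Example: two clients running for a full 30-day month, streaming 2 TB in total.
month_seconds = 30 * 24 * 3600
low, high = estimate_streaming_credits([month_seconds, month_seconds], 2.0)
print(f"{low:.1f} to {high:.1f} credits")  # 20.4 to 34.4 credits
```

In this example the always-on clients account for only 14.4 credits; the migration component, which scales with data volume, dominates.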

Snowpipe Streaming can potentially result in up to 50 to 100x lower costs than using COPY INTO or similar mechanisms for loading data.

Optimizing data performance on Snowflake

In addition to transitioning to Snowpipe Streaming, you can also improve performance by organizing your data more efficiently. Partitioning, clustering, and data compression are all cost-effective methods that can vastly improve performance, assuming, of course, that your data isn't already optimized.

Partitioning

Partitioning involves dividing a database table into smaller, more manageable pieces called partitions. Snowflake does this automatically, storing every table as micro-partitions and recording metadata such as the range of values each column holds in each one. Queries can then skip (prune) micro-partitions that cannot contain matching rows, which limits the amount of data scanned, reduces the volume of data that needs to be processed, and leads to faster retrieval times.

To achieve the best results, align how your data is organized with your query patterns and data access frequency. Because Snowflake manages micro-partitions itself, you influence them indirectly, for example through the order in which data is loaded or by defining clustering keys. For instance, organizing time-series data by date ensures that queries targeting specific periods scan only the relevant partitions. Similarly, organizing data by geographical region can benefit location-based queries, enabling quicker access to relevant data subsets.
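Here is a toy model of why partitioning by date pays off. Each partition records the minimum and maximum date it contains, and a query for a date range only scans partitions whose range overlaps it. The class and function names are hypothetical and the metadata is simplified; Snowflake keeps its own, richer micro-partition metadata and prunes automatically.

```python
from dataclasses import dataclass
from datetime import date


@dataclass
class MicroPartition:
    # Simplified per-partition metadata: min and max of the filter column.
    min_value: date
    max_value: date
    row_count: int


def prune(partitions, start, end):
    """Keep only partitions whose [min, max] range can overlap [start, end]."""
    return [p for p in partitions if p.max_value >= start and p.min_value <= end]


# Data loaded in date order: each partition covers a narrow date range.
partitions = [
    MicroPartition(date(2024, 1, 1), date(2024, 1, 31), 1_000_000),
    MicroPartition(date(2024, 2, 1), date(2024, 2, 29), 1_000_000),
    MicroPartition(date(2024, 3, 1), date(2024, 3, 31), 1_000_000),
]

scanned = prune(partitions, date(2024, 2, 10), date(2024, 2, 20))
print(len(scanned), "of", len(partitions), "partitions scanned")  # 1 of 3 partitions scanned
```

If the same rows were loaded in random order, every partition would span most of the year and none could be skipped.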

Clustering

Clustering in Snowflake involves organizing data within a table based on the values of specified columns, known as clustering keys. This organization helps improve the efficiency of queries that filter or sort data by the clustering keys. Proper clustering can significantly reduce the amount of data scanned, enhancing query performance, especially for large tables.

Regular monitoring and adjustments to clustering strategies are crucial to ensure optimal performance. For example, if queries on a table often filter by customer ID or transaction date, defining a clustering key on those columns can streamline data retrieval and improve overall query efficiency.
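A quick simulation shows what a clustering key on customer ID buys. The sketch splits a hypothetical table into fixed-size partitions and counts how many partitions a lookup for one customer would have to scan, based on each partition's min/max customer ID. Sorting the rows stands in for clustering here, which is an assumption: in Snowflake you would declare the key with `ALTER TABLE ... CLUSTER BY (...)`, and Automatic Clustering reorganizes data gradually and consumes credits.

```python
import random


def partitions_touched(customer_ids, rows_per_partition, target):
    """Count partitions whose min/max customer ID range could contain `target`."""
    touched = 0
    for i in range(0, len(customer_ids), rows_per_partition):
        chunk = customer_ids[i:i + rows_per_partition]
        if min(chunk) <= target <= max(chunk):
            touched += 1
    return touched


rng = random.Random(7)  # fixed seed so the run is repeatable
# Hypothetical table: 100,000 rows for customer IDs 0..999, arriving in random order.
arrival_order = [rng.randrange(1000) for _ in range(100_000)]
clustered = sorted(arrival_order)  # sorting approximates a clustering key on customer ID

unclustered_scan = partitions_touched(arrival_order, 1_000, target=42)
clustered_scan = partitions_touched(clustered, 1_000, target=42)
print("unclustered:", unclustered_scan, "clustered:", clustered_scan)
```

With rows in arrival order, almost every one of the 100 partitions spans nearly the full ID range, so nearly all must be scanned; once sorted, only one or two partitions can hold customer 42.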

Data Compression

Data compression reduces the physical storage size of data, which can lead to performance improvements by decreasing the amount of data read from disk. Snowflake compresses all table data automatically and chooses the encodings itself; the main compression choice you control is the format of files you stage for loading (for example, GZIP).

LZ77 compression can benefit text-heavy data, while Run-Length Encoding (RLE) is more suitable for repetitive data. By selecting and implementing the appropriate compression methods, organizations can achieve significant performance gains and reduce storage costs.
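To see why Run-Length Encoding suits repetitive data, here is a minimal RLE encoder and decoder in Python. A column in which the same value repeats in runs collapses into a short list of (value, count) pairs. This is purely illustrative of the technique; it is not how Snowflake encodes table data internally.

```python
def rle_encode(values):
    """Run-length encode a sequence into [(value, run_length), ...]."""
    runs = []
    for v in values:
        if runs and runs[-1][0] == v:
            runs[-1] = (v, runs[-1][1] + 1)  # extend the current run
        else:
            runs.append((v, 1))              # start a new run
    return runs


def rle_decode(runs):
    """Expand [(value, run_length), ...] back into the original list."""
    return [v for v, n in runs for _ in range(n)]


status = ["active"] * 4 + ["closed"] * 3 + ["active"]
encoded = rle_encode(status)
print(encoded)  # [('active', 4), ('closed', 3), ('active', 1)]
assert rle_decode(encoded) == status
```

Eight values become three runs here; on data with few repeats, such as free text, RLE gains little, which is where LZ77-style compression does better.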

Cost-efficiency strategies

Of course, sometimes you simply can't partition, cluster, or compress your data any further, or the labor spent on further optimization would cost more than it saves. In that case, you may need a comprehensive strategy for how you use your data and Snowflake.

Minimizing Data Storage Costs

As data volumes grow, data storage costs can quickly escalate, so minimizing them is crucial for maintaining cost efficiency. One effective approach is data archiving: moving infrequently accessed data to cheaper storage tiers.

Organizations can balance cost and performance by implementing a tiered storage strategy, storing less critical data in lower-cost storage solutions.
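A tiered storage strategy starts with deciding which data belongs in which tier. The sketch below assigns tables to tiers by days since last access. The thresholds, tier names, and table names are all hypothetical; in practice you would derive last-access dates from your own usage data (for example, Snowflake's ACCESS_HISTORY view) and set thresholds from your access patterns and storage pricing.

```python
from datetime import date, timedelta

# Hypothetical thresholds; tune them to your own access patterns and pricing.
HOT_DAYS = 30
WARM_DAYS = 180


def storage_tier(last_accessed, today):
    """Assign a table to a hypothetical storage tier by days since last access."""
    age = (today - last_accessed).days
    if age <= HOT_DAYS:
        return "hot"      # keep as-is
    if age <= WARM_DAYS:
        return "warm"     # candidate for a lower-cost tier
    return "archive"      # candidate for archival storage


today = date(2024, 6, 30)
tables = {
    "orders": today - timedelta(days=2),
    "orders_2022": today - timedelta(days=90),
    "legacy_events": today - timedelta(days=400),
}
for name, last_accessed in tables.items():
    print(name, storage_tier(last_accessed, today))
# orders hot
# orders_2022 warm
# legacy_events archive
```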

Optimizing Credit Usage

Snowflake's credit-based pricing model means optimizing credit usage directly impacts cost efficiency. This includes avoiding unnecessary computations, writing queries that filter on well-clustered columns so Snowflake can prune data (Snowflake does not rely on traditional indexes), and leveraging efficient data retrieval techniques.

Workload management is another critical aspect of optimizing credit usage. Utilizing Snowflake’s resource monitors and workload management features allows organizations to control and prioritize resource usage.

By ensuring that critical workloads receive the necessary resources without overspending, organizations can maintain cost efficiency while maximizing performance.
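Resource monitors are the main guardrail here. A Snowflake resource monitor has a credit quota and percentage triggers whose actions are NOTIFY, SUSPEND (suspend once running queries finish), or SUSPEND_IMMEDIATE (cancel running queries too). The Python sketch below models how such triggers fire as credits are consumed, so you can reason about thresholds before creating the monitor. The quota, thresholds, and monitor name in the comment are hypothetical, and the function is a model, not part of any Snowflake API.

```python
# Hypothetical triggers, modeled on a resource monitor such as:
#   CREATE RESOURCE MONITOR monthly_limit WITH CREDIT_QUOTA = 100
#     TRIGGERS ON 75 PERCENT DO NOTIFY
#              ON 100 PERCENT DO SUSPEND
#              ON 110 PERCENT DO SUSPEND_IMMEDIATE;
TRIGGERS = [(75, "NOTIFY"), (100, "SUSPEND"), (110, "SUSPEND_IMMEDIATE")]


def fired_actions(credits_used, credit_quota, triggers=TRIGGERS):
    """Return the actions whose percentage threshold has been reached."""
    percent_used = 100 * credits_used / credit_quota
    return [action for threshold, action in triggers if percent_used >= threshold]


print(fired_actions(60, 100))   # []
print(fired_actions(80, 100))   # ['NOTIFY']
print(fired_actions(105, 100))  # ['NOTIFY', 'SUSPEND']
```

Setting the SUSPEND_IMMEDIATE threshold slightly above SUSPEND, as in this sketch, gives in-flight queries a margin to finish before anything is cancelled.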

Reduce third-party outlays

Finally, and perhaps most critically, one area that may be neglected when optimizing Snowflake costs is third-party outlays. When working with Snowflake, third-party tools and services can enhance data workflows. However, these tools can also significantly increase your overall spend.

To start reducing your third-party outlays:

- Evaluate and Optimize Tool Usage: Begin by conducting a thorough evaluation of the third-party tools and services currently in use. Assess their contribution to your data workflows and identify any overlaps or redundancies. Often, multiple tools may offer similar functionalities, leading to unnecessary costs. By consolidating these tools, you can reduce expenses and streamline your processes.

- Implement Cost Monitoring and Management Tools: Using cost monitoring and management tools can help you track and control expenses related to third-party services. Streamkap offers advanced analytics and monitoring features that provide insights into your third-party tool usage and costs. With these insights, you can identify areas where you can reduce usage or switch to more cost-effective alternatives.

- Negotiate Better Rates: Engage with your third-party vendors to negotiate better rates or volume discounts. Many vendors are open to renegotiating terms, especially if you are a long-term customer or if you commit to higher usage volumes. Additionally, consider exploring different pricing tiers or subscription models that align better with your usage patterns and budget constraints.

- Regularly Review and Update Contracts: Make it a practice to review and update contracts with third-party vendors regularly so that scope creep doesn't leave you paying for outdated or unnecessary services. Regular reviews also provide opportunities to renegotiate terms and explore new offerings that might be more cost-effective; even a vendor you already use could have new products worth exploring.

Changing a data pipeline is a challenge — and it can be an intimidating one. Find a partner that can help your organization make the transition without disruption.

Streamkap is your partner in Snowflake optimization

Snowpipe Streaming can reduce Snowflake warehouse costs by up to 3x while also reducing latency. And Streamkap can help.

Streamkap is a drop-in replacement for the vendors and methods traditionally used to sync data to Snowflake; easy to use and easy to deploy. With Streamkap, you can greatly reduce the cost and increase the efficiency of streaming data to and from Snowflake without sacrificing your functionality, interoperability, or performance.

Sign up for a free trial today to unlock the full potential of your Snowflake warehousing.