Unlocking Real-Time Data Integration for Your Business

Discover how real-time data integration works, from core concepts to practical use cases. Learn to build a strategy that drives faster decisions and growth.

At its core, real-time data integration is the process of getting data from Point A to Point B the moment it's created. There's no waiting around. Unlike older methods that move data in scheduled chunks, this approach means your business is always running on the freshest information available.

Why Real-Time Data Integration Matters Now More Than Ever

Think about trying to drive through a major city using a paper map printed yesterday. You wouldn't know about the accident on the main bridge, the new detour, or the sudden traffic jam building downtown. That's what it's like relying on traditional, batch-based data.

Real-time data integration is the equivalent of a live GPS. It's constantly feeding you information, letting you make smarter decisions on the fly. This move from "let's look at what happened yesterday" to "what's happening right now" is completely changing how modern companies operate.

The concept is straightforward: data flows continuously between all your systems—your apps, databases, and analytics tools. This allows you to stop reacting to old problems and start proactively managing your business in the present moment.

The Shift from Batch to Continuous Flow

For years, the standard was batch processing. Data would pile up, and then, once a day or once an hour, a job would run to move it all in one big lump. This works fine for things that aren't time-sensitive, like end-of-month financial reports. But it creates a huge delay, or "data latency"—the time between something happening and you actually knowing about it.
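To make "data latency" concrete, here is a rough sketch of how long an event waits before it becomes visible under a fixed batch schedule. It assumes a hypothetical hourly job that starts on the hour and takes 10 minutes to run. Both numbers are illustrative, not benchmarks.

```python
import math


def batch_latency_minutes(event_minute: float, interval: float = 60.0,
                          job_runtime: float = 10.0) -> float:
    """Minutes between an event occurring and its data landing downstream.

    Assumes (hypothetically) that batch jobs start at multiples of `interval`
    and that each job takes `job_runtime` minutes to finish loading.
    """
    next_run = math.ceil(event_minute / interval) * interval  # next scheduled start
    return (next_run - event_minute) + job_runtime


# An event 5 minutes after a run waits for the next one: 55 + 10 = 65 minutes.
late = batch_latency_minutes(5)
# Averaged over one event per minute across the hour: about interval / 2 + runtime.
average = sum(batch_latency_minutes(m) for m in range(60)) / 60
```

Under these assumptions, the average delay is 39.5 minutes and the worst case approaches 70. A streaming pipeline removes the schedule term entirely, leaving only the per-event processing time.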

Real-time integration is all about closing that gap. It’s designed for a world where instant action is not just an advantage but a necessity. The need for up-to-the-second information is especially clear in finance, as a trader's guide to real-time options data explains. This immediate access lets organizations:

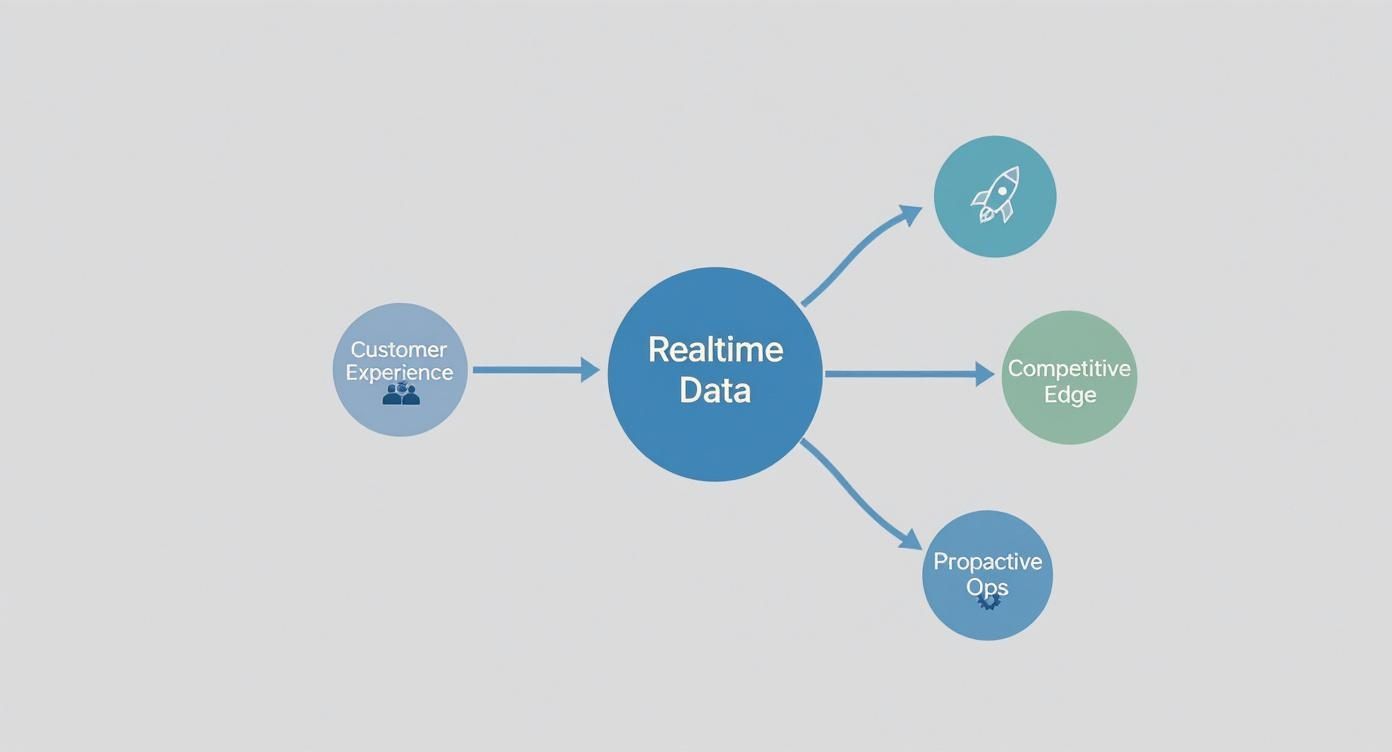

- Enhance Customer Experiences: You can send a personalized offer to a customer based on what they're looking at on your website right now, not what they browsed last week.

- Sharpen Competitive Edge: Instantly react to a competitor's price drop, optimize your shipping routes based on live traffic, and keep your inventory perfectly aligned with demand.

- Improve Operational Efficiency: Spot potential system failures before they cause an outage, detect fraudulent transactions the second they happen, and automate decisions that used to require manual oversight.

A Rapidly Expanding Market

The demand for this kind of speed is obvious when you look at the market numbers. The real-time data integration market is on track to nearly double, jumping from $15.18 billion in 2024 to an estimated $30.27 billion by 2030. This incredible growth is driven by businesses across every industry needing instant analytics and event-driven architectures to stay competitive. You can read more about these impressive growth rates and what they mean for businesses.

In this guide, we'll cover everything from the basic ideas to how this technology works in the real world. By the time you're done, you'll see why making decisions based on yesterday's data just doesn't cut it anymore.

Understanding the Architectures That Power Instant Data Flow

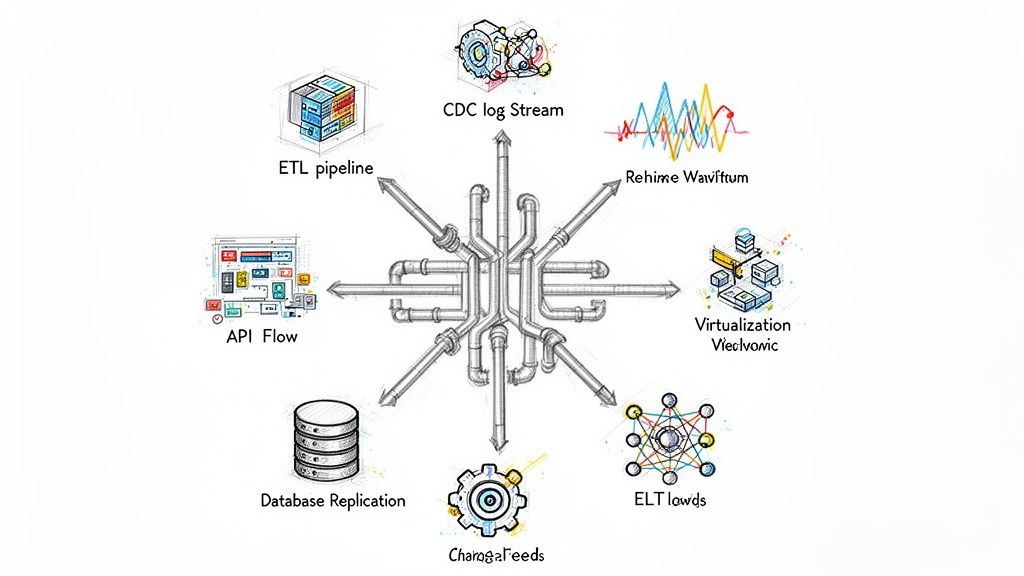

To really get what real-time data integration is all about, you have to look under the hood at the engines that make it run. These aren't just abstract ideas; they're specific architectural patterns, each with its own way of handling the constant flow of information. Let's break them down.

Think of it like delivering breaking news. You could listen for every single bulletin as it's announced, have a reporter stream live from the scene, or set up a system of alerts that go off when something important happens. Each method works, but they're built for different situations.

Change Data Capture: A Database Diary

Imagine your database keeps a detailed diary, a transaction log, that records every change the moment it happens: every insert, update, and deletion. Change Data Capture (CDC) is simply the process of reading this diary in real time.

Instead of constantly pestering the database with questions like, "Anything new? How about now?", which is slow and eats up resources, CDC quietly observes the log. It’s an incredibly efficient way to grab every event without getting in the way of your operational systems.

This makes CDC a strong fit for keeping a data warehouse in sync with a production database, or for replicating data for analytics without dragging down performance.
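The sketch below models the idea in plain Python. The `ChangeEvent` list is a simplified, in-memory stand-in for a real transaction log, such as PostgreSQL's write-ahead log or MySQL's binlog. All names are hypothetical. The consumer never queries the source tables. It only reads log entries beyond the last position it has applied, which also makes restarts safe.

```python
from dataclasses import dataclass
from typing import Any, Dict, List, Optional


@dataclass(frozen=True)
class ChangeEvent:
    lsn: int                              # position in the log (hypothetical sequence number)
    op: str                               # "insert", "update", or "delete"
    key: int                              # primary key of the changed row
    row: Optional[Dict[str, Any]] = None  # new row image; None for deletes


class CdcReplicator:
    """Applies log entries to a replica, remembering how far it has read."""

    def __init__(self) -> None:
        self.replica: Dict[int, Dict[str, Any]] = {}
        self.last_lsn = 0  # highest log position already applied

    def consume(self, log: List[ChangeEvent]) -> int:
        """Apply every entry newer than last_lsn (log assumed ordered); return count applied."""
        applied = 0
        for event in log:
            if event.lsn <= self.last_lsn:
                continue  # already applied, so re-reading the log is harmless
            if event.op == "delete":
                self.replica.pop(event.key, None)
            else:  # inserts and updates both carry the full new row
                self.replica[event.key] = dict(event.row or {})
            self.last_lsn = event.lsn
            applied += 1
        return applied


log = [
    ChangeEvent(1, "insert", 42, {"status": "new"}),
    ChangeEvent(2, "update", 42, {"status": "shipped"}),
    ChangeEvent(3, "insert", 7, {"status": "new"}),
    ChangeEvent(4, "delete", 7),
]
replicator = CdcReplicator()
first_pass = replicator.consume(log)   # applies all four entries
second_pass = replicator.consume(log)  # nothing new, so nothing is applied
```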

Streaming ETL: The Constant River of Data

Traditional ETL (Extract, Transform, Load) is like filling a reservoir and then processing all the water in one big batch. Streaming ETL, on the other hand, is like a river. Data flows constantly, and transformations—like filtering, enriching, or restructuring—happen as it travels downstream.

There's no waiting around for a huge batch to build up. As soon as a piece of data enters the stream, it gets processed and sent on its way. This architecture is built for speed and is ideal for use cases that need immediate insights from a high volume of events, like analyzing website clickstreams or processing sensor data from IoT devices.
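One minimal way to picture streaming ETL in Python is as a chain of generators. Each record is extracted, transformed, and loaded before the next one is read, so nothing waits for a batch to fill. The event fields and the `PAGE_CATEGORIES` lookup table are hypothetical. A production pipeline would run on a stream processor rather than in a single process.

```python
from typing import Dict, Iterable, Iterator, List

# Hypothetical lookup table used to enrich each event in flight.
PAGE_CATEGORIES: Dict[str, str] = {"/shoes/run": "running", "/boots/hike": "hiking"}


def extract(source: Iterable[dict]) -> Iterator[dict]:
    """Yield events one at a time as the source produces them."""
    for event in source:
        yield event


def transform(events: Iterable[dict]) -> Iterator[dict]:
    """Filter and enrich each event as it passes through; nothing is buffered."""
    for event in events:
        if event.get("type") != "click":
            continue  # filter: drop non-click events
        category = PAGE_CATEGORIES.get(event["page"], "unknown")
        yield {**event, "category": category}  # enrich: add a derived field


def load(events: Iterable[dict], sink: List[dict]) -> None:
    """Write each transformed event to the destination as soon as it arrives."""
    for event in events:
        sink.append(event)


raw = [
    {"type": "click", "page": "/shoes/run", "user": "u1"},
    {"type": "heartbeat", "page": "/", "user": "u1"},
    {"type": "click", "page": "/boots/hike", "user": "u2"},
]
destination: List[dict] = []
load(transform(extract(raw)), destination)
```

Because the stages are generators, the same pipeline works unchanged on an endless source. Each record reaches `destination` right after it is read.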

"Realtime data integration isn't just a technical upgrade; it's a fundamental business shift. It changes the organization's metabolism from a slow, batch-oriented rhythm to a continuous, responsive pulse."

By 2025, over 70% of enterprises are expected to rely heavily on AI-driven tools to manage real-time data. This shift is turning data integration from a simple IT task into a core driver of business innovation. For more on this trend, you can check out these developing data integration market trends on rapidionline.com.

This concept map shows how real-time data directly fuels key business areas like customer experience and operational agility.

As you can see, a continuous stream of data is what enables a business to become more responsive and forward-thinking in every critical function.

Event-Driven Architecture: The Instant Notification System

Now, picture a system built on instant notifications. When a customer places an order, an "OrderPlaced" event is immediately broadcast. This single event can trigger multiple actions across the business all at once: the warehouse gets a notification to pack the item, finance processes the payment, and marketing sends a confirmation email.

That's the core idea behind an Event-Driven Architecture (EDA). Instead of applications being tightly connected and waiting on each other, they are loosely coupled and simply react to events as they happen. This makes the entire system more resilient, scalable, and nimble.

EDA is the backbone for modern microservices and applications that need to respond instantly to real-world occurrences. If you're looking for a deeper dive, our guide on different data pipeline architectures explains how these systems are built in greater detail.
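The "OrderPlaced" example can be sketched with a tiny in-process publish/subscribe bus. This is an illustrative assumption, not how a production EDA is deployed. Real systems put a message broker between publishers and subscribers so each service can consume events independently. The point here is the decoupling: the publisher does not know which services react.

```python
from collections import defaultdict
from typing import Callable, DefaultDict, List

Handler = Callable[[dict], None]


class EventBus:
    """Minimal in-process publish/subscribe bus (illustrative only)."""

    def __init__(self) -> None:
        self._subscribers: DefaultDict[str, List[Handler]] = defaultdict(list)

    def subscribe(self, event_type: str, handler: Handler) -> None:
        self._subscribers[event_type].append(handler)

    def publish(self, event_type: str, payload: dict) -> None:
        # The publisher only names the event; it never calls services directly.
        for handler in self._subscribers[event_type]:
            handler(payload)


actions: List[str] = []
bus = EventBus()
bus.subscribe("OrderPlaced", lambda e: actions.append(f"warehouse: pack order {e['order_id']}"))
bus.subscribe("OrderPlaced", lambda e: actions.append(f"finance: charge {e['amount']:.2f}"))
bus.subscribe("OrderPlaced", lambda e: actions.append(f"marketing: email {e['email']}"))

bus.publish("OrderPlaced", {"order_id": 1001, "amount": 59.9, "email": "ana@example.com"})
```

In this sketch the handlers run one after another in the same process. With a broker, they would run concurrently in separate services. Adding a new reaction means adding a subscriber, with no change to the code that publishes orders.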

Comparing Real-Time Data Integration Architectures

To help you choose the right approach, this table breaks down the fundamental differences between CDC, Data Streaming, and Event-Driven Architecture.

| Architecture | Primary Use Case | Latency | Impact on Source System | Example Scenario |
| --- | --- | --- | --- | --- |
| Change Data Capture (CDC) | Database replication and synchronization | Near real-time (milliseconds) | Very Low | Keeping a read-replica or data warehouse updated with a production OLTP database. |
| Data Streaming | Continuous processing of high-volume event data | Real-time (sub-second) | Low to Medium | Analyzing live website clickstream data to personalize user experiences. |
| Event-Driven Architecture (EDA) | Decoupling services and triggering reactive workflows | Real-time (milliseconds) | Low | An e-commerce "order placed" event triggers inventory, shipping, and notification services. |

Each of these architectural patterns offers a different way to tackle real-time data challenges. Understanding their strengths and weaknesses is the first step toward building a responsive, data-driven system.

What This Actually Means for Your Business

Let's move past the technical jargon. The real magic of real-time data integration isn't just about making data move faster; it’s about fundamentally changing how your business operates, competes, and grows. When information flows instantly, vague goals like "becoming more agile" suddenly turn into real, measurable results.

Think about a major retailer tackling the dreaded "out of stock" notification on their website. The old way involved waiting for nightly inventory updates. The new way? They stream data from every cash register and warehouse scanner in real time.

The second an item is purchased in-store, the website's inventory count is updated. This immediate sync lets them pull online ads for sold-out products on the fly, adjust pricing based on demand, and even automatically trigger a new order to their supplier. The outcome is simple: fewer lost sales, happier customers, and a supply chain that actually works.
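Here is a rough sketch of the reaction logic behind that example, assuming each in-store sale arrives as an event. The `InventoryTracker` class, the reorder point of 5, and the action strings are hypothetical placeholders for calls to a real ad platform or supplier system. Dynamic pricing is left out.

```python
from dataclasses import dataclass, field
from typing import Dict, List


@dataclass
class InventoryTracker:
    stock: Dict[str, int]
    reorder_point: int = 5                            # hypothetical threshold
    actions: List[str] = field(default_factory=list)  # stand-in for downstream calls

    def on_sale(self, sku: str, qty: int = 1) -> None:
        """Update stock the moment a sale event arrives and react to thresholds."""
        before = self.stock.get(sku, 0)
        after = max(before - qty, 0)
        self.stock[sku] = after
        # Reorder once, when stock first crosses down to the reorder point.
        if before > self.reorder_point >= after:
            self.actions.append(f"reorder {sku}")
        # Pull ads as soon as the item sells out.
        if before > 0 and after == 0:
            self.actions.append(f"pause ads {sku}")


tracker = InventoryTracker(stock={"shoe-42": 6})
tracker.on_sale("shoe-42")          # 6 -> 5: hits the reorder point
tracker.on_sale("shoe-42", qty=5)   # 5 -> 0: sold out
```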

Stop Fraud Before It Happens

In the world of financial services, speed is everything, especially when it comes to security. Fintech companies that use real-time data integration can spot suspicious transaction patterns the very instant they happen. A traditional batch system might take hours to flag a fraudulent charge, but by then, the money is long gone.

With a modern, streaming setup, a bizarre spending pattern—say, a purchase in a new country just minutes after one in your customer's hometown—triggers an immediate alert. The system can instantly block the transaction and ping the customer. It’s a game-changer.

Businesses that make fast, data-driven decisions through automated processes have been found to achieve 97% higher profit margins and 62% greater revenue growth than their peers. Real-time data integration is the engine that enables this level of performance.

This approach turns fraud detection from a reactive, after-the-fact cleanup into a proactive shield, saving millions and maintaining the trust you've worked so hard to build.
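The new-country rule described above can be expressed as a small stateful check that keeps only the last transaction seen for each card. This is a simplified, rule-based sketch. The 60-minute window and the `Transaction` fields are assumptions. Real fraud systems typically combine many signals and models rather than relying on one rule.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta
from typing import Dict


@dataclass(frozen=True)
class Transaction:
    card_id: str
    country: str
    timestamp: datetime


class NewCountryRule:
    """Flags a card used in a different country shortly after its previous use."""

    def __init__(self, window: timedelta = timedelta(minutes=60)) -> None:
        self.window = window  # hypothetical threshold
        self._last_seen: Dict[str, Transaction] = {}

    def check(self, txn: Transaction) -> bool:
        previous = self._last_seen.get(txn.card_id)
        self._last_seen[txn.card_id] = txn  # keep only the latest per card
        if previous is None:
            return False
        gap = txn.timestamp - previous.timestamp
        return txn.country != previous.country and gap < self.window


rule = NewCountryRule()
noon = datetime(2024, 1, 1, 12, 0)
home = rule.check(Transaction("card-1", "US", noon))
abroad = rule.check(Transaction("card-1", "FR", noon + timedelta(minutes=10)))
```

Here `home` is not flagged, while `abroad` is flagged the moment it arrives, so the transaction can be blocked before it settles.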

Keep Operations Running Smoothly

The impact goes far beyond just customer-facing systems. Take manufacturing, for example, where real-time data is the key to predictive maintenance. By constantly streaming data from sensors on factory equipment, tracking everything from temperature to vibration, companies can see a failure coming before it happens.

Instead of waiting for a critical machine to grind to a halt and shut down the whole production line, an AI model fed with live data can spot tiny warning signs. This gives maintenance crews a heads-up to schedule repairs during planned downtime.

This smart shift leads to clear, bottom-line improvements:

- Less Downtime: Fixing things proactively prevents the kind of catastrophic failures that can pause operations for days.

- Lower Repair Costs: A planned repair is always cheaper than a frantic, middle-of-the-night emergency fix.

- Smarter Staffing: Maintenance teams can be sent where they’re actually needed, based on hard data, not guesswork.
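The article describes an AI model. As a much simpler stand-in, here is a rolling z-score check that flags a vibration reading far outside the recent norm. The window size, the threshold of 3 standard deviations, and the sample readings are assumptions chosen for illustration, not tuned values.

```python
from collections import deque
from statistics import mean, pstdev
from typing import Deque, Iterable, List


def vibration_alerts(readings: Iterable[float], window: int = 5,
                     threshold: float = 3.0) -> List[int]:
    """Return indices of readings more than `threshold` std devs from the recent mean."""
    history: Deque[float] = deque(maxlen=window)  # only the last `window` readings
    alerts: List[int] = []
    for i, value in enumerate(readings):
        if len(history) == window:
            mu, sigma = mean(history), pstdev(history)
            if sigma > 0 and abs(value - mu) / sigma > threshold:
                alerts.append(i)
        history.append(value)
    return alerts


readings = [1.0, 1.1, 0.9, 1.0, 1.05, 1.02, 0.98, 2.5, 1.0]
alerts = vibration_alerts(readings)  # the spike at index 7 stands out
```

Because the check runs on each reading as it arrives, the alert fires as soon as the spike arrives rather than in the next day's report.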

At the end of the day, real-time data integration closes the gap between simply having data and actually doing something intelligent with it. It transforms data from a stale, historical record into a living, breathing resource that powers smarter decisions, creates better customer experiences, and builds a far more resilient business.

Facing the Common Implementation Hurdles

Moving to real-time data integration can transform how your business runs, but it's rarely as simple as flipping a switch. While the upside is huge, getting there means you have to clear a few common hurdles. The trick is to know what these roadblocks are before you run into them, so you can build an infrastructure that’s both powerful and resilient.

The first big challenge is keeping your data consistent. In the old world of batch processing, you had predictable moments when all your data was in sync. But in real-time, data is constantly on the move. Picture this: two different systems get an update on the same customer order just milliseconds apart. Which one holds the absolute truth?

It's absolutely critical that every system has the same, correct information at the same time. If it doesn't, you're looking at potential operational chaos—think everything from messed-up inventory counts to business analytics built on bad data. This is just one of many complexities, and you can get a much deeper look into the common challenges of data integration in our dedicated guide.
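One common way to settle the "which one holds the truth?" question is to attach a version number at the source, for example the record's position in the transaction log. Every consumer then ignores anything older than what it already holds. The sketch below assumes such monotonically increasing versions exist. The class name and fields are hypothetical.

```python
from typing import Any, Dict, Optional, Tuple


class VersionedStore:
    """Keeps only the newest version of each record, whatever order updates arrive in."""

    def __init__(self) -> None:
        self._rows: Dict[str, Tuple[int, Dict[str, Any]]] = {}

    def apply(self, key: str, version: int, row: Dict[str, Any]) -> bool:
        current = self._rows.get(key)
        if current is not None and current[0] >= version:
            return False  # stale or duplicate update: ignore it
        self._rows[key] = (version, row)
        return True

    def get(self, key: str) -> Optional[Dict[str, Any]]:
        entry = self._rows.get(key)
        return entry[1] if entry else None


store = VersionedStore()
# Two updates to the same order arrive out of order, milliseconds apart.
newer_applied = store.apply("order-1", 2, {"status": "shipped"})
older_applied = store.apply("order-1", 1, {"status": "paid"})
```

Every system that applies the same rule converges on "shipped", regardless of which update reached it first.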

Taming Data Complexity and Volume

As information starts pouring in from dozens or even hundreds of sources, the complexity can get out of hand, fast. Every source might have its own format, structure, and quality. Trying to wrangle all these different streams into a single, reliable flow is a serious technical puzzle that demands great tools and even better governance.

At the same time, the sheer amount of data can put a massive strain on your systems. A sudden spike in website traffic or a surge from IoT sensors can easily overwhelm your data pipelines, causing delays or even failures. Your architecture has to be built for your busiest day, not your average one.

A successful real-time data platform isn't just about moving fast; it's about being dependable under pressure. The aim is to create a system that can absorb unexpected spikes without losing a single piece of critical information.

This means you have to be smart about how data is brought in, temporarily stored, and processed. Many of the best solutions use message queues like Apache Kafka to act as a buffer, smoothing out the unpredictable flow of data before it ever hits your databases or applications.
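To see why a buffer helps, this toy simulation feeds a burst of events into a queue that is drained at a fixed rate. It models the idea behind a broker like Apache Kafka in a single process and does not use Kafka's API. The arrival numbers and drain rate are made up.

```python
from collections import deque
from typing import Deque, List, Tuple


def simulate_buffer(arrivals_per_tick: List[int], drain_per_tick: int) -> Tuple[int, int, int]:
    """Return (peak backlog, events still queued, events processed)."""
    buffer: Deque[int] = deque()
    peak = processed = 0
    next_id = 0
    for arriving in arrivals_per_tick:
        for _ in range(arriving):  # a burst lands in the buffer, not on the database
            buffer.append(next_id)
            next_id += 1
        peak = max(peak, len(buffer))
        for _ in range(min(drain_per_tick, len(buffer))):  # consumer keeps a steady pace
            buffer.popleft()
            processed += 1
    return peak, len(buffer), processed


# A spike of 500 events against a consumer that handles 150 per tick.
peak, remaining, processed = simulate_buffer([100, 100, 500, 100, 0, 0, 0, 0], drain_per_tick=150)
```

The backlog peaks at 500 and drains within a few ticks without losing an event. The consumer behind the buffer never handles more than 150 events per tick. In practice, the buffer itself must be durable and large enough to hold the worst burst.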

Ensuring Scalability and Reliability

Maybe the most important long-term challenge is scalability. The amount of data you have today is nothing compared to what you'll have tomorrow. A system that hums along perfectly with one million events a day could completely fall apart when faced with one hundred million. You absolutely have to plan for growth from the very beginning.

This is where cloud-native architectures really come into their own. They give you the power to automatically scale resources up or down depending on what you need at that moment, so you get the performance required without overpaying. This kind of elastic scalability is fundamental to modern data integration.

On top of that, reliability is everything. A broken data pipeline means lost data, and lost data often means lost money or unhappy customers. Building a system that can withstand failures involves a few key tactics:

- Monitoring and Alerting: You need to know the second a pipeline slows down or breaks so your team can jump on it.

- Automated Recovery: The best systems are designed to restart failed processes or automatically find a new path for data if there’s an outage.

- Data Validation: Constant checks should be in place to make sure data isn't getting garbled as it travels from one system to another.
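Two of those tactics, automated recovery and data validation, are sketched below. The first is a retry helper with exponential backoff; the second is a simple schema check. The required fields, retry count, and delays are assumptions. `sleep` is injectable so the example can run without actually waiting.

```python
import time
from typing import Callable, List, TypeVar

T = TypeVar("T")

REQUIRED_FIELDS = ("order_id", "amount")  # hypothetical schema


def validate(record: dict) -> None:
    """Raise ValueError if a record is missing fields or carries a bad amount."""
    missing = [name for name in REQUIRED_FIELDS if name not in record]
    if missing:
        raise ValueError(f"record missing fields: {missing}")
    amount = record["amount"]
    if not isinstance(amount, (int, float)) or amount < 0:
        raise ValueError("amount must be a non-negative number")


def with_retries(operation: Callable[[], T], attempts: int = 5, base_delay: float = 0.5,
                 sleep: Callable[[float], None] = time.sleep) -> T:
    """Retry transient connection failures, doubling the wait after each one."""
    if attempts < 1:
        raise ValueError("attempts must be at least 1")
    for attempt in range(attempts):
        try:
            return operation()
        except ConnectionError:
            if attempt == attempts - 1:
                raise  # out of retries: surface the error so monitoring can alert
            sleep(base_delay * 2 ** attempt)
    raise AssertionError("unreachable")


calls = {"count": 0}


def flaky_write() -> str:
    """Simulated sink that fails twice before accepting the write."""
    calls["count"] += 1
    if calls["count"] < 3:
        raise ConnectionError("sink temporarily unavailable")
    return "written"


waits: List[float] = []
result = with_retries(flaky_write, sleep=waits.append)
```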

The investment required to solve these problems is driving huge market growth. The global data integration market is expected to jump from about $17.58 billion in 2025 to $33.24 billion by 2030. This boom is happening because companies are finally ditching their old, slow batch systems for modern platforms built for the scale and reliability today's world demands. You can discover more insights about these market shifts on marketsandmarkets.com. By tackling these challenges head-on, you can truly unlock the power of your live data.

Seeing Real-Time Data Integration in Action

The true power of real-time data integration clicks into place when you move from theory to reality. Abstract concepts like "low latency" and "streaming" suddenly feel concrete when you see them driving tangible, high-impact business outcomes.

So, let's explore a few compelling, real-world stories from different industries to see just how instant data flow creates measurable value.

These examples aren't futuristic fantasies—they're happening right now. They're driven by businesses that understand the high cost of delay and have turned real-time data from a technical capability into a core strategic asset.

Transforming E-commerce With Live Personalization

Imagine a major online retailer struggling to keep up with customer expectations. Their personalization engine used to run on nightly data batches. This meant a customer's recommendations were based on what they did yesterday, not what they were doing in the moment.

This lag created a constant stream of missed opportunities. A user browsing for running shoes might see ads for hiking boots they looked at last week, leading to a disconnected and, frankly, annoying experience. To fix this, the company implemented a real-time data integration pipeline.

Now, every click, search, and "add to cart" action is captured and processed instantly. This live user behavior feeds directly into their recommendation engine, allowing for dynamic adjustments on the fly.

- The Problem: Recommendations were stale, based on 24-hour-old data, leading to low engagement and lost sales.

- The Real-Time Solution: A streaming pipeline captures user actions as they happen, feeding a live personalization engine.

- The Impact: The retailer saw a significant lift in conversion rates and a 15% increase in average order value. Shoppers now get relevant suggestions that align with their immediate interests, creating a much stickier experience.
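Here is a hedged sketch of the "live interest" idea. It keeps each user's most recent actions in a bounded window and weights stronger signals more heavily, so a fresh search for running shoes quickly outweighs older hiking-boot clicks. The action weights, window size, and class name are hypothetical. A real recommendation engine would feed signals like these into a model rather than return a single category.

```python
from collections import Counter, defaultdict, deque
from typing import DefaultDict, Deque, Optional, Tuple

# Hypothetical weights: stronger buying signals count for more.
ACTION_WEIGHTS = {"click": 1, "search": 2, "add_to_cart": 3}


class RecentInterest:
    """Keeps each user's latest actions and reports their strongest current interest."""

    def __init__(self, max_events: int = 20) -> None:
        # Bounded per-user window: old actions fall off as new ones stream in.
        self._events: DefaultDict[str, Deque[Tuple[str, str]]] = defaultdict(
            lambda: deque(maxlen=max_events))

    def record(self, user: str, action: str, category: str) -> None:
        self._events[user].append((action, category))

    def top_category(self, user: str) -> Optional[str]:
        scores: Counter = Counter()
        for action, category in self._events.get(user, ()):
            scores[category] += ACTION_WEIGHTS.get(action, 0)
        return scores.most_common(1)[0][0] if scores else None


interest = RecentInterest(max_events=3)
interest.record("u1", "click", "hiking")         # older browsing
interest.record("u1", "click", "hiking")
interest.record("u1", "search", "running")       # this session
interest.record("u1", "add_to_cart", "running")  # oldest hiking click drops out
```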

A perfect example of this is the online retailer Niche.com. By moving from a daily batch process to a continuous flow, they made huge improvements. You can learn more about how Streamkap helped reduce Niche.com's data latency from 24 hours to near-real-time, which completely changed how they manage inventory and analytics.

Optimizing Logistics With Real-Time Fleet Tracking

Next, picture a national logistics company managing thousands of delivery trucks. Their biggest headache was route optimization. Using static, pre-planned routes meant they couldn't react to unpredictable events like traffic jams, accidents, or sudden road closures.

This resulted in delayed deliveries, wasted fuel, and frustrated customers. To get ahead of the chaos, they equipped their entire fleet with GPS trackers that stream location data back to a central command center every second.

By integrating live traffic data, weather alerts, and vehicle diagnostics, dispatchers can see the entire network in real time. This immediate visibility allows for dynamic rerouting to avoid delays and optimize fuel consumption.

This shift from static planning to dynamic response had a massive effect on their operations. Dispatchers can now proactively guide drivers around bottlenecks, ensuring packages arrive on time.

The system also monitors engine performance and fuel levels, enabling predictive maintenance that prevents costly breakdowns. The result is a more efficient, reliable, and cost-effective delivery network that provides a superior customer experience.
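At its core, dynamic rerouting means recomputing the fastest path whenever live travel times change. This sketch uses Dijkstra's shortest-path algorithm over a hypothetical road graph whose edge weights are travel minutes. It is one standard technique, not necessarily what any particular logistics platform runs.

```python
import heapq
from typing import Dict, List, Set, Tuple

Graph = Dict[str, Dict[str, float]]  # node -> {neighbor: travel time in minutes}


def fastest_route(graph: Graph, start: str, goal: str) -> Tuple[float, List[str]]:
    """Dijkstra's algorithm: lowest total travel time from start to goal."""
    queue: List[Tuple[float, str, List[str]]] = [(0.0, start, [start])]
    visited: Set[str] = set()
    while queue:
        cost, node, path = heapq.heappop(queue)
        if node == goal:
            return cost, path
        if node in visited:
            continue
        visited.add(node)
        for neighbor, minutes in graph.get(node, {}).items():
            if neighbor not in visited:
                heapq.heappush(queue, (cost + minutes, neighbor, path + [neighbor]))
    return float("inf"), []  # goal unreachable


roads: Graph = {
    "depot": {"bridge": 10, "ring_road": 15},
    "bridge": {"customer": 5},
    "ring_road": {"customer": 12},
}
before = fastest_route(roads, "depot", "customer")  # via the bridge, 15 minutes
roads["bridge"]["customer"] = 40                    # live feed reports an accident
after = fastest_route(roads, "depot", "customer")   # switches to the ring road, 27 minutes
```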

Saving Lives in Healthcare With Instant Patient Data

Finally, let's look at a hospital's critical care unit. Traditionally, nurses would manually check patient vitals periodically, and data from monitoring devices was often stuck in silos. This created dangerous information gaps where a patient's condition could deteriorate rapidly between checks.

To address this life-or-death challenge, the hospital implemented a real-time data integration platform. This system continuously streams data from every single patient monitoring device—heart rate monitors, ventilators, infusion pumps—into a centralized dashboard.

This live data feed is then analyzed by an AI-powered system that can detect subtle patterns that often precede a critical event, like cardiac arrest or sepsis.

- The Problem: Delayed and siloed patient data created unnecessary risks and forced medical staff into reactive care.

- The Real-Time Solution: All patient monitoring devices stream data to a central system for continuous, automated analysis.

- The Impact: The system provides predictive alerts to medical staff, giving them crucial minutes to intervene before a crisis occurs. This proactive approach has been shown to significantly reduce patient mortality rates in critical care units.

From boosting sales to saving fuel and, most importantly, saving lives, these use cases demonstrate that real-time data integration is far more than an IT upgrade. It's a fundamental business enabler that unlocks new levels of efficiency, intelligence, and responsiveness across every industry.

Building Your Roadmap for a Successful Implementation

Jumping into a real-time data integration strategy is a big deal. It's about more than just picking the right software; it's about having a rock-solid plan to make sure the whole project actually delivers on its promise. Think of this roadmap as your guide, helping you move from a rough idea to a successful, scalable system without getting lost along the way.

The first, and most important, step is to tie your project to a real business problem. It’s easy to get caught up in the technology itself, but that’s a classic mistake. Instead, find a specific pain point or a clear opportunity where having data right now will make a tangible difference.

Start with the Business Case

Before a single line of code gets written, you need to know exactly what you’re trying to accomplish. Are you hoping to cut down on customer churn by sending personalized offers at just the right moment? Or maybe the goal is to get ahead of costly equipment breakdowns with predictive maintenance alerts.

When you have a clear objective, your project has a north star. It makes getting buy-in from leadership much easier and gives you a concrete way to measure success later on. A solid business case should answer a few key questions:

- What’s the problem? (e.g., "Our inventory data is always a day old, and it's causing stockouts.")

- What’s the ideal outcome? (e.g., "We want to slash stockouts by 30% and see our online conversion rates go up.")

- How will we know we’ve succeeded? (e.g., "By tracking inventory accuracy in real time and correlating it with sales data.")

This kind of focus ensures your real-time data integration efforts are directly linked to bottom-line results.

Make the Critical Build vs. Buy Decision

With your objective in hand, the next fork in the road is deciding whether to build a custom solution or buy an existing platform.

Going the build route gives you total control and customization, but it’s a heavy lift. It means hiring specialized engineers, committing to long-term maintenance, and managing all the underlying infrastructure yourself.

On the other hand, buying a managed solution—like an Integration Platform as a Service (iPaaS)—can get you to the finish line much faster and often at a lower total cost. These platforms take care of all the messy, behind-the-scenes work like scaling, monitoring, and error handling. This frees up your team to focus on creating business value instead of just keeping the lights on.

The right answer really depends on your company's budget, in-house talent, and how quickly you need to move. For most organizations, though, a managed platform is the most practical path to getting real-time capabilities without the massive learning curve of a custom build.

Establish Strong Governance from Day One

Real-time data is incredibly powerful, but it can create a real mess if you don't have good governance in place. This isn't something you can tack on at the end; it has to be part of the foundation. You need to set the rules of the road from the very beginning.

This means figuring out who owns what data, defining quality standards, and making sure you’re compliant with regulations like GDPR. Strong governance ensures the data flowing through your pipelines is accurate, consistent, and trustworthy—which is the only way you can make sound, data-driven decisions.

Finally, think about the future. Design your architecture to grow with you. Choose technologies that can handle more data than you have today and that can bounce back from failures without bringing everything to a halt. A little foresight here will save you from a painful and expensive re-architecture project a few years from now.

Answering Your Key Questions

As you start digging into real-time data integration, a few common questions always seem to pop up. Getting straight answers to these is the key to seeing how these concepts actually apply to your business.

Let’s break down some of the most frequent ones.

ETL vs. ELT vs. Streaming ETL

So, what’s the real difference between all these acronyms? It really just boils down to one simple thing: when the data gets transformed.

Think of traditional ETL (Extract, Transform, Load) and ELT (Extract, Load, Transform) like scheduled deliveries. Data gets bundled up, then processed either before it’s loaded (ETL) or after it arrives at its destination (ELT). Either way, the work typically runs in batches, which means there's always a delay.

Streaming ETL, on the other hand, is a different beast entirely. It works on data continuously, as soon as it's created. It’s less like a scheduled truck delivery and more like a conveyor belt, where each piece of data is handled the moment it appears, not after a huge pile has formed.

Will Change Data Capture Slow Down My Database?

This is a great question and a totally valid concern. The good news is that modern Change Data Capture (CDC) methods are built to be incredibly lightweight. They don't hammer your production database with heavy, resource-draining queries.

Instead, they work much smarter.

Most modern CDC tools tap directly into the database’s transaction logs. Think of this log as the database's private diary, recording every single change. By reading this log, CDC tools are just listening in on what’s happening, not actively interrogating the database and slowing it down.

This approach leaves your database free to do its main job: running your applications.

Should We Replace All Batch Processing?

Probably not. While real-time integration is incredibly powerful, it’s not the magic wand for every single data job. The real goal is to use the right tool for the right task, and batch processing still has a solid place in the modern data stack.

For instance, batch is still your best bet for things like:

- Large-Scale Historical Reporting: Crunching massive amounts of data from months or even years ago.

- Training Machine Learning Models: Most models need to learn from huge historical datasets, which are best handled in bulk.

- Archival and Compliance: Periodically moving data for long-term storage where you don't need instant access.

A smart data strategy usually isn't about choosing one over the other. It's about blending real-time and batch processes, letting each one handle what it does best to move the business forward.

Ready to replace slow, costly batch ETL with an efficient streaming solution? Streamkap uses CDC to synchronize data in real time without impacting your source databases. Learn more at https://streamkap.com.