
A Guide to Real-Time Data Processing

Discover how real time data processing is transforming modern business. Our guide covers key concepts, architectures, and real-world applications.

Real-time data processing is all about analyzing information the very second it’s created. The goal? To get immediate insights that let you take instant action. It’s the difference between looking at a static paper map and using a live GPS that reroutes you around a traffic jam happening right now. That ability to react on the fly is what gives modern businesses a serious edge.

The Shift to Instant Decision Making

For decades, businesses ran on historical data. They’d make decisions by poring over reports from last week, last month, or even last quarter. This old-school method, called batch processing, is a bit like developing film from a camera—you have to wait a while to see the results. It’s still fine for long-term strategic planning, but it leaves you completely exposed to immediate threats and fleeting opportunities.

Today, business moves way too fast for that. Waiting is a liability. Real-time data processing closes that critical gap between when an event happens and when you can actually do something about it. It’s a fundamental shift from being reactive to proactive, letting companies engage with customers, manage their operations, and handle risks with incredible speed.

Why Immediate Insight Matters

The truth is, a lot of data loses its value almost as soon as it’s created. Finding out about a fraudulent credit card transaction a day later is just damage control; stopping it in the milliseconds before it goes through is true prevention. This idea plays out everywhere.

- Finance: Banks sift through thousands of transactions per second to spot and block fraud before a customer’s money is gone.

- Retail: E-commerce sites look at what you’re clicking on right now to suggest products you might actually want, making a sale more likely.

- Logistics: A delivery company tracks its fleet and live traffic to reroute drivers on the fly, which means faster deliveries and less money spent on fuel.

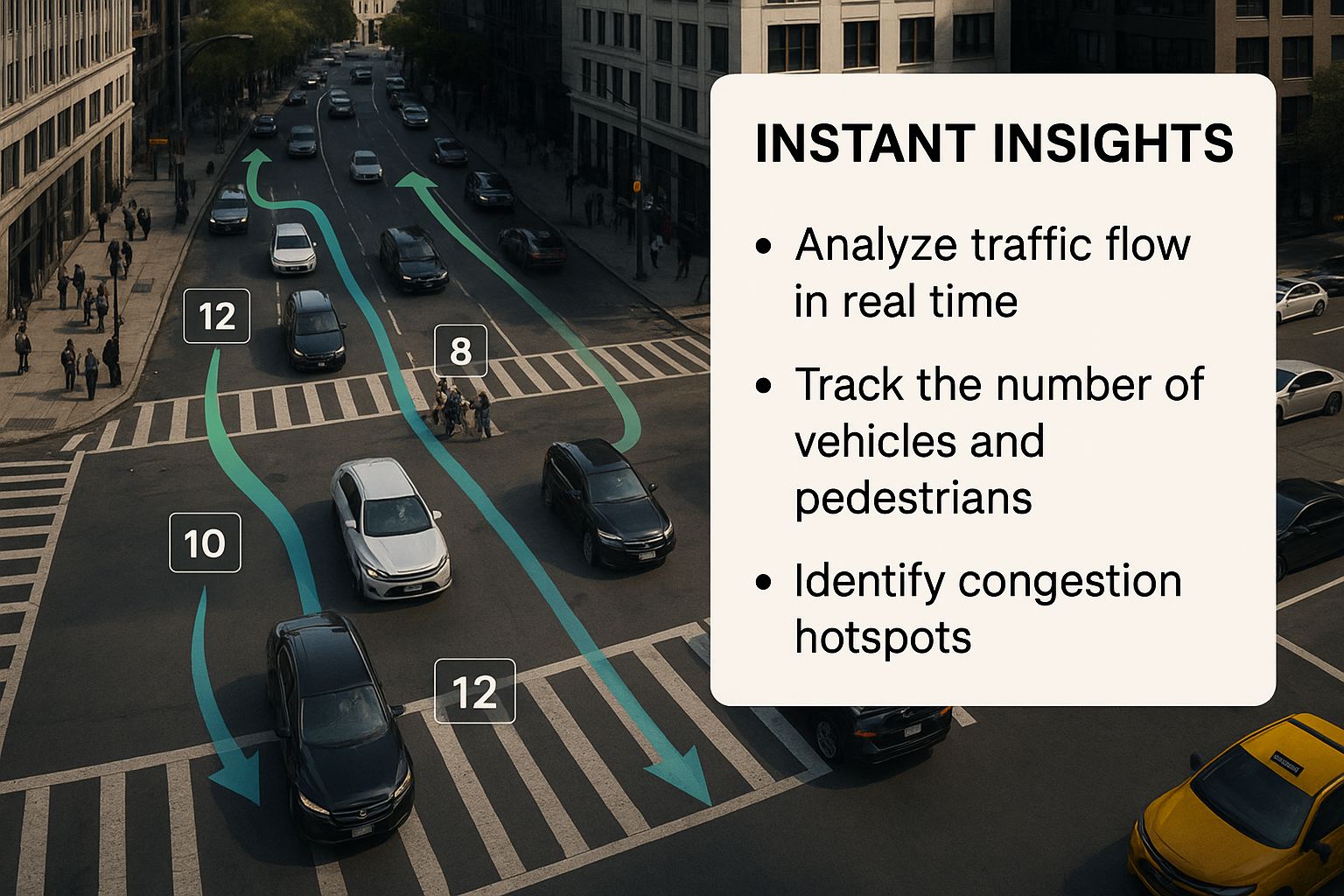

This infographic does a great job of showing how real-time data can bring order to a chaotic system, like a snarled intersection, and turn it into something that flows smoothly.

The big takeaway here is that getting insights instantly allows you to make dynamic adjustments, turning potential disasters into moments of pure efficiency.

The Growing Demand for Real-Time Analytics

This move toward instant analytics isn’t just a passing fad; it’s a huge economic driver. The global real-time analytics market was valued at a massive $51.36 billion in 2024 and is expected to balloon to $151.17 billion by 2035.

What’s fueling this? An absolute explosion of data. By 2025, the world will be creating an estimated 73 zettabytes of data every single year. Trying to make sense of that flood of information without instant processing is a recipe for falling behind. You can dig into more big data statistics to see what’s behind this incredible growth.

Understanding Batch vs Stream Processing

To really get what real-time data processing is all about, it helps to see how it differs from the old-school method: batch processing.

Think of it like this. For years, the standard way to do laundry was to let it pile up all week and then tackle it all in one giant load on Saturday. That’s batch processing in a nutshell. It’s about collecting data over a set period—maybe an hour, a day, or even a month—and then processing it all in one big, efficient go.

This approach is a workhorse for handling huge amounts of data where time isn’t the most critical factor. Many core business operations still rely on it because a bit of a delay is perfectly acceptable.

For example, you see batch processing at work in:

- End-of-day financial reports: A business totals up the day’s sales long after the doors have closed.

- Monthly payroll: All employee salaries and deductions are calculated at the end of the month.

- Nightly inventory updates: A job runs overnight to sync up stock levels across all warehouses.

In these cases, the main goal isn’t lightning speed; it’s about getting the numbers right and handling a large volume of information efficiently. The system is designed to wait, gather all the necessary data, and then run a heavy, scheduled job.

The Rise of Stream Processing

Now, let’s flip the script. Imagine you spill coffee on your shirt just minutes before a big meeting. You wouldn’t toss it in the hamper for Saturday’s laundry day, right? You’d treat that stain immediately. That’s the idea behind stream processing.

Instead of collecting and waiting, stream processing handles data event-by-event, or in tiny windows of time, often within milliseconds of it being created. It’s built for speed and immediate action, making it essential for any scenario where the value of data plummets with every passing second. The focus completely shifts from crunching massive stored datasets to analyzing data as it’s moving.

Stream processing isn’t about handling “big data” so much as it is about handling “fast data.” The priority is low-latency insight, not processing massive historical archives, which allows a business to react the moment something happens.

This kind of instant analysis is the magic behind the modern digital experiences we’ve come to expect. Things like live monitoring dashboards, instant fraud alerts from your bank, and the dynamic pricing you see on ride-sharing apps all depend on processing events on the fly. For a deeper dive, you can explore the technical details in this guide on batch processing vs real time stream processing.
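To make the contrast concrete, here is a minimal Python sketch, assuming order amounts in cents as the data and a running revenue total as the insight (both function names are hypothetical, not from any framework). The batch function needs the complete, bounded dataset before it can answer; the streaming function updates its answer as each event arrives.

```python
from typing import Iterable, Iterator


def batch_total(orders: list[int]) -> int:
    """Batch style: wait until the bounded dataset is complete, then compute once."""
    return sum(orders)


def stream_totals(orders: Iterable[int]) -> Iterator[int]:
    """Stream style: update the answer incrementally, one event at a time."""
    running = 0
    for amount in orders:
        running += amount
        yield running  # a fresh result is available after every event


day = [1999, 500, 4250]  # order amounts in cents (illustrative data)
print(batch_total(day))          # one answer, after all the data is in
print(list(stream_totals(day)))  # an answer after each event
```

In a real stream processor the input would be an unbounded source, so the generator would never finish, and the batch version would have no natural point at which to run.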

Batch Processing vs Stream Processing: Key Differences

So, how do you decide which one to use? It’s not about one being flat-out better than the other. The real question is, which one is right for the job at hand? In fact, most organizations use both, letting each play to its strengths.

The table below breaks down the fundamental differences to help you see where each model shines.

| Attribute | Batch Processing | Stream Processing |
|---|---|---|
| Data Scope | Processes large, bounded datasets from a specific time period. | Processes continuous, unbounded streams of individual events. |
| Latency | High (minutes to hours or days). Results are delayed. | Very low (milliseconds to seconds). Delivers near-instant results. |
| Data Size | Handles terabytes or petabytes of data in a single run. | Processes small packets of data (kilobytes) continuously. |
| Best For | Non-urgent, periodic tasks like payroll and billing. | Time-sensitive actions like fraud detection and personalization. |

Ultimately, choosing between them boils down to understanding your data’s lifecycle and how quickly you need to act on the insights it provides.

The Blueprints for Real-Time Data

Building a system that can process data in real time isn’t about just plugging in tools; you need a solid blueprint. Think of it like an architect designing a house. You wouldn’t use the same plan for a skyscraper as you would for a single-family home. Similarly, data engineers choose an architecture based on what they need to prioritize—be it raw speed, absolute accuracy, or the ability to handle complex logic.

These frameworks give us a structured way to think about how data flows from its source all the way to a useful insight. Let’s walk through the most common designs that power modern real-time systems. Each one strikes a different balance between getting answers right now and getting them perfectly right over time.

The Lambda Architecture

The Lambda architecture was one of the first serious attempts to solve a classic data dilemma: how do you get fast, good-enough answers now without sacrificing the perfectly accurate, comprehensive answers you’ll need later? Its solution is clever: it creates two parallel data pipelines.

Imagine two assembly lines processing the same raw materials, but for different purposes.

- The Speed Layer: This is your express lane. It uses stream processing to analyze data the moment it arrives, giving you immediate but sometimes incomplete views. It’s perfect for things like a live analytics dashboard, where a quick result is more valuable than a 100% perfect one.

- The Batch Layer: This is the meticulous, quality-control line. It stores all the raw data and runs massive batch jobs on it periodically. This creates the official, completely accurate historical record—the ultimate source of truth.

Finally, a “serving layer” merges the results from both pipelines to give the user a single, unified view. This dual-path approach gives you both speed and reliability, but it comes at a cost: you’re essentially building and maintaining two separate systems.
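A toy sketch can make the Lambda idea concrete. It assumes page-view counts as the metric and a hypothetical batch cutoff timestamp: the batch layer recomputes exact counts for everything up to the cutoff, the speed layer counts only newer events, and the serving layer adds the two views at query time. Real deployments would use separate storage and compute for each layer.

```python
from collections import Counter

# Each event is (timestamp, page); an illustrative data model, not a standard schema.
Event = tuple[int, str]


def batch_layer(events: list[Event], cutoff: int) -> Counter:
    """Exact view, recomputed from scratch over everything up to the cutoff."""
    return Counter(page for ts, page in events if ts <= cutoff)


def speed_layer(events: list[Event], cutoff: int) -> Counter:
    """Incremental view covering only events newer than the last batch run."""
    view: Counter = Counter()
    for ts, page in events:
        if ts > cutoff:
            view[page] += 1
    return view


def serving_layer(batch_view: Counter, speed_view: Counter, page: str) -> int:
    """Merge both views so the caller sees one up-to-date answer."""
    return batch_view[page] + speed_view[page]


events = [(1, "/home"), (2, "/pricing"), (3, "/home"), (10, "/home"), (11, "/pricing")]
cutoff = 5  # hypothetical: the last completed batch run covered timestamps <= 5
b = batch_layer(events, cutoff)
s = speed_layer(events, cutoff)
print(serving_layer(b, s, "/home"))  # prints 3
```

Notice that the counting logic is written twice, once per layer; that duplication is exactly the maintenance cost described above.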

The Kappa Architecture

As stream processing technology got more powerful, a simpler idea took hold: the Kappa architecture. If Lambda is two parallel assembly lines, Kappa is a single, incredibly efficient one. It gets rid of the batch layer completely. The argument is that a modern streaming system is powerful enough to do it all.

The core principle here is that all data is just a stream. If you want to analyze fresh, incoming data, you process the stream. If you need to re-calculate your entire history—maybe because you found a bug in your code—you just replay the entire historical stream through the same processing logic.

The big win with the Kappa architecture is simplicity. You only have to build, test, and maintain one set of code and one pipeline. This dramatically cuts down on the operational headache.

This streamlined model has become the go-to for many new real-time data processing projects. For a deeper dive into how these two models stack up, this comprehensive guide on the evolution from Lambda to Kappa architecture provides an excellent breakdown.
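The replay principle can be illustrated with a short sketch under simplifying assumptions: an in-memory list stands in for a durable, replayable log (such as a topic with long retention), and the `count_signups_*` functions are hypothetical processors. The same processing function serves both live and historical data, so fixing a bug means deploying new logic and rebuilding state by reading the log from the beginning.

```python
from typing import Callable

State = dict[str, int]
Processor = Callable[[State, dict], State]

# Stand-in for a durable, replayable log (e.g. a topic with long retention).
log: list[dict] = [{"type": "signup"}, {"type": "click"}, {"type": "signup"}]


def count_signups_v1(state: State, event: dict) -> State:
    # Bug: counts every event, not just sign-ups.
    state["signups"] = state.get("signups", 0) + 1
    return state


def count_signups_v2(state: State, event: dict) -> State:
    # Fixed logic: only sign-up events are counted.
    if event["type"] == "signup":
        state["signups"] = state.get("signups", 0) + 1
    return state


def replay(processor: Processor, from_offset: int = 0) -> State:
    """Rebuild state by running one processing function over the log."""
    state: State = {}
    for event in log[from_offset:]:
        state = processor(state, event)
    return state


print(replay(count_signups_v1))  # {'signups': 3}: wrong
print(replay(count_signups_v2))  # {'signups': 2}: corrected by replaying history
```

New events would flow through the same processor as they arrive, so live and recomputed results cannot drift apart the way two separate code paths can.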

Modern Event-Driven Systems

Beyond Lambda and Kappa, a much broader architectural style has emerged: event-driven architecture (EDA). Instead of thinking in rigid layers, EDA is about designing systems that react to events. An “event” is just a small record of something that happened—a customer clicked “buy,” a sensor’s temperature changed, a new user signed up.

In an event-driven world, different parts of a system communicate by producing and consuming these events. This makes the whole system incredibly flexible and resilient. For example, a single “order placed” event can trigger several different actions at once, all independently: the inventory system gets updated, the finance system processes the payment, and the shipping department gets a notification.

This highly responsive design is what makes many modern applications tick, from microservices to massive IoT platforms, where reacting instantly isn’t just a feature—it’s the entire point.
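Here is a minimal in-process event bus that mirrors the “order placed” example. It is purely illustrative: the `EventBus` class and the handler actions are hypothetical, handlers run synchronously in one process, and in a real deployment the bus would be a broker such as Kafka with each handler living in its own service.

```python
from collections import defaultdict
from typing import Callable

Handler = Callable[[dict], None]


class EventBus:
    def __init__(self) -> None:
        self._subscribers: dict[str, list[Handler]] = defaultdict(list)

    def subscribe(self, event_type: str, handler: Handler) -> None:
        self._subscribers[event_type].append(handler)

    def publish(self, event_type: str, payload: dict) -> None:
        # The producer does not know which consumers react to the event.
        for handler in self._subscribers[event_type]:
            handler(payload)


actions: list[str] = []
bus = EventBus()
bus.subscribe("order_placed", lambda o: actions.append(f"inventory: reserve {o['sku']}"))
bus.subscribe("order_placed", lambda o: actions.append(f"finance: charge {o['amount']}"))
bus.subscribe("order_placed", lambda o: actions.append(f"shipping: notify for {o['id']}"))

bus.publish("order_placed", {"id": "A-1", "sku": "WIDGET", "amount": 25})
print(actions)
```

Adding a new reaction (say, a loyalty-points service) means adding one more subscriber; the code that publishes the order does not change.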

The Technology Powering Instant Insights

Behind every seamless real-time experience, from the fraud alert that saves your account to the live delivery tracker on your phone, there’s a sophisticated stack of technologies working together. Building a system for real-time data processing is a lot like assembling a high-performance engine; every single component has a distinct and vital role.

To really get a grip on how it all works, we can break down the core technology stack into three key functions: ingestion, processing, and serving.

Think of it as a digital factory. Raw data arrives at the loading dock (ingestion), moves through an intelligent assembly line to be analyzed (processing), and is then stored in a warehouse for immediate access (serving). Each stage needs specialized tools designed for speed and reliability.

Message Queues: The Central Nervous System

The first hurdle in any real-time system is capturing massive, relentless streams of data as they’re generated. That’s the job of a message queue. It acts as the system’s central nervous system or, sticking with our factory analogy, its loading dock.

A message queue provides a durable buffer, making sure no data gets lost even if downstream systems get bogged down or temporarily fail.

Imagine thousands of people trying to rush into a sold-out concert all at once. Instead of a single, chaotic entrance, organized lines (queues) let everyone get inside smoothly without overwhelming the staff. Message queues do the exact same thing for data.

Popular choices here include:

- Apache Kafka: The de facto industry standard for high-throughput, fault-tolerant event streaming. Strictly speaking, it is a distributed, replicated log rather than a classic queue, and large deployments handle trillions of events a day.

- Amazon Kinesis: A fully managed service on AWS that makes it incredibly easy to collect, process, and analyze real-time streaming data.

- Google Cloud Pub/Sub: A scalable and reliable messaging service that lets you send and receive messages between independent applications.

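These tools ingest data from countless sources, like website clicks, IoT sensors, or mobile app interactions, and organize it into streams, ready for the next step. Ordering is typically guaranteed within a partition or shard (or, in Pub/Sub, per ordering key), not across the whole stream.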
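A toy partitioned log shows how these tools buffer events and keep them in order. This is a simplified model, not Kafka’s implementation: events are routed to a partition by hashing their key (here with `zlib.crc32`, an arbitrary choice; Kafka’s default partitioner uses a different hash), each partition is append-only, and consumers read from an offset they track themselves.

```python
import zlib


class PartitionedLog:
    """Toy model of a partitioned, append-only event log (not Kafka's actual code)."""

    def __init__(self, num_partitions: int) -> None:
        self.partitions: list[list[tuple[str, str]]] = [[] for _ in range(num_partitions)]

    def partition_for(self, key: str) -> int:
        # Deterministic hash so the same key always maps to the same partition.
        return zlib.crc32(key.encode()) % len(self.partitions)

    def append(self, key: str, value: str) -> tuple[int, int]:
        """Append an event and return its (partition, offset)."""
        p = self.partition_for(key)
        self.partitions[p].append((key, value))
        return p, len(self.partitions[p]) - 1

    def read(self, partition: int, offset: int, max_records: int = 100) -> list[tuple[str, str]]:
        """Consumers pull from an offset they track; records are not deleted on read."""
        return self.partitions[partition][offset:offset + max_records]


log = PartitionedLog(num_partitions=3)
for key, value in [("user-42", "home"), ("user-7", "home"), ("user-42", "search"), ("user-42", "cart")]:
    log.append(key, value)

p = log.partition_for("user-42")
records = log.read(p, offset=0)
print([v for k, v in records if k == "user-42"])  # ['home', 'search', 'cart']
```

Because a consumer only advances its offset, a slow consumer simply falls behind and catches up later while the data waits safely in the log.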

Stream Processing Frameworks: The Brains of the Operation

Once data is flowing into the message queue, the next step is to make sense of it on the fly. This is where stream processing frameworks come in. They are the brains of the real-time pipeline, performing complex calculations, transformations, and analysis on data as it moves.

This isn’t about querying a static database. It’s about applying logic to a continuous, unending river of information. The framework lets you ask questions like, “Is this credit card transaction’s value more than three standard deviations from the user’s average?” or “How many users are adding this specific product to their cart right now?”

The real magic of a stream processor is its ability to maintain “state.” It can remember past events to provide context for new ones, which is what enables sophisticated operations like tracking a user’s entire session or spotting complex event patterns over time.
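Here is a minimal sketch of the “three standard deviations” question posed above, assuming a single process with in-memory state (a framework such as Flink would keep this state per key and checkpoint it). It keeps a running mean and variance per user with Welford’s online algorithm, so no transaction history has to be stored. The `MIN_HISTORY` threshold is an arbitrary assumption.

```python
import math
from dataclasses import dataclass


@dataclass
class RunningStats:
    """Welford's online algorithm: constant memory per user."""
    n: int = 0
    mean: float = 0.0
    m2: float = 0.0  # sum of squared deviations from the mean

    def update(self, x: float) -> None:
        self.n += 1
        delta = x - self.mean
        self.mean += delta / self.n
        self.m2 += delta * (x - self.mean)

    def stddev(self) -> float:
        # Sample standard deviation; undefined (0.0 here) with fewer than 2 points.
        return math.sqrt(self.m2 / (self.n - 1)) if self.n > 1 else 0.0


MIN_HISTORY = 5  # assumption: don't judge users with too little history
state: dict[str, RunningStats] = {}


def is_anomalous(user: str, amount: float) -> bool:
    stats = state.setdefault(user, RunningStats())
    sd = stats.stddev()
    flagged = stats.n >= MIN_HISTORY and sd > 0 and abs(amount - stats.mean) > 3 * sd
    # Remember this event as context for the next one. A real system might
    # exclude confirmed fraud from the baseline.
    stats.update(amount)
    return flagged


print([is_anomalous("alice", x) for x in [20, 25, 22, 18, 24, 23]])  # all False
print(is_anomalous("alice", 500))  # True
```

The remembered mean and variance are the “state”: each new transaction is judged against everything the processor has seen for that user so far.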

Leading stream processing frameworks include:

- Apache Flink: Known for its incredibly low latency and powerful state management capabilities, making it the go-to for complex event processing. You can learn more by checking out our guide on managed Flink solutions.

- Apache Spark Structured Streaming: The streaming engine of the popular Spark framework (the successor to the older Spark Streaming API). By default it processes data in small micro-batches, offering a great balance between throughput and latency.

The demand for these capabilities is exploding. The real-time analytics software segment now dominates the broader data analytics market, capturing a 67.8% share in 2024. This trend is fueled by the geyser of data from IoT and social media, pushing the total data analytics market from $50.04 billion in 2024 toward a projected $658.64 billion by 2034. You can see the full breakdown in this data analytics market research.

Real-Time Databases: The High-Speed Memory

After the data has been processed, the fresh insights need to be stored somewhere they can be queried instantly. A traditional transactional database usually won’t cut it: it isn’t designed for fast analytical queries over data that is constantly arriving. Real-time databases, also known as real-time analytical processing (RTAP) databases, are built specifically to serve queries on fresh data with sub-second latency.

They act as the system’s lightning-fast memory, making the results of your real-time data processing immediately available to applications and dashboards. These databases are optimized for fast writes and even faster reads, allowing thousands of concurrent users to get up-to-the-millisecond information.

Key players in this space are:

- Apache Druid: A high-performance, column-oriented database designed for real-time analytics on huge datasets.

- Apache Pinot: Originally developed at LinkedIn to power real-time analytics dashboards, it’s famous for its ultra-low query latency.

- ClickHouse: An open-source columnar database that is exceptionally fast for analytical queries.

By combining these three components—a message queue for ingestion, a stream processor for analysis, and a real-time database for serving—organizations build robust, end-to-end pipelines that turn raw data into immediate, actionable insights.

How Real-Time Processing Actually Drives Business Success

It’s one thing to understand the theories and architectures behind real-time data processing, but seeing it in the wild is where its power truly clicks. The ability to act on data in the blink of an eye isn’t just a technical achievement; it’s creating real, measurable business outcomes that were pure science fiction not too long ago.

So, let’s move beyond the abstract and look at how smart companies are using this technology to get ahead. These examples draw a straight line from instant data insights to bottom-line results.

Preventing Fraud in Financial Services

Think about a credit card company processing thousands of transactions every single second. With an old-school batch system, they might catch a fraudulent purchase hours after it happened. By then, the money is gone, and the damage is done. Real-time processing flips this model completely on its head.

When you swipe your card, a stream processing system kicks into gear, instantly comparing that single transaction against a rich history of your spending patterns. In milliseconds, it asks critical questions:

- Location: Are you suddenly buying something in a city you’ve never been to?

- Amount: Is this purchase way larger than your typical spending?

- Frequency: Are there multiple transactions happening suspiciously fast in different places?

If the system’s algorithm flags the transaction as a high risk, it gets blocked before it goes through. This isn’t just about damage control; it’s about prevention. This proactive stance saves both customers and the company a fortune every year.
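The three questions can be expressed as simple rules. The sketch below is a hypothetical rule-based scorer, not how any particular bank works; production systems combine many more signals, often with machine-learning models. The thresholds (5x the user’s average amount, a transaction in a different city within 10 minutes) and the “block when two rules fire” policy are illustrative assumptions.

```python
from dataclasses import dataclass, field


@dataclass
class Txn:
    user: str
    amount: float
    city: str
    ts: int  # seconds since epoch


@dataclass
class Profile:
    known_cities: set[str] = field(default_factory=set)
    total: float = 0.0
    count: int = 0
    recent: list[Txn] = field(default_factory=list)


AMOUNT_MULTIPLIER = 5.0  # assumption
VELOCITY_WINDOW_S = 600  # assumption: 10 minutes


def risk_reasons(p: Profile, t: Txn) -> list[str]:
    reasons = []
    if p.known_cities and t.city not in p.known_cities:
        reasons.append("location")
    if p.count and t.amount > AMOUNT_MULTIPLIER * (p.total / p.count):
        reasons.append("amount")
    if any(t.ts - r.ts <= VELOCITY_WINDOW_S and r.city != t.city for r in p.recent):
        reasons.append("frequency")
    return reasons


def check(profiles: dict[str, Profile], t: Txn) -> bool:
    """Return True if the transaction should be blocked; learn only from approved ones."""
    p = profiles.setdefault(t.user, Profile())
    blocked = len(risk_reasons(p, t)) >= 2  # assumption: block when two rules fire
    if not blocked:
        p.known_cities.add(t.city)
        p.total += t.amount
        p.count += 1
        p.recent = [r for r in p.recent if t.ts - r.ts <= VELOCITY_WINDOW_S] + [t]
    return blocked


profiles: dict[str, Profile] = {}
print(check(profiles, Txn("u1", 40, "Boston", 0)))       # False
print(check(profiles, Txn("u1", 60, "Boston", 3600)))    # False
print(check(profiles, Txn("u1", 900, "Lisbon", 3700)))   # True
```

Each decision reads and updates a small per-user profile, which is why stream processors with fast state access fit this job.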

Powering Personalization in E-commerce

Ever feel like an e-commerce site is reading your mind? That’s not a coincidence. It’s real-time data processing doing its job. As you click around a website, every single action—a search, a product view, even just hovering over an image—creates a tiny data point.

These “events” are immediately fed into a streaming engine that’s constantly refining your profile. This allows the site to serve up personalized recommendations almost instantly. For example, if you look at a couple of different hiking boots, the recommendation engine doesn’t wait. It immediately starts suggesting related gear like wool socks or waterproof jackets right on your homepage.

This instant feedback loop is what makes the experience feel so relevant. By reacting to what a user is doing right now, retailers can boost engagement, lift conversion rates, and increase the average order value.
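A deliberately simple sketch of that feedback loop follows. It assumes a hypothetical hand-built `RELATED` mapping from product category to complementary gear (real recommenders usually learn such associations from data). Each view event updates the session immediately, and suggestions are recomputed from the most recent views only.

```python
from collections import Counter, defaultdict, deque

# Hypothetical catalog knowledge: which items complement which category.
RELATED = {
    "hiking_boots": ["wool_socks", "waterproof_jacket", "trekking_poles"],
    "tent": ["sleeping_bag", "headlamp"],
}

RECENT_VIEWS = 5  # only the last few actions shape the suggestions
sessions: dict[str, deque] = defaultdict(lambda: deque(maxlen=RECENT_VIEWS))


def on_view(session_id: str, category: str) -> None:
    """Called for every product-view event as it streams in."""
    sessions[session_id].append(category)


def recommend(session_id: str, k: int = 3) -> list[str]:
    """Rank complementary items by how often their category was viewed recently."""
    scores: Counter = Counter()
    for category in sessions[session_id]:
        for item in RELATED.get(category, []):
            scores[item] += 1
    return [item for item, _ in scores.most_common(k)]


on_view("s1", "hiking_boots")
on_view("s1", "hiking_boots")
print(recommend("s1"))  # ['wool_socks', 'waterproof_jacket', 'trekking_poles']
```

Because old views fall out of the bounded window, the suggestions shift as soon as the shopper’s interest does.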

Optimizing Global Logistics

For a global shipping company, every minute and every gallon of fuel counts. Real-time data from fleet tracking is the key to optimizing everything on the fly. Delivery trucks are now packed with IoT sensors streaming a constant flow of information—location, speed, fuel levels, even engine health.

This data firehose lets a central system monitor the entire fleet against live traffic, weather alerts, and unexpected road closures. If a sudden accident creates a massive jam, the system can automatically reroute drivers to a faster path. This saves time and fuel while ensuring packages still arrive on schedule.

This isn’t just about cutting operational costs; it’s also about keeping customers happy with more reliable delivery estimates. It’s no wonder that mature real-time implementations deliver an average return on investment (ROI) of 295% over three years. This trend is only accelerating, with the number of connected IoT devices projected to grow from 18.8 billion in 2024 to 40 billion by 2030. You can dig deeper into the growth rates of real-time data integration to see just how significant this impact is.
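The rerouting step can be sketched as a shortest-path problem over a road graph whose edge weights are current travel times. Everything here is illustrative: the graph, node names and minute values are made up, and a live system would update weights continuously from traffic feeds. The sketch uses Dijkstra’s algorithm with Python’s `heapq`; when an accident report inflates one road’s travel time, recomputing the route picks a faster path.

```python
import heapq


def fastest_route(graph: dict[str, dict[str, float]], start: str, goal: str) -> tuple[float, list[str]]:
    """Dijkstra's algorithm over travel times in minutes."""
    queue = [(0.0, start, [start])]
    seen: set[str] = set()
    while queue:
        cost, node, path = heapq.heappop(queue)
        if node == goal:
            return cost, path
        if node in seen:
            continue
        seen.add(node)
        for nxt, minutes in graph[node].items():
            if nxt not in seen:
                heapq.heappush(queue, (cost + minutes, nxt, path + [nxt]))
    return float("inf"), []  # goal unreachable


roads = {
    "depot": {"highway": 10, "main_st": 15},
    "highway": {"customer": 5},
    "main_st": {"customer": 8},
    "customer": {},
}
print(fastest_route(roads, "depot", "customer"))  # (15.0, ['depot', 'highway', 'customer'])

# A live traffic event reports an accident: the highway leg now takes 40 minutes.
roads["highway"]["customer"] = 40
print(fastest_route(roads, "depot", "customer"))  # (23.0, ['depot', 'main_st', 'customer'])
```

In practice the expensive part is keeping the weights fresh; the streaming pipeline feeds traffic events into the graph, and routes are recomputed only for affected vehicles.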

Navigating the Rough Waters of Implementation

Jumping into real-time data processing is exciting, and the payoff can be huge. But let’s be honest—the road from a great idea to a fully functioning, reliable system is rarely smooth. It’s one thing to see the potential, but quite another to build and maintain these powerful, always-on systems.

Knowing the common pitfalls before you start is half the battle. Most of the headaches you’ll encounter will boil down to three things: keeping your data straight, building a system that won’t fall over, and dealing with the real-world costs and people needed to run it.

Keeping Your Data Clean and Consistent

In a real-time system, data is zipping between different services all the time. The biggest challenge? Making sure every part of your system agrees on what the truth is at any given moment. This is what we call data consistency, and it’s tougher than it sounds.

Think about an e-commerce inventory system. If one part of the system says you have 10 widgets left, but another part just processed a sale and knows you only have 9, you’ve got a problem. A customer might buy an item that’s already gone. This gets even messier when events arrive out of order or you accidentally process the same transaction twice, leading to completely wrong analytics and bad business decisions.

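The holy grail in many streaming systems is achieving “exactly-once” processing. This means the effect of every event is applied one time, no more, no less, even if the event itself is delivered more than once after a failure. In practice, this is usually achieved by combining retries with checkpoints, transactions, or idempotent (deduplicated) writes. It’s absolutely critical for things like financial ledgers or inventory counts, where dropped or duplicated data can cause chaos.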
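One common building block is an idempotent consumer. The sketch below assumes each event carries a unique `event_id` (a hypothetical field) and that the broker may redeliver events after a failure. Remembering processed IDs means a duplicate delivery does not decrement stock twice. In a real system, the stock update and the record of processed IDs would have to be committed atomically (for example, in one database transaction), and the set of IDs would need bounding; this in-memory version only shows the logic.

```python
class InventoryConsumer:
    def __init__(self, stock: dict[str, int]) -> None:
        self.stock = stock
        self.processed: set[str] = set()  # IDs of events already applied

    def handle(self, event: dict) -> bool:
        """Apply a sale once; return False if it was a duplicate delivery."""
        if event["event_id"] in self.processed:
            return False
        sku, qty = event["sku"], event["qty"]
        if self.stock.get(sku, 0) < qty:
            raise ValueError(f"insufficient stock for {sku}")
        self.stock[sku] -= qty
        self.processed.add(event["event_id"])
        return True


consumer = InventoryConsumer({"widget": 10})
sale = {"event_id": "evt-001", "sku": "widget", "qty": 1}
consumer.handle(sale)
consumer.handle(sale)  # redelivered after a network blip: ignored
print(consumer.stock["widget"])  # 9, not 8
```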

Building a System That Can Take a Punch

Real-time systems don’t get to take a break. They have to run 24/7, which means they need to be incredibly resilient. This is known as fault tolerance. What happens when a server dies or a network connection blips out? A well-built system has to handle these hiccups without losing data or grinding to a halt.

So, how do you build for this kind of resilience? It comes down to a few key tactics:

- Redundancy: Always have a backup. Run duplicate components so if one fails, another is ready to take its place instantly.

- State Management: Continuously save your work. By periodically checkpointing the state of processing jobs, together with their position in the input stream, a system can restart from the last checkpoint after a crash and replay only the events that arrived since then.

- Backpressure Handling: Don’t let the system drown in data. You need a way to manage surges when data arrives faster than it can be processed, preventing overloads that could bring everything down.
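Checkpointing can be sketched in a simplified, single-process model with hypothetical names: every few events, the job snapshots both its state and the log offset it has reached (Flink, for instance, takes distributed snapshots to durable storage). After a crash, the job restores the last checkpoint and re-reads the log from the saved offset. Because offset and state are restored together, the replayed events are not counted twice.

```python
import copy
from dataclasses import dataclass, field
from typing import Optional


@dataclass
class Checkpoint:
    offset: int = 0
    state: dict[str, int] = field(default_factory=dict)


class CountingJob:
    """Counts words from a replayable log, checkpointing offset and state together."""

    def __init__(self, log: list[str], every: int = 2) -> None:
        self.log = log
        self.every = every         # checkpoint interval in events (an assumption)
        self.saved = Checkpoint()  # stands in for durable checkpoint storage
        self.restore()

    def restore(self) -> None:
        """Resume from the last checkpoint, e.g. after a crash."""
        self.offset = self.saved.offset
        self.state = copy.deepcopy(self.saved.state)

    def run(self, max_events: Optional[int] = None) -> None:
        processed = 0
        while self.offset < len(self.log) and (max_events is None or processed < max_events):
            word = self.log[self.offset]
            self.state[word] = self.state.get(word, 0) + 1
            self.offset += 1
            processed += 1
            if self.offset % self.every == 0:
                # Snapshot offset and state together so they always agree.
                self.saved = Checkpoint(self.offset, copy.deepcopy(self.state))


job = CountingJob(["a", "b", "a", "c", "a"], every=2)
job.run(max_events=3)  # checkpoint at offset 2, then one more event held only in memory
job.restore()          # simulated crash: progress since the checkpoint is lost
job.run()              # replays from offset 2 to the end
print(job.state)       # {'a': 3, 'b': 1, 'c': 1}, same as an uninterrupted run
```

The checkpoint interval is a trade-off: frequent snapshots cost throughput, while rare ones mean more events to replay after a failure.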

The Hidden Costs: Money and People

Finally, we need to talk about the operational side of things. Real-time systems are high-maintenance. They aren’t something you can just set up and walk away from. They require constant monitoring and tweaking to keep them running smoothly, and that all adds up to real operational costs. The powerful infrastructure needed for this kind of work doesn’t come cheap, either.

On top of that, there’s a very real skills gap. Finding engineers who are true experts in real-time data processing and know their way around tools like Apache Kafka, Apache Flink, and Apache Druid is tough. They are in high demand, and that scarcity can slow you down and drive up costs. Getting real-time right means you have to invest just as much in your people as you do in your technology.

Answering Your Real-Time Data Questions

As you start digging into the world of instant data, a few common questions always seem to surface. Getting straight answers is the first step to figuring out how real-time data processing can actually work for you. Let’s tackle some of the most frequent ones to clear up any confusion.

Having this practical knowledge will help you make much smarter decisions, whether you’re just kicking around the idea of a real-time pipeline or you’re already deep in the planning stages.

Distinguishing Real Time from Near Real Time

People often throw around “real time” and “near real time” as if they mean the same thing, but they really don’t. The critical difference comes down to latency: the gap between an event happening and you being able to do something about it.

- True Real Time: This is about processing within strict, guaranteed deadlines, often measured in milliseconds or even microseconds. The classic example is a car’s airbag system; it has to react instantly, with no time for a second thought.

- Near Real Time: This is for tasks that are incredibly fast but can tolerate a small, acceptable delay, usually a few seconds up to a minute. Think of a live sports scoreboard that refreshes every 30 seconds. It feels instant to the fan, but there’s a slight lag.

The right choice always comes back to your specific needs. For high-frequency stock trading, every millisecond counts. But for a customer support dashboard showing new tickets, a few seconds’ delay is perfectly fine and a lot more practical to build.

How to Choose the Right Technology

Picking the right tools for a real-time project can feel like a huge task, but you can cut through the noise by focusing on a few core factors. There’s no magic “best” technology; the right stack is the one that solves your problem within your budget and plays to your team’s strengths.

Start by asking these questions:

- What’s your data volume and velocity? Are you dealing with a trickle of a few hundred events a minute, or a firehose of millions per second? A tool like Apache Kafka is built for that firehose, while a simpler message queue might be all you need for smaller loads.

- What does your team already know? If your engineers live and breathe Java, a framework like Apache Flink is a natural choice. If they’re more comfortable with Python or SQL, favor tools with first-class support for those languages (Flink itself offers Flink SQL and a Python API, PyFlink); pushing them toward an unfamiliar ecosystem might just slow you down.

- What problem are you truly solving? Building a simple real-time dashboard for analytics is a world away from creating a fraud detection system. The latter requires sophisticated tools that can manage complex state, while the former might not.

Your First Steps to Building a Pipeline

Jumping into real-time data processing doesn’t require a massive, multi-year commitment. The smartest way to begin is by starting small. Build a proof of concept (PoC) that delivers real value quickly and lets your team learn by doing.

A simple three-step plan can get you off the ground:

- Identify a Valuable Data Source: Don’t try to boil the ocean. Pick one stream of data that has immediate business value, like website clickstream events or user sign-ups.

- Define a Clear Goal: What specific insight are you chasing? Maybe you want to track user engagement on a new feature in real time or get instant alerts for application errors as they happen.

- Use a Managed Service: Instead of building your entire infrastructure from scratch, lean on a cloud platform. Using a managed service for Kafka or Flink can slash the initial setup complexity and cost.

This approach lets you prove the power of real-time insights without having to ask for a huge upfront investment.
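As an example of a small first goal, here is a hypothetical “instant alerts for application errors” check. It assumes error events arrive with timestamps in seconds, roughly in time order. It keeps a sliding 60-second window in a `deque` and alerts when the count reaches a threshold; both numbers are placeholders to tune for your own system.

```python
from collections import deque


class ErrorRateAlert:
    def __init__(self, window_s: int = 60, threshold: int = 5) -> None:
        self.window_s = window_s
        self.threshold = threshold
        self.timestamps: deque = deque()  # error times inside the current window

    def on_error(self, ts: float) -> bool:
        """Record an error event; return True if the window is at or over threshold."""
        self.timestamps.append(ts)
        # Evict events that have slid out of the (ts - window_s, ts] window.
        while self.timestamps and self.timestamps[0] <= ts - self.window_s:
            self.timestamps.popleft()
        return len(self.timestamps) >= self.threshold


alert = ErrorRateAlert(window_s=60, threshold=3)
print([alert.on_error(t) for t in (0, 10, 20, 75, 76)])  # [False, False, True, False, True]
```

Logic this small can run in a simple consumer attached to a managed Kafka topic, which keeps the proof of concept focused on the insight rather than the infrastructure.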

Ready to build your own real-time data pipelines without the complexity? Streamkap uses Change Data Capture (CDC) to stream data from your databases to your warehouse in milliseconds. Replace your slow batch jobs and start making decisions on fresh, accurate data.