Batch Processing vs Real-Time Stream Processing

There is a big movement underway from batch ETL to real-time streaming ETL, but what does that mean, and how do the two methods compare? While real-time data streaming has many advantages over batch processing, it is not the right choice for every use case, so let’s take a look at both in detail.

What is Batch Data Processing?

As the name suggests, batch data processing involves the processing of data in batches. This means that rather than processing one row or event at a time, a group or collection of data is processed together. This is usually carried out at regular intervals, such as every 15 minutes, hourly, or daily.

Batch Processing:

- Processes more than one row or event at a time

- Will process data on a scheduled basis instead of in real-time

- Is usually pull-based, i.e. a system pulls records from the data sources before sending a copy to the destination

- Can be cost-effective for moving large volumes of data. It may, however, incur other costs and lost opportunities because the data is out of date between runs.

- Running batches at shorter intervals, and thus with smaller batches, is often called micro-batching, but this is still not real-time stream processing.
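The scheduled, grouped pattern described above can be sketched in a few lines of Python. This is only an illustration under stated assumptions: the `batches` and `process_batch` helpers, the `amount` field, and the batch size of 3 are all hypothetical, and a real pipeline would pull rows from a source system on a schedule rather than from an in-memory list.

```python
from itertools import islice
from typing import Iterable, Iterator, List


def batches(records: Iterable[dict], size: int) -> Iterator[List[dict]]:
    """Yield successive lists of up to `size` records (hypothetical helper)."""
    it = iter(records)
    while True:
        chunk = list(islice(it, size))
        if not chunk:
            return
        yield chunk


def process_batch(chunk: List[dict]) -> float:
    """Hypothetical transform: total the `amount` field of a whole batch."""
    return sum(row["amount"] for row in chunk)


# Stand-in for rows pulled from a source at a scheduled run.
rows = [{"id": i, "amount": float(i)} for i in range(1, 8)]

# Each group of rows is handled as a unit, not row by row.
totals = [process_batch(chunk) for chunk in batches(rows, size=3)]
print(totals)  # [6.0, 15.0, 7.0]
```

Shrinking `size` or running the job more often moves this toward micro-batching, but each row still waits for its batch to be assembled before it is processed.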

Batch Processing Use Cases

Typical use cases and applications for batch processing:

- Historical analysis: Can be used to generate reports regularly, such as monthly financial statements or weekly sales reports.

- Data backups: Backups usually occur at regular intervals and allow recovery to the point in time at which the backup ran.

- ETL (extract, transform, load) processes: Batch processing has been the primary method for all ETL pipelines until recently. The movement to ELT (extract, load, transform) from ETL led to batch transformations occurring within the database using products such as dbt.

- Data mining: Batch processing can be used to analyze large datasets to discover insights that may not be visible from individual data points.

What is Real-Time Stream Processing?

Stream processing refers to the real-time processing of data streams as they are continuously generated or received. This means that data within the stream is processed one row or event at a time.

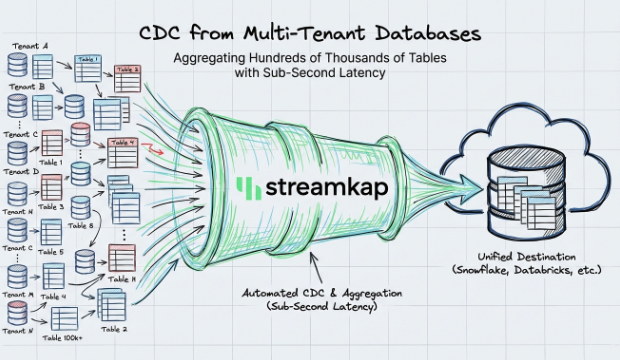

Above is a diagram showing a high availability setup processing continuous data.

Real-time processing:

- Processes one row or event at a time

- Will process data continuously. There are no schedules and thus no scheduling-related timing issues

- Usually involves both push and pull methods, with producers pushing data into the stream and consumers pulling data from it

- Is now the de facto approach with database sources, log files, and event data. APIs are slowly migrating to real-time.

- Other terms you may hear include log-based replication, real-time ETL, real-time replication, event-driven ETL, and real-time change data capture
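As a rough illustration of the producer and consumer roles listed above, the sketch below uses Python's standard `queue` and `threading` modules as a stand-in for a real streaming platform. The event shape, the `SENTINEL` shutdown marker, and the "add one" transform are assumptions made for the example only.

```python
import queue
import threading

events = queue.Queue()   # in-memory stand-in for a stream or topic
SENTINEL = None          # hypothetical marker telling the consumer to stop
processed = []


def producer(n: int) -> None:
    """Push events into the stream one at a time, as they are 'generated'."""
    for i in range(n):
        events.put({"seq": i, "value": i * 10})
    events.put(SENTINEL)


def consumer() -> None:
    """Pull events and handle each one as soon as it is available."""
    while True:
        event = events.get()
        if event is SENTINEL:
            break
        processed.append(event["value"] + 1)  # hypothetical per-event transform


t_cons = threading.Thread(target=consumer)
t_prod = threading.Thread(target=producer, args=(5,))
t_cons.start()
t_prod.start()
t_prod.join()
t_cons.join()

print(processed)  # [1, 11, 21, 31, 41]
```

There is no schedule here: the consumer blocks on `events.get()` and reacts to each event as it arrives, which is the key difference from the batch pattern.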

Streaming Data Processing Use Cases

Common use cases for real-time applications:

- Data apps: Anything from recommendation engines to sensor analysis or logistics management. These are often very subtle and are part of a wider application. For example, the Uber taxi booking app may contain several data apps, such as the fare calculator, the location of the taxi, and the time to arrive.

- Customer apps: Providing real-time experiences to your customers, such as real-time dashboards, segmentation, and marketing tools.

- Log processing: Logs often produce vast amounts of information, which are difficult to read and act upon. Performing real-time ETL (extract, transform, load) upon those logs allows you to make instant use of the data generated.

- Personalization: Our day-to-day lives are shifting far beyond just the traditional e-commerce recommendation engines, and becoming more tailored than ever before with advanced segmentation. For example, Garmin can provide coaching during your sports races with guidance dependent upon the terrain, exertion, current energy levels, and goals of the race while Zoe provides real-time nutrition recommendations based upon your glucose levels monitored in real-time.

- Advertising & Marketing: With a myriad of marketing channels available, it can be difficult to distinguish yourself from the competition. Fortunately, real-time advertising and marketing provide an opportunity for you to engage prospects with relevant messages on the most effective platform at just the right moment - all while driving down costs and boosting your ROI.

- Fraud Detection: Fraudulent activity can be identified and prevented as it occurs by analyzing data such as financial transactions, login attempts, and customer behavior for patterns that may indicate fraud. One approach is to train machine learning algorithms on large datasets of past fraudulent activity and then use them to analyze new data in real-time.

- Cyber Security: The gold standard of response times in cyber security is 1 minute to detect an intrusion, 10 minutes to investigate, and 1 hour to remediate the issue. This is called the 1:10:60 rule. Meeting it without real-time processing of log files is nearly impossible, and it remains difficult even with it. Automated systems utilizing data apps will be the future to meet and indeed beat these times.

- Sensors: Internet of Things (IoT) devices are currently being used by the freight industry to track cargo in real-time, as well as in hotels for a variety of devices from entryways to heaters. Smart cars are also transmitting data back to manufacturers, and governments are taking advantage of IoT technology to guarantee our access to safe water supplies.

- Indexes/Cache: Indexes and caches are often updated as a batch process, i.e. a script runs to update them. This can cause service interruptions and leave many applications running sub-optimally between updates. Moving to real-time incremental updates will keep the customer’s experience optimal and also likely save cost.

- Dashboards: Moving your datasets and dashboards to real-time analytics can only improve the outcomes for which these dashboards were built. It’s a simple first step to switch from batch ETL to a streaming ETL process by loading data into your data warehouse(s) or data lake(s) in real-time.

- High Availability: Backups are often performed in batch, i.e. once per day or hourly. Keeping another system up to date in real-time can enable instant failover as well as recovery to a precise point in time.

- Preference Updates: Your customers are continually changing their preferences, whether that is their address, phone number, email, or marketing preferences. If these changes are not acted upon and processed immediately, there is a risk of fraud, lost sales opportunities, or a regulatory breach.
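To make the Indexes/Cache item above more concrete, here is a minimal sketch of applying change events to an in-memory cache one at a time instead of rebuilding it in a scheduled job. The event format (`op`, `key`, `row`) and the `apply_change` function are hypothetical and not taken from any particular product.

```python
from typing import Dict


def apply_change(cache: Dict[str, dict], event: dict) -> None:
    """Apply a single change event to the cache (hypothetical event shape)."""
    op, key = event["op"], event["key"]
    if op in ("insert", "update"):
        cache[key] = event["row"]
    elif op == "delete":
        cache.pop(key, None)  # ignore deletes for keys we never cached
    else:
        raise ValueError(f"unknown op: {op!r}")


cache: Dict[str, dict] = {}

# Stand-in for a stream of changes captured from a source database.
change_stream = [
    {"op": "insert", "key": "u1", "row": {"email": "a@example.com"}},
    {"op": "insert", "key": "u2", "row": {"email": "b@example.com"}},
    {"op": "update", "key": "u1", "row": {"email": "a2@example.com"}},
    {"op": "delete", "key": "u2"},
]

for event in change_stream:
    apply_change(cache, event)

print(cache)  # {'u1': {'email': 'a2@example.com'}}
```

Because each event touches only one key, the cache stays current between what would otherwise be full refreshes, and there is no window in which it has to be taken offline and rebuilt.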

Advantages & Disadvantages of Batch Processing

Advantages:

- Cost: Batch processing continues to be a low-cost option (depending upon the vendor you use).

- Efficiency: Batch processing is an efficient approach to handling a large volume of data in one go.

- Flexibility: It is possible to schedule the batch processing of data at a suitable time, including during off-peak hours.

Disadvantages:

- Latency: The biggest disadvantage of batch processing is the latency between the time the data is created and the time it’s processed and ultimately used. Batches often run daily or every few hours, with costs increasing for intervals under 1 hour. This is why batch data processing is often referred to as working with stale data.

- Complexity: It’s often overlooked but working with batch processing often involves many systems & steps to set up and manage. Products may be cheap but the labor is not.

- Lack of transparency: Taking batch ETL as an example, the lack of transparency in the process for users can make it difficult to identify and resolve bugs.

Advantages & Disadvantages of Stream Processing

Advantages:

- Low latency: The data is processed as soon as it is received, resulting in sub-second processing, down to levels such as 40ms. This is useful where fresh data is required or a competitive advantage is sought.

- Scalability: Stream processing systems have been built to handle high volumes of data without significant performance degradation.

- Fault tolerance: Stream processing systems are designed to be resilient to failure, as they can continue to function even if one or more of the computers or servers goes down.

Disadvantages:

- Learning curve: Building a stream processing platform can involve a steep learning curve. While it’s quite simple to get processing running with products such as Apache Kafka, it may not be tuned as you need, you may need to build connectors, and you will likely lack the monitoring required for a production system.

- Data management: Streaming data can result in a large amount of data being processed. It’s important to ensure you are only processing the data you need and you have suitable monitoring in place across your stream processing platform.

- Ordering of data: It’s not uncommon for events to arrive in a different order than they were sent. This can also make analyzing log data difficult.
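One common way to cope with the ordering problem above is to buffer events and release them in sequence. The sketch below assumes every event carries a gap-free, unique sequence number, which is a simplifying assumption; the `reorder` function and the event tuples are hypothetical.

```python
import heapq
from typing import Iterable, Iterator, List, Tuple

Event = Tuple[int, str]  # (sequence number, payload)


def reorder(events: Iterable[Event], first_seq: int = 0) -> Iterator[Event]:
    """Yield events in sequence order, buffering any that arrive early."""
    pending: List[Event] = []  # min-heap keyed on sequence number
    next_seq = first_seq
    for event in events:
        heapq.heappush(pending, event)
        # Release every buffered event that is now next in line.
        while pending and pending[0][0] == next_seq:
            yield heapq.heappop(pending)
            next_seq += 1


arrived = [(1, "b"), (0, "a"), (3, "d"), (2, "c")]
print(list(reorder(arrived)))  # [(0, 'a'), (1, 'b'), (2, 'c'), (3, 'd')]
```

This version would wait forever on a missing sequence number, so a production system would likely also need a timeout or similar rule for deciding when to stop waiting for late events.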

Which is better? Batch or Real-Time?

There are many pros and cons to each approach, but it is not a case of which is better. Instead, you should choose the right approach based on the use case and objectives. Traditionally, real-time data streaming has been expensive and difficult to implement, but that is changing. Streamkap offers a cloud-based serverless real-time ETL platform allowing you to start streaming in minutes.