
Boost Business Efficiency with Real-Time Data Integration

Learn how real-time data integration enhances decision-making and operational agility. Discover tools and strategies to implement it effectively.

Think of real-time data integration as the nervous system of a modern business. It’s the practice of gathering data from all your different sources—like your sales platform, website, and inventory system—and moving it to a central hub in the blink of an eye. There’s virtually no delay.

This is a world away from older methods that would collect and process data in big chunks, maybe once a day or even once a week. It’s the difference between getting a printed daily newspaper and watching a live news broadcast as events unfold.

Why Instant Data Matters

Imagine you’re trying to drive through a busy city. Would you rather use a paper map that was printed yesterday or a live GPS that reroutes you around a traffic jam that just happened? That’s the power of real-time data integration. You stop looking at what happened and start seeing what is happening.

This isn’t just about moving faster. It’s about making your data relevant. When you can act on information the second it’s generated, you gain a massive competitive edge. At the heart of this process is a constant, reliable method for data synchronization, which keeps every part of your business perfectly in step.

From Lagging to Leading

Traditional batch processing is like running payroll—it happens on a set schedule. It’s reliable for certain tasks, but it always leaves you with an information gap. Real-time integration closes that gap, allowing your organization to move from being reactive to proactive.

Suddenly, you can:

- Seize Opportunities Instantly: Tweak a marketing campaign on the fly based on live social media sentiment.

- Prevent Problems Before They Escalate: Flag a potentially fraudulent credit card transaction in milliseconds, not after the damage is done.

- Create Smooth Customer Experiences: Update your website’s inventory the exact moment an item is sold in a physical store.

To truly understand the difference, let’s compare the two approaches side-by-side.

Batch Processing vs Real Time Data Integration

| Attribute | Batch Processing | Real Time Data Integration |
| --- | --- | --- |
| Data Latency | High (minutes, hours, or days) | Very Low (seconds or milliseconds) |
| Processing Trigger | Scheduled intervals (e.g., nightly) | Event-driven (e.g., a new sale) |
| Data Volume | Large, accumulated chunks (batches) | Small, continuous individual events (streams) |
| Use Cases | Payroll, periodic reporting, billing | Fraud detection, live dashboards, IoT analytics |
| System Impact | High-intensity load during processing windows | Consistent, low-intensity load |

This table makes it clear: while batch processing still has its place for non-urgent, heavy-duty tasks, real-time integration is the standard for operations that demand immediate awareness and action.
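To put a number on the latency row, here is a minimal Python sketch of how long a single event waits under a nightly batch. The 02:00 run time and the function name `batch_latency` are assumptions chosen for illustration, not a description of any particular scheduler.

```python
from datetime import datetime, timedelta


def batch_latency(event_time: datetime, run_hour: int = 2) -> timedelta:
    """How long an event waits for the next nightly run (assumed at run_hour:00)."""
    next_run = event_time.replace(hour=run_hour, minute=0, second=0, microsecond=0)
    if next_run <= event_time:
        next_run += timedelta(days=1)  # today's run has already happened
    return next_run - event_time


sale = datetime(2024, 1, 1, 9, 15)  # a sale recorded at 09:15
wait = batch_latency(sale)          # time until the 02:00 job picks it up
```

Under these assumptions the 09:15 sale sits unseen for 16 hours and 45 minutes, while an event-driven pipeline would hand it on as soon as it occurs.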

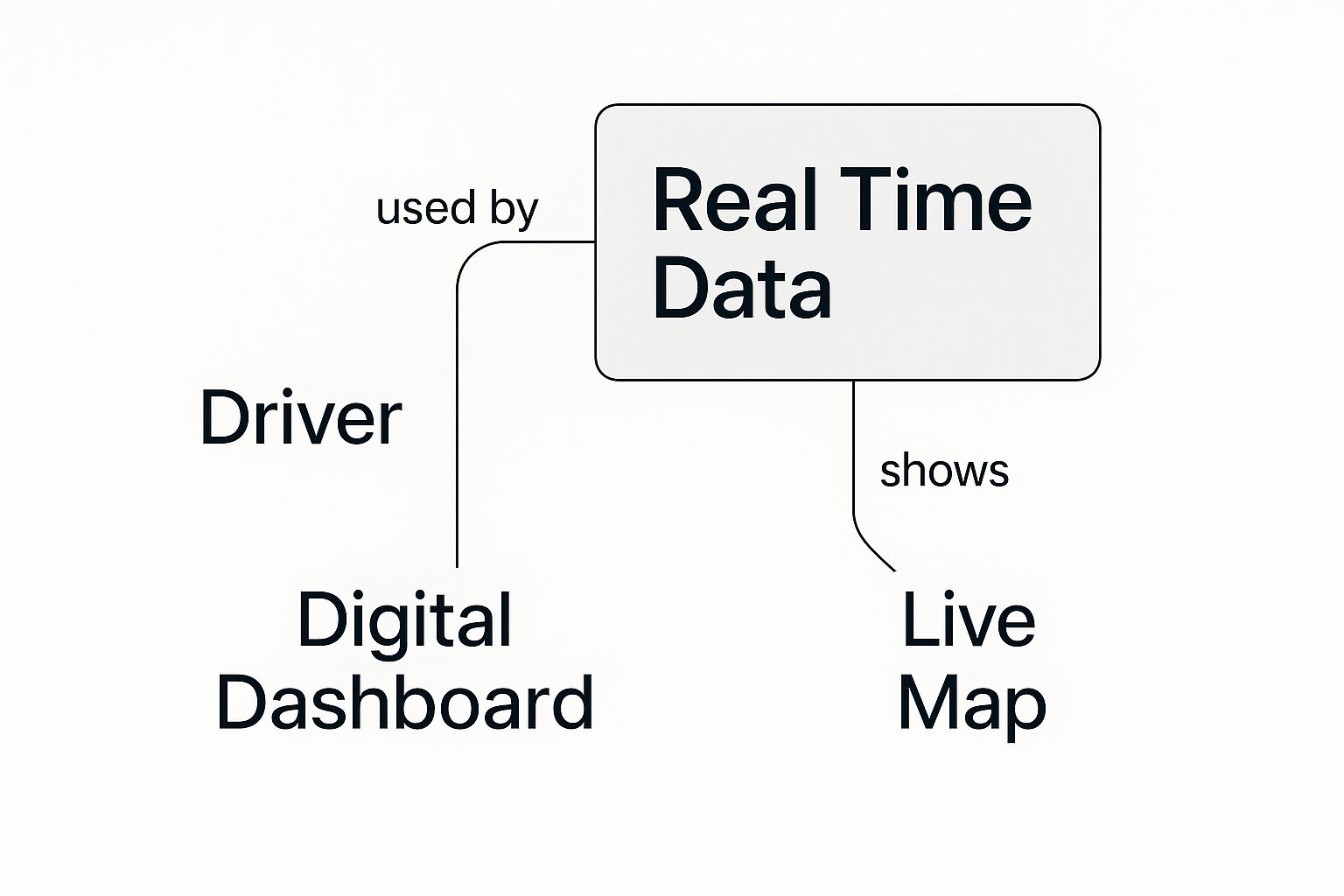

The infographic above really drives home this point, showing how real-time data gives you the same kind of clarity and control as a modern car’s dashboard.

You’re no longer driving by looking in the rearview mirror; you’re focused on the road ahead with a complete, up-to-the-second view.

The ability to sync and analyze data in the moment is no longer a luxury—it’s a foundational requirement for staying competitive. It transforms a business from being reactive to proactive.

This shift isn’t just a niche trend; it’s a massive market movement. The data integration market as a whole was valued at $14.23 billion in 2024 and is expected to reach $30.87 billion by 2030, largely because of the explosion of enterprise data and the need for instant insights. It’s a clear signal that the future belongs to businesses that can act on data right now.

Understanding Core Real-Time Architectures

To make real-time data integration actually work, you need a powerful engine running under the hood. While there are a few ways to set things up, two core architectures really dominate the field: Streaming Pipelines and Change Data Capture (CDC). Getting a handle on how they operate is the key to picking the right tool for the job.

Each one solves the problem of moving data instantly, but they go about it in completely different ways. Think of one as a high-speed conveyor belt for brand-new events, and the other as a meticulous reporter that tracks every change to existing data.

Streaming Pipelines: The Digital Conveyor Belt

Picture a modern factory with a conveyor belt that never stops. As soon as a product part is ready, it’s placed on the belt and immediately sent to the next station. A streaming pipeline works exactly like this, just with data instead of physical parts.

It doesn’t see data as big, static files to be moved later. Instead, it treats data as a continuous flow of small, individual events. Each event—a website click, a sensor reading, a social media mention—is a tiny packet of information placed on that digital conveyor belt the moment it happens.

This constant stream is perfect for on-the-fly processing. Systems can react to each event as it arrives, making this approach ideal for things like:

- Live Analytics: Powering real-time dashboards that show you exactly what’s happening with website traffic or application performance right now.

- IoT Data Processing: Juggling continuous data streams from thousands of connected devices without breaking a sweat.

- Immediate Alerts: Firing off notifications for security threats or system errors the instant they’re detected.

The huge advantage here is the ability to handle brand-new, event-based information coming from countless sources. For a deeper look into how these are put together, you can explore various data pipeline architectures.
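As a rough illustration of the conveyor-belt idea, the Python sketch below handles each event the moment it arrives instead of waiting for a file. The event shapes, the `event_source` generator, and the 90 °C limit are all hypothetical stand-ins; a production pipeline would read from a message broker or similar feed.

```python
from collections import Counter
from typing import Dict, Iterable, Iterator, List, Tuple


def event_source() -> Iterator[Dict]:
    """Hypothetical stand-in for a live feed of small, individual events."""
    yield {"type": "click", "page": "/pricing"}
    yield {"type": "sensor", "device": "pump-7", "temp_c": 71.5}
    yield {"type": "click", "page": "/pricing"}
    yield {"type": "sensor", "device": "pump-7", "temp_c": 96.0}


def run_pipeline(
    events: Iterable[Dict], temp_limit_c: float = 90.0
) -> Tuple[Counter, List[str]]:
    """React to every event as it arrives: update live counts, raise alerts."""
    page_views: Counter = Counter()
    alerts: List[str] = []
    for event in events:
        if event["type"] == "click":
            page_views[event["page"]] += 1  # feeds a live dashboard
        elif event["type"] == "sensor" and event["temp_c"] > temp_limit_c:
            alerts.append(f"{event['device']} overheating: {event['temp_c']} C")
    return page_views, alerts


page_views, alerts = run_pipeline(event_source())
```

The same loop covers two of the uses listed above: the counter is the live-analytics side, and the alert list is the immediate-notification side.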

Change Data Capture: The Database Journalist

Now, imagine you’ve assigned a meticulous journalist to your company’s most important database. This journalist doesn’t waste time reading the entire database every day. Instead, they have a “press pass” to the database’s internal transaction log—a detailed, moment-by-moment record of every single change.

Whenever a record is created, updated, or deleted, the journalist notes that specific change and reports it immediately. That’s the core idea behind Change Data Capture (CDC).

CDC is a non-intrusive way to track data modifications right at the source. It avoids hammering your production database with constant queries, making it incredibly efficient for keeping different systems synchronized.

Instead of constantly asking the database, “Anything new? Anything new?,” CDC simply listens for the changes the database has already recorded for its own purposes. This low-impact approach makes it the perfect solution for keeping different systems in lockstep.
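The "journalist" idea can be sketched in a few lines of Python: a consumer reads change events in log order and replays them against a copy of the data. The event format here (`op`, `key`, `row`) is invented for illustration and is not the format of any specific database log or CDC tool.

```python
from typing import Dict, List, Optional


def apply_change(replica: Dict[int, dict], change: dict) -> None:
    """Replay one logged change against the replica."""
    op = change["op"]
    key = change["key"]
    if op in ("insert", "update"):
        replica[key] = change["row"]
    elif op == "delete":
        replica.pop(key, None)
    else:
        raise ValueError(f"unknown operation: {op}")


# Hypothetical excerpt from a transaction log, in commit order.
transaction_log: List[dict] = [
    {"op": "insert", "key": 1, "row": {"name": "Ada", "plan": "free"}},
    {"op": "update", "key": 1, "row": {"name": "Ada", "plan": "pro"}},
    {"op": "insert", "key": 2, "row": {"name": "Lin", "plan": "free"}},
    {"op": "delete", "key": 2, "row": None},
]

replica: Dict[int, Optional[dict]] = {}
for change in transaction_log:
    apply_change(replica, change)
```

Note that the source tables are never queried here. The replica is built entirely from the recorded changes, which is why this approach puts so little load on the production database.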

Common use cases include:

- Database Replication: Keeping a secondary database or data warehouse as an exact, up-to-the-second mirror of the primary one.

- Microservices Synchronization: Letting different application services know about data changes without them having to talk directly to each other.

- Zero-Downtime Migrations: Shifting data to a new system without ever having to take the old one offline.

So, while streaming pipelines are champs at catching new events from the outside world, CDC specializes in capturing changes within your core databases. Together, they form the foundation of any solid real-time data integration strategy, paving the way for a truly responsive, data-aware business.

The Business Impact of Instant Data Syncing

Getting a handle on the technical architecture is just one piece of the puzzle. The real magic of real-time data integration is how it shifts a business from being reactive to truly proactive. When your data flows instantly, decision-making stops being a history lesson based on last week’s reports and becomes a live, dynamic process.

This shift means you can stop analyzing what went wrong yesterday and start influencing what happens in the next minute. It’s the difference between finding out a customer was frustrated through a weekly survey and getting an alert to fix their problem while they’re still on your website.

Improve Customer Experiences

In a world where everything is on-demand, customers don’t just want—they expect—interactions to be immediate and personal. Real-time data integration is the engine that makes this happen, turning a one-size-fits-all customer journey into a responsive, one-on-one conversation.

Think about a retail store using live foot traffic data. The moment a loyal customer walks in, they could get a personalized promotion on their phone for an item they’ve browsed online. That kind of immediate relevance is simply impossible with old-school batch processing, where you’d be lucky to get those insights a day later.

This need for immediacy is driving huge market growth. The real-time analytics market is on track to explode from $890.2 million in 2024 to a staggering $5.26 billion by 2032, all because companies are racing to get instant insights. You can read more about the growth of the real-time analytics market from Fortune Business Insights.

Boost Operational Agility

The benefits don’t stop with the customer. Instant data syncing also creates some incredible efficiencies behind the scenes. It gives you a live, up-to-the-second view of your operations, letting you spot and fix bottlenecks as they form, not after they’ve already caused a major headache.

Take a logistics company, for example. A real-time data integration platform can pull in GPS data, traffic updates, and weather alerts all at once. If a sudden highway accident blocks a route, the system can reroute drivers in seconds. That saves fuel and time, and it keeps customers happy by preventing late deliveries.

This proactive approach gives businesses a serious advantage in a few key areas:

- Reduced Downtime: Live sensor data from factory equipment can trigger predictive maintenance alerts, helping you fix a machine before it breaks down and halts production.

- Optimized Inventory: An e-commerce site can sync stock levels across its website, app, and marketplace listings the instant an item is sold, which stops you from accidentally overselling a hot product.

- Enhanced Security: Banks and fintech companies can analyze transaction data in milliseconds to spot and block fraud before the money ever leaves the account.

By unifying data streams in the moment, businesses gain a competitive edge built on speed and intelligence. They are no longer just collecting data; they are putting it to work instantly. This agility separates market leaders from followers.

Real-Time Data Integration in Action

It’s one thing to talk about architectures and concepts, but the real magic of real-time data integration happens when you see it solving actual business problems. This isn’t just theory; companies are using this stuff right now to get a serious edge.

Let’s look at a few examples of how different industries are putting instant data to work. From stopping thieves in the financial world to making sure you can get that last item in your online shopping cart, these use cases show what’s possible when you act on data the second it’s created.

Finance: Protecting Customers and Assets

The world of finance runs on speed and trust, making it a natural fit for real-time data. The most powerful example? Fraud detection. Every time you swipe your credit card, a real-time system is working behind the scenes, analyzing dozens of data points in the blink of an eye.

That system is checking your location, the purchase amount, your normal spending patterns, and even the merchant’s risk profile. If something seems off—say, a big purchase in another country just minutes after you bought coffee down the street—the system can flag or block the transaction on the spot. This isn’t just a nice feature; it prevents billions in losses every year and is only possible with a constant flow of live data.
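The coffee-then-overseas-purchase scenario can be written as a single hand-coded rule. This Python sketch assumes a $500 threshold and a 30-minute window, both arbitrary values picked for illustration; real systems weigh many more signals than this.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta
from typing import Optional


@dataclass
class Transaction:
    card_id: str
    amount: float
    country: str
    timestamp: datetime


def is_suspicious(
    current: Transaction,
    previous: Optional[Transaction],
    large_amount: float = 500.0,               # assumed threshold
    window: timedelta = timedelta(minutes=30),  # assumed time window
) -> bool:
    """Flag a large purchase in a different country soon after the last one."""
    if previous is None:
        return False
    different_country = current.country != previous.country
    close_in_time = current.timestamp - previous.timestamp <= window
    return different_country and close_in_time and current.amount >= large_amount


coffee = Transaction("card-1", 4.50, "US", datetime(2024, 5, 1, 9, 0))
abroad = Transaction("card-1", 1200.00, "FR", datetime(2024, 5, 1, 9, 10))
flagged = is_suspicious(abroad, coffee)
```

The rule only works if the previous transaction is available at the moment the new one arrives, which is exactly what a live data flow provides.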

By processing transaction data as it happens, financial institutions can identify and stop fraudulent activity before it impacts the customer, shifting from a reactive “clean-up” model to a proactive prevention strategy.

This goes way beyond simple rules. By feeding live data into machine learning models, banks can spot subtle, new fraud tactics that would be completely invisible to an older system that only looks at data in batches.

E-commerce: Maximizing Revenue and Satisfaction

In the hyper-competitive e-commerce space, every click—and every second—matters. Real-time data integration is what powers two of its most critical functions: dynamic pricing and live inventory management.

A dynamic pricing algorithm, for instance, can tweak a product’s price based on real-time supply and demand, what competitors are charging, or even a sudden surge in website traffic. If a product suddenly goes viral, the system can adjust the price instantly to capture the most revenue without anyone having to lift a finger.

At the same time, live inventory management is all about creating a smooth, frustration-free experience for the shopper.

- Preventing Overselling: The moment an item sells out, it’s instantly marked as unavailable across the website and all other channels. No more angry customers who thought they bought something that wasn’t actually there.

- Cross-Channel Syncing: If a customer grabs the last shirt off the rack in a physical store, the online inventory updates immediately. The data is consistent everywhere, all the time.
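One way to picture both behaviors is a central hub that owns the stock count and pushes every change to each channel. The Python sketch below uses plain dictionaries as hypothetical stand-ins for the website and marketplace views; the class and method names are illustrative only.

```python
from typing import Dict, List


class InventoryHub:
    """Single source of truth for stock; pushes every change to all channels."""

    def __init__(self, stock: Dict[str, int]) -> None:
        self._stock = dict(stock)
        self._channels: List[Dict[str, int]] = []

    def subscribe(self, channel_view: Dict[str, int]) -> None:
        channel_view.update(self._stock)  # start from a full snapshot
        self._channels.append(channel_view)

    def record_sale(self, sku: str, quantity: int = 1) -> int:
        remaining = self._stock[sku] - quantity
        if remaining < 0:
            raise ValueError(f"{sku} is out of stock")  # refuse to oversell
        self._stock[sku] = remaining
        for channel_view in self._channels:
            channel_view[sku] = remaining  # every channel updates immediately
        return remaining


website: Dict[str, int] = {}
marketplace: Dict[str, int] = {}
hub = InventoryHub({"shirt-m": 1})
hub.subscribe(website)
hub.subscribe(marketplace)
hub.record_sale("shirt-m")  # the last shirt sells in the physical store
```

After the sale, both channel views show zero stock for `shirt-m`, and a second sale attempt is rejected rather than oversold.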

To see how real-time data integration applies in a sales context, you can learn more about how automation in sales can drive major efficiencies.

Manufacturing: Enabling Predictive Maintenance

For a manufacturer, unexpected downtime is a profit killer. Real-time data, often from Internet of Things (IoT) sensors, is completely changing how factories operate by making predictive maintenance a reality.

Instead of just servicing machines on a rigid schedule, companies now install sensors that constantly stream data on temperature, vibration levels, and power usage. This data is fed into a central system that looks for any signs of trouble. If a machine starts vibrating slightly more than usual, an alert can be triggered to schedule maintenance before it breaks down. This simple shift from reactive to proactive prevents costly production shutdowns and helps expensive equipment last much longer.
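A simple version of "vibrating slightly more than usual" is a comparison against a rolling baseline. The Python sketch below assumes a three-reading window and a 20% tolerance, which are illustrative values rather than engineering guidance.

```python
from collections import deque
from statistics import mean


class VibrationMonitor:
    """Alert when a reading exceeds the recent average by a set tolerance."""

    def __init__(self, window: int = 3, tolerance: float = 1.2) -> None:
        self._readings: deque = deque(maxlen=window)
        self._tolerance = tolerance

    def observe(self, reading: float) -> bool:
        alert = False
        if len(self._readings) == self._readings.maxlen:
            baseline = mean(self._readings)
            alert = reading > baseline * self._tolerance
        if not alert:
            # Only normal readings update the baseline, so a fault
            # does not gradually become the new "usual".
            self._readings.append(reading)
        return alert


monitor = VibrationMonitor()
results = [monitor.observe(r) for r in [1.0, 1.1, 0.9, 1.0, 1.5]]
```

With these sample readings, only the final value of 1.5 triggers an alert, because it is the first to exceed 120% of the recent average.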

Putting Your Real Time Data Strategy Into Action

Alright, let’s move from theory to practice. The jump from understanding real-time data concepts to actually building a system can feel massive, but it doesn’t have to be. The secret is to think small first. Don’t try to boil the ocean.

Start by picking one high-impact business problem to solve. Seriously, just one. Is it the inaccurate inventory on your e-commerce site that’s driving customers crazy? Or maybe it’s the lag in your fraud detection that’s costing you money? Find a single pain point where having data right now will make a tangible, measurable difference. This first win is critical for building momentum and getting buy-in for more.

Nail Down Your Scope and Pick the Right Tools

Once you have that clear goal, you can start mapping things out. What specific data sources are you pulling from? Where does that data need to end up? What kind of cleanup or changes does it need on the way? Answering these questions gives you a blueprint for your first data pipeline and prevents a lot of headaches later on.
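Those three questions map neatly onto a small written specification. The Python sketch below is one possible shape for that blueprint; the source and destination names and both transforms are hypothetical examples.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, List

Record = Dict[str, object]


@dataclass
class PipelineSpec:
    """Blueprint: where data comes from, where it goes, what happens between."""

    name: str
    sources: List[str]
    destination: str
    transforms: List[Callable[[Record], Record]] = field(default_factory=list)

    def apply(self, record: Record) -> Record:
        for transform in self.transforms:
            record = transform(record)
        return record


def normalize_sku(record: Record) -> Record:
    return {**record, "sku": str(record["sku"]).strip().upper()}


def drop_internal_notes(record: Record) -> Record:
    return {k: v for k, v in record.items() if k != "internal_notes"}


spec = PipelineSpec(
    name="inventory-sync",
    sources=["pos_db.sales", "webshop_db.orders"],  # hypothetical sources
    destination="warehouse.inventory_events",       # hypothetical target
    transforms=[normalize_sku, drop_internal_notes],
)
cleaned = spec.apply({"sku": " ab-12 ", "qty": 1, "internal_notes": "x"})
```

Writing the scope down this explicitly, even before choosing a tool, makes it easier to spot a missing source or an unplanned cleanup step.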

Now, you can think about technology. When you’re choosing tools, be honest about your team’s skills and where you see this project going in the future. A platform that can take the sting out of complex tech like Change Data Capture will drastically cut down your implementation time. For example, getting database synchronization right means understanding the details of how CDC works. You can get a good primer by looking into Change Data Capture for SQL databases.

Look for a solution that fits your team today but won’t hold you back when your data volumes inevitably grow. I’d strongly recommend prioritizing platforms that offer:

- Managed Services: This takes the infrastructure headache off your team’s plate so they can focus on the data itself.

- Automated Schema Handling: A lifesaver. It keeps your pipelines from breaking every time someone adds a column to a source table.

- Broad Connector Support: Make sure it can easily plug into the systems you have now and the ones you’ll likely add down the road.
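To show why automated schema handling matters, here is a minimal Python sketch of a pipeline that notices a new source column and keeps running. It illustrates the general idea only and is not how any particular platform implements it; the column names are made up.

```python
from typing import Dict, List


def sync_schema(known_columns: List[str], record: Dict[str, object]) -> List[str]:
    """Add any columns the destination has not seen yet; return what was added."""
    new_columns = [name for name in record if name not in known_columns]
    known_columns.extend(new_columns)
    return new_columns


def to_row(known_columns: List[str], record: Dict[str, object]) -> List[object]:
    """Lay a record out in destination column order, filling gaps with None."""
    return [record.get(name) for name in known_columns]


columns = ["id", "email"]
# Someone adds a loyalty_tier column to the source table.
added = sync_schema(columns, {"id": 1, "email": "a@example.com", "loyalty_tier": "gold"})
# Older records without the new column still load cleanly.
row = to_row(columns, {"id": 2, "email": "b@example.com"})
```

Without a step like `sync_schema`, the first record carrying the new column would typically cause the load to fail or the column to be silently dropped.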

Build Your Governance Foundation from Day One

Last but definitely not least, think about data governance from the very beginning. This isn’t just a box-ticking exercise for compliance; it’s about building trust. Your real-time data is worthless if the business doesn’t believe it’s accurate and secure.

Right out of the gate, you need to define who owns the data, who gets to access it, and how you’re going to keep an eye on its quality. Putting these guardrails in place early on prevents a “wild west” data free-for-all and ensures your entire real-time strategy is built on solid ground.

By starting small, choosing tools that equip your team, and making governance a priority, you can roll out a real-time data integration strategy that delivers immediate results and is built for the long haul. This playbook helps you sidestep the common traps and launch a solution that actually works.

Handling Common Implementation Challenges

Real-time data integration is a major advantage, but let’s be honest: it’s not always a walk in the park. Shifting from the predictable world of batch processing to a constant, live flow of information brings a whole new set of complexities that can trip up even seasoned engineering teams. Getting it right means knowing what to look out for before you even start.

One of the biggest headaches is maintaining data consistency and order. In a distributed system, it’s easy for events to arrive out of sequence or, worse, get duplicated. Imagine two updates to a customer’s record arriving in the wrong order—you’ve just created a corrupted profile, and downstream analytics will be completely wrong.
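A common defense against both problems is to make updates idempotent and version-aware. The Python sketch below assumes each event carries a unique `event_id` and a per-customer `version` number; those fields are assumptions of this example, and real systems may rely on log offsets or timestamps instead.

```python
from typing import Dict, Set


def apply_update(profiles: Dict[str, dict], seen_event_ids: Set[str], event: dict) -> bool:
    """Apply an update only if it is new and not older than what is stored."""
    if event["event_id"] in seen_event_ids:
        return False  # duplicate delivery: ignore
    seen_event_ids.add(event["event_id"])

    current = profiles.get(event["customer_id"])
    if current is not None and current["version"] >= event["version"]:
        return False  # stale update that arrived late: ignore

    profiles[event["customer_id"]] = {
        "version": event["version"],
        "email": event["email"],
    }
    return True


profiles: Dict[str, dict] = {}
seen: Set[str] = set()
events = [
    {"event_id": "e2", "customer_id": "c1", "version": 2, "email": "new@example.com"},
    {"event_id": "e1", "customer_id": "c1", "version": 1, "email": "old@example.com"},  # late
    {"event_id": "e2", "customer_id": "c1", "version": 2, "email": "new@example.com"},  # duplicate
]
applied = [apply_update(profiles, seen, e) for e in events]
```

Only the first event is applied, so the profile keeps the newer email even though the older update arrived afterwards and the newer one was delivered twice.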

Then there’s the cost. Streaming data 24/7 means your infrastructure is always on, handling a ton of traffic and processing it instantly. This can get expensive, fast. If you’re not careful, cloud service bills and data transfer fees can balloon, quickly eating into the ROI you were hoping for.

Tackling Complexity Head-On

You can’t just open the floodgates and hope for the best. The key to sidestepping these issues is to be deliberate about building a system that’s both resilient and easy to monitor.

Here are a few strategies that actually work:

- Establish Strong Data Governance: Don’t wait until things are a mess. Set up clear rules for data quality, how schemas are managed, and who can access what, right from the beginning. This stops your real-time pipelines from turning into a chaotic free-for-all of bad data.

- Prioritize Scalability and Cost Management: Pick your tools and architecture with an eye on future growth and your budget. Look for features like auto-scaling and resource monitoring to make sure you’re only paying for what you actually use.

- Address Security Proactively: A constant stream of data creates more opportunities for security breaches. You need to encrypt data both as it moves (in transit) and where it’s stored (at rest). On top of that, lock down access to make sure sensitive information stays protected every step of the way.

A successful real-time data integration project is as much about managing complexity and risk as it is about moving data quickly. Proactive planning for consistency, cost, and security is non-negotiable.

These hurdles are pretty common, but they are entirely solvable with the right game plan. For a more detailed breakdown, our guide to understanding real time ETL challenges offers deeper insights and practical solutions.

Ready to build reliable, real-time data pipelines without the complexity? Streamkap uses Change Data Capture (CDC) to sync your data with minimal latency and zero impact on source systems. See how our managed platform can simplify your data strategy and deliver instant value. Get started with Streamkap today.