Problems with Data Integration: 10 Fixes You Need Now

Explore practical strategies to solve problems with data integration, improve data quality, and accelerate analytics with proven fixes and tips.

In a data-driven economy, connecting disparate systems to create a single source of truth seems like a foundational business goal. However, beneath the surface of this seemingly straightforward objective lies a web of significant complexity. Organizations frequently underestimate the substantial challenges that can derail projects, inflate budgets, and severely compromise data integrity. These common problems with data integration are not merely technical hurdles; they are strategic obstacles that prevent a business from unlocking the true value locked within its information assets.

The journey to a unified data ecosystem is often more difficult than anticipated. From the persistent fragmentation caused by data silos and legacy systems to the nuanced difficulties of maintaining data quality across different formats, the path is fraught with potential pitfalls. Issues like managing real-time data synchronization, ensuring robust security and compliance, and maintaining clear data lineage can quickly escalate from minor concerns to critical project blockers. Failing to address these challenges proactively can lead to flawed analytics, poor business decisions, and a loss of competitive advantage.

This article provides a detailed breakdown of the 10 most critical data integration problems that organizations must navigate. We will move beyond surface-level descriptions to explore the root causes, tangible business impacts, and high-level mitigation strategies for each challenge. By understanding these hidden hurdles, you can better prepare your team, select the right tools, and build a resilient, scalable, and genuinely integrated data architecture that powers your business forward.

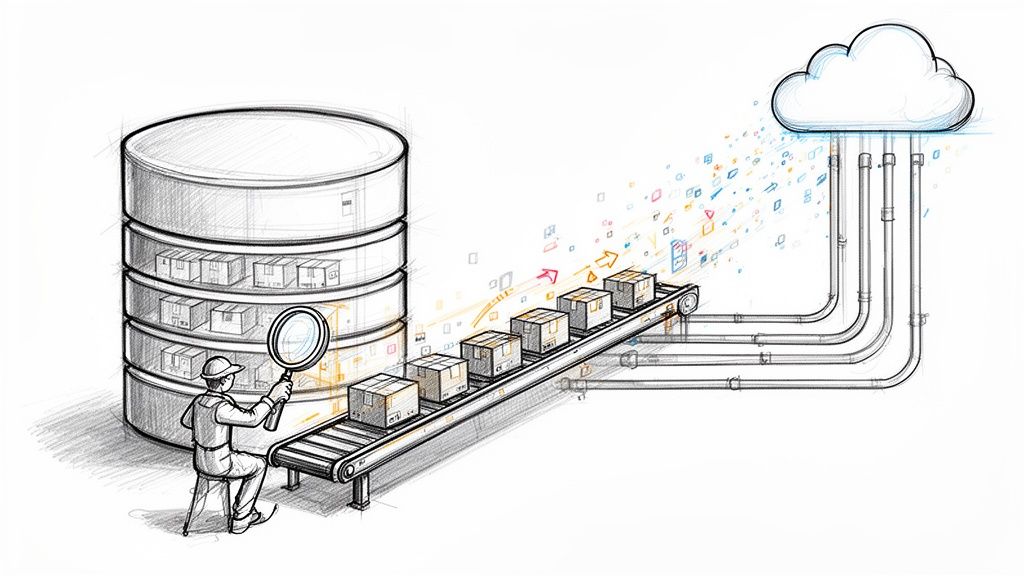

1. Data Silos and Legacy System Fragmentation

One of the most foundational problems with data integration is the existence of data silos. These are isolated repositories of information, often trapped within department-specific legacy systems that were never designed to communicate. This fragmentation prevents a unified view of organizational data, leading to significant inefficiencies, inconsistencies, and missed opportunities.

A classic example is a large financial institution where the retail banking, investment management, and corporate lending divisions each operate on separate, decades-old mainframe systems. A customer who uses services from all three divisions exists as three distinct entities in the company’s data landscape. This fragmentation makes it impossible to create a holistic customer profile, hampering efforts in personalized marketing, comprehensive risk assessment, and effective customer service. Similarly, in healthcare, patient data can be scattered across disparate Electronic Health Record (EHR) systems from different departments or acquired clinics, creating critical gaps in a patient's medical history.

The Impact of Fragmented Systems

Data silos actively undermine business intelligence and operational efficiency. When data is not integrated, teams are forced to make decisions based on incomplete information, often leading to conflicting strategies. It also breeds data redundancy, where the same information is stored and maintained in multiple places, increasing storage costs and the risk of inconsistencies when updates are made in one system but not others.

Key Insight: Data silos aren't just a technical problem; they are a business problem. They create barriers to collaboration, obscure a single source of truth, and directly inhibit an organization's ability to leverage its data as a strategic asset.

How to Dismantle Data Silos

Tackling entrenched legacy systems requires a strategic, phased approach rather than a single, massive overhaul.

- Conduct a Comprehensive Data Audit: Begin by mapping every data source, system, and storage location across the organization. Identify who owns the data, how it is used, and its overall quality.

- Prioritize Integration Efforts: Not all silos are equal. Rank integration projects based on their potential business impact and technical feasibility. Focus on high-value, low-complexity integrations first to build momentum.

- Adopt an API-First Strategy: For legacy systems that cannot be immediately replaced, develop APIs (Application Programming Interfaces) to expose their data. This allows modern applications to access and utilize the information without costly, disruptive system replacements. This gradual approach is central to effective data modernization.
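
As a rough illustration of the API-first idea, the sketch below wraps a legacy fixed-width record format in a small read-only function that returns JSON. The record layout, the field names, and the `get_customer` function are all hypothetical; a real facade would sit behind an HTTP framework and read from the actual legacy store.

```python
import json

# Hypothetical fixed-width layout of a legacy customer record:
# (field name, start offset, end offset). Not taken from any real system.
LAYOUT = [
    ("customer_id", 0, 8),
    ("name", 8, 28),
    ("division", 28, 38),
]

def parse_record(line: str) -> dict:
    """Slice one fixed-width line into a dictionary of trimmed strings."""
    return {name: line[start:end].strip() for name, start, end in LAYOUT}

def get_customer(records: list[str], customer_id: str) -> str:
    """Facade a modern client could call instead of reading the raw file."""
    for line in records:
        record = parse_record(line)
        if record["customer_id"] == customer_id:
            return json.dumps(record)
    return json.dumps({"error": "not found", "customer_id": customer_id})

# Two made-up legacy rows, padded to the widths in LAYOUT.
legacy_rows = [
    "00001234" + "Ada Lovelace".ljust(20) + "RETAIL".ljust(10),
    "00005678" + "Alan Turing".ljust(20) + "LENDING".ljust(10),
]
print(get_customer(legacy_rows, "00005678"))
```

The point of the design is that consumers depend only on the JSON shape, so the legacy storage can later be replaced without touching them.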

By methodically breaking down these silos, organizations can unlock the full value of their information. This process often involves complex data movement, a topic covered in-depth in our guide to data migration best practices.

2. Data Quality and Consistency Issues

One of the most persistent problems with data integration is that the process often reveals and amplifies underlying data quality issues. When combining datasets from different sources, organizations frequently encounter missing values, duplicate records, inconsistent formatting, and conflicting information. These problems degrade the value of the integrated data, leading to unreliable analytics, flawed business intelligence, and poor decision-making.

Consider a global manufacturing company trying to create a unified supply chain view. One facility might record measurements in meters, while another uses feet. An e-commerce platform could have conflicting inventory counts between its sales front-end and its warehouse management system. Similarly, customer relationship management (CRM) systems often contain multiple entries for the same client, with slight variations in company names ("Global Corp," "Global, Inc.," "Global Corporation"), making it impossible to get a clear, single view of customer interactions.

The Impact of Poor Data Quality

Low-quality data directly erodes trust in analytics and BI dashboards. When stakeholders cannot rely on the information presented, they revert to manual data collection and gut-feel decisions, negating the entire purpose of data integration. This issue also creates significant operational friction, as teams must spend more time cleaning and validating data than analyzing it. Over time, these data integrity problems can lead to costly compliance failures, missed sales opportunities, and damaged customer relationships.

Key Insight: Data integration does not magically fix bad data; it puts a spotlight on it. Without a proactive data quality strategy, integration projects risk becoming exercises in "garbage in, garbage out" at a much larger scale.

How to Address Data Quality and Consistency

Improving data quality is not a one-time fix but an ongoing governance process. A systematic approach is crucial for building a reliable data foundation.

- Establish Data Quality Metrics: Before starting integration, define clear KPIs for data quality, such as completeness, accuracy, timeliness, and uniqueness. Use data profiling tools to benchmark your current state against these metrics.

- Implement a Master Data Management (MDM) Strategy: Create a single, authoritative source for critical data entities like customers, products, and suppliers. An MDM solution ensures that all systems draw from the same well-governed, consistent information.

- Automate Data Cleansing and Validation: Deploy data quality tools (like Trifacta or Talend) to automate the process of identifying errors, standardizing formats, and removing duplicates. Implement validation rules at the point of data entry and during integration pipelines to prevent bad data from propagating.
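
To make the metrics and cleansing steps above more concrete, here is a minimal sketch in plain Python. The sample records, the suffix list, and the helper names are assumptions made for illustration; dedicated data quality tools apply far richer matching rules than this.

```python
import re

# Made-up sample data mirroring the name and unit mismatches described earlier.
records = [
    {"id": 1, "company": "Global Corp", "length": 3.0, "unit": "m"},
    {"id": 2, "company": "Global, Inc.", "length": 10.0, "unit": "ft"},
    {"id": 3, "company": "Global Corporation", "length": None, "unit": "m"},
    {"id": 4, "company": "Acme Ltd", "length": 2.5, "unit": "m"},
]

# Legal suffixes stripped before comparing names (illustrative, incomplete list).
SUFFIXES = {"corp", "corporation", "inc", "ltd", "llc"}
FEET_TO_METERS = 0.3048

def normalize_company(name: str) -> str:
    """Lowercase, drop punctuation, and remove common legal suffixes."""
    words = re.sub(r"[^\w\s]", "", name.lower()).split()
    return " ".join(w for w in words if w not in SUFFIXES)

def to_meters(value, unit):
    """Standardize a length to meters; missing values stay missing."""
    if value is None:
        return None
    return value * FEET_TO_METERS if unit == "ft" else value

def completeness(rows, field):
    """Share of rows where the field is populated."""
    return sum(r[field] is not None for r in rows) / len(rows)

def uniqueness(rows, key_fn):
    """Share of distinct keys among all rows (1.0 means no duplicates)."""
    keys = [key_fn(r) for r in rows]
    return len(set(keys)) / len(keys)

print(completeness(records, "length"))  # 0.75
print(uniqueness(records, lambda r: normalize_company(r["company"])))  # 0.5
```

Suffix stripping this aggressive can also merge companies that are genuinely different, so in practice candidate duplicates are usually reviewed rather than merged automatically.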

By embedding data quality checks throughout the data lifecycle, organizations can ensure their integrated data is a trustworthy asset. Explore these concepts further in our guide to solving common data integrity problems.

3. Schema Mapping and Semantic Heterogeneity

One of the most complex problems with data integration arises not from a lack of connectivity, but from a lack of shared meaning. Different source systems often use unique database schemas, field names, and data models to represent the same information. This issue, known as semantic heterogeneity, occurs when the meaning of data is inconsistent across systems, making it difficult to map and transform data accurately.

For instance, following a corporate merger, the acquiring company's CRM may identify customers with a customer_id field, while the acquired company’s system uses acct_num. Both fields refer to the same concept (a unique customer identifier), but their names and formats differ. Similarly, a multinational retailer might store sales figures as revenue in North America but as turnover in its European databases. Without a clear translation layer, automated integration processes can fail or produce deeply flawed results.

The Impact of Semantic Mismatches

Semantic heterogeneity directly complicates data transformation logic and threatens data integrity. Mapping these disparate fields requires significant manual effort from data engineers and subject matter experts to correctly interpret and align the data. If not handled properly, it can lead to corrupted datasets, inaccurate analytics, and poor business decisions based on misinterpreted information. A failure to reconcile these differences is a common reason data integration projects go over budget and past deadlines.

Key Insight: Schema mapping is more than a technical task of connecting fields; it is an exercise in business translation. The real challenge is ensuring that the semantic context and meaning of the data are preserved and harmonized across systems.

How to Achieve Semantic Consistency

Bridging semantic gaps requires a combination of robust documentation, governance, and advanced tools.

- Create a Comprehensive Data Dictionary: Develop and maintain a centralized business glossary or data dictionary that formally defines every key data element, its business meaning, its format, and its authoritative source.

- Leverage Metadata Management Tools: Implement tools that can automatically scan data sources, extract metadata (like schema definitions and data types), and help visualize relationships between different systems. This provides a clear blueprint for mapping.

- Establish Strong Data Governance: Form a data governance committee composed of business and IT stakeholders. This body is responsible for standardizing data definitions and approving all transformation rules, ensuring alignment across the organization. This formal process is crucial for solving semantic problems with data integration.
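
One simple way to encode an approved data dictionary is as an explicit mapping from each source's field names to canonical names. The sketch below assumes two hypothetical sources (`acquirer_crm` and `acquired_crm`) and reuses the field names from the examples above; it is an illustration, not a complete mapping engine.

```python
# Hypothetical mapping from each source's field names to canonical names.
FIELD_MAPS = {
    "acquirer_crm": {"customer_id": "customer_id", "revenue": "revenue"},
    "acquired_crm": {"acct_num": "customer_id", "turnover": "revenue"},
}

def to_canonical(source: str, row: dict) -> dict:
    """Rename a row's fields to the canonical schema for its source."""
    mapping = FIELD_MAPS[source]
    unmapped = set(row) - set(mapping)
    if unmapped:
        # Fail loudly rather than silently dropping fields of unknown meaning.
        raise KeyError(f"unmapped fields from {source}: {sorted(unmapped)}")
    return {mapping[field]: value for field, value in row.items()}

print(to_canonical("acquired_crm", {"acct_num": "A-17", "turnover": 1200.0}))
# {'customer_id': 'A-17', 'revenue': 1200.0}
```

Renaming fields only resolves naming differences. Differences in meaning (for example, whether turnover includes tax) still need a definition agreed by the governance group before the mapping can be trusted.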

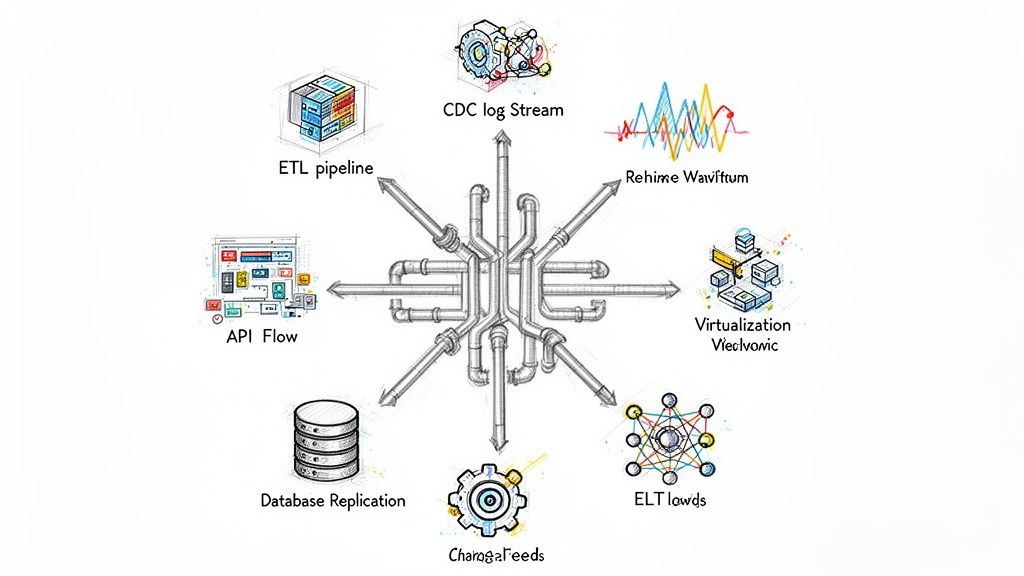

4. Real-Time Data Synchronization

One of the most complex problems with data integration is achieving real-time synchronization across multiple systems. As businesses increasingly rely on up-to-the-minute information for operational decisions, the latency inherent in traditional batch processing is often no longer acceptable for those workloads. The challenge lies in managing high-volume, high-velocity data streams while ensuring consistency and avoiding data loss or duplication across a distributed environment.

Consider an e-commerce platform where inventory data is shared between the online storefront, a mobile app, and physical store POS systems. A delay in syncing a sale from one channel could lead to another channel selling an item that is already out of stock, resulting in a poor customer experience. Similarly, financial trading platforms must synchronize transaction data across multiple systems instantly to execute trades at the correct price and manage risk effectively. Without real-time sync, these operations would fail.

The Impact of Synchronization Delays

Synchronization latency directly impacts business agility and customer satisfaction. Delayed data can lead to flawed analytics, misguided operational decisions, and a disjointed customer experience. In modern data ecosystems, where decisions are automated based on live data, even a few seconds of delay can have significant financial or reputational consequences. This makes mastering real-time data flow a critical competency.

Key Insight: Real-time synchronization is not just about speed; it's about maintaining data integrity and consistency at the pace of business. Failing to do so erodes trust in the data and undermines the very systems that depend on it.

How to Achieve Real-Time Synchronization

Implementing a robust, real-time data pipeline requires a modern architectural approach centered on event streaming and efficient data capture.

- Implement an Event-Driven Architecture: Shift from batch-based updates to an event-driven model using message brokers like Apache Kafka or cloud services like Amazon Kinesis. This allows systems to react to data changes as they happen.

- Use Change Data Capture (CDC): Instead of querying entire databases for changes, use CDC to capture row-level changes (inserts, updates, deletes) from a source database's transaction log in real time. This is a highly efficient, low-impact method for sourcing data.

- Design for Idempotency: Ensure that processing the same data event multiple times does not result in duplicate records or incorrect states. This is crucial for building resilient systems that can handle network failures or retries without corrupting data.
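
The idempotency requirement can be sketched with a consumer that remembers which event IDs it has already applied. The event shape (`event_id`, `sku`, `delta`) and the in-memory `processed_ids` set are assumptions for illustration; they are not tied to any particular broker or CDC tool.

```python
def apply_event(stock: dict, processed_ids: set, event: dict) -> bool:
    """Apply a stock change once; return False for an already-seen event."""
    if event["event_id"] in processed_ids:
        return False  # duplicate delivery (for example, a retry): ignore it
    stock[event["sku"]] = stock.get(event["sku"], 0) + event["delta"]
    processed_ids.add(event["event_id"])
    return True

stock = {"sku-1": 5}
processed_ids = set()
events = [
    {"event_id": "e1", "sku": "sku-1", "delta": -1},
    {"event_id": "e2", "sku": "sku-1", "delta": -2},
    {"event_id": "e2", "sku": "sku-1", "delta": -2},  # redelivered after a retry
]
results = [apply_event(stock, processed_ids, e) for e in events]
print(stock)    # {'sku-1': 2}
print(results)  # [True, True, False]
```

Without the ID check, the redelivered event would decrement stock twice. In a real system the processed IDs and the state change would need to be persisted together, ideally in one transaction, so that a crash between the two steps cannot reintroduce duplicates.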

By adopting these strategies, organizations can build a responsive data infrastructure that supports real-time analytics and operations. Explore how to build these pipelines in our deep dive into the fundamentals of real-time data streaming.

5. Scalability and Performance Bottlenecks

As data volumes grow exponentially, integration pipelines that were once efficient can quickly become critical performance bottlenecks. Traditional ETL jobs, inefficient data transformations, and infrastructure that cannot scale on demand often lead to significant processing delays. This inability to handle increasing data loads or sudden spikes in usage directly impacts downstream analytics, reporting, and time-sensitive business operations.

A prime example is a retail company during a major holiday sale. The influx of transaction data can overwhelm its nightly batch processing jobs, causing delays in updating inventory levels and sales dashboards. This prevents managers from making timely decisions about restocking popular items or adjusting marketing spend. Similarly, a telecommunications provider processing billions of call detail records (CDRs) daily needs a highly scalable integration architecture to support fraud detection and network optimization in near real-time. Without it, fraudulent activity could go unnoticed for hours, resulting in significant financial loss.

The Impact of Inefficient Scaling

Performance bottlenecks in data integration are more than just a minor inconvenience; they cripple an organization's ability to operate in an agile, data-driven manner. When data pipelines are slow, business intelligence reports are outdated, machine learning models are trained on stale data, and operational systems lack the real-time information needed for effective decision-making. This lag creates a disconnect between data generation and insight, diminishing the value of the data itself.

Key Insight: Scalability is not a "set it and forget it" feature. It's an ongoing architectural concern. A data integration solution that fails to scale with the business becomes a barrier to growth rather than an enabler of it.

How to Engineer for Scale

Building resilient, high-performance integration pipelines requires a proactive approach to architecture and continuous optimization.

- Implement Distributed Processing: Leverage frameworks like Apache Spark to distribute data processing tasks across a cluster of machines. This parallel execution dramatically reduces processing times for large datasets compared to single-node architectures.

- Embrace Cloud-Native Autoscaling: Utilize cloud data platforms like Snowflake or Databricks that automatically provision and de-provision compute resources based on workload demands. This ensures you have the power you need during peak times without overpaying for idle infrastructure.

- Optimize at the Core: Focus on fundamental performance tuning. This includes optimizing complex SQL queries, adding appropriate database indexes to speed up lookups, and implementing partitioning strategies to process data in smaller, manageable chunks.

- Choose Efficient Data Formats: Use columnar storage formats like Apache Parquet or ORC. These formats are highly optimized for analytical queries, significantly improving read performance and reducing storage footprint compared to row-based formats like CSV.
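
The partitioning idea above can be illustrated without any cluster framework. The sketch below, which uses made-up sales rows and hypothetical helper names, splits data by a logical key and into fixed-size batches so that each piece can be processed independently.

```python
from collections import defaultdict
from itertools import islice

def partition_by(rows, key):
    """Group rows by a logical key so each partition can be handled alone."""
    parts = defaultdict(list)
    for row in rows:
        parts[row[key]].append(row)
    return dict(parts)

def chunks(iterable, size):
    """Yield fixed-size batches so the full dataset never sits in one list."""
    it = iter(iterable)
    while batch := list(islice(it, size)):
        yield batch

sales = [
    {"date": "2024-11-29", "amount": 120.0},
    {"date": "2024-11-29", "amount": 80.0},
    {"date": "2024-11-30", "amount": 50.0},
]
daily_totals = {
    day: sum(r["amount"] for r in rows)
    for day, rows in partition_by(sales, "date").items()
}
print(daily_totals)  # {'2024-11-29': 200.0, '2024-11-30': 50.0}
print([len(b) for b in chunks(range(7), 3)])  # [3, 3, 1]
```

Because each partition's total depends only on its own rows, the per-partition work could be handed to separate workers. Distributed engines such as Spark apply the same principle across machines.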

6. Security, Privacy, and Compliance Requirements

Data integration processes often handle an organization's most sensitive information, making them a primary target for security threats and a focal point for regulatory scrutiny. Navigating complex legal frameworks like GDPR, HIPAA, and CCPA while moving data between systems introduces significant risk. A failure to secure data in transit or at rest can lead to catastrophic data breaches, severe financial penalties, and irreversible reputational damage.

Consider a global e-commerce company integrating customer data from its European and North American operations. The integration pipeline must adhere to GDPR's strict consent and data transfer rules for EU customers while simultaneously complying with CCPA for California residents. Similarly, a healthcare provider building an integrated patient portal must ensure that every data point moved between its EHR, billing, and lab systems is fully compliant with HIPAA’s stringent privacy and security rules. These regulations dictate how data is collected, processed, stored, and even deleted, making compliance a core component of any integration strategy.

The Impact of Non-Compliance

Overlooking security and privacy is not an option. A single breach during data movement can expose sensitive customer information or proprietary business data, leading to direct financial loss and legal action. Regulatory bodies can impose fines that reach millions of dollars or a percentage of global revenue. Beyond the financial cost, a public breach erodes customer trust, which can be far more damaging and difficult to recover from.

Key Insight: Security and compliance are not afterthoughts to be addressed post-integration; they must be foundational design principles. Treating these requirements as a checkbox exercise is a recipe for failure, as regulations are complex, constantly evolving, and unforgiving.

How to Integrate Data Securely

A proactive, multi-layered security strategy is essential for mitigating risks associated with data integration problems.

- Implement End-to-End Encryption: Ensure all data is encrypted both in transit (using protocols like TLS) and at rest (using database or file-level encryption). This is the baseline defense against unauthorized access.

- Enforce Strict Access Controls: Use Role-Based Access Control (RBAC) to ensure that users and systems can only access the minimum data necessary to perform their functions. The principle of least privilege should be applied rigorously.

- Anonymize and Mask Data: For development, testing, and analytics, use data masking or tokenization techniques to obfuscate personally identifiable information (PII). This reduces the risk of exposing sensitive data in non-production environments.

- Maintain Comprehensive Audit Trails: Log all access, modification, and movement of data. These logs are crucial for monitoring for suspicious activity, troubleshooting issues, and demonstrating compliance during an audit. This comprehensive approach extends to the entire data lifecycle, including critical steps like protecting sensitive data during electronics recycling to prevent breaches even after hardware is decommissioned.
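
As a small illustration of the masking and tokenization bullet, the sketch below uses a keyed hash from Python's standard library. The key, the field names, and the 16-character truncation are assumptions for demonstration only; real deployments typically load keys from a secrets manager and may use a dedicated tokenization service.

```python
import hashlib
import hmac

# Assumption: a demo key. In practice it would come from a secrets manager.
SECRET_KEY = b"demo-key-change-me"

def tokenize(value: str) -> str:
    """Deterministic keyed token: equal inputs give equal tokens, so joins still work."""
    digest = hmac.new(SECRET_KEY, value.encode("utf-8"), hashlib.sha256)
    return digest.hexdigest()[:16]

def mask_email(email: str) -> str:
    """Keep the first character and the domain, hide the rest of the local part."""
    local, _, domain = email.partition("@")
    return local[:1] + "***@" + domain

row = {"email": "jane.doe@example.com", "ssn": "123-45-6789", "plan": "gold"}
safe_row = {
    "email": mask_email(row["email"]),
    "ssn": tokenize(row["ssn"]),
    "plan": row["plan"],  # non-sensitive fields pass through unchanged
}
print(safe_row["email"])  # j***@example.com
```

Keyed tokens like these are a form of pseudonymization rather than full anonymization: anyone holding the key can recompute them, so the output may still count as personal data under regulations such as GDPR.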

7. Data Volume and Storage Management

The exponential growth of data is a double-edged sword. While it offers unprecedented opportunities for insight, it presents one of the most significant problems with data integration: managing sheer volume. Organizations now grapple with petabytes of information from IoT devices, user interactions, and transactional systems, making the storage, retrieval, and processing of this data a monumental infrastructure challenge. Inefficient management can lead to soaring costs, slow performance, and complex compliance hurdles.

A clear example is a global weather forecasting service that ingests terabytes of satellite imagery, sensor readings, and historical climate data daily. Integrating this constant influx into a unified analytical model requires an immensely scalable and performant storage architecture. Similarly, financial institutions must archive decades of transaction data for regulatory compliance, a task that becomes exponentially more difficult as data volumes grow. Without a robust strategy, the integration process itself can become a bottleneck, unable to handle the load.

The Impact of Unmanaged Data Volume

Failing to manage data volume effectively leads to severe operational and financial consequences. Storage costs can spiral out of control, consuming IT budgets that could be allocated to innovation. Integration pipelines can slow to a crawl, delaying the delivery of time-sensitive insights to business users. Furthermore, navigating vast, unorganized data stores for compliance audits or data governance checks becomes a near-impossible task, increasing organizational risk.

Key Insight: Data volume is not just a storage problem; it's a velocity and accessibility problem. The challenge lies in building an integration framework that can ingest, process, and serve massive datasets without compromising on speed, cost-efficiency, or governance.

How to Manage Data at Scale

Effectively managing large-scale data requires a proactive and multi-faceted approach focused on efficiency and lifecycle management.

- Implement Tiered Storage: Classify data based on access frequency and performance requirements. Use high-performance "hot" storage for frequently accessed data, cost-effective "warm" storage for less frequent access, and low-cost archival "cold" storage for long-term retention.

- Leverage Compression and Partitioning: Apply data compression techniques to reduce the physical storage footprint. Partition large datasets based on logical keys (like date or region) to significantly speed up query performance during and after integration.

- Adopt Cloud-Native Solutions: Utilize the elasticity of cloud storage services like Amazon S3 or Google Cloud Storage. These platforms offer virtually limitless scalability and pay-as-you-go pricing, allowing you to align storage costs directly with usage and avoid over-provisioning expensive on-premises hardware.
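
The tiering and compression bullets can be sketched as follows. The 30-day and 365-day thresholds and the `storage_tier` function are hypothetical; actual cutoffs depend on access patterns and on the pricing of the storage classes involved.

```python
import gzip
from datetime import date

def storage_tier(last_accessed: date, today: date,
                 hot_days: int = 30, warm_days: int = 365) -> str:
    """Classify a dataset by days since last access (thresholds are assumptions)."""
    age = (today - last_accessed).days
    if age <= hot_days:
        return "hot"
    if age <= warm_days:
        return "warm"
    return "cold"

today = date(2025, 1, 1)
print(storage_tier(date(2024, 12, 20), today))  # hot
print(storage_tier(date(2024, 6, 1), today))    # warm
print(storage_tier(date(2020, 1, 1), today))    # cold

# Repetitive data, such as sensor logs, tends to compress well.
payload = b"sensor_reading,21.5\n" * 1000
compressed = gzip.compress(payload)
print(len(payload), len(compressed))
```

Cloud object stores generally let you express rules like these as lifecycle policies, so the classification does not have to run in application code.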

8. Maintaining Data Lineage and Governance

As data moves and transforms across complex pipelines, tracking its journey from origin to destination is one of the most critical yet overlooked problems with data integration. This practice, known as data lineage, provides a complete audit trail of the data's lifecycle. Without clear lineage, organizations struggle to ensure regulatory compliance, troubleshoot data quality issues, or trust the outputs of their analytics, undermining effective data governance.

Consider a financial institution preparing a report for a regulatory body like the SEC. The regulator demands proof that the figures in the report are derived directly from validated source transactions. Without automated data lineage, auditors must manually trace data through countless ETL jobs, databases, and spreadsheets, a process that is both error-prone and incredibly time-consuming. Similarly, a BI analyst in a retail company may find a discrepancy in a sales report. Without lineage, identifying which transformation step or source system introduced the error is like searching for a needle in a haystack.

The Impact of Opaque Data Flows

Poor data lineage and governance create significant business and operational risks. It makes it nearly impossible to perform impact analysis; for instance, you can't determine which downstream reports or applications will be affected by a change to a source system schema. This opacity also erodes trust in data, as stakeholders cannot verify the accuracy or origin of the information they use for critical decision-making, leading to a culture of data skepticism.

Key Insight: Data lineage is not just a technical mapping exercise; it is the foundation of data trust and accountability. It transforms data from a mysterious black box into a transparent, auditable asset that the entire organization can rely on.

How to Establish Strong Lineage and Governance

Building a robust data lineage framework requires a combination of automated tools and disciplined processes. It is essential for managing the complexity inherent in modern data integration.

- Implement Automated Lineage Tools: Leverage platforms like Apache Atlas, Collibra, or Alation that automatically scan data pipelines, databases, and BI tools to parse code and metadata. These tools build and visualize end-to-end lineage graphs, significantly reducing manual effort.

- Document All Transformations: Maintain a centralized data catalog or business glossary that clearly defines all business rules and transformation logic applied during the integration process. This context is crucial for both technical and non-technical users to understand how data evolves.

- Embed Governance into Workflows: Integrate data governance checks directly into your CI/CD pipelines. This includes validating that new integration jobs have proper documentation, ownership is assigned, and lineage is being captured before they are deployed to production. This proactive approach prevents governance gaps from forming.
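
At its core, lineage is a directed graph, and impact analysis is a traversal of it. The sketch below uses a hand-written, hypothetical `LINEAGE` mapping; lineage tools derive this graph automatically from pipeline code and metadata.

```python
from collections import deque

# Hypothetical lineage edges: each asset maps to the assets built directly from it.
LINEAGE = {
    "crm.customers": ["staging.customers"],
    "erp.orders": ["staging.orders"],
    "staging.customers": ["warehouse.sales_fact"],
    "staging.orders": ["warehouse.sales_fact"],
    "warehouse.sales_fact": ["bi.sales_dashboard", "ml.churn_features"],
}

def downstream(asset: str) -> list[str]:
    """Breadth-first walk returning every asset affected by a change to `asset`."""
    seen, queue, order = {asset}, deque([asset]), []
    while queue:
        for child in LINEAGE.get(queue.popleft(), []):
            if child not in seen:
                seen.add(child)
                order.append(child)
                queue.append(child)
    return order

print(downstream("erp.orders"))
# ['staging.orders', 'warehouse.sales_fact', 'bi.sales_dashboard', 'ml.churn_features']
```

Reversing the edges gives the opposite question: which sources fed a given report. That is the trace an auditor or an analyst chasing a discrepancy would need.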

9. Change Management and System Evolution

One of the most dynamic and often underestimated problems with data integration is managing constant change. Source systems are not static; they evolve with schema updates, new fields, API modifications, and shifting business logic. An integration process built for today’s system can easily break tomorrow, causing data pipelines to fail and disrupting critical downstream operations.

A clear example is an e-commerce platform that integrates with a third-party payment gateway. If the gateway updates its API to include new security protocols or transaction fields, the existing integration could fail, preventing the processing of sales. Similarly, a healthcare system might need to accommodate a new lab result format from a partner clinic. Without a robust change management strategy, this evolution could disrupt the flow of vital patient information, impacting care quality and operational continuity.

The Impact of Unmanaged Evolution

Failing to account for system evolution turns data integration pipelines into fragile liabilities. Unexpected changes can lead to silent data corruption, where incorrect or incomplete data flows into analytics platforms, skewing reports and leading to flawed business decisions. It also creates a high maintenance burden, forcing engineering teams into a reactive cycle of fixing broken connections rather than focusing on strategic initiatives.

Key Insight: Effective data integration is not a one-time project but a continuous process of adaptation. The architecture must be designed with the assumption that source systems will change, building resilience and flexibility into its core.

How to Build Adaptive Integrations

Creating resilient data pipelines requires a proactive approach to managing change, focusing on both technology and process.

- Implement API Versioning and Contract Testing: Enforce a strict API versioning strategy (e.g., in the URL or headers) to manage changes gracefully. Use contract testing to automatically verify that integrations adhere to agreed-upon data structures, catching breaking changes before they reach production.

- Establish a Change Control Process: Coordinate with source system owners to establish clear communication channels and change windows. Document all modifications in version control and maintain a shared calendar of planned updates to prevent surprises.

- Use Gradual Deployment Strategies: Mitigate risk by adopting deployment patterns like blue-green or canary releases. These techniques allow you to roll out changes to a small subset of users or infrastructure first, enabling you to validate the impact before a full-scale deployment and providing an immediate rollback path if issues arise.
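
A contract test can be as simple as checking that a provider's payload still contains the fields and types the integration relies on. The contract and payloads below are hypothetical and do not reflect any real payment gateway's API.

```python
# Hypothetical contract: the fields and types this integration depends on.
PAYMENT_CONTRACT = {"transaction_id": str, "amount": float, "currency": str}

def contract_violations(payload: dict, contract: dict) -> list[str]:
    """List every way the payload breaks the contract (empty list means OK)."""
    problems = []
    for field, expected_type in contract.items():
        if field not in payload:
            problems.append(f"missing field: {field}")
        elif not isinstance(payload[field], expected_type):
            problems.append(
                f"wrong type for {field}: expected {expected_type.__name__}"
            )
    return problems

v1 = {"transaction_id": "t-1", "amount": 19.99, "currency": "EUR"}
# A newer payload: adds a field (harmless) but changes amount to a string (breaking).
v2 = {"transaction_id": "t-1", "amount": "19.99", "currency": "EUR", "three_ds": True}

print(contract_violations(v1, PAYMENT_CONTRACT))  # []
print(contract_violations(v2, PAYMENT_CONTRACT))
# ['wrong type for amount: expected float']
```

Extra fields are deliberately tolerated here, on the assumption that additive changes are non-breaking. Run against sample payloads in CI, a check like this flags a breaking change before it reaches production.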

10. Cost and Resource Constraints

A significant and often underestimated problem with data integration is the substantial investment required. These projects demand not only expensive software and infrastructure but also highly specialized talent, leading to high upfront costs and significant ongoing operational expenses. Budget limitations often force organizations to make difficult trade-offs that can compromise the quality, scope, or long-term viability of their integration architecture.

For example, a mid-market e-commerce company may find enterprise-grade integration platforms like Informatica or MuleSoft prohibitively expensive. As a result, they might opt for a less mature open-source tool that requires more custom development and specialized skills, shifting the cost from licensing to payroll and maintenance. Similarly, a startup might build fragile, point-to-point custom integrations to save money initially, only to find them unscalable and expensive to maintain as the business grows.

The Impact of Budgetary Limitations

Cost and resource constraints directly influence an integration project's success. When budgets are tight, teams may be forced to cut corners on data quality checks, defer necessary infrastructure upgrades, or understaff the project. This leads to the accumulation of technical debt, where short-term savings create long-term maintenance burdens, performance issues, and a higher total cost of ownership. The project may ultimately fail to deliver the expected ROI, creating a cycle of underinvestment.

Key Insight: Treating data integration as a one-time capital expense is a critical mistake. It is an ongoing operational commitment that requires sustained investment in tools, talent, and maintenance to deliver continuous business value.

How to Manage Integration Costs Effectively

A strategic approach can help organizations navigate budget limitations without sacrificing the integrity of their data integration efforts.

- Start with a Phased Implementation: Instead of a "big bang" approach, begin with a limited-scope proof-of-concept (POC) to demonstrate value. Use early wins to build a strong business case and secure funding for subsequent phases.

- Evaluate Cloud-Native and iPaaS Solutions: Cloud services offer pay-as-you-go pricing models that convert large capital expenditures into predictable operational costs. Integration Platform-as-a-Service (iPaaS) solutions can further reduce the burden by managing infrastructure and providing pre-built connectors.

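- Leverage Open-Source Alternatives Strategically: Tools like Apache NiFi can be powerful, cost-effective alternatives to enterprise platforms, provided the organization has or can develop the necessary in-house expertise to manage them. Talend Open Studio was long a popular option in this category, but it was discontinued in early 2024, so confirm a tool's current support status before committing to it.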

Top 10 Data Integration Challenges Compared

| Item | Implementation complexity | Resource requirements | Expected outcomes | Ideal use cases | Key advantages |
| --- | --- | --- | --- | --- | --- |
| Data Silos and Legacy System Fragmentation | Low-to-moderate to maintain; high to integrate/modernize | Moderate short-term; high for integration (middleware, migration teams) | Continued departmental isolation and redundant data until consolidated | Organizations with entrenched legacy systems or needing short-term stability | Departmental control; minimal immediate disruption |
| Data Quality and Consistency Issues | High: profiling, cleansing, MDM required | High (tools, data stewards, remediation effort) | Improved trust and analytics after remediation; initial delays/costs | Data consolidation, master data initiatives, reporting accuracy efforts | Reveals issues early; enables governance and cleaner analytics |
| Schema Mapping and Semantic Heterogeneity | High: extensive manual mapping and semantic modeling | Moderate to high (schema tools, domain experts) | Accurate integrated schemas when resolved; reduced semantic errors | Mergers, multi-vendor integrations, cross-domain consolidation | Preserves system-specific optimizations; flexible mappings |
| Real-Time Data Synchronization | Very high: streaming, CDC, event-driven architecture | High infra and operational expertise (Kafka, brokers, monitoring) | Low-latency current data enabling real-time decisions and alerts | Finance, e-commerce inventory, ride-sharing, real-time analytics | Actionable, up-to-date insights; improved responsiveness |
| Scalability and Performance Bottlenecks | High: distributed processing and continuous tuning | High compute/storage and specialized engineers | Higher throughput and timelier analytics when optimized | High-volume analytics (social media, telco, retail at scale) | Enables growth; drives architectural and cost optimizations |
| Security, Privacy, and Compliance Requirements | High: encryption, access controls, auditing and policies | High legal/security expertise; compliance tooling and monitoring | Reduced breach risk, regulatory compliance, maintained trust | Healthcare, finance, companies processing PII or cross-border data | Protects data, reduces legal exposure, builds customer trust |
| Data Volume and Storage Management | Medium to high: lifecycle policies, tiering and archival | High storage costs; tools for cataloging, tiering, compression | Cost-optimized storage with retained analytical value | Streaming platforms, historical archives, analytics-heavy orgs | Enables historical analysis; scalable cost management |
| Maintaining Data Lineage and Governance | High: metadata capture, lineage tooling, process changes | Moderate to high (governance platforms, stewards, automation) | Improved auditability, faster root-cause analysis, compliance readiness | Regulated industries and complex ETL/transformation pipelines | Transparency, compliance support, impact analysis |
| Change Management and System Evolution | High: versioning, compatibility, CI/CD and testing | Moderate (CI/CD, contract testing, coordination across teams) | Safer system evolution with fewer integration breakages | Rapidly evolving products, API ecosystems, incremental migrations | Supports innovation with controlled risk and rollbacks |
| Cost and Resource Constraints | Variable: requires phased scope and trade-offs | High overall; choice between enterprise, OSS, cloud pay-as-you-go | Phased delivery; potential technical debt if underfunded | Budget-limited orgs, startups, mid-market prioritizing ROI | Encourages prioritization, cost-effective and incremental approaches |

From Challenge to Opportunity: A Modern Approach to Data Integration

The problems with data integration are not isolated technical hurdles but interconnected strategic challenges. As we've explored, they range from entrenched data silos and fragmented legacy systems to real-time synchronization and stringent governance, and each one compounds the others. Adding more resources to outdated ETL processes or custom scripts is not a viable solution: it only deepens technical debt and widens the gap between data generation and actionable insight.

The core takeaway from this extensive breakdown is that a fundamental mindset shift is required. Instead of viewing data integration as a reactive, batch-oriented janitorial task, forward-thinking organizations are treating it as a continuous, strategic, and real-time capability. The challenges we've detailed, such as schema heterogeneity, performance bottlenecks, and the immense pressure of data volume, are symptoms of a paradigm that struggles to keep pace with the velocity of modern business.

Key Takeaways and Strategic Next Steps

To transform your data integration strategy from a persistent bottleneck into a competitive advantage, focus on these critical pillars:

- Prioritize a Unified Data Fabric: The first step in dismantling data silos is to adopt a strategy that promotes interoperability. This doesn't mean a massive, one-time migration. Instead, focus on creating a connective tissue using modern integration platforms that can tap into both legacy systems and cloud-native applications, ensuring data is accessible and consistent across the enterprise.

- Embed Governance from the Start: Data quality, security, and lineage cannot be afterthoughts. Proactive governance must be built into your integration workflows. This involves implementing automated data quality checks, robust access controls, and transparent lineage tracking to ensure that data is not only available but also trustworthy, secure, and compliant from its source to its destination.

- Embrace Real-Time, Event-Driven Architectures: The demand for immediate insights makes real-time data synchronization a priority for a growing number of workloads. Moving away from brittle, high-latency batch processing toward event-driven models, such as Change Data Capture (CDC), is the most direct way to meet it. Log-based CDC reads changes from the database's transaction log instead of repeatedly querying tables, which minimizes the load on source systems, can reduce data latency to seconds, and enables a host of new use cases, from real-time analytics to responsive operational systems.
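To make the governance and event-driven pillars above more concrete, here is a minimal Python sketch of a consumer that applies CDC-style change events to an in-memory replica and quarantines rows that fail a quality check. Everything in it is an assumption for illustration: the `ChangeEvent` shape, the `c`/`u`/`d` operation codes, the `lsn` ordering field, and the email rule in `is_valid` are hypothetical and do not represent any particular platform's event format or API.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class ChangeEvent:
    """One row-level change in a hypothetical CDC feed (not any vendor's format)."""
    op: str                # "c" = create, "u" = update, "d" = delete
    key: int               # primary key of the changed row
    after: Optional[dict]  # row state after the change; None for deletes
    lsn: int               # position in the source change log


def is_valid(row: dict) -> bool:
    """Hypothetical quality rule: 'email' must be a string containing '@'."""
    email = row.get("email")
    return isinstance(email, str) and "@" in email


def apply_events(events, target, last_lsn=0):
    """Apply events to `target` in log order.

    Events at or below `last_lsn` are skipped, so replaying a batch is harmless.
    Returns the new high-water mark and the events that failed the quality check.
    """
    rejected = []
    for ev in sorted(events, key=lambda e: e.lsn):
        if ev.lsn <= last_lsn:
            continue                      # already applied on an earlier run
        if ev.op == "d":
            target.pop(ev.key, None)      # deleting a missing key is a no-op
        elif ev.op in ("c", "u"):
            if ev.after is not None and is_valid(ev.after):
                target[ev.key] = dict(ev.after)
            else:
                rejected.append(ev)       # quarantine instead of loading bad data
        else:
            raise ValueError(f"unknown operation: {ev.op!r}")
        last_lsn = ev.lsn
    return last_lsn, rejected


if __name__ == "__main__":
    batch = [
        ChangeEvent("c", 1, {"email": "ana@example.com"}, 101),
        ChangeEvent("c", 2, {"email": "not-an-email"}, 102),
        ChangeEvent("d", 1, None, 103),
    ]
    replica = {}
    mark, bad = apply_events(batch, replica)
    print(replica, mark, [e.lsn for e in bad])
```

Tracking a high-water mark is one simple way to make redelivered events safe to process twice; a production pipeline would persist that mark alongside the target and would likely route rejected events to a dead-letter store rather than an in-memory list.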

The True Value of Modernized Integration

Mastering these concepts unlocks far more than just cleaner data pipelines. It builds a resilient and agile data foundation that directly fuels business innovation. When your teams can trust the data and access it in real time, the organization can confidently pursue advanced analytics, develop sophisticated machine learning models, and create superior customer experiences. Automating complex integration tasks also frees engineering resources to focus on high-impact projects rather than constant maintenance and firefighting.

Ultimately, solving the persistent problems with data integration is about future-proofing your organization. It's about building the capacity to adapt, scale, and innovate in an increasingly data-driven world. By shifting from a reactive posture to a proactive, strategic approach, you transform a legacy challenge into your most powerful opportunity for growth and a decisive competitive edge.
