
Finding the Right Estuary Alternative for Your Data

Explore top Estuary alternative platforms for real-time data pipelines. Our guide compares performance, cost, and use cases to help you choose wisely.

When you’re looking for the best Estuary alternative, it’s usually because you need a more scalable architecture, predictable pricing, or broader connector support for your specific workloads. Platforms like Streamkap often enter the conversation when teams are searching for real-time Change Data Capture (CDC) without the high operational overhead or the steep costs that come with high-volume data streaming.

Why Teams Look for an Estuary Alternative

High-performing engineering teams don’t just switch data stacks because a tool is broken. They re-evaluate when their needs evolve beyond a platform’s original design. The search for an Estuary alternative is almost never about one missing feature. Instead, it’s a strategic move driven by core technical and business demands that pop up as data volume and complexity inevitably grow.

It’s a bit like managing vital ecosystems for economic growth. For example, coastal areas in the U.S., including natural estuaries, supported 69 million jobs and added $7.9 trillion to the GDP back in 2007. This just goes to show how critical it is to have the right infrastructure—whether natural or digital—to support massive operations. You can explore more EPA data on the economic impact of estuaries.

Common Catalysts for Change

Teams usually start shopping around when they run into friction points tied to growth. These aren’t sudden catastrophes but slow-burning issues that start to kill development velocity and drive up operational costs.

The key drivers often include:

- Hitting Scalability Ceilings: The initial architecture starts to buckle under increased data throughput, failing to maintain low latency. This leads to processing backlogs and stale data in downstream systems.

- Unpredictable Cost Structures: Usage-based pricing can get out of hand at scale. It makes budgeting a nightmare and inflates the total cost of ownership (TCO) beyond what’s justifiable.

- Connector Gaps and Limitations: A team might need a specific high-performance, log-based CDC connector that isn’t available or well-supported, forcing them to use less efficient, query-based workarounds.

Mature data teams don’t just want a replacement; they’re looking for an upgrade. The goal isn’t just to fix today’s problems but to find a solution with enough architectural runway for the next three to five years of their data strategy.

Adopting a Problem-First Mindset

Picking the right Estuary alternative means going deeper than a simple feature-for-feature checklist. The best evaluations start by clearly defining the problem you’re trying to solve. Are you fighting high latency in your real-time analytics dashboards? Or is it the operational grind of managing complex transformations in-flight that’s slowing you down?

Getting to the root of these challenges is essential. Many organizations find that their nagging pipeline issues are actually symptoms of a deeper architectural mismatch. We cover this in our guide to solving common data integration issues. By adopting this problem-first mindset, you can evaluate how each alternative’s architecture directly solves your specific pain points. This ensures your next platform is a true strategic fit, not just a temporary patch.

This guide will give you a clear framework to make that choice with confidence.

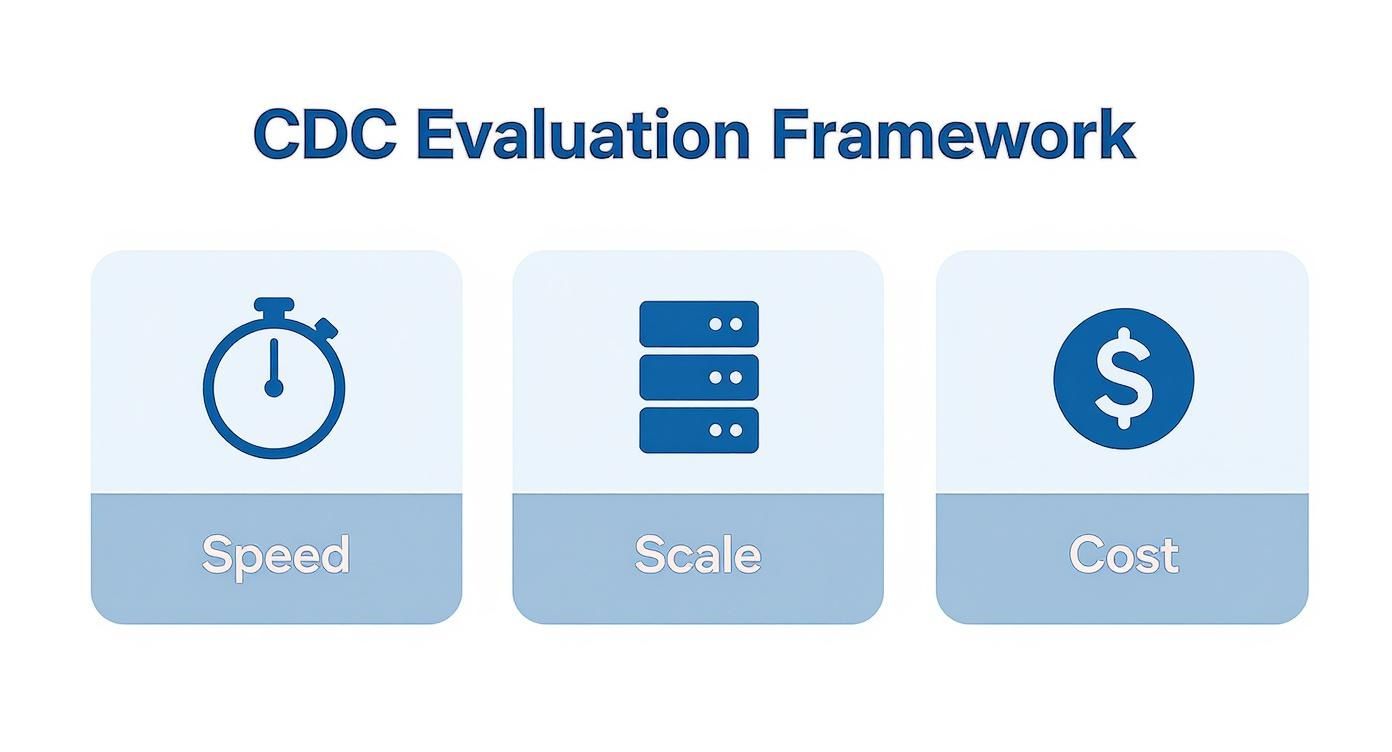

A Practical Framework for Evaluating CDC Platforms

Choosing an Estuary alternative isn’t just about picking a replacement; it’s about making a strategic decision for your data architecture. Without a clear evaluation method, you can easily get bogged down in marketing hype and feature lists that don’t tell the whole story. What you need is a framework that stress-tests a platform’s real-world capabilities.

I’ve built this framework around five pillars that I’ve seen make or break data projects. These aren’t just abstract concepts—they directly impact your team’s efficiency, your budget, and your ability to deliver the real-time data your business depends on. Use these criteria to cut through the noise and make a choice you won’t regret.

Performance and Latency

Let’s be honest, speed is the whole point of a modern CDC pipeline. But “real-time” is a term that gets thrown around a lot. What really matters is end-to-end latency—the actual time it takes for a row change in your source database to become a usable event in your destination.

For anything business-critical, sub-second latency is the gold standard. Hitting that mark requires an architecture built from the ground up for low-overhead capture and high-speed processing. Anything slower means your analytics dashboards are stale and your event-driven services are reacting to the past.
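One way to make "end-to-end latency" concrete is to measure it per event and look at percentiles rather than averages. The sketch below assumes you can record two timestamps for each change: when it was committed at the source and when it became usable at the destination. The function names and sample values are hypothetical.

```python
import math
from datetime import datetime, timedelta


def end_to_end_latencies_ms(events):
    """Convert (committed_at, visible_at) datetime pairs into latencies in ms."""
    one_ms = timedelta(milliseconds=1)
    return [(visible - committed) / one_ms for committed, visible in events]


def latency_percentile(samples_ms, pct):
    """Nearest-rank percentile of a list of latencies in milliseconds."""
    if not samples_ms:
        raise ValueError("no samples")
    ordered = sorted(samples_ms)
    rank = max(1, math.ceil(pct / 100 * len(ordered)))
    return ordered[rank - 1]


# Hypothetical sample: four fast events and one slow outlier.
t0 = datetime(2024, 1, 1, 12, 0, 0)
events = [(t0, t0 + timedelta(milliseconds=ms)) for ms in (120, 180, 250, 300, 2400)]

latencies = end_to_end_latencies_ms(events)
p50 = latency_percentile(latencies, 50)
p99 = latency_percentile(latencies, 99)
print(f"p50={p50:.0f} ms, p99={p99:.0f} ms")
```

The median here looks comfortably sub-second while the tail does not, which is why a tail percentile is usually the number worth putting in a requirement.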

Scalability and Reliability

A platform that hums along with a few gigabytes of data can fall apart when you throw a few terabytes at it. Real scalability isn’t just about handling bigger volumes; it’s about handling them predictably, without performance cratering. You need to look under the hood. Does the platform use a durable backbone like Apache Kafka to buffer high-velocity streams?

Reliability is the other half of that equation. Your data pipelines are not side projects; they’re mission-critical infrastructure. Look for hard guarantees:

- Exactly-once processing: This is non-negotiable. It ensures data is never lost or duplicated, even when things go wrong.

- Automated recovery: The system needs to recover from disruptions on its own, without a 3 a.m. page to your on-call engineer.

- Transactional integrity: Events must arrive in the correct order, preserving the consistency of what happened at the source.
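To see what these guarantees mean on the consuming side, here is a minimal sketch of an idempotent sink, one common way to get exactly-once effects on top of at-least-once delivery. It is not any vendor's implementation. It assumes each change event carries a monotonically increasing log position and that events arrive in order; `ChangeEvent` and `IdempotentSink` are hypothetical names.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class ChangeEvent:
    position: int            # assumed: monotonically increasing log position
    key: str
    value: Optional[dict]    # None represents a delete


class IdempotentSink:
    """Applies each log position at most once, so redelivery is harmless."""

    def __init__(self):
        self.rows = {}
        self.last_applied = -1

    def apply(self, event):
        # Anything at or below the high-water mark was already applied.
        if event.position <= self.last_applied:
            return False
        if event.value is None:
            self.rows.pop(event.key, None)
        else:
            self.rows[event.key] = event.value
        self.last_applied = event.position
        return True


e1 = ChangeEvent(1, "user-1", {"plan": "free"})
e2 = ChangeEvent(2, "user-1", {"plan": "pro"})
e3 = ChangeEvent(3, "user-2", {"plan": "free"})

sink = IdempotentSink()
# Simulate a failure and replay: e1 and e2 are delivered twice.
results = [sink.apply(e) for e in (e1, e2, e1, e2, e3)]
```

After the replay, the sink holds the same rows it would have held if every event had been delivered exactly once, and in source order.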

Developer Experience

A powerful platform that’s a nightmare to use is just expensive shelfware. A great developer experience is all about reducing friction. Think about the entire lifecycle—from spinning up a new pipeline to monitoring and troubleshooting it months later.

The best platforms empower engineers instead of burdening them. An intuitive UI, clear monitoring dashboards, and a CLI for automation are not just nice-to-haves; they are essential for improving productivity and reducing the mean time to resolution (MTTR) when issues arise.

Connector Depth and Quality

Not all connectors are created equal. Just seeing your database logo on a vendor’s website means nothing. You have to dig deeper. A log-based CDC source connector, for example, is almost always superior to a query-based one because it doesn’t hammer your production database.

Assessing a platform’s ability to handle robust system integrations is a huge part of the evaluation. How a platform deals with schema evolution is another massive differentiator. You can learn more about the different kinds of change data capture tools and why these details matter so much.

Total Cost of Ownership

Finally, don’t be fooled by the sticker price. It’s just one piece of a much larger financial puzzle. The Total Cost of Ownership (TCO) is what you should really be calculating.

Make sure you account for all of it:

- Subscription or usage fees paid to the vendor.

- Infrastructure costs for compute, storage, and networking.

- Engineering time spent on setup, maintenance, and building workarounds for missing features.

- Operational overhead tied to managing the system and keeping it online.

Often, a platform with a lower initial price tag ends up costing far more in engineering hours just to keep it running at scale. A transparent, predictable pricing model is a must for any kind of long-term planning.
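The TCO components listed above can be put into a small calculator. Every figure below is a made-up placeholder, not a quote from any vendor, and `PlatformCost` is a hypothetical helper; substitute your own fees, infrastructure bills, and loaded engineering rate.

```python
from dataclasses import dataclass


@dataclass
class PlatformCost:
    name: str
    monthly_vendor_fee: float          # subscription or usage fees
    monthly_infrastructure: float      # compute, storage, networking
    monthly_engineering_hours: float   # setup, maintenance, workarounds, on-call

    def annual_tco(self, hourly_rate):
        """Annual cost including engineering time at the given hourly rate."""
        monthly = (
            self.monthly_vendor_fee
            + self.monthly_infrastructure
            + self.monthly_engineering_hours * hourly_rate
        )
        return 12 * monthly


# Hypothetical inputs: a low sticker price with heavy upkeep vs. a pricier managed option.
low_sticker = PlatformCost("low sticker price", 1000, 500, 60)
managed = PlatformCost("fully managed", 3000, 500, 5)

hourly_rate = 100  # assumed loaded engineering cost per hour
low_sticker_tco = low_sticker.annual_tco(hourly_rate)
managed_tco = managed.annual_tco(hourly_rate)
print(low_sticker_tco, managed_tco)
```

With these placeholder inputs the engineering hours dominate the total, which is the pattern to look for when you plug in real numbers.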

Comparing Estuary Against Top Alternatives

With a solid framework in place, we can now dive into a real-world, head-to-head comparison of Estuary and its main competitors. This isn’t about picking a single “best” tool. Instead, we’ll get into the weeds of their architectural decisions and see how those choices play out in production environments. We’re going to focus on the nuances that actually matter for high-stakes data pipelines.

We’ll put Estuary, Streamkap, Fivetran, and Airbyte under the microscope, evaluating them against our core pillars. That means looking past the marketing jargon to see how each one truly handles Change Data Capture (CDC), data transformations, and what kind of stream processing engine they’re built on. These are the foundational design choices that will directly impact your costs, engineering overhead, and whether your system can scale when you need it to.

The framework guiding this comparison boils down to three critical metrics: speed, scale, and cost.

These are the essential trade-offs that data teams have to juggle when selecting a platform for real-time data integration.

Architectural Philosophy and Its Impact

A platform’s fundamental architecture is its DNA; it sets the ceiling for performance and dictates how you’ll operate it day-to-day. This is where you’ll find the biggest differences between Estuary and any potential Estuary alternative.

Estuary is built around its own proprietary, real-time data lakehouse, which uses “collections” to store both streaming and batch data. It’s an interesting design that lets you capture data once and then replay it to different destinations, which is a powerful feature for complex data distribution. The trade-off? You’re now managing and learning a proprietary storage layer.

On the other hand, platforms like Streamkap take a different path, building their architecture on a managed Kafka and Flink backbone. By using open-source standards, they deliver a durable, high-throughput streaming layer without forcing the end-user to wrestle with the underlying infrastructure. This approach almost always leads to lower end-to-end latency and more predictable scaling, because Kafka has been battle-tested for years at massive volumes.

A solution built on managed open standards like Kafka provides a level of transparency, performance, and community support that proprietary systems often struggle to match, especially for enterprise-grade, real-time use cases.

Then you have Fivetran and Airbyte, which represent the more traditional ELT (Extract-Load-Transform) world. Their architectures are primarily built to shuttle data from a source to a warehouse in batches, even if they run those batches very frequently. It works great for historical analytics, but this design often can’t hit the sub-second latency that true real-time operational workloads demand. A deep dive shows just how different their architectures are when you compare Streamkap vs Fivetran, especially in their handling of CDC and data delivery.

Change Data Capture Methodology

How a platform actually captures changes from a database is a massive differentiator. There are really only two games in town: log-based CDC and query-based CDC.

- Log-Based CDC: This is the gold standard. It reads directly from the database’s transaction log (like the binlog in MySQL or the write-ahead log in PostgreSQL). It’s incredibly efficient, captures every single change with almost zero impact on the source database, and delivers the lowest latency possible.

- Query-Based CDC: This method involves repeatedly polling the source database with queries to look for new or updated rows, typically using a timestamp or version column. This puts extra load on your production database, misses intermediate changes when a row is updated more than once between polls, typically cannot see hard deletes, and adds latency of up to a full polling interval.

Both Estuary and Streamkap are all-in on log-based CDC, which is hands-down the better method for any real-time use case. Fivetran and Airbyte offer a mixed bag; some of their connectors are log-based, but many still fall back on less efficient query-based methods. For a high-volume transactional database, a log-based approach is simply non-negotiable if you want to avoid dragging your application’s performance down.
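The difference between the two capture methods is easy to show with a toy simulation. This is a simplified in-memory model, not how any of these platforms is implemented. The "log" is a plain list of operations, and the polling function mimics a `WHERE updated_at > last_poll` query against the current table state.

```python
# Each operation: (timestamp, operation, key, value)
ops = [
    (1, "insert", "order-1", "created"),
    (2, "update", "order-1", "paid"),
    (3, "update", "order-1", "shipped"),
    (4, "insert", "order-2", "created"),
    (5, "delete", "order-2", None),
]


def log_based_capture(ops):
    """Reading the transaction log yields every change, in commit order."""
    return [(op, key, value) for _, op, key, value in ops]


def query_based_capture(ops, poll_times):
    """Polling only sees rows that exist at poll time and changed since the last poll."""
    table = {}       # key -> (value, updated_at)
    captured = []
    pending = list(ops)
    last_poll = 0
    for now in poll_times:
        # Apply everything that happened at the source up to this poll.
        while pending and pending[0][0] <= now:
            ts, op, key, value = pending.pop(0)
            if op == "delete":
                table.pop(key, None)
            else:
                table[key] = (value, ts)
        # Equivalent of: SELECT ... WHERE updated_at > last_poll
        for key, (value, updated_at) in sorted(table.items()):
            if updated_at > last_poll:
                captured.append(("upsert", key, value))
        last_poll = now
    return captured


from_log = log_based_capture(ops)
from_polling = query_based_capture(ops, poll_times=[6])
print(len(from_log), from_polling)
```

With a single poll after all five operations, the polling approach reports only the final state of `order-1`. The intermediate statuses and the entire life of `order-2` never reach the destination.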

The following table breaks down these architectural differences side-by-side, offering a clear view of how each platform stacks up against our evaluation criteria.

Head-to-Head CDC Platform Comparison

| Evaluation Criteria | Estuary Flow | Streamkap | Fivetran | Airbyte (Cloud) |
| --- | --- | --- | --- | --- |
| Core Architecture | Proprietary real-time lakehouse | Managed Kafka & Flink (Open Source) | Batch-based ELT | Batch-based ELT |
| Primary Use Case | Real-time ETL & data distribution | Real-time streaming & operational analytics | Historical analytics & BI reporting | General-purpose data movement |
| CDC Method | Log-based | Log-based | Mixed (Log and Query) | Mixed (Log and Query) |
| Latency | Low (sub-second possible) | Very Low (sub-second standard) | High (minutes) | High (minutes) |
| Scalability | Good, tied to proprietary system | Excellent, built on Kafka | Limited by batch processing | Limited by batch processing |
| Transformations | In-flight (ETL) | Post-load (ELT) | Post-load (ELT) | Post-load (ELT) |
| Operational Burden | Moderate (manage proprietary layer) | Low (fully managed) | Very Low (fully managed) | Moderate (connector management) |
| Ideal For | Teams needing a unified streaming/batch system with multi-destination replay. | Enterprise-grade, real-time pipelines for operational analytics and event-driven apps. | Business intelligence teams focused on warehousing historical data for reporting. | Teams needing a wide variety of connectors for less time-sensitive data movement. |

This comparison highlights that the “right” choice is entirely dependent on your specific performance requirements and operational model.

Data Transformation In-Flight vs Post-Load

Another critical point of difference is where—and how—you transform your data. Estuary lets you run transformations using SQL or TypeScript directly on its platform before the data ever reaches its destination. This is a classic real-time ETL (Extract, Transform, Load) pattern.

This can be handy for quick data cleaning or simple enrichment jobs. The problem is, trying to run complex transformations in-flight can add latency and create a serious bottleneck in your pipeline. It also ties your transformation logic directly to the pipeline itself, making it a pain to manage and version control.

The modern approach, favored by ELT tools and platforms like Streamkap, is to get the raw, untouched data into your destination (like Snowflake or BigQuery) as fast as humanly possible. All the transformation work is then handled after the data has landed, usually with powerful tools like dbt. This decouples extraction and loading from transformation, which makes for more reliable pipelines and lets your data team use the full power of the data warehouse for sophisticated data modeling.

Choosing the right evaluation framework for a data platform isn’t so different from how companies in other industries make critical software decisions. For a look at how platforms are vetted in specialized fields, you can explore discussions around the top Drata alternatives and competitors in the compliance automation space.

Just as a changing climate can affect a natural ecosystem, shifting data needs can force a change in technology. For example, climate change is expected to dramatically alter estuarine environments. Salt intrusion is projected to increase in a staggering 89% of studied estuaries worldwide, with sea-level rise being the primary culprit—contributing roughly twice as much to this issue as reduced river flow. This highlights how powerful external forces can make you completely re-evaluate the systems you rely on.

Finding the Right Estuary Alternative for Your Use Case

Comparing platforms on paper is one thing, but the real test is how a tool holds up in the wild. The right platform for your business depends entirely on the problem you’re trying to solve. A killer feature for one team is often just noise for another.

Let’s move past the spec sheets and dig into four common scenarios. We’ll identify the best-fit platform for each and, more importantly, explain the why behind the choice. This is all about matching your specific needs to the right architecture.

Scenario 1: The High-Growth Startup

The Challenge: You’re a fast-growing startup, and you need to get your production PostgreSQL database replicated into Snowflake for real-time customer analytics. Your priorities are simple: lightning-fast data, effortless scaling as your user base explodes, and a setup that won’t require a dedicated data engineering team to babysit it.

The Recommendation: Streamkap is the clear winner here.

Its architecture, built on managed Kafka and Flink, was practically designed for the high-throughput, low-latency demands of a scaling startup. The log-based CDC for PostgreSQL means you get real-time data with almost no impact on your production database—a critical factor for maintaining app performance.

Best of all, it’s a fully managed service. This means your lean engineering team can stay focused on building your product, not wrestling with complex data infrastructure. The predictable pricing is also a huge plus, helping you avoid the surprise bills that can hamstring a growing business.

For startups, operational simplicity is everything. A managed platform like Streamkap abstracts away the immense complexity of running a real-time Kafka pipeline. You get enterprise-grade performance without needing an enterprise-sized team, letting a small group of engineers achieve massive results.

Scenario 2: The Large Enterprise

The Challenge: A large financial services firm needs to stream change data from several mission-critical Oracle databases. The goal is to feed real-time fraud detection algorithms and risk analytics models. The platform has to be bulletproof—rock-solid reliability, top-tier security, and the ability to handle massive transaction volumes without breaking a sweat.

The Recommendation: Streamkap or a self-hosted solution with Debezium/Kafka.

In the enterprise world, reliability and performance are table stakes. Streamkap delivers with its exactly-once processing guarantees and battle-tested Kafka backbone, ensuring zero data is lost or duplicated. It’s built to handle terabytes of data daily while keeping latency in the sub-second range, making it a powerful contender right out of the box.

However, some enterprises with deep in-house Kafka expertise and hyper-specific security protocols might choose to build their own solution using open-source tools like Debezium. This approach offers complete control but comes with a hefty operational price tag and a much higher total cost of ownership. For most enterprises, a managed service like Streamkap strikes the perfect balance of performance, reliability, and operational sanity.

Scenario 3: The Event-Driven Microservices Team

The Challenge: A data team is building out a suite of event-driven microservices that need to react instantly to database changes. Think about this: a new user signs up (an INSERT in the users table), and that single event needs to trigger a welcome email, update the CRM, and kick off an onboarding analytics flow—all at once.

The Recommendation: Streamkap or Estuary.

This is the classic streaming use case, and both platforms are strong fits. Streamkap shines by turning every database change into a clean, well-structured event on a Kafka topic. Your microservices can then subscribe to these topics independently, creating a beautifully decoupled and scalable architecture. It’s a textbook execution of using the database as a single source of truth for events.

Estuary also handles this scenario well with its “collections” architecture. It captures the data once and allows for multiple “materializations” to different downstream systems, achieving a similar outcome to Kafka’s pub/sub model. The decision here really boils down to your team’s preference: the industry-standard Kafka ecosystem (Streamkap) or the powerful, albeit proprietary, data lakehouse features of Estuary.
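The fan-out pattern in this scenario can be sketched with a tiny in-memory topic bus. This is only an illustration of the decoupling idea. A real Kafka deployment has consumers pull from topics independently through consumer groups, whereas this hypothetical `InMemoryTopicBus` pushes synchronously, and the topic name and handlers are invented for the example.

```python
from collections import defaultdict


class InMemoryTopicBus:
    """Toy stand-in for a topic that several independent services subscribe to."""

    def __init__(self):
        self._subscribers = defaultdict(list)

    def subscribe(self, topic, handler):
        self._subscribers[topic].append(handler)

    def publish(self, topic, event):
        # Every subscriber receives its own copy of the event.
        for handler in self._subscribers[topic]:
            handler(dict(event))


emails_sent, crm_updates, analytics_flows = [], [], []

bus = InMemoryTopicBus()
bus.subscribe("users", lambda e: emails_sent.append(e["after"]["email"]))
bus.subscribe("users", lambda e: crm_updates.append(e["after"]["id"]))
bus.subscribe("users", lambda e: analytics_flows.append(("onboarding", e["after"]["id"])))

# A single INSERT on the users table becomes one change event.
bus.publish("users", {"op": "insert", "after": {"id": 42, "email": "new.user@example.com"}})
```

Adding a fourth reaction to sign-ups means adding one more subscriber. Neither the producer nor the existing services need to change.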

Scenario 4: The Open-Source Adopter

The Challenge: Your company has a strong “open-source first” philosophy. You want the flexibility to customize pipelines and avoid vendor lock-in, but you also need a lifeline—the option for enterprise-grade support when things inevitably get complicated in production.

The Recommendation: Airbyte (Open-Source) with an eye toward their enterprise plan.

For any team that prizes ultimate control and customizability, starting with the open-source version of Airbyte makes a lot of sense. It boasts a huge library of connectors and a vibrant community for support. Your engineers can get their hands on the source code, build their own connectors, and deploy it all within your own infrastructure.

The real advantage here is the clear upgrade path. If the operational burden of managing the open-source version becomes too much, or if you hit a point where you need SLAs and dedicated support, you can smoothly transition to Airbyte’s commercial cloud or enterprise offerings. This strategy gives you maximum flexibility from the start with a built-in safety net for the future.

A Strategic Guide to Platform Migration

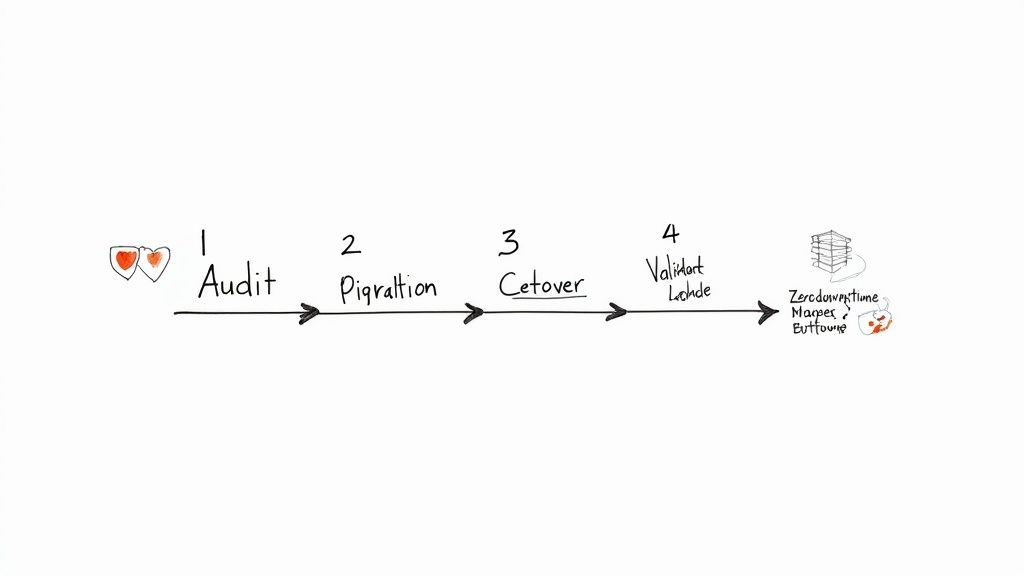

Let’s be honest: migrating your core data pipelines is a serious undertaking. Ripping out a system like Estuary and plugging in an Estuary alternative requires more than just technical know-how; it demands a solid, strategic plan. The real goal here isn’t just to switch platforms. It’s to do it so seamlessly that your downstream consumers never even notice. That means zero downtime and absolute data integrity.

This isn’t a simple lift-and-shift operation. Think of it as a full-scale production software release, complete with deep analysis, parallel testing, and a carefully managed cutover. By approaching it with this level of discipline, you can sidestep the common traps that often derail data projects.

Phase 1: Audit and Define Success

Before you write a single line of code or configure a new connector, you have to do the groundwork. Start with a comprehensive audit of your existing Estuary pipelines. This discovery phase is non-negotiable and sets the foundation for the entire project.

Get into the weeds and catalog every single pipeline. For each one, document:

- Source and Destination: Pinpoint every database, warehouse, and application in the chain.

- Data Volume and Velocity: Get hard numbers on how much data you’re moving and how fast it’s changing.

- Transformation Logic: Uncover any in-flight transformations happening inside Estuary. Don’t let hidden business logic bite you later.

- Downstream Dependencies: Map every single team, dashboard, or application that relies on this data. Who will scream if the data stops flowing?
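The audit items above map naturally onto a small inventory structure. The sketch below is one possible shape for it; the `PipelineRecord` fields and the sample pipelines are hypothetical and should be adapted to whatever your audit actually uncovers.

```python
from collections import defaultdict
from dataclasses import dataclass, field


@dataclass
class PipelineRecord:
    name: str
    source: str
    destination: str
    daily_volume_gb: float
    peak_events_per_second: int
    transformations: list = field(default_factory=list)       # in-flight logic to port
    downstream_consumers: list = field(default_factory=list)  # teams, dashboards, apps


def consumers_to_pipelines(inventory):
    """Invert the inventory: which pipelines does each consumer depend on?"""
    mapping = defaultdict(list)
    for pipeline in inventory:
        for consumer in pipeline.downstream_consumers:
            mapping[consumer].append(pipeline.name)
    return dict(mapping)


def pipelines_with_hidden_logic(inventory):
    """Pipelines whose in-flight transformations must be re-implemented elsewhere."""
    return [p.name for p in inventory if p.transformations]


inventory = [
    PipelineRecord("orders", "postgres.orders", "snowflake.raw.orders", 40.0, 1200,
                   transformations=["mask_email"],
                   downstream_consumers=["revenue-dashboard", "fraud-service"]),
    PipelineRecord("users", "postgres.users", "snowflake.raw.users", 5.0, 150,
                   downstream_consumers=["revenue-dashboard"]),
]

dependency_map = consumers_to_pipelines(inventory)
needs_porting = pipelines_with_hidden_logic(inventory)
```

The inverted map answers the "who will scream" question directly, and the second helper flags the pipelines where business logic lives inside the current platform.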

Once you have this map, you can define what “success” actually looks like. These aren’t vague aspirations; they are concrete, measurable criteria. For instance, a solid success metric might be: “The new pipeline must maintain end-to-end latency below 500 milliseconds for 99.9% of events, with zero data discrepancy between source and target over a 48-hour parallel run.”

This level of detail is your best defense against scope creep. It ensures everyone from engineering to product is aligned on what “done” truly means.
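A success criterion like the one quoted above is precise enough to turn into an automated check. This is a minimal sketch assuming you already collect per-event latencies and row counts from the parallel run; the function name and default thresholds simply mirror the example metric.

```python
def meets_success_criteria(latencies_ms, source_count, target_count,
                           threshold_ms=500.0, required_fraction=0.999):
    """True if enough events beat the latency threshold and row counts match."""
    if not latencies_ms:
        return False  # no evidence collected, so no sign-off
    within = sum(1 for latency in latencies_ms if latency < threshold_ms)
    latency_ok = within / len(latencies_ms) >= required_fraction
    counts_ok = source_count == target_count
    return latency_ok and counts_ok


# Hypothetical parallel-run samples.
good_run = [120.0] * 9995 + [900.0] * 5     # 99.95% of events under 500 ms
slow_run = [120.0] * 9900 + [900.0] * 100   # 99.0% of events under 500 ms

passed = meets_success_criteria(good_run, source_count=10_000, target_count=10_000)
failed_latency = meets_success_criteria(slow_run, source_count=10_000, target_count=10_000)
failed_counts = meets_success_criteria(good_run, source_count=10_000, target_count=9_998)
```

Row counts are only the coarsest form of "zero discrepancy"; content-level comparison is a separate check.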

Phase 2: Parallel Pipelines and Validation

The secret to a migration with zero downtime and zero data loss is running the old and new systems in parallel. This is where a platform like Streamkap really shines, as it lets you set up a completely new, independent pipeline from the exact same database source without disrupting your production Estuary environment.

This dual-pipeline approach is your safety net. It allows you to validate the new system’s performance, reliability, and accuracy against the old one in a live environment. You’re not guessing—you’re proving. You can compare record counts, run checksums, and monitor latency to confirm the new pipeline isn’t just working, but working perfectly.
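Comparing record counts and checksums between the two destinations can be done with an order-independent fingerprint. The sketch below assumes both result sets fit in memory as lists of dictionaries; for large tables you would more likely compute per-partition hashes inside the warehouse. The helper names are hypothetical.

```python
import hashlib
import json


def table_fingerprint(rows):
    """Return (row_count, checksum) that does not depend on row order."""
    digests = sorted(
        hashlib.sha256(
            json.dumps(row, sort_keys=True, default=str).encode("utf-8")
        ).hexdigest()
        for row in rows
    )
    combined = hashlib.sha256("".join(digests).encode("utf-8")).hexdigest()
    return len(digests), combined


def pipelines_match(old_rows, new_rows):
    """True when both pipelines delivered the same set of rows."""
    return table_fingerprint(old_rows) == table_fingerprint(new_rows)


old_pipeline_rows = [{"id": 1, "status": "paid"}, {"id": 2, "status": "shipped"}]
new_pipeline_rows = [{"id": 2, "status": "shipped"}, {"id": 1, "status": "paid"}]
drifted_rows = [{"id": 1, "status": "paid"}, {"id": 2, "status": "created"}]

same = pipelines_match(old_pipeline_rows, new_pipeline_rows)
different = pipelines_match(old_pipeline_rows, drifted_rows)
```

Sorting the per-row digests before combining them means the two destinations can return rows in any order and still compare equal.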

A migration strategy without a parallel validation phase is a high-stakes gamble. By running both systems simultaneously, you transform the cutover from a risky “big bang” event into a controlled, evidence-based decision.

This phase also forces you to tackle critical technical details head-on. You’ll need a plan for historical data backfills to fully seed the new destination. And you absolutely need a strategy for handling schema drift—because source tables will change, and both pipelines must handle those changes gracefully during the transition.
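Schema drift is easier to manage when it is detected explicitly. As a rough sketch, assuming you can snapshot each source table as a mapping of column name to type, a small diff function is enough to flag changes during the parallel run. The column names and types below are invented for illustration.

```python
def diff_schema(old, new):
    """Compare two {column: type} snapshots and report what changed."""
    added = {col: typ for col, typ in new.items() if col not in old}
    removed = {col: typ for col, typ in old.items() if col not in new}
    changed = {
        col: (old[col], new[col])
        for col in old
        if col in new and old[col] != new[col]
    }
    return {"added": added, "removed": removed, "changed": changed}


snapshot_monday = {"id": "bigint", "email": "text", "amount": "integer"}
snapshot_friday = {"id": "bigint", "email": "text", "amount": "numeric", "currency": "text"}

drift = diff_schema(snapshot_monday, snapshot_friday)
```

Running this against both pipelines' destination schemas after a source change shows quickly whether they handled it the same way.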

Phase 3: Phased Cutover and Decommission

Once your validation data proves the new pipeline meets every success criterion, it’s time to make the switch. But don’t just flip a giant lever. A phased cutover is always the smarter move.

Start small. Pick a single, non-critical downstream consumer and point it to the new data source. Watch it like a hawk. This isolates your test and minimizes the blast radius if anything goes wrong. Once you’ve confirmed its stability, you can methodically migrate other consumers, either one by one or in small, logical batches.
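The ordering described above can be written down as a simple plan. This sketch assumes you have assigned each downstream consumer a criticality score during the audit (lower means safer to move first); the scoring scale, names, and batch size are hypothetical.

```python
def plan_cutover(consumers, batch_size=2):
    """Return migration waves: one low-risk pilot first, then small batches."""
    ordered = sorted(consumers, key=lambda item: item[1])  # (name, criticality)
    if not ordered:
        return []
    waves = [[ordered[0][0]]]  # the single least-critical consumer goes first
    remaining = ordered[1:]
    for start in range(0, len(remaining), batch_size):
        waves.append([name for name, _ in remaining[start:start + batch_size]])
    return waves


consumers = [
    ("finance-dashboard", 3),
    ("internal-report", 1),
    ("fraud-service", 5),
    ("marketing-sync", 2),
]

waves = plan_cutover(consumers)
for number, wave in enumerate(waves, start=1):
    print(f"wave {number}: {', '.join(wave)}")
```

Each wave should only start once the previous one has been stable for whatever observation period you agreed on up front.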

Only when every single downstream dependency has been moved over—and the new system has been running flawlessly for a predetermined period—should you even think about decommissioning the old Estuary pipelines. This is a process of careful, systematic replacement. It’s a lot like how human development has reshaped natural environments; over the last 35 years, at least 250,000 acres of natural estuaries have been converted to urban and agricultural land. It underscores the need for planned transitions to avoid disrupting an established ecosystem, whether it’s digital or natural. You can learn more about the global transformation of estuaries and see the parallels for yourself.

Frequently Asked Questions

When you’re deep in the weeds evaluating a new data platform, you move past the marketing slides pretty quickly. The real questions are practical, technical, and focused on what will happen when the rubber meets the road. Here are the common questions we hear from engineering leaders when they’re considering a move away from Estuary.

How Does Latency in Streamkap Compare to Estuary?

The latency conversation really comes down to a difference in core architecture. Estuary is built around its own proprietary, real-time data lakehouse. It’s a powerful concept but adds an extra layer for data to move through, and performance depends entirely on how that specific layer is processing everything.

Streamkap, on the other hand, is built directly on a managed Apache Kafka and Apache Flink backbone. This stack was purpose-built for one thing: extremely low-latency, high-throughput streaming. By sticking to these battle-tested open standards, Streamkap consistently hits sub-second, end-to-end latency. For anything where milliseconds matter—think real-time fraud detection or live operational dashboards—this Kafka-native approach almost always gives you a measurable speed advantage.

The key takeaway is that a managed Kafka architecture gives data a more direct, efficient path from source to destination. This generally translates to lower and more predictable latency, especially under the heavy workloads you see at enterprise scale.

What Is the True Cost Difference Between Models?

Comparing pricing isn’t just about looking at the sticker price; you have to calculate the total cost of ownership (TCO). A usage-based model like Estuary’s can look great for small projects, but it can quickly become unpredictable and expensive as your data volumes grow. One unexpected usage spike can blow your budget for the quarter.

A fixed-price model, which you’ll find with an Estuary alternative like Streamkap, brings predictability back into the picture. But to get the true TCO, you also need to factor in:

- Infrastructure Costs: Are you paying separately for the underlying compute and storage, or is that bundled in?

- Maintenance Overhead: How many engineering hours will your team spend managing, troubleshooting, and scaling the platform?

- Operational Burden: Does the platform require deep, specialized knowledge to run well, effectively increasing your labor costs?

A fully managed platform with transparent, fixed pricing usually results in a lower TCO because it absorbs the infrastructure management and frees up your engineers from babysitting pipelines.
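To see how a usage spike affects the two pricing models, it helps to compute the break-even volume. The prices below are placeholders chosen for round numbers and do not reflect any vendor's actual price list.

```python
def usage_based_bill(gb_moved, price_per_gb):
    """Monthly bill under a purely usage-based model."""
    return gb_moved * price_per_gb


def break_even_gb(fixed_monthly_fee, price_per_gb):
    """Monthly volume above which the fixed plan becomes cheaper."""
    return fixed_monthly_fee / price_per_gb


price_per_gb = 1.5         # hypothetical usage rate
fixed_monthly_fee = 1200   # hypothetical flat plan
monthly_gb = [400, 600, 2500]  # third month contains an unexpected spike

usage_bills = [usage_based_bill(gb, price_per_gb) for gb in monthly_gb]
threshold_gb = break_even_gb(fixed_monthly_fee, price_per_gb)
print(usage_bills, threshold_gb)
```

With these inputs the usage-based plan is cheaper in the two quiet months and roughly three times the flat fee in the spike month, so the comparison depends heavily on how volatile your volumes are.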

Can I Migrate from Estuary with Zero Downtime?

Yes, a zero-downtime migration is absolutely possible, but it requires a solid strategy and the right platform features. The entire process relies on running the old and new systems in parallel for a time to ensure data integrity before you flip the switch.

You’d start by setting up your new pipeline in Streamkap to pull from the exact same source database Estuary is using. For a while, both platforms will stream data simultaneously to their destinations. This parallel run gives you the chance to do rigorous validation—comparing record counts and checking data consistency—all without touching your production workflows. Once you’ve confirmed 100% data parity, you can confidently point your downstream applications to the new source with zero service interruption.

How Do Open-Source Alternatives Compare on Reliability?

Open-source tools like Airbyte offer fantastic flexibility and have vibrant communities. But for enterprise workloads where reliability is non-negotiable, you have to consider the trade-offs. Running open-source software in production means your team owns everything: deployment, scaling, monitoring, and disaster recovery.

While that level of control is appealing, it also creates a significant operational burden. This is where managed, enterprise-grade platforms stand out by providing:

- Service Level Agreements (SLAs): A contractual promise for uptime and performance.

- Expert Support: Direct access to dedicated engineers who can solve critical problems fast.

- Hardened Security: Enterprise-grade security features and compliance certifications that come out of the box.

For your most critical data pipelines, the guaranteed reliability and expert support from a managed Estuary alternative often provide more business value than the raw flexibility of a self-hosted open-source solution.

Ready to see how a truly real-time, managed Kafka platform can transform your data pipelines? Streamkap offers predictable pricing, sub-second latency, and a seamless developer experience. Start your free trial today and build a production-ready pipeline in minutes.