Building Data Pipelines for Modern Analytics

A practical guide to building data pipelines that deliver real-time insights. Learn to use CDC with Snowflake, Databricks, and BigQuery for faster analytics.

Building a data pipeline is all about setting up an automated path for your data to travel. You're essentially creating a system that pulls information from all its different starting points and brings it to one central place, ready for storage and analysis. This is the bedrock of modern analytics, machine learning, and BI—without a reliable, steady stream of data, none of it works. The basic building blocks are always the same: sources, ingestion, processing, and a destination like Snowflake, Databricks, or Google BigQuery.

Why Modern Data Pipelines Are a Business Imperative

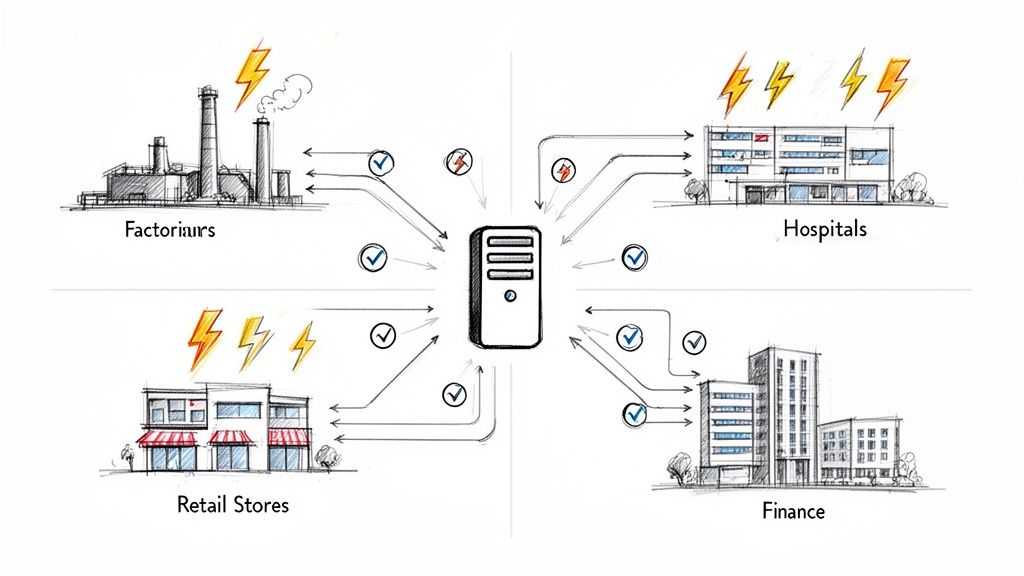

Let's be clear: a data pipeline isn't just some technical plumbing. It’s the central nervous system of any company that wants to be data-driven. It's the infrastructure that powers everything from the real-time dashboards your CEO is glued to, all the way to the complex ML models predicting what your customers will buy next. If you don't have solid pipelines, your most valuable data is just sitting there, locked away in operational databases and siloed apps, doing absolutely nothing for you.

You don't have to take my word for it—just look at the market. The global data pipeline tools market was recently valued at around $12.09 billion, and forecasts show it exploding to somewhere between $43.61 billion and $48.33 billion by 2030-2032. That kind of growth is a direct response to the insane amount of data we're creating, which is projected to hit 200 zettabytes a year by 2025. You can get a better sense of this growth by exploring insights on the rapid expansion of the data pipeline market and what’s behind it.

The Big Shift: From Batch to Real-Time

For years, the standard approach was batch processing. You'd collect data all day and then run a massive job overnight to process it. That worked fine for historical reporting, but in today’s world, it’s just too slow. Businesses need to react instantly. Think about detecting credit card fraud in milliseconds or updating inventory the exact moment a product sells. That kind of immediacy has forced a huge shift toward real-time data architectures.

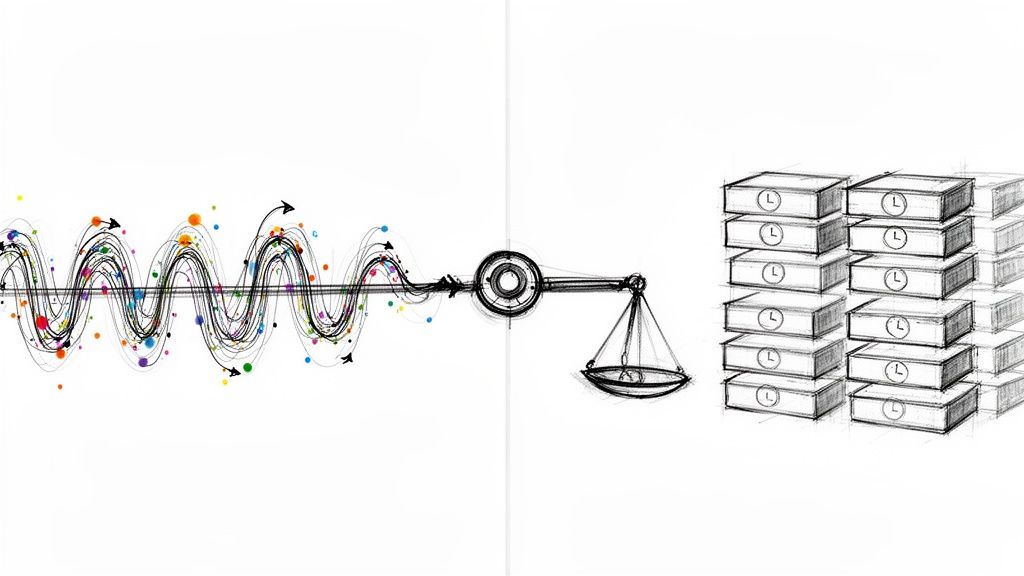

The technology that really makes this possible is Change Data Capture (CDC). Instead of querying entire databases over and over, which is slow and resource-intensive, CDC just watches for the changes—the inserts, updates, and deletes—as they happen. This creates a continuous, low-latency stream of events that you can process on the fly.

A pipeline that delivers data hours or even days late is a pipeline that delivers missed opportunities. The move to real-time processing isn't just about speed; it's about making data actionable at the precise moment it matters most.

Before we dive into the "how," it's helpful to see just how different these two worlds are.

Traditional Batch vs Modern Real-Time Pipelines

| Feature | Traditional Batch Pipelines | Modern Real-Time Pipelines |
| --- | --- | --- |
| Data Latency | High (hours or days) | Low (milliseconds to seconds) |
| Processing | Scheduled, large chunks of data | Continuous stream of individual events |
| Architecture | ETL (Extract, Transform, Load) | ELT (Extract, Load, Transform) / Streaming |
| Primary Use Case | Historical reporting, BI dashboards | Fraud detection, live inventory, IoT |
| Core Technology | Cron jobs, custom scripts | Change Data Capture (CDC), Kafka |
| Business Impact | Reactive decisions based on old data | Proactive decisions on live data |

This table really just scratches the surface, but it highlights the fundamental change in thinking. We're moving from a "look back" model to a "what's happening now" model.

Setting the Stage for Analytics That Can Keep Up

This guide is all about building pipelines that can handle the modern demand for speed and scale. We’re going to walk through how to use CDC to get your data flowing into the major cloud platforms that are the cornerstones of high-performance analytics today. Specifically, we'll be focusing on these three:

- Snowflake: A cloud data platform famous for its architecture that separates storage and compute, which is a game-changer for scalability.

- Databricks: A unified analytics platform built on Apache Spark, perfect for creating "lakehouses" that give you the power of a data warehouse with the flexibility of a data lake.

- Google BigQuery: A serverless, massively scalable data warehouse that lets you run incredibly fast SQL queries using Google's own infrastructure.

By hooking up real-time data streams to these powerful platforms, you can finally unlock the insights you need to stay competitive and react to the market as it changes, not hours after the fact.

Designing a Future-Proof Data Pipeline Architecture

Any successful data pipeline is built on a solid blueprint long before a single line of code gets written. It all starts with a strategic plan that ties your technical architecture directly to what the business actually needs. If you just jump straight into implementation, you’re setting yourself up for a brittle system that's a nightmare to maintain and will be obsolete before you know it.

I like to think of it like building a house. You wouldn't just start pouring concrete without a clear idea of the layout, where the plumbing needs to run, or if the foundation can even support a second story. The same exact principle applies here. The initial design phase is your chance to make sure the final structure can handle the load, both today and down the road.

Aligning Architecture with Business Objectives

The very first thing you need to do is map out your data sources. More importantly, you need to understand the why. What specific business outcomes are you trying to drive with this pipeline? The answers you get will dictate every architectural choice you make.

For instance, a pipeline built for a real-time fraud detection system has completely different requirements than one for weekly sales reporting. Fraud detection demands millisecond latency, while a sales report can easily tolerate batch processing.

To get to the heart of it, start asking these questions:

- What decisions will this data actually drive? This tells you everything you need to know about data freshness and accuracy requirements.

- Who are the end-users? Are we talking about analysts running ad-hoc queries, or a machine learning model that needs a constant, reliable feed?

- What are the real performance expectations? Get specific and define clear Service Level Objectives (SLOs) for data latency and availability.

Answering these questions upfront ensures you’re not just shuffling data around—you’re delivering measurable value. It’s the best way to avoid over-engineering a simple solution or, even worse, under-engineering a pipeline for a mission-critical use case.

Choosing the Right Architectural Pattern

Once the objectives are crystal clear, it's time to pick an architectural pattern. This decision almost always comes down to the three Vs of data: Volume (how much data are we talking about?), Velocity (how fast is it coming in?), and Variety (how many different formats and sources?). In modern data engineering, the two patterns you'll see most often are ETL and ELT.

ETL (Extract, Transform, Load) is the old-school, traditional approach. You pull data from a source, transform it in a separate processing engine, and then load it into your data warehouse. This pattern is still incredibly useful when you need to run complex calculations or cleanse sensitive data for compliance reasons before it ever touches your warehouse.

ELT (Extract, Load, Transform) is the new standard, especially for cloud-native data platforms. With ELT, you extract the raw data and load it directly into a powerhouse destination like Snowflake, Databricks, or BigQuery. All the transformation work happens later, using the immense computational power of the warehouse itself.

The big win with the ELT approach is its incredible flexibility. By landing the raw, untouched data first, you create a "single source of truth." This means you can re-transform that same data for totally new use cases in the future without ever having to go back and re-ingest it from the source systems.
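To make the "land raw first, transform later" idea concrete, here is a minimal sketch. It assumes an in-memory SQLite database as a stand-in for the warehouse, and the table and column names (`raw_orders`, `paid_orders`) are made up for illustration.

```python
import sqlite3

# In-memory SQLite stands in for the cloud warehouse in this sketch.
conn = sqlite3.connect(":memory:")

# Extract + Load: land the source rows untouched (everything as text).
conn.execute("CREATE TABLE raw_orders (order_id TEXT, amount_cents TEXT, status TEXT)")
raw_rows = [("1", "1250", "paid"), ("2", "400", "refunded"), ("3", "9900", "paid")]
conn.executemany("INSERT INTO raw_orders VALUES (?, ?, ?)", raw_rows)

# Transform #1: a cleaned, typed table built inside the "warehouse".
conn.execute(
    """
    CREATE TABLE paid_orders AS
    SELECT CAST(order_id AS INTEGER) AS order_id,
           CAST(amount_cents AS INTEGER) / 100.0 AS amount
    FROM raw_orders
    WHERE status = 'paid'
    """
)
paid_total = conn.execute("SELECT SUM(amount) FROM paid_orders").fetchone()[0]

# Transform #2: a new question months later, answered from the same raw
# copy without going back to the source system.
refund_count = conn.execute(
    "SELECT COUNT(*) FROM raw_orders WHERE status = 'refunded'"
).fetchone()[0]
```

The second query is the point: because the raw rows are still there, a new use case is just another transformation.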

This modern pattern keeps the ingestion layer simple and clean while taking full advantage of the scalability of cloud platforms. It's my go-to choice for most new projects. For a more detailed breakdown, you can explore various data pipeline architectures that compare these models side-by-side.

Core Principles for Longevity

To make sure your pipeline doesn't crumble under pressure or become a maintenance black hole, you need to build these three core principles into your design from day one.

- Scalability: Your architecture has to be ready for growth. A good rule of thumb is to design for 10x your current data volume. This forces you to choose technologies that scale horizontally, like a distributed messaging system paired with a cloud data warehouse that separates compute from storage.

- Fault Tolerance: Things will break. It’s a matter of when, not if. Your design must be resilient enough to handle it. This means implementing automatic retries, setting up dead-letter queues for failed messages, and having robust monitoring in place to catch issues before your downstream users even notice.

- Maintainability: A complex, undocumented pipeline is a ticking time bomb of technical debt. Always prioritize simplicity, build with modular components, and keep your documentation clean and up-to-date. A pipeline that only one person on the team understands is a massive organizational risk.
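The fault-tolerance bullet above mentions automatic retries and dead-letter queues. As a rough sketch of how those two fit together (the function and handler names here are hypothetical, and a real pipeline would add backoff between attempts and write dead letters to durable storage):

```python
from typing import Callable


def process_with_retries(messages, handler: Callable[[dict], None], max_attempts: int = 3):
    """Run handler on each message; park messages that keep failing."""
    dead_letters = []
    for message in messages:
        for attempt in range(1, max_attempts + 1):
            try:
                handler(message)
                break  # success, move on to the next message
            except Exception as exc:
                if attempt == max_attempts:
                    # Out of retries: record the message and the reason
                    # instead of blocking everything behind it.
                    dead_letters.append({"message": message, "error": str(exc)})
    return dead_letters


# Illustrative handler: one message fails once (transient), one always fails.
attempts_seen = {}
processed = []


def flaky_handler(message):
    key = message.get("id")
    if key is None:
        raise ValueError("missing id")
    attempts_seen[key] = attempts_seen.get(key, 0) + 1
    if key == 2 and attempts_seen[key] == 1:
        raise TimeoutError("transient failure")
    processed.append(key)


dead = process_with_retries([{"id": 1}, {"id": 2}, {"name": "no id"}], flaky_handler)
```

The transient failure recovers on its second attempt, while the malformed message ends up in `dead` for someone to inspect later.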

For a great deep dive into the practical side of building pipelines and these architectural trade-offs, I highly recommend checking out The Data Engineering Show, which regularly features experts talking through these exact challenges. By focusing on these strategic design elements, you're not just building a pipeline—you're engineering a reliable, future-proof data asset for your entire organization.

Implementing Real-Time Ingestion with Change Data Capture

Alright, let's move from the drawing board to the real world. This is where the theory behind your data pipeline gets put into practice. The goal is simple but crucial: create a rock-solid, lightning-fast stream of data from your core systems. And in today's world, Change Data Capture (CDC) is the go-to standard for making that happen.

Gone are the days of hammering your production database with SELECT * queries every five minutes. That old-school method, known as polling, is a performance nightmare. It puts a constant, needless strain on your source systems and, worse yet, it's notorious for missing updates that slip through between polling intervals. CDC is a much smarter, more elegant solution.

Log-based CDC works by tapping directly into the database's own transaction log—the internal record of every single insert, update, and delete. By reading this log, CDC tools capture every change as it happens, without ever touching the actual database tables. This is a game-changer because it’s incredibly low-impact and catches every event with pinpoint accuracy. If you want to dive deeper into the nuts and bolts, this guide on what Change Data Capture is is a great resource.
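Here is a toy illustration of why polling misses things that a log does not. It is a plain-Python simulation with made-up data, not a real database: one row changes three times between two polls.

```python
# Three changes hit the same row between two polls.
change_log = [
    {"op": "u", "id": 7, "status": "pending"},
    {"op": "u", "id": 7, "status": "shipped"},
    {"op": "u", "id": 7, "status": "delivered"},
]

table = {7: {"status": "created"}}

poll_before = dict(table[7])          # first poll sees the row as it is now
for change in change_log:             # the database applies every change...
    table[change["id"]]["status"] = change["status"]
poll_after = dict(table[7])           # ...but the next poll only sees the end state

seen_by_polling = [poll_before["status"], poll_after["status"]]
seen_by_log = [change["status"] for change in change_log]
```

Polling only ever observes `created` and `delivered`; the intermediate `pending` and `shipped` states exist only in the log.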

Ultimately, building a data pipeline isn't just about moving data; it's about connecting those raw data points to real business objectives.

This process really drives home the point that the technology is just a means to an end. A successful pipeline is one that turns raw data into something the business can actually use.

Setting Up a CDC Stream

Let’s get practical with a common scenario I see all the time: streaming changes from a PostgreSQL or MySQL database. A very popular, powerful open-source combination for this is Debezium, which captures row-level changes, and Kafka Connect, which acts as the bridge to get that data into a streaming platform like Apache Kafka.

First things first, you need to prep your source database to allow for logical replication. For PostgreSQL, this means jumping into your postgresql.conf file and setting wal_level = logical. If you're on MySQL, you'll need to enable the binary log (binlog) by adding log_bin = mysql-bin and binlog_format = ROW to your configuration. These settings tell the database to start logging detailed change events in a way CDC tools can understand.

With the database ready, you can deploy a Debezium connector using Kafka Connect. You configure this connector to watch your specific database, and from there, it takes over. It connects, starts reading the transaction log, and generates a structured event for every single change. This event is packed with useful info: the "after" state of the data, the "before" state, the operation type (c for create, u for update, d for delete), and a bunch of other helpful metadata.
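For a sense of what "configuring the connector" looks like, here is a sketch that builds the JSON body you would typically send to the Kafka Connect REST API (`POST /connectors`). The property names follow the Debezium PostgreSQL connector as I understand it for recent versions (older releases used `database.server.name` instead of `topic.prefix`), so check them against the documentation for your version. The host, credentials, and table names are placeholders.

```python
import json


def build_postgres_connector(name, host, dbname, user, password, tables, topic_prefix):
    """Build a Kafka Connect registration payload for a Debezium Postgres source."""
    return {
        "name": name,
        "config": {
            "connector.class": "io.debezium.connector.postgresql.PostgresConnector",
            "plugin.name": "pgoutput",          # logical decoding plugin
            "database.hostname": host,
            "database.port": "5432",
            "database.user": user,
            "database.password": password,
            "database.dbname": dbname,
            "topic.prefix": topic_prefix,       # prefix for the Kafka topic names
            "table.include.list": ",".join(tables),
        },
    }


# Placeholder values for illustration only.
payload = build_postgres_connector(
    name="orders-connector",
    host="db.internal.example",
    dbname="shop",
    user="cdc_user",
    password="change-me",
    tables=["public.orders", "public.customers"],
    topic_prefix="shop",
)
body = json.dumps(payload, indent=2)  # what you would send to Kafka Connect
```

Keeping this payload in version control, rather than typing it into a terminal, makes connector changes reviewable like any other code.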

At this point, you've done it. You have a real-time, event-driven stream of every single modification happening in your source database. This is more than just a data feed—it’s a perfect audit trail ready for analytics, replication, or kicking off other automated workflows.
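To show how a consumer might use that stream, here is a minimal sketch that replays change events into an in-memory replica. The events are simplified to just the `op`, `before`, and `after` fields described above. The `r` operation, which Debezium uses for rows read during a snapshot, is handled the same way as a create.

```python
def apply_change(table: dict, event: dict, key: str = "id") -> None:
    """Apply one simplified change event to an in-memory replica."""
    op = event["op"]
    if op in ("c", "r", "u"):
        # Create, snapshot read, or update: the "after" image is the truth.
        row = event["after"]
        table[row[key]] = row
    elif op == "d":
        # Delete: only the "before" image tells us which row went away.
        table.pop(event["before"][key], None)
    else:
        raise ValueError(f"unknown op {op!r}")


events = [
    {"op": "c", "before": None, "after": {"id": 1, "status": "new"}},
    {"op": "u", "before": {"id": 1, "status": "new"}, "after": {"id": 1, "status": "paid"}},
    {"op": "c", "before": None, "after": {"id": 2, "status": "new"}},
    {"op": "d", "before": {"id": 2, "status": "new"}, "after": None},
]

replica = {}
for event in events:
    apply_change(replica, event)
```

After replaying all four events, the replica holds only row 1 in its latest state, which is exactly what the source table would contain.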

Tackling Initial Snapshots and Backfills

One of the first questions that always comes up is, "Great, we have new changes, but what about all the data that's already in the tables?" The transaction log only captures what's new. This is where the initial snapshot, or "backfill," process comes in.

When you first fire up a Debezium connector, you can tell it to perform an initial snapshot. It takes a consistent view of the tables (depending on the connector and snapshot settings, this can involve briefly holding locks), reads all the existing rows, and streams them to your Kafka topics. Once that's done, it automatically switches over to reading the transaction log for live changes. This two-step dance ensures you get a complete, consistent copy of your data without missing a beat.

A word of caution: if you're working with massive tables, you'll want to plan this snapshot carefully to avoid bogging down your production systems.

Navigating Schema Evolution

Another real-world headache is schema evolution. Sooner or later, a developer is going to add a new column or change a data type. A poorly designed pipeline will just fall over.

Thankfully, modern CDC tools are built for this. When a table's structure changes, Debezium's change events start carrying the new structure, and some connectors (the MySQL connector, for example) can also publish a dedicated schema change event describing the DDL. Your downstream systems, like a data warehouse loader, can be set up to watch for these changes and apply the matching DDL to the target tables. This ability to handle schema changes on the fly is what separates a brittle pipeline from a truly resilient, low-maintenance one.
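As a sketch of the "apply the DDL downstream" step, the function below compares the fields seen in an incoming event with the columns the target table already has, then plans `ALTER TABLE` statements for anything new. The function name, table, and type mapping are all hypothetical. It only handles added columns, because type changes and dropped columns usually deserve a human review.

```python
def plan_new_columns(target_columns: dict, event_fields: dict, table: str) -> list:
    """Return ALTER TABLE statements for fields the target does not have yet.

    Both dicts map column name -> SQL type. Identifiers are not quoted or
    validated here, so only feed this trusted schema metadata.
    """
    statements = []
    for name, sql_type in event_fields.items():
        if name not in target_columns:
            statements.append(f"ALTER TABLE {table} ADD COLUMN {name} {sql_type}")
    return statements


target_columns = {"id": "INTEGER", "email": "VARCHAR"}
event_fields = {"id": "INTEGER", "email": "VARCHAR", "loyalty_tier": "VARCHAR"}

ddl = plan_new_columns(target_columns, event_fields, "customers")
```

Planning the statements separately from running them keeps the risky part (executing DDL) easy to log, review, or gate behind an approval.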

A solid ingestion layer built this way nails three critical challenges:

- Transactional Consistency: It makes sure that all changes from a single database transaction are processed as one unit, keeping your data perfectly intact.

- Low Latency: It captures and delivers data in seconds, not minutes or hours, which is essential for any real-time application.

- Minimal Source Impact: By reading from logs, you avoid putting any significant performance load on your critical operational databases.

By starting with log-based CDC, you’re building a foundation that’s not just fast and efficient, but also robust enough to handle the constant evolution of your source systems.

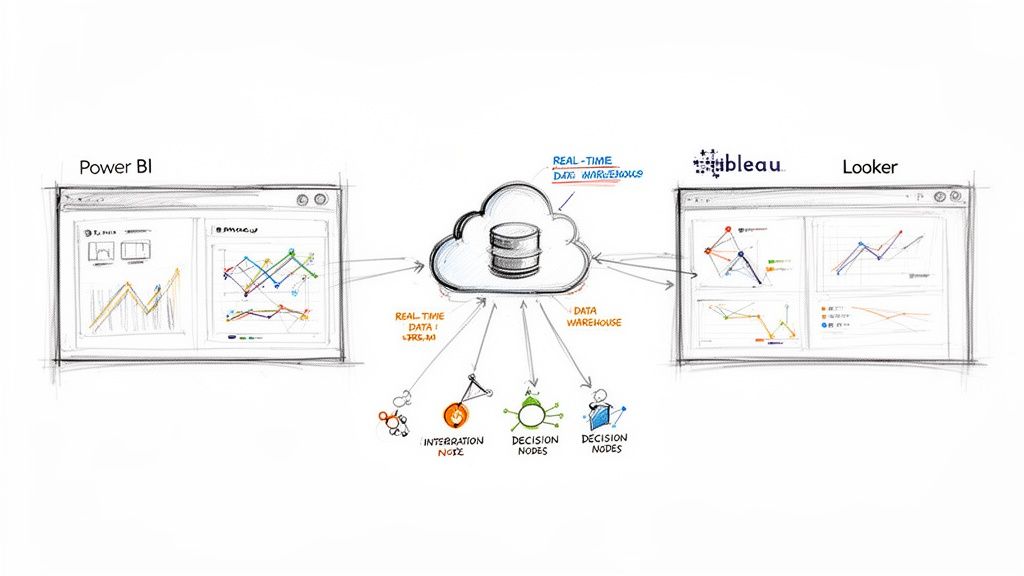

Landing Your Data in a Modern Cloud Platform

So, you've got a Change Data Capture (CDC) system up and running. It’s dutifully capturing every transaction and turning your database's activity into a tidy, real-time stream of events. That's a huge win, but it's only half the battle. Now, where does that data go?

The next critical step is getting that stream into a powerful cloud data platform where it can actually be used for analysis, dashboards, or machine learning. This is where the big three—Snowflake, Databricks, and Google BigQuery—come into play.

Each of these platforms is an absolute beast, but they all have their own quirks and preferred ways of handling streaming data. You can't just point your data firehose at them and hope for the best. To build a pipeline that's both efficient and cost-effective, you need to play by their rules and match your integration strategy to their unique architecture.

Connecting Your Pipeline to Snowflake

Snowflake's genius lies in its architecture, which separates storage from compute. This design is practically tailor-made for the unpredictable ebb and flow of real-time data. The star of the show here is Snowpipe, Snowflake’s continuous data ingestion service.

Instead of keeping a pricey virtual warehouse running around the clock just to catch new data, Snowpipe uses a serverless model. It automatically spins up resources to load micro-batches of data the moment they land in a cloud storage bucket, like Amazon S3.

Here's how that usually plays out:

- Stage the files: Your CDC pipeline, often using a tool like Kafka, drops event data into a cloud storage location (S3, Azure Blob Storage, or GCS). These are typically small files in formats like Avro, Parquet, or JSON.

- Set up Snowpipe: In Snowflake, you create a PIPE object that watches that specific storage location. Think of it as a subscription.

- Let it run: When a new file appears, the cloud provider pings Snowpipe. Snowpipe wakes up, grabs the data, and appends it to the table your pipe loads into.

This pattern is incredibly efficient. You’re not paying for a warehouse to sit idle waiting for data. Instead, you only pay for the exact compute resources used to load each micro-batch, making it a highly cost-effective method for near-real-time ingestion.

To make this process seamless, your final step inside Snowflake should almost always be a MERGE statement that applies the newly loaded change records to the table your analysts query. This single command handles all the inserts, updates, and deletes from your CDC stream in one atomic statement. It’s the key to keeping your Snowflake table a faithful, near-real-time mirror of your source database.
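Here is a rough sketch of what such a MERGE could look like, generated from Python so the column list stays in one place. It assumes a staging table of change records with an `op` column (`d` for deletes) and at most one row per key. The table and column names are invented, and the generated SQL should be checked against Snowflake's MERGE documentation before you rely on it.

```python
def build_merge_sql(target: str, staging: str, key: str, columns: list) -> str:
    """Build a MERGE that applies staged CDC rows (one per key) to a target table."""
    set_clause = ", ".join(f"t.{c} = s.{c}" for c in columns if c != key)
    col_list = ", ".join(columns)
    val_list = ", ".join(f"s.{c}" for c in columns)
    return (
        f"MERGE INTO {target} t\n"
        f"USING {staging} s\n"
        f"ON t.{key} = s.{key}\n"
        # Deletes first, so a deleted row is removed rather than updated.
        f"WHEN MATCHED AND s.op = 'd' THEN DELETE\n"
        f"WHEN MATCHED THEN UPDATE SET {set_clause}\n"
        # A delete for a row we never loaded is simply ignored.
        f"WHEN NOT MATCHED AND s.op <> 'd' THEN INSERT ({col_list}) VALUES ({val_list})"
    )


sql = build_merge_sql("orders", "orders_changes", "id", ["id", "status", "amount"])
print(sql)
```

The ordering of the `WHEN` clauses matters here: the delete branch has to come before the generic update branch, otherwise deleted rows would just be overwritten.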

Building a Lakehouse with Databricks

Databricks takes a different approach, championing the "lakehouse" architecture that blends the best of data lakes and data warehouses. For real-time pipelines, the core combo is Structured Streaming and Delta Lake. Delta Lake is the magic layer that brings ACID transactions and reliability to the files sitting in your data lake.

Integrating a CDC stream here feels less like loading data and more like a continuous processing job.

- A Databricks job using Structured Streaming connects directly to your source stream, like a Kafka topic.

- As micro-batches of CDC events flow in, your Spark code can apply transformations on the fly.

- The processed data is then "upserted" into a Delta table using the MERGE INTO command.

Delta Lake's transactional guarantees are crucial here. They prevent data corruption and ensure consistency, even if multiple streams are trying to write to the same table. This gives you a reliable, versioned, and queryable table built directly on cheap cloud storage, ready for both BI queries and large-scale ML model training.
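One practical detail with the upsert step: a micro-batch can contain several events for the same key, and a merge generally needs a single source row per target row. Below is a plain-Python sketch of that reduction, not Spark code. It assumes each event carries an `id` and a `ts_ms` timestamp, and in a real pipeline a log position is often a safer ordering field than a timestamp.

```python
def latest_per_key(events, key="id", order_by="ts_ms"):
    """Keep only the most recent event for each key in a micro-batch."""
    latest = {}
    for event in events:
        k = event[key]
        # ">=" means a later event in the batch wins a timestamp tie.
        if k not in latest or event[order_by] >= latest[k][order_by]:
            latest[k] = event
    return list(latest.values())


micro_batch = [
    {"id": 1, "ts_ms": 100, "op": "c", "status": "new"},
    {"id": 2, "ts_ms": 105, "op": "c", "status": "new"},
    {"id": 1, "ts_ms": 110, "op": "u", "status": "paid"},
]

to_merge = latest_per_key(micro_batch)
```

In Spark you would express the same idea with a window or aggregation over the batch before calling the merge, but the logic is identical.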

Leveraging BigQuery for High-Throughput Ingestion

When it comes to raw speed on massive datasets, Google BigQuery is in a class of its own. For streaming data, its most potent weapon is the Storage Write API. It's a massive improvement over older methods, offering exactly-once delivery guarantees and better cost-efficiency.

The workflow for getting your CDC stream into BigQuery with this API looks something like this:

- Your data pipeline application establishes a persistent connection to the API.

- It then streams records, formatted as protocol buffers, directly over this connection. The API intelligently buffers and commits these records in transactional blocks.

- Once a batch is sent, your application signals a commit, and BigQuery makes the new data available for querying, often within just a few seconds.

The Storage Write API is built for high-volume, high-stakes scenarios. If you're feeding critical operational dashboards or building applications where not a single event can be lost or duplicated, this is the tool for the job.
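The intuition behind exactly-once writes is easier to see with a toy model. The class below is not the BigQuery client, and its names are invented for illustration. It just imitates the idea of a stream that tracks offsets, so a retried batch at an already-written offset is recognized instead of being appended twice.

```python
class OffsetStream:
    """Toy model of an offset-tracked write stream (not a real client)."""

    def __init__(self):
        self.rows = []

    def append(self, batch, offset):
        expected = len(self.rows)
        if offset < expected:
            # This offset was already written: treat the call as a retry.
            return "already_exists"
        if offset > expected:
            raise ValueError(f"gap in stream: expected offset {expected}, got {offset}")
        self.rows.extend(batch)
        return "ok"


stream = OffsetStream()
first = stream.append(["evt-1", "evt-2"], offset=0)
# The network blipped and the writer is unsure, so it retries the same batch.
retry = stream.append(["evt-1", "evt-2"], offset=0)
second = stream.append(["evt-3"], offset=2)
```

The writer can retry as aggressively as it likes, because the offset makes each append idempotent.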

To help you decide, here's a quick look at how these platforms' primary ingestion methods stack up.

Cloud Data Platform Integration Methods

Each platform provides a robust way to handle real-time data, but they are optimized for slightly different outcomes. Understanding these nuances is key to selecting the right architecture for your needs.

| Platform | Primary Ingestion Method | Key Feature | Best For |
| --- | --- | --- | --- |
| Snowflake | Snowpipe | Serverless, event-driven micro-batching | Cost-effective, near-real-time ingestion without managing warehouses |
| Databricks | Structured Streaming + Delta Lake | Unified streaming and batch processing | Building a transactional data lakehouse with robust data versioning |
| BigQuery | Storage Write API | High-throughput, exactly-once semantics | Large-scale streaming use cases requiring low latency and strong consistency |

Ultimately, your choice will likely depend on your existing cloud ecosystem, performance requirements, and what you plan to do with the data once it lands. The good news is that each of these platforms offers a world-class solution for turning that raw CDC stream into a valuable, queryable asset.

How to Optimize Pipeline Performance and Reliability

It’s one thing to build a data pipeline that works. It’s another thing entirely to build one that’s fast, dependable, and resilient. A pipeline that drops data, stalls out, or delivers stale information isn't just an inconvenience; it's a liability that can lead to bad business decisions and a total loss of trust in your data.

Real operational excellence isn't a "set it and forget it" affair. It comes from treating your pipelines like living, breathing systems that need constant care and attention. The end goal is an observable, self-healing infrastructure that flags problems before your users do.

Get Serious About Logging and Monitoring

You can't fix what you can't see. The very first step toward a reliable pipeline is getting deep visibility into every stage of its operation. This means going way beyond a simple "success" or "fail" status on a job run.

To do this right, you need to track key performance indicators (KPIs) that tell you the real story of your pipeline's health. These metrics are your early warning system, giving you a chance to step in before a small hiccup becomes a full-blown outage.

Here are the vitals I always start with:

- Data Latency: How long does it actually take for a single record to get from the source database to its final destination? If this number suddenly jumps, you’ve likely got a bottleneck forming somewhere.

- Throughput: Are you processing a consistent volume of data? Watching your records per second or MBs per minute can tell you if a source system is lagging or if your processing cluster is under-provisioned.

- Error Rates: What percentage of records are failing during processing? This is a direct measure of data quality issues and can help you pinpoint exactly which transformation is causing trouble.

Once these metrics are flowing into a dashboard, you can build smarter alerts. Forget getting paged for every minor blip. Instead, configure your alerts to fire only on sustained anomalies or major deviations from your baseline. This frees your team up from constant firefighting. Our guide on data engineering best practices dives deeper into building these kinds of observable systems.
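As a sketch of how the three vitals and a "sustained anomaly" alert could be computed, here is a small example. The record fields (`committed_at`, `arrived_at`, `failed`) and the thresholds are assumptions for illustration, with timestamps in whole seconds to keep the arithmetic obvious.

```python
def pipeline_vitals(records, window_seconds):
    """Summarize latency, throughput, and error rate for one time window."""
    latencies = [r["arrived_at"] - r["committed_at"] for r in records]
    failed = sum(1 for r in records if r["failed"])
    return {
        "max_latency_s": max(latencies),
        "throughput_rps": len(records) / window_seconds,
        "error_rate": failed / len(records),
    }


def sustained_breach(values, threshold, min_consecutive):
    """True only if the threshold is exceeded several windows in a row."""
    streak = 0
    for value in values:
        streak = streak + 1 if value > threshold else 0
        if streak >= min_consecutive:
            return True
    return False


window = [
    {"committed_at": 100, "arrived_at": 102, "failed": False},
    {"committed_at": 100, "arrived_at": 101, "failed": False},
    {"committed_at": 101, "arrived_at": 106, "failed": True},
    {"committed_at": 101, "arrived_at": 103, "failed": False},
]
vitals = pipeline_vitals(window, window_seconds=2)

# Latency per window over time: a single spike should not page anyone.
one_blip = sustained_breach([1, 9, 1, 9, 1], threshold=5, min_consecutive=3)
real_problem = sustained_breach([1, 9, 9, 9, 1], threshold=5, min_consecutive=3)
```

Requiring several consecutive bad windows is what turns a noisy metric into an alert worth waking up for.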

Fine-Tune Your Pipeline with a Few Pro Tricks

With clear visibility in place, you can finally start making targeted improvements. Performance tuning is a continuous cycle of hunting down and eliminating bottlenecks.

A common place to find easy wins is with data partitioning and clustering in your cloud warehouse. In BigQuery, partitioning your massive tables by date and clustering them on the columns you filter by most can make queries dramatically faster; in Snowflake, a well-chosen clustering key on a very large table plays a similar role. The query engine knows it can safely skip huge chunks of data, which saves you both time and money.

Another make-or-break concept, especially with streaming data, is backpressure. This happens when a downstream system can't keep up with the data being thrown at it by an upstream one. If you don't manage it, backpressure will quickly lead to memory overflows and system crashes.

A well-architected pipeline anticipates these surges. By building in mechanisms like rate limiting, buffering, and autoscaling, you give your system the ability to handle unexpected spikes in volume without falling over.
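Buffering with a hard limit is the simplest of those mechanisms to demonstrate. In this sketch a bounded queue sits between a fast producer and a deliberately slow consumer. When the buffer is full, `put` blocks, which slows the producer down instead of letting memory grow without limit. The function name and delays are illustrative.

```python
import queue
import threading
import time


def run_with_backpressure(items, max_buffer=2, consumer_delay=0.01):
    """Push items through a bounded buffer to a slow consumer."""
    buffer = queue.Queue(maxsize=max_buffer)
    consumed = []
    sentinel = object()  # marks the end of the stream

    def consumer():
        while True:
            item = buffer.get()
            if item is sentinel:
                break
            time.sleep(consumer_delay)  # simulate a slow downstream system
            consumed.append(item)

    worker = threading.Thread(target=consumer)
    worker.start()
    for item in items:
        buffer.put(item)  # blocks while the buffer is full: that's backpressure
    buffer.put(sentinel)
    worker.join()
    return consumed


result = run_with_backpressure(list(range(10)))
```

Nothing is dropped and nothing piles up: the producer simply runs at the pace the consumer can sustain.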

Bring in DataOps for Automation and Resilience

The core ideas behind DevOps—automation, CI/CD, and treating infrastructure as code—apply perfectly to data pipelines. We call this practice DataOps, and it's the key to achieving both agility and rock-solid stability.

With a DataOps mindset, you automate everything: infrastructure setup, data quality checks, and even deployment rollbacks. By managing your pipeline configurations in Git, you get version control, automated testing, and a repeatable, reliable deployment process. This discipline is what stops manual "fat-finger" errors from taking down your entire system.

This push for automation is happening industry-wide. A staggering 88% of organizations are now looking into generative AI for data processing tasks. At the same time, the adoption of tools like Kubernetes has hit 84% in enterprises, enabling teams to automate scaling and create far more consistent environments. By embracing these code-driven practices, you're not just building efficient pipelines—you're building truly resilient ones.

Common Questions About Building Data Pipelines

Even the most well-thought-out plan runs into snags. When you're in the trenches building data pipelines, certain questions and roadblocks pop up again and again. Getting ahead of these common hurdles will save you a ton of headaches and prevent expensive missteps later on.

Here’s a look at some of the most frequent challenges I see teams grapple with, along with practical advice from the field.

What Is the Biggest Mistake to Avoid?

I've seen it happen countless times: the single biggest mistake is ignoring monitoring and data quality until it's too late. Teams get so hyper-focused on the mechanics of moving data from A to B that they forget to ask, "Is this data even any good?"

Before you know it, you're dealing with unreliable, latent, or inconsistent data. This reactive, fire-fighting approach completely erodes trust in the data across the whole organization. That’s why integrating robust monitoring, alerting, and simple data validation from day one is absolutely non-negotiable.

Start small, but start right away. You don't need a perfect system from the get-go. Implement basic checks like record counts and null value validation. This simple step creates a foundation of reliability that you can build on as your pipeline grows.
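Those two starter checks fit in a few lines. This is a minimal sketch with hypothetical field names. In practice `source_count` would come from a count query on the source and `rows` from the batch you just loaded.

```python
def validate_batch(source_count, rows, required_fields):
    """Return a list of human-readable issues; an empty list means the batch passed."""
    issues = []
    # Check 1: did we load as many rows as the source says it has?
    if len(rows) != source_count:
        issues.append(f"row count mismatch: source={source_count}, loaded={len(rows)}")
    # Check 2: are any required fields missing or null?
    for field in required_fields:
        nulls = sum(1 for row in rows if row.get(field) is None)
        if nulls:
            issues.append(f"{field}: {nulls} null value(s)")
    return issues


loaded = [
    {"id": 1, "email": "a@example.com"},
    {"id": 2, "email": None},
]
issues = validate_batch(source_count=3, rows=loaded, required_fields=["id", "email"])
```

Wire the returned list into whatever alerting you already have, and you've got a data quality gate you can extend one check at a time.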

How Do You Choose Between ETL and ELT?

The classic ETL vs. ELT debate really boils down to your destination and how you need to handle transformations. For modern cloud platforms like Snowflake, Databricks, and BigQuery, ELT is almost always the way to go.

The modern data stack is built for this. You load raw, untouched data directly into your warehouse and then leverage its massive, scalable compute power to run your transformations. This approach is incredibly flexible—you can easily repurpose that raw data for entirely new projects down the road without having to re-ingest anything.

But that doesn't mean traditional ETL (Extract, Transform, Load) is obsolete. It’s still the right call when you have to perform seriously complex, resource-heavy transformations or enforce strict data privacy, like redacting sensitive PII before it even touches your warehouse.

Should You Use Open-Source Tools or a Managed Service?

Ah, the timeless "build vs. buy" question. There's no single right answer here; it all depends on your team's size, skills, and what you’re trying to accomplish.

- Open-Source Tools: Going with something like Apache Kafka and Debezium gives you ultimate control and customization. They are incredibly powerful, but don't underestimate the engineering effort required to deploy, manage, and scale them. This path is usually best for large organizations with very specific needs and a dedicated data engineering team to support it.

- Managed Services: These platforms abstract away the underlying complexity, freeing up your team to focus on creating business value instead of babysitting infrastructure. If you're a smaller team or an organization that needs to move fast, a managed service is almost always the more efficient choice.

At the end of the day, building solid data pipelines is a core competency for any organization that takes data seriously. Understanding these common questions gives you a clearer path forward, helping you sidestep pitfalls and build more resilient systems right from the start.

Ready to build reliable, real-time data pipelines without the operational overhead? Streamkap automates the complexities of Change Data Capture, letting you focus on insights, not infrastructure. See how it works at https://streamkap.com.