Understanding Streaming Architecture for Data

September 9, 2025

Streaming architecture is changing the way companies process data, letting information move fast and stay relevant. Many teams still think in terms of batch jobs, even though IDC predicted that roughly 30% of global data would be real-time by 2025. But rapid data movement is not the headline. The real story is how streaming architecture shrinks the gap between raw facts and real-world decisions, driving smarter actions when every second counts.

Table of Contents

- Defining Streaming Architecture: Concepts And Principles

- The Importance Of Streaming Architecture In Data Processing

- How Streaming Architecture Works: Key Components Explained

- Real-World Applications Of Streaming Architecture

- Challenges And Considerations In Streaming Architecture

Quick Summary

| Takeaway | Explanation |
|---|---|
| Streaming architecture enables real-time data processing | Organizations can continuously analyze data as it flows in, facilitating immediate insights and responsive actions. |
| Proactive decision-making drives competitive advantage | By leveraging real-time data analysis, businesses can detect issues and seize opportunities faster than competitors. |
| Scalability is crucial for handling large data volumes | Streaming systems must be designed to dynamically scale, maintaining performance under increasing data loads without delays. |
| Integration challenges require careful management | Successfully deploying streaming architecture involves overcoming complexities related to diverse data sources and formats. |
| Security measures are essential in streaming environments | Organizations must implement robust security protocols to protect data and ensure compliance amidst rising cyber threats. |

Defining Streaming Architecture: Concepts and Principles

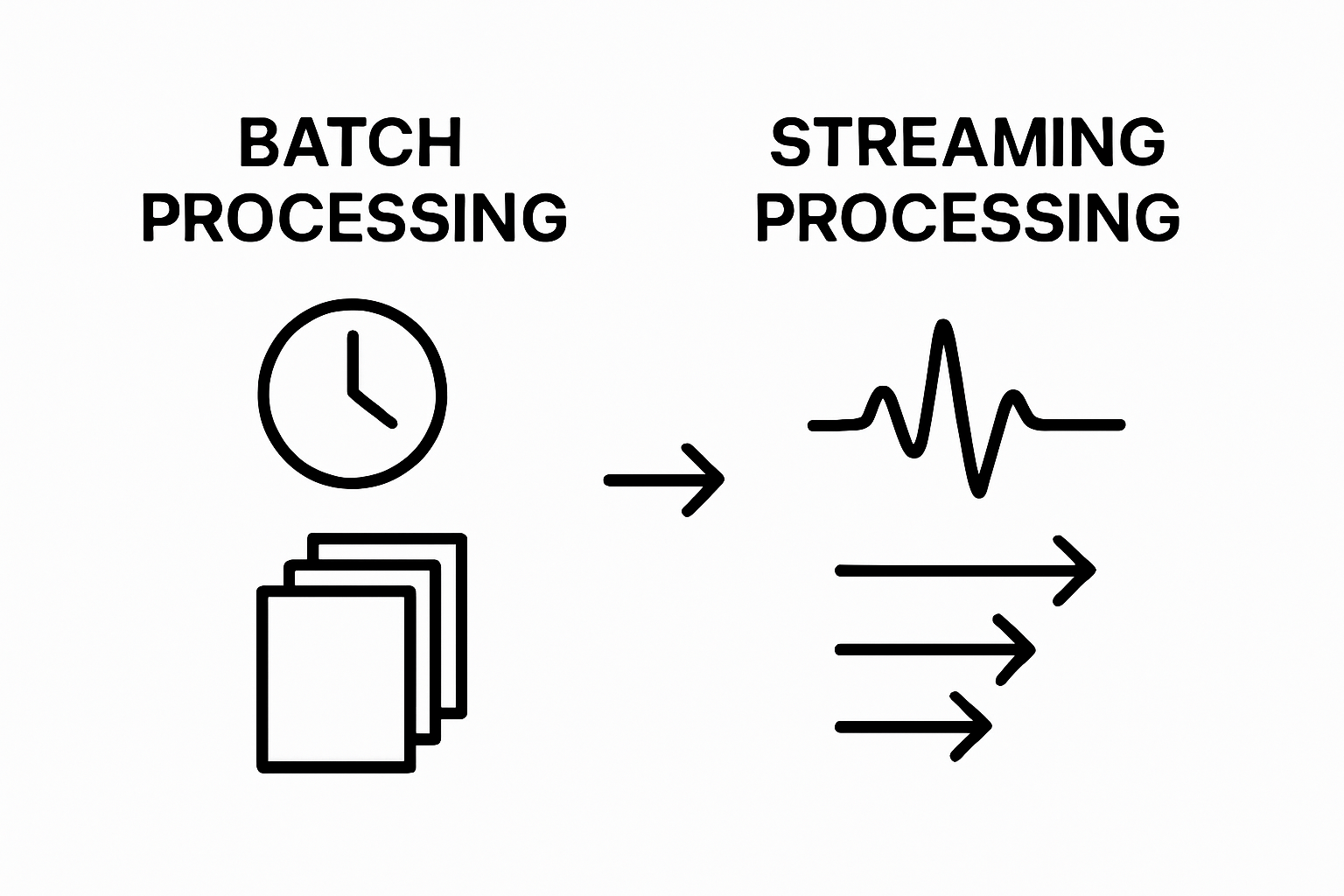

Streaming architecture represents a modern paradigm for processing and analyzing data in real time, transforming how organizations handle complex information flows. Unlike traditional batch processing methods, streaming architecture enables continuous data ingestion, processing, and analysis with minimal latency.

Core Conceptual Framework

At its core, streaming architecture is built on the principle of processing data as it is generated, creating a dynamic and responsive system. Stream processing research defines a data stream as an ordered sequence of events that is processed incrementally and continuously.

Key characteristics of streaming architecture include:

- Continuous Data Flow: Information moves through the system without interruption

- Real-Time Processing: Data is analyzed immediately upon arrival

- Event-Driven Design: System responds to data events as they occur
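To make "processed incrementally and continuously" concrete, here is a minimal Python sketch. It is an illustration under stated assumptions, not how any particular streaming engine works: the `RunningMean` class, the `process_stream` function, and the sample readings are all hypothetical names invented for this example. The point it shows is that each event updates a small piece of state on arrival, so a fresh result is available without re-reading earlier events.

```python
from dataclasses import dataclass
from typing import Iterable, Iterator


@dataclass
class RunningMean:
    """Incrementally maintained mean; state stays constant-size as the stream grows."""
    count: int = 0
    mean: float = 0.0

    def update(self, value: float) -> float:
        # Fold one new event into the aggregate without storing past events.
        self.count += 1
        self.mean += (value - self.mean) / self.count
        return self.mean


def process_stream(events: Iterable[float]) -> Iterator[float]:
    """Yield an up-to-date mean after every event, in arrival order."""
    state = RunningMean()
    for value in events:
        yield state.update(value)


# Hypothetical sensor readings standing in for a live event stream.
readings = [10.0, 20.0, 30.0]
results = list(process_stream(readings))
print(results)  # [10.0, 15.0, 20.0]
```

A batch job would wait for all three readings and compute one mean at the end. The incremental version produces an answer after every event, which is the behavior the characteristics above describe.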

Technical Foundations

The technical infrastructure of streaming architecture relies on several critical components. These include distributed computing frameworks, message queuing systems, and event streaming platforms that enable high-throughput, low-latency data movement. Our approach to real-time data integration emphasizes the importance of creating scalable pipelines that can handle complex data transformations.

Streaming architectures typically employ publish-subscribe models, where data producers generate events and multiple consumers can simultaneously process and react to these events. This approach allows for remarkable flexibility and parallel processing capabilities, making it ideal for complex data ecosystems.
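The publish-subscribe idea can be sketched in a few lines of Python. This is a toy, in-process stand-in for a real message broker, written only to show the fan-out pattern. The `InMemoryBroker` class, the `orders` topic, and the two handlers are hypothetical and do not correspond to any real product's API.

```python
from collections import defaultdict
from typing import Callable, DefaultDict, List

Handler = Callable[[dict], None]


class InMemoryBroker:
    """Toy broker: fans each published event out to every subscriber of a topic."""

    def __init__(self) -> None:
        self._subscribers: DefaultDict[str, List[Handler]] = defaultdict(list)

    def subscribe(self, topic: str, handler: Handler) -> None:
        self._subscribers[topic].append(handler)

    def publish(self, topic: str, event: dict) -> int:
        # Deliver the same event to each subscriber; return how many received it.
        handlers = self._subscribers.get(topic, [])
        for handler in handlers:
            handler(event)
        return len(handlers)


broker = InMemoryBroker()
audit_log: List[dict] = []          # consumer 1: keeps every event
totals = {"amount": 0.0}            # consumer 2: maintains a running total


def add_to_total(event: dict) -> None:
    totals["amount"] += event["amount"]


broker.subscribe("orders", audit_log.append)
broker.subscribe("orders", add_to_total)
delivered = broker.publish("orders", {"order_id": 1, "amount": 25.0})
```

The producer never needs to know which consumers exist. Adding a third consumer is one more `subscribe` call, which is where the flexibility of the model comes from. A production broker would also add durability, ordering guarantees, and delivery across processes, none of which this sketch attempts.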

Performance and Scalability Considerations

Understanding streaming architecture goes beyond technical implementation. It requires a holistic view of how data moves, transforms, and provides value. Organizations adopting this approach must consider factors like data velocity, volume, and the computational resources needed to maintain real-time processing capabilities.

The ultimate goal of streaming architecture is to enable proactive, intelligent decision-making by reducing the time between data generation and actionable insights.

The Importance of Streaming Architecture in Data Processing

Streaming architecture has become critical infrastructure for organizations that need to turn raw data into actionable insights quickly and efficiently. Comparing batch processing with real-time stream processing reveals a fundamental shift in how businesses approach data management and analysis.

Business Value and Strategic Advantages

Streaming architecture provides organizations with a competitive edge by enabling immediate, data-driven decision-making. The International Data Corporation (IDC) predicted that by 2025 approximately 30% of global data would be real-time, which calls for robust stream processing systems.

Key strategic advantages include:

- Instantaneous Insights: Analyze data the moment it is generated

- Proactive Problem Detection: Identify and respond to issues in real time

- Enhanced Customer Experience: Deliver personalized, context-aware interactions

Technical Performance and Scalability

The technical prowess of streaming architecture lies in its ability to handle massive, complex data flows with minimal latency. Unlike traditional batch processing, streaming systems process data continuously, breaking down information into small, manageable events that can be analyzed and acted upon immediately.

This approach is particularly powerful in scenarios requiring rapid response, such as:

- Financial transaction monitoring

- IoT sensor data analysis

- Network security threat detection

- Social media sentiment tracking

Economic and Operational Impact

Beyond technical capabilities, streaming architecture represents a significant economic transformation. By reducing the time between data generation and insight, organizations can make faster, more informed decisions. The ability to process data in real time translates to reduced operational costs, improved resource allocation, and more agile business strategies.

Ultimately, streaming architecture is not just a technological upgrade but a fundamental reimagining of how data can drive organizational intelligence and competitive advantage.

How Streaming Architecture Works: Key Components Explained

Streaming architecture operates through a sophisticated ecosystem of interconnected components designed to process data continuously and efficiently. Understanding cost-effective solutions for streaming data illuminates the strategic design behind these complex systems.

Core Infrastructure Components

The National Institute of Standards and Technology outlines streaming architecture as a dynamic system with several critical components working in concert. These key elements enable the seamless flow and processing of data in real time.

To clarify the essential building blocks of streaming architecture, the following table summarizes each core component and its primary function within the real-time data ecosystem.

| Component | Description |
|---|---|
| Data Producers | Systems or devices generating continuous data streams |
| Message Brokers | Middleware that manages transmission and buffering of events |
| Stream Processing Engines | Real-time computational frameworks for analyzing and transforming data |
| Data Storage Systems | Repositories for both processed and historical data |

Data Flow and Processing Mechanism

Streaming architecture transforms raw data through an event-driven workflow. When an event occurs, a producer emits it, a message broker buffers and transports it, a stream processing engine transforms or analyzes it, and the result is stored or routed to the appropriate destination.

This continuous processing enables:

- Instant data transformation

- Parallel event handling

- Dynamic routing of information

- Immediate anomaly detection
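The producer, broker, processor, and storage stages can be sketched end to end with Python's standard library. This is a simplified illustration under stated assumptions: a `queue.Queue` stands in for the message broker, a plain list stands in for storage, and the sensor records, the `SENTINEL` end marker, and the 80-degree alert threshold are all hypothetical.

```python
import queue
import threading

SENTINEL = None  # marks the end of the stream in this toy example


def producer(broker: queue.Queue, readings: list) -> None:
    """Emit each reading as an event, then signal that no more will follow."""
    for reading in readings:
        broker.put(reading)
    broker.put(SENTINEL)


def processor(broker: queue.Queue, sink: list, alerts: list, threshold: float) -> None:
    """Consume events one at a time: transform, store, and route alerts."""
    while True:
        event = broker.get()
        if event is SENTINEL:
            break
        # Transformation step: enrich the event with a derived field.
        enriched = {**event, "fahrenheit": event["celsius"] * 9 / 5 + 32}
        sink.append(enriched)                 # storage step
        if event["celsius"] > threshold:      # routing / anomaly step
            alerts.append(event["sensor"])


broker = queue.Queue(maxsize=100)  # bounded buffer between the two stages
sink, alerts = [], []
readings = [
    {"sensor": "a", "celsius": 20.0},
    {"sensor": "b", "celsius": 100.0},
]

worker = threading.Thread(target=processor, args=(broker, sink, alerts, 80.0))
worker.start()
producer(broker, readings)
worker.join()
```

The bounded queue is a deliberate choice. When the processor falls behind, `put` blocks and slows the producer down, which is a simple form of backpressure. Real deployments typically run these stages as separate services connected by a durable broker rather than as threads in one process.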

Scalability and Performance Optimization

The true power of streaming architecture lies in its ability to dynamically scale and optimize performance. Modern streaming systems leverage distributed computing principles, allowing horizontal scaling where additional computational resources can be added seamlessly to handle increased data volumes.

Key performance optimization strategies include:

- Intelligent data partitioning

- Parallel event processing

- Adaptive resource allocation

- Fault-tolerant design mechanisms
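Data partitioning is the strategy in the list above that most directly enables horizontal scaling, so it is worth a small sketch. The example below assumes key-based partitioning with a stable hash. The `partition_for` and `route` functions, the `key` field, and the use of SHA-256 are illustrative choices for this article; real brokers use their own hash functions and partition assignment logic.

```python
import hashlib
from collections import defaultdict


def partition_for(key: str, num_partitions: int) -> int:
    """Map a key to a partition with a hash that is stable across processes."""
    # Python's built-in hash() is salted per process for strings, so a
    # cryptographic digest is used here purely to get a repeatable value.
    digest = hashlib.sha256(key.encode("utf-8")).digest()
    return int.from_bytes(digest[:8], "big") % num_partitions


def route(events, num_partitions: int):
    """Group events by partition, preserving arrival order within each one."""
    partitions = defaultdict(list)
    for event in events:
        partitions[partition_for(event["key"], num_partitions)].append(event)
    return partitions


# Hypothetical events: three from one user, one from another.
events = [{"key": "user-1", "seq": i} for i in range(3)]
events.append({"key": "user-2", "seq": 0})
partitions = route(events, num_partitions=4)
```

Because every event with the same key lands in the same partition, each partition can be handled by a separate worker while per-key ordering is preserved. One caveat of this simple modulo scheme is that changing `num_partitions` remaps most keys, which is why repartitioning a live stream usually needs planning.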

By breaking down complex data flows into manageable, processable events, streaming architecture enables organizations to transform raw information into actionable insights with unprecedented speed and precision.

Real-World Applications of Streaming Architecture

Streaming architecture has transformed how organizations process and leverage data across multiple industries, enabling real-time decision-making and sophisticated analytical capabilities. Understanding Kafka’s functionality provides deeper insights into the technological foundations driving these innovative applications.

Financial Services and Fraud Detection

Researchers developing advanced analytics platforms highlight the critical role of streaming architecture in detecting anomalies and preventing financial risks. In banking and financial sectors, streaming systems continuously monitor transactions, identifying potential fraudulent activities within milliseconds.

Key financial streaming applications include:

- Real-time transaction monitoring

- Algorithmic trading analysis

- Credit risk assessment

- Compliance and regulatory reporting
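As an illustration of real-time transaction monitoring, the sketch below implements a simple velocity check: flag a card that makes too many transactions inside a sliding time window. It is a deliberately minimal example, not a production fraud model. The `VelocityCheck` class, the card ID, the limit of three transactions, and the 60-second window are all hypothetical, and the code assumes events arrive in timestamp order.

```python
from collections import defaultdict, deque


class VelocityCheck:
    """Flags a card when it exceeds max_events within window_seconds."""

    def __init__(self, max_events: int, window_seconds: float) -> None:
        self.max_events = max_events
        self.window_seconds = window_seconds
        # card_id -> timestamps of recent transactions still inside the window
        self._recent = defaultdict(deque)

    def observe(self, card_id: str, timestamp: float) -> bool:
        """Record one transaction and return True if the card looks suspicious."""
        window = self._recent[card_id]
        window.append(timestamp)
        # Evict timestamps that have fallen out of the sliding window.
        while window and timestamp - window[0] > self.window_seconds:
            window.popleft()
        return len(window) > self.max_events


check = VelocityCheck(max_events=3, window_seconds=60.0)
timestamps = [0.0, 10.0, 20.0, 30.0, 200.0]  # seconds, in arrival order
flags = [check.observe("card-123", t) for t in timestamps]
print(flags)  # [False, False, False, True, False]
```

The fourth transaction is flagged because four events fall within 60 seconds. By the fifth, the earlier ones have aged out of the window and the card is clear again. The decision is made as each transaction arrives, without a later batch scan.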

Industrial IoT and Predictive Maintenance

Manufacturing and industrial sectors leverage streaming architecture to transform operational efficiency. By processing sensor data in real time, organizations can predict equipment failures, optimize maintenance schedules, and minimize unexpected downtime.

Critical industrial streaming use cases involve:

- Machine performance tracking

- Predictive equipment maintenance

- Quality control monitoring

- Supply chain optimization
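Machine performance tracking often starts with something as simple as comparing each new sensor reading against a recent baseline. The sketch below uses a rolling z-score for that purpose. It is one possible approach chosen for illustration, not a recommended maintenance model: the `RollingAnomalyDetector` class, the window of six readings, the threshold of three standard deviations, and the vibration values are all assumptions.

```python
from collections import deque
from statistics import mean, pstdev


class RollingAnomalyDetector:
    """Flags a reading that deviates strongly from the recent window."""

    def __init__(self, window_size: int, z_threshold: float) -> None:
        self.history = deque(maxlen=window_size)
        self.z_threshold = z_threshold

    def observe(self, value: float) -> bool:
        is_anomaly = False
        # Only score once the window is full, so the baseline is meaningful.
        if len(self.history) == self.history.maxlen:
            baseline = mean(self.history)
            spread = pstdev(self.history)
            if spread > 0 and abs(value - baseline) / spread > self.z_threshold:
                is_anomaly = True
        # The reading joins the window either way; the oldest one drops out.
        self.history.append(value)
        return is_anomaly


detector = RollingAnomalyDetector(window_size=6, z_threshold=3.0)
vibration = [50.0, 51.0, 49.0, 50.0, 51.0, 49.0, 90.0]  # hypothetical readings
flags = [detector.observe(v) for v in vibration]
print(flags)  # [False, False, False, False, False, False, True]
```

The first six readings only build the baseline. The seventh sits far outside it and is flagged as soon as it arrives, which is the kind of early signal a maintenance team could act on before a failure.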

Healthcare and Emergency Response Systems

In healthcare, streaming architecture enables sophisticated patient monitoring, epidemiological tracking, and emergency response coordination. Real-time data processing allows medical professionals to receive immediate insights from complex medical systems and patient monitoring devices.

Healthcare streaming applications encompass:

- Patient vital sign monitoring

- Medical device integration

- Pandemic outbreak tracking

- Resource allocation during emergencies

By transforming raw data into actionable intelligence across diverse domains, streaming architecture represents a powerful technological paradigm that transcends traditional data processing limitations.

Challenges and Considerations in Streaming Architecture

Streaming architecture, while powerful, presents complex technical and operational challenges that organizations must carefully navigate. Real-time CDC streaming techniques offer insights into managing these intricate data processing environments.

Data Complexity and Integration Challenges

Computer science researchers examining streaming platforms highlight the multifaceted challenges of integrating diverse data sources. Organizations must address significant complexity when connecting heterogeneous systems, managing different data formats, and ensuring seamless communication between varied technological ecosystems.

Key integration challenges include:

- Handling diverse data schemas

- Managing inconsistent data quality

- Synchronizing data across multiple platforms

- Resolving semantic differences between sources
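A common way to handle diverse schemas is to map every source into one canonical record shape as early in the pipeline as possible. The sketch below assumes two hypothetical feeds, labeled `pos` and `web`, that describe the same purchase with different field names, types, and time formats. The field names and the canonical shape are invented for this example.

```python
from datetime import datetime, timezone
from decimal import Decimal


def normalize_pos(record: dict) -> dict:
    """Hypothetical point-of-sale feed: string IDs, integer cents, epoch seconds."""
    return {
        "customer_id": int(record["user_id"]),
        "amount_cents": int(record["amt_cents"]),
        "event_time": datetime.fromtimestamp(record["ts"], tz=timezone.utc),
    }


def normalize_web(record: dict) -> dict:
    """Hypothetical web feed: integer IDs, decimal-string amounts, ISO 8601 times."""
    return {
        "customer_id": int(record["customerId"]),
        # Decimal avoids the rounding surprises of parsing money as float.
        "amount_cents": int(Decimal(record["amount"]) * 100),
        "event_time": datetime.fromisoformat(record["timestamp"]).astimezone(timezone.utc),
    }


NORMALIZERS = {"pos": normalize_pos, "web": normalize_web}


def normalize(source: str, record: dict) -> dict:
    """Dispatch to the normalizer registered for the given source."""
    return NORMALIZERS[source](record)


from_pos = normalize("pos", {"user_id": "42", "amt_cents": 1250, "ts": 1700000000})
from_web = normalize(
    "web",
    {"customerId": 42, "amount": "12.50", "timestamp": "2023-11-14T22:13:20+00:00"},
)
```

After normalization both records are identical, so downstream consumers only need to understand one schema. An unknown source raises a `KeyError` here, which is a reasonable default for a sketch. A real pipeline would more likely route such records to a dead-letter destination for inspection.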

Performance and Scalability Considerations

Streaming architectures demand robust infrastructure capable of processing massive data volumes with minimal latency. As data complexity increases, organizations must design systems that can dynamically scale, maintain high throughput, and prevent performance bottlenecks.

Critical performance considerations involve:

- Horizontal scaling capabilities

- Efficient resource allocation

- Minimizing processing latency

- Maintaining consistent data integrity

Security and Compliance Frameworks

With increasing regulatory requirements and cybersecurity threats, streaming architectures must incorporate sophisticated security mechanisms. Organizations need comprehensive strategies to protect sensitive information, ensure data privacy, and maintain compliance across complex distributed systems.

Essential security dimensions include:

- Encryption of data in transit

- Authentication and access control

- Real-time threat detection

- Comprehensive audit logging

Successfully implementing streaming architecture requires a holistic approach that balances technological capabilities with strategic organizational needs, transforming potential challenges into opportunities for innovation and efficiency.

Unlock True Real-Time Data with Streamkap’s Streaming Architecture

Are you struggling to turn continuous data flows into instant insights, as described in this article? Many organizations experience delays, bottlenecks, and integration headaches when trying to build truly responsive data pipelines. Streamkap removes these barriers by providing real-time data integration and streaming ETL, delivering the performance and scalability that modern analytics require. With automated schema handling, no-code connectors to popular databases, robust CDC, and a real-time transformation engine, Streamkap addresses complexity, latency, and operational overhead all at once.

Ready to move beyond traditional batch ETL and experience the proactive, shift-left approach to streaming data pipelines? Choose a solution that empowers your data engineers and analytics teams to deliver enriched, analytics-ready data as soon as it is generated. Start building scalable, cost-effective, and low-maintenance streaming pipelines in minutes. Visit Streamkap and discover how you can transform your data architecture today.

Frequently Asked Questions

What is streaming architecture?

Streaming architecture is a modern approach to processing and analyzing data in real time, allowing for continuous data ingestion and minimal latency, as opposed to traditional batch processing methods.

How does streaming architecture differ from batch processing?

Unlike batch processing, which analyzes data in large chunks at scheduled intervals, streaming architecture processes data continuously as it is generated, providing real-time insights and enabling immediate decision-making.

To help distinguish the two fundamental data processing approaches discussed in this article, the table below compares streaming architecture with traditional batch processing across key characteristics.

| Characteristic | Streaming Architecture | Batch Processing |
|---|---|---|
| Data Processing Method | Continuous, event-by-event | Scheduled, processes data in large chunks |
| Latency | Minimal (real time) | Higher (delayed insights) |
| Decision-Making | Immediate, proactive | Retrospective, after data accumulates |
| Example Use Cases | Fraud detection, IoT, healthcare | Payroll, monthly reporting, data archiving |

What are the key components of a streaming architecture?

The key components of streaming architecture include data producers, message brokers, stream processing engines, and data storage systems, all of which work together to process data continuously and efficiently.

What are the benefits of using streaming architecture in organizations?

Using streaming architecture allows organizations to achieve instantaneous insights, proactively detect problems, enhance customer experiences, and make faster, data-driven decisions to gain a competitive edge.
