Understanding Why Streaming CDC Matters for Data Professionals

August 28, 2025

Streaming Change Data Capture is changing how companies handle their data, with over 90 percent of large enterprises now relying on real-time data streaming. Many people assume the biggest benefit is just speed. The real surprise is how streaming CDC keeps the load on your main databases low while giving you near-instant access to every change, which makes moving and using data across the organization far more efficient.

Table of Contents

- What Is Streaming Change Data Capture (CDC)?

- Why Streaming CDC Is Important for Modern Data Systems

- How Streaming CDC Enhances Real-Time Analytics

- Key Concepts of Streaming CDC and Its Architecture

Quick Summary

| Takeaway | Explanation |
|---|---|
| Streaming CDC enables real-time data synchronization | Organizations can instantly propagate data changes across systems as they occur, improving responsiveness. |
| Minimal impact on source databases | Streaming CDC captures data modifications without adding significant load, ensuring system performance remains unaffected. |
| Supports event-driven architectures | This technology enhances flexibility and efficiency in modern data systems by facilitating seamless integration between different services. |
| Accelerates data insights generation | Immediate reflection of changes enables analytics platforms to provide insights in real-time, critical for timely decision-making. |
| Empowers advanced analytics capabilities | By transforming static data into dynamic resources, organizations can utilize predictive and prescriptive analytics effectively. |

What is Streaming Change Data Capture (CDC)?

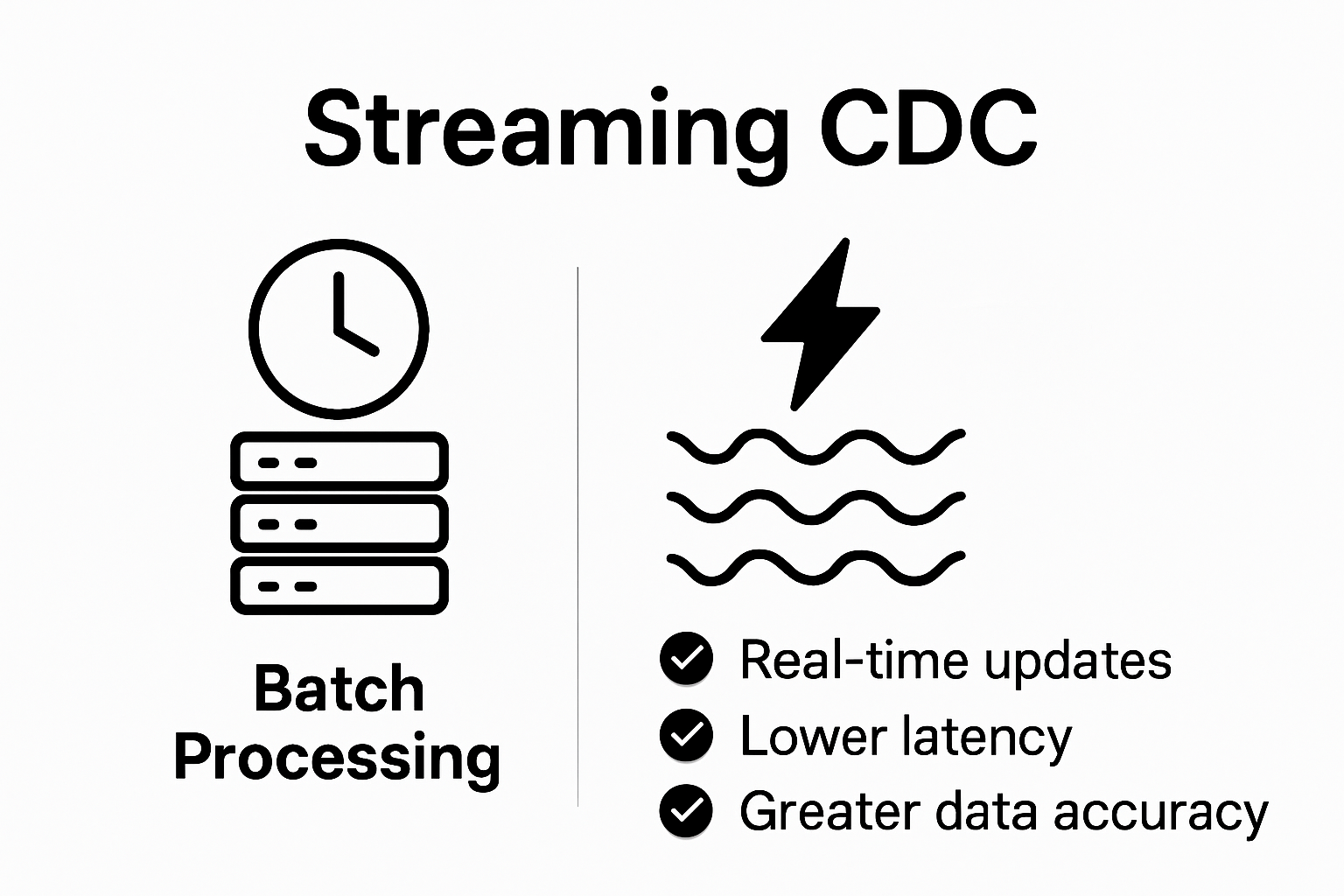

Streaming Change Data Capture (CDC) is a sophisticated data integration technique that tracks and captures incremental data modifications in real time across database systems. Unlike traditional batch processing methods, streaming CDC enables organizations to detect, record, and propagate data changes instantaneously as they occur, providing a dynamic and responsive approach to data management.

The following table compares traditional batch processing with streaming CDC across timing, source impact, latency, and typical use cases.

| Approach | Data Processing Timing | Impact on Source Database | Data Latency | Use Cases |
|---|---|---|---|---|
| Traditional Batch Processing | Scheduled intervals (e.g., nightly) | High (during sync/load) | High (delayed updates) | Historical reporting, backups |
| Streaming Change Data Capture (CDC) | Real-time or near real-time | Minimal (event-driven) | Low (instant updates) | Real-time analytics, event-driven apps |

Understanding the Core Mechanism

At its core, streaming CDC continuously reads the database transaction log and identifies individual changes such as inserts, updates, and deletes. Because the changes come from the log rather than from repeated queries against the tables, granular modifications can be captured while adding very little load to the source database.


The key components of streaming CDC include:

- Source Tracking: Monitoring original data sources for any modifications

- Change Detection: Identifying precise alterations in database records

- Event Streaming: Transmitting changes in near real-time to destination systems
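The log-reading mechanism described above can be sketched in a few lines. This is a minimal illustration, not how any particular database exposes its log: `TRANSACTION_LOG`, `ChangeEvent`, `read_changes`, and the `lsn` field are all hypothetical names, and an in-memory list stands in for a real transaction log.

```python
from dataclasses import dataclass
from typing import Iterator, Optional


@dataclass(frozen=True)
class ChangeEvent:
    lsn: int                 # position of the entry in the log (hypothetical name)
    op: str                  # "insert", "update", or "delete"
    table: str
    key: int
    before: Optional[dict]   # row image before the change (None for inserts)
    after: Optional[dict]    # row image after the change (None for deletes)


# Assumption: an append-only list stands in for a database transaction log.
TRANSACTION_LOG = [
    {"op": "insert", "table": "orders", "key": 1,
     "before": None, "after": {"status": "new"}},
    {"op": "update", "table": "orders", "key": 1,
     "before": {"status": "new"}, "after": {"status": "paid"}},
    {"op": "delete", "table": "orders", "key": 1,
     "before": {"status": "paid"}, "after": None},
]


def read_changes(log: list, start_lsn: int = 0) -> Iterator[ChangeEvent]:
    """Yield one ChangeEvent per log entry at or after start_lsn."""
    for lsn in range(start_lsn, len(log)):
        yield ChangeEvent(lsn=lsn, **log[lsn])


# Read the whole log, then resume from a saved position.
events = list(read_changes(TRANSACTION_LOG))
resumed = list(read_changes(TRANSACTION_LOG, start_lsn=2))
```

The reader never touches the `orders` table itself; it only walks the log from a saved position, which is why this style of capture can resume after a restart and adds little work to the source.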

Significance in Modern Data Architecture

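The U.S. Centers for Disease Control and Prevention, which shares the acronym but is otherwise unrelated to change data capture, stresses in its Public Health Data Modernization Initiative that real-time data processing is critical for responding quickly to emerging challenges. In enterprise data environments, streaming CDC serves a similar purpose by enabling: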

- Instant data synchronization across multiple systems

- Reduced latency in data warehousing and analytics

- Minimized performance impact on source databases

- Support for event-driven architectures and microservices
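As a rough illustration of synchronization across multiple systems, the sketch below applies one stream of change events to two in-memory copies. The event shape (`op`, `key`, `after`) and the names `apply_change`, `warehouse_copy`, and `cache_copy` are assumptions made for this example only.

```python
def apply_change(replica: dict, event: dict) -> None:
    """Apply one change event to an in-memory replica keyed by primary key."""
    if event["op"] in ("insert", "update"):
        replica[event["key"]] = event["after"]   # upsert keeps replays harmless
    elif event["op"] == "delete":
        replica.pop(event["key"], None)          # ignore deletes for unknown keys
    else:
        raise ValueError(f"unknown operation: {event['op']}")


changes = [
    {"op": "insert", "key": 1, "after": {"email": "a@example.com"}},
    {"op": "insert", "key": 2, "after": {"email": "b@example.com"}},
    {"op": "update", "key": 1, "after": {"email": "a2@example.com"}},
    {"op": "delete", "key": 2, "after": None},
]

warehouse_copy: dict = {}
cache_copy: dict = {}
for change in changes:
    # The same stream feeds every destination, so they converge on the same state.
    apply_change(warehouse_copy, change)
    apply_change(cache_copy, change)
```

Treating inserts and updates as upserts is a deliberate choice here: if an event is delivered twice, applying it again leaves the replica unchanged.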

By transforming how organizations capture and utilize data changes, streaming CDC represents a pivotal advancement in data integration strategies, offering unprecedented speed, accuracy, and efficiency in managing complex, distributed data ecosystems.

Why Streaming CDC is Important for Modern Data Systems

Streaming Change Data Capture (CDC) has become a critical technology for organizations seeking to transform their data management strategies, enabling unprecedented agility and responsiveness in complex, distributed computing environments. By providing real-time data synchronization and minimal latency, streaming CDC addresses fundamental challenges in contemporary data architectures.

Bridging Data Silos and Enhancing Responsiveness

In an era of rapid digital transformation, businesses need immediate data visibility to make decisions quickly. Streaming CDC breaks down data silos by creating continuous data flows across disparate systems, so that critical information is available for analysis and action soon after it changes.

Key advantages of streaming CDC include:

- Real-time Data Propagation: Immediate reflection of data changes across systems

- Minimal Performance Overhead: Reduced impact on source database performance

- Comprehensive Change Tracking: Granular monitoring of data modifications

Strategic Implications for Enterprise Data Management

According to TDWI’s research on streaming technologies, organizations implementing streaming CDC can expect significant improvements in data integration efficiency. The technology supports critical enterprise objectives by:

- Enabling event-driven architectures

- Supporting complex microservices environments

- Facilitating real-time analytics and reporting

- Reducing data warehouse loading times

Streaming CDC represents more than a technological upgrade: it is a strategic approach to data management that empowers organizations to become more adaptive, informed, and competitive in an increasingly data-driven world.

How Streaming CDC Enhances Real-Time Analytics

Streaming Change Data Capture (CDC) has emerged as a transformative technology that fundamentally reshapes how organizations approach real-time analytics, providing unprecedented capabilities for immediate data insights and dynamic decision-making. By enabling continuous, instantaneous data tracking and transmission, streaming CDC bridges the traditional gap between data generation and data analysis.

Accelerating Data Availability and Insights

In contemporary data ecosystems, the ability to process and analyze data in real time is no longer a luxury but a critical competitive advantage. Streaming CDC removes the latency built into scheduled batch loads by delivering data modifications as they are committed, allowing analytics platforms to generate insights shortly after changes occur in source systems.

Key performance advantages include:

- Immediate Data Reflection: Analytics platforms receive updates shortly after changes are committed in the source system

- Reduced Processing Overhead: Minimal computational resources required for data synchronization

- Continuous Insight Generation: Persistent data streams enable dynamic analytical models
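One way to picture continuous insight generation is an aggregate that is adjusted per change event instead of being recomputed from a full table scan. The sketch below assumes each event carries `before` and `after` row images; the `update_counts` helper and the order data are invented for illustration.

```python
from collections import Counter


def update_counts(counts: Counter, event: dict) -> None:
    """Adjust per-status order counts for a single change event."""
    before, after = event.get("before"), event.get("after")
    if before is not None:
        counts[before["status"]] -= 1   # the old row image leaves its bucket
    if after is not None:
        counts[after["status"]] += 1    # the new row image enters its bucket


orders_by_status: Counter = Counter()
stream = [
    {"op": "insert", "before": None, "after": {"id": 1, "status": "new"}},
    {"op": "insert", "before": None, "after": {"id": 2, "status": "new"}},
    {"op": "update", "before": {"id": 1, "status": "new"},
     "after": {"id": 1, "status": "paid"}},
    {"op": "delete", "before": {"id": 2, "status": "new"}, "after": None},
]
for event in stream:
    update_counts(orders_by_status, event)
```

Each event costs a constant amount of work, so the dashboard figure stays current without rescanning the orders table.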

Enabling Advanced Analytical Architectures

According to research from Worcester Polytechnic Institute, emerging data analytics tools are increasingly focused on processing high-volume streams with unprecedented speed and accuracy. Streaming CDC supports these advanced analytical architectures by:

- Supporting complex event processing systems

- Facilitating machine learning model retraining

- Enabling predictive and prescriptive analytics

- Providing granular, real-time data lineage tracking

By transforming data from a static resource into a dynamic, continuously evolving asset, streaming CDC empowers organizations to move beyond retrospective reporting towards proactive, anticipatory analytical strategies.

Key Concepts of Streaming CDC and Its Architecture

Streaming Change Data Capture (CDC) represents a sophisticated architectural approach to data synchronization, combining advanced technologies that enable real-time data tracking and transmission across complex distributed systems. Understanding its fundamental architecture provides insight into how modern organizations transform data management strategies.

Core Architectural Components

The streaming CDC architecture consists of interconnected elements designed to capture, process, and propagate data changes with minimal latency. These components work together to keep data moving and transforming without interruption.

The table below summarizes the role of each core architectural component in a streaming CDC system.

| Component | Purpose |
|---|---|
| Transaction Log Interceptors | Monitor and capture database modification events |
| Stream Processing Engines | Transform and route captured change events |
| Message Queuing Systems | Enable reliable and scalable data transmission |

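To show how the three components fit together, here is a toy pipeline in which each component is a plain function. It is only a sketch: the standard-library `queue.Queue` stands in for a real message broker, and the function names, the `cdc.<table>` topic naming, and the rule that skips tables starting with an underscore are all assumptions for this example.

```python
import queue
from typing import Iterable, Iterator


def intercept(log: Iterable[dict]) -> Iterator[dict]:
    """Transaction log interceptor: emit each log entry as a change event."""
    for position, entry in enumerate(log):
        yield {"position": position, **entry}


def process(events: Iterable[dict]) -> Iterator[tuple]:
    """Stream processing engine: drop internal tables, pick a topic per table."""
    for event in events:
        if event["table"].startswith("_"):
            continue  # hypothetical rule: underscore-prefixed tables are internal
        yield f"cdc.{event['table']}", event


def publish(routed: Iterable[tuple], broker: dict) -> None:
    """Message queue: append each event to the queue for its topic."""
    for topic, event in routed:
        broker.setdefault(topic, queue.Queue()).put(event)


log = [
    {"table": "orders", "op": "insert", "key": 1},
    {"table": "_migrations", "op": "insert", "key": 7},
    {"table": "customers", "op": "update", "key": 3},
]
broker: dict = {}
publish(process(intercept(log)), broker)
```

Because the stages are generators chained together, each event flows through capture, processing, and publishing one at a time rather than waiting for a batch to fill.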

Technical Mechanism and Data Flow

According to TDWI’s comprehensive research on streaming technologies, streaming CDC operates through a sophisticated sequence of data capture and propagation. The technical mechanism involves:

- Continuous scanning of database transaction logs

- Identifying and extracting granular data modification events

- Serializing change events into standardized message formats

- Routing events to downstream systems with minimal processing overhead

The architecture ensures that data changes are captured with high fidelity, maintaining the integrity and chronological sequence of modifications across different system environments.
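The serialization and ordering steps can be sketched as follows. The JSON envelope fields (including `schema_version` and `sequence`) and the `OrderedConsumer` class are hypothetical; real systems use their own formats and ordering guarantees, so treat this as one possible shape and not a standard.

```python
import json


def serialize(event: dict) -> bytes:
    """Encode a change event as a JSON envelope with stable key order."""
    envelope = {
        "schema_version": 1,            # hypothetical field
        "sequence": event["sequence"],  # log position, used for ordering
        "op": event["op"],
        "table": event["table"],
        "key": event["key"],
        "after": event.get("after"),
    }
    return json.dumps(envelope, sort_keys=True).encode("utf-8")


class OrderedConsumer:
    """Accepts events with increasing sequence numbers; skips redelivered ones."""

    def __init__(self) -> None:
        self.last_sequence = -1
        self.applied: list = []

    def receive(self, payload: bytes) -> bool:
        message = json.loads(payload)
        if message["sequence"] <= self.last_sequence:
            return False                # already seen: skip the duplicate
        self.last_sequence = message["sequence"]
        self.applied.append(message)
        return True


consumer = OrderedConsumer()
wire = [
    serialize({"sequence": n, "op": "update", "table": "orders", "key": 1,
               "after": {"status": status}})
    for n, status in enumerate(["new", "paid", "shipped"])
]
# wire[1] is delivered a second time at the end to simulate a redelivery.
results = [consumer.receive(message) for message in wire + [wire[1]]]
```

Carrying the log position in every message lets a downstream system detect a replayed event and keep the final state consistent with the order in which the source committed the changes.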

Transform Your CDC Strategy Into Real-Time Advantage With Streamkap

Are you feeling limited by delays, manual bottlenecks, or lack of visibility in your current CDC and ETL workflows? If this article made you realize how crucial sub-second change data capture and streaming analytics are for your success, imagine what your team could achieve with proactive data pipelines, zero manual intervention, and near-instant visibility. Streamkap enables you to bypass the old challenges of batch processing, latency, and painful schema updates. With automated CDC built on Kafka and Flink, no-code connectors for your favorite databases, and real-time SQL or Python transformations, your data becomes immediately actionable.

Stop letting outdated processes slow you down. Start using Streamkap to simplify real-time data integration, accelerate analytics, and reduce costs right now. Visit Streamkap’s homepage to see how quickly you can launch reliable, continuous pipelines, or discover how streaming ETL works in practice. See the difference of replacing reactive troubleshooting with proactive control today.

Frequently Asked Questions

What is Streaming Change Data Capture (CDC)?

Streaming Change Data Capture (CDC) is a data integration technique that captures real-time data changes in databases, allowing organizations to synchronize data and propagate modifications instantly.

How does Streaming CDC improve data analytics?

Streaming CDC enhances data analytics by enabling near-real-time data processing, allowing insights to be derived almost immediately after data changes, thereby supporting dynamic decision-making and proactive strategies.

What are the key benefits of implementing Streaming CDC in an organization?

Key benefits of Streaming CDC include real-time data propagation, reduced performance overhead on source databases, improved data synchronization across systems, and enabling event-driven architectures that support complex microservices environments.

What architectural components are involved in Streaming CDC?

The architecture of Streaming CDC includes transaction log interceptors for monitoring database changes, stream processing engines for routing events, and message queuing systems to ensure reliable data transmission with minimal latency.
