Change Data Capture for Streaming ETL

Overview

We will take a look at what Change Data Capture (CDC) is, how it works, what are the benefits of streaming change events, the business benefits, and use cases. For this blog, we will only look at Change Data Capture in the context of a streaming ETL via a transaction log and not other change data capture methods such as database triggers.

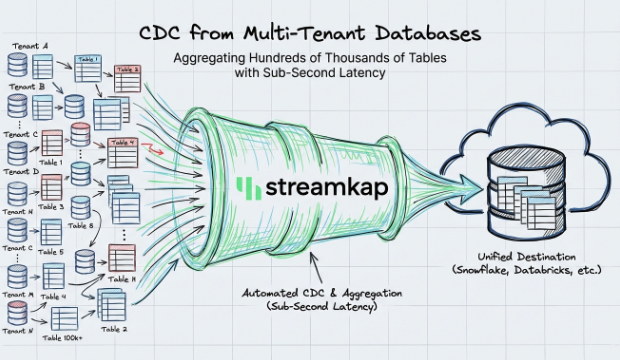

Typically, organizations begin their journey with Change Data Capture by wishing to track changed data from a source database using database transaction logs, and popular source databases are often PostgreSQL, MySQL, MongoDB, SQL Server & Oracle. The popular destinations are data warehouses or data lakes such as Snowflake, BigQuery, Databricks, and Delta Lake. This is an easy step to take to provide your organization with real-time data and gain many benefits.

What is Change Data Capture?

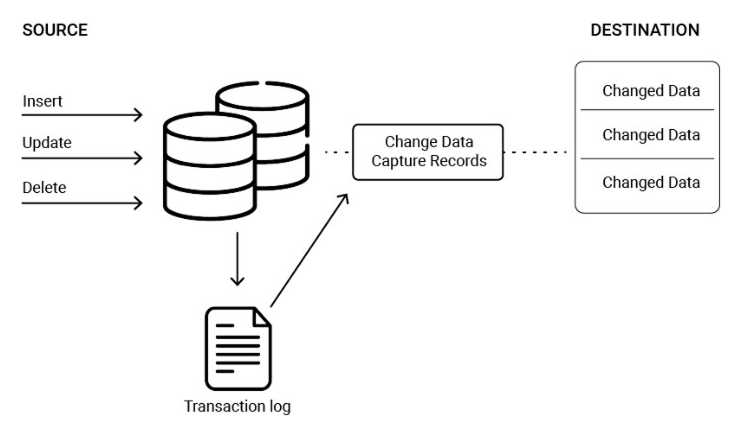

Change Data Capture refers to the process of capturing changes made to data in a source system such as a database, so that these change events can be used in the destination system, such as a data warehouse, data lake, data app, machine learning models, indexes, or caches. Better than a simple 1-to-1 replication, it’s also possible to read once but carry out multiple writes.

The above diagram shows Source Data being updated with DML statements (Inserts, Updates, Deletes) and these log-based CDC records being sent to a destination.

Taking this further, rather than only processing the changes as is, it is also possible to carry out in-stream processing such as generating new metrics, lookups, or removing PII data if needed.

The Change Data Capture real-time streaming approach to data integration also allows for high volume and velocity data replication that is both reliable and scalable, while using fewer resources. Combined with one-to-many mapping, log-based CDC is highly scalable and simplifies your architecture.

What is Streaming ETL

Streaming ETL (extract, transform, load) is a type of data integration process that involves continuously extracting data from various sources, transforming it to fit the needs of the destination system, and loading it into the destination system in near real-time.

In a streaming ETL process, data is extracted from the source system and transformed as it is received, rather than being extracted and transformed in batch intervals. Adding log-based CDC to the streaming ETL process allows the target system to remain up-to-date with the latest data from the source system, enabling real-time analytics and decision-making.

An open-source streaming ETL system you may be familiar with would be Apache Kafka.

How does Change Data Capture work?

The above diagram shows Database DML (Inserts, Updates, Deletes) executed on the database, which is then sent to the transaction log. Log-based CDC is then read in real-time and sent to the destination in the form of an Orders Table.

Databases such as PostgreSQL, MongoDB, and MySQL can be configured to track changes such as inserts, updates, and deletes. Change Data Capture is often called log-based replication. These changes in transactional databases are usually tracked by writing to the database transaction log, often called a write-ahead log (WAL) in a directory of the database. However, as the world starts to shift now to using change data, several databases and applications are employing different methods as the primary transaction log, such as Snowflake, which tracks changes in tables using Table Streams and does not provide a directory option. Whichever method is used, the idea is that changes are tracked and emitted as they happen and we call these events.

The change events are sent in the same order as they were generated in the original database. This way, Change Data Capture ensures that all the interested parties of a given dataset are precisely informed of the changes and can react accordingly, by either refreshing their version of the data or by triggering business processes.

What are the business benefits of Change Data Capture- Competitive advantage: With real-time data at their fingertips, organizations and its team can achieve better business outcomes faster as well as build real-time customer experiences.

- Protection of data: Change Data Capture can be used to prevent fraud, audit trails, or recover your systems to an exact point in time.

- Reduce costs: Change Data Capture is highly efficient and reduces the load on your source compared to the query in batches approach. Coupled with the ability to read from the log and write it out to many destinations such as data warehouses, data lakes, and data apps, you can save on system resources and bandwidth. On top of this, syncing in real-time removes the need for trying to line up schedules across multiple systems. All of this results in a simpler architecture with less cost.

Change Data Capture is more cost-effective than batch processing while providing real-time access to your data which can provide a competitive advantage.

What are the disadvantages of Change Data Capture

There are no disadvantages to implementing log-based CDC but it may be challenging to start with due to two concerns:

- Source Database: Typically, log-based CDC involves a couple of parameter changes on your database. This usually needs to be your production master/primary database and not a slave database since it is the master/primary which provides the transaction log to be read from.- Destination: Log-based CDC events can be significant in volume. You will need to decide how many historical change events you wish to keep and how you intend to clean them up. There are different methods here such as using upserts, setting retention policies on the destination tables, or simple scripts to clean up the history that is no longer required.

What are the use cases of change data capture?

- Streamlined ETL: By only capturing changes, Change Data Capture eliminates the need for bulk loading/updating at inconvenient batch intervals as well as having to worry about the timing of your scheduled workflows.

- Costs/Impact: Log-based replication is an effective way to reduce the impact on the source while obtaining new data. Additionally, with Streamkap, we can read once but write out to multiple destinations, whether that is the same contents, subset or in-stream transformed.

- Audit Trail: Change data capture allows you to store all events as an audit trail as well as being able to replay from a point in time and save this new data in a new location

- Recovery: Having an accurate record of every system adjustment makes it easier to restore the source to its original condition if a mistake occurs at a precise time

- Analytics: Move from stale dashboards to real-time

- Machine Learning: Stream updates to your data stores and models

- Data Apps: Build real-time data apps efficiently using Change Data Capture with streaming ETL

- Keep indexes and caches fresh with minimal effort

- Trigger immediate action upon a change event such as updating customer preferences

Does Change Data Capture include schema changes?

It is possible to use Change Data Capture and capture schema changes as well but it’s not always straightforward and can be complex to implement depending on the source and destination. It may also require additional considerations such as how to handle these changes automatically in the destination without breaking the consumers that rely upon this data. This is a major reason companies often choose to use a platform such as Streamkap, which can handle the schema evolution automatically.

What does a Change Data Capture message contain?

A message that contains Change Data Capture information may include the following types of data:

- The name of the database and table that the change occurred in

- The type of change that was made (e.g., insert, update, delete)

- The primary key of the affected row

- The old and new values of any columns that were updated

- The timestamp of the change, which can often be in ms

For example, a Change Data Capture message might look like this:

This message indicates that an update was made to the “users” table in the “Customers” database at the specified timestamp. The primary key of the affected row was “id” with a value of 555, and the “name” and “email” columns were both updated. The old and new values of these columns are also included in the message.

What does a Streamkap message contain?

As you can see below, Streamkap is tracking more information in each change message compared to the example above but the concept is the same. Here timestamp is held in milliseconds.

How can I start implementing Change Data Capture?

- Enable Change Data Capture on the source

- Obtain credentials for the destination of where you wish to send this data to

- Build a streaming ETL pipeline that can handle Change Data Capture events. If you go down the open-source route, then Kafka & Debezium is your starting point. If you have no experience with these technologies, it is going to take a while to get this going. On top of this, you may need development effort to handle schema changes (schema evolution).

- Alternatively, you could use Streamkap to deploy your streaming ETL Change Data Capture pipelines in minutes, which handles schema evolution and a number of sources/destinations out of the box.