What is Apache Flink? Understanding Stream Processing

August 25, 2025

Apache Flink powers some of the fastest data processing pipelines, handling millions of events per second with ease. Most people think real-time analytics at this scale is reserved for tech giants or requires massive infrastructure. Flink actually lets any organization unlock instant insights from streaming data and delivers results in milliseconds regardless of company size.

Table of Contents

Quick Summary

| Takeaway | Explanation |

| Apache Flink enables real-time data processing. | Flink processes massive data streams with low latency, facilitating instantaneous insights crucial for modern businesses. |

| Flink supports complex event processing. | Organizations can manage intricate data flows and execute window-based computations, enhancing their analytical capabilities. |

| Its architecture allows for fault tolerance. | Flink’s sophisticated checkpointing mechanisms ensure data consistency and system reliability during operations. |

| Flink is versatile across industries. | The framework is used in finance, telecommunications, and IoT for applications like fraud detection and network monitoring. |

| Flink facilitates scalable and flexible programming. | Its support for multiple programming languages and APIs allows developers to tailor solutions to specific data requirements. |

The Essential Concept of Apache Flink and Its Purpose

Apache Flink represents a cutting-edge distributed processing framework designed specifically for stateful computations over data streams. At its core, Flink enables organizations to process massive volumes of streaming data with low latency and high throughput, transforming complex data processing challenges into manageable, real-time solutions.

Understanding Stream Processing Technology

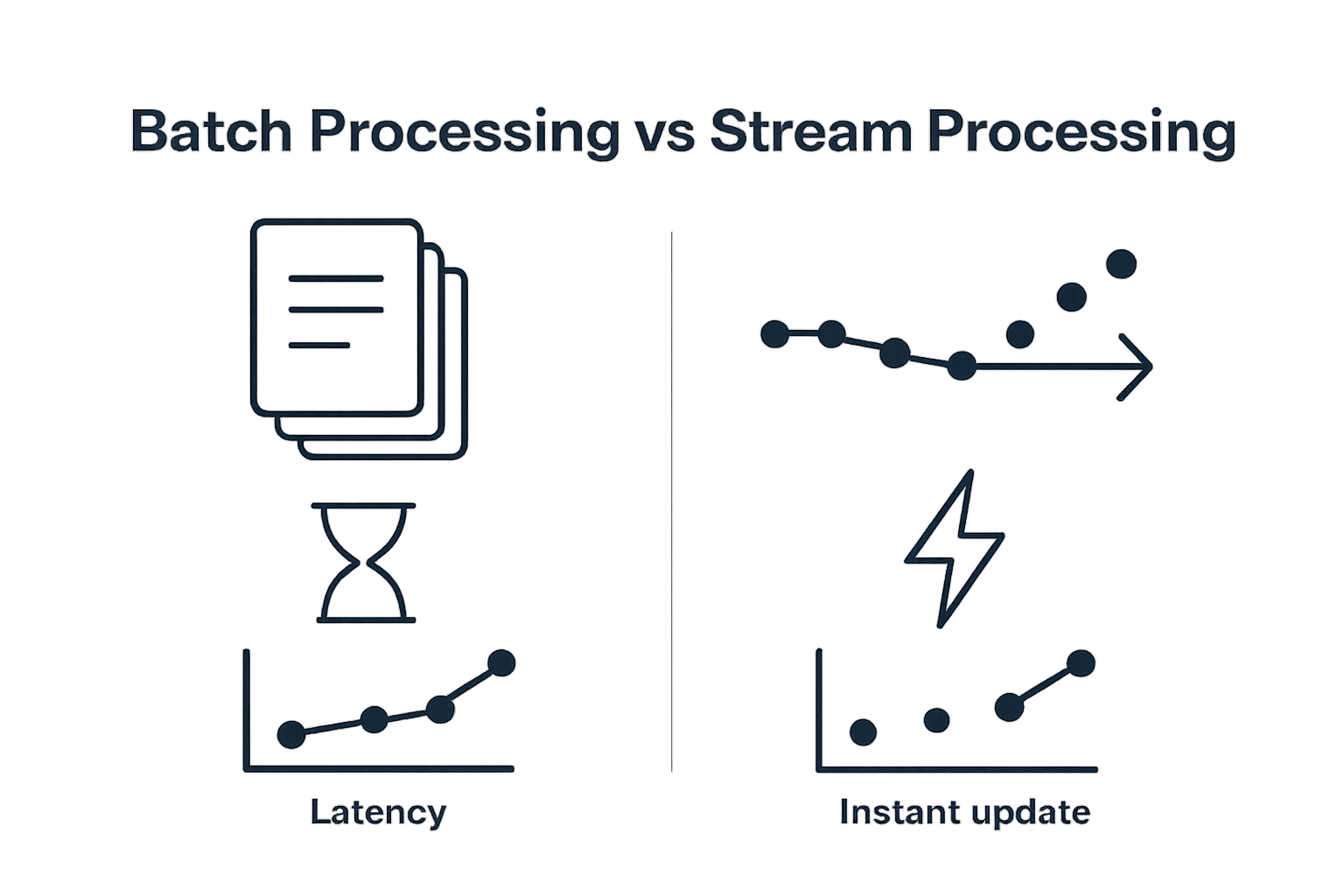

Stream processing is a revolutionary approach to handling data that breaks away from traditional batch processing methods. Unlike batch systems that process data in large, predetermined chunks, stream processing manages data in continuous, real-time flows.

To help clarify the core differences between batch processing and stream processing as discussed in the article, here is a concept comparison table summarizing their key characteristics.

| Processing Approach | Batch Processing | Stream Processing (Flink) |

| Data Handling | Processes data in large, predetermined chunks | Processes data continuously in real-time |

| Latency | High (results after all data is collected) | Low (results in milliseconds) |

| Use Case Examples | Historical reporting, periodic analytics | Real-time monitoring, instant alerts |

| Fault Tolerance | May require full job re-runs upon failure | Built-in fault tolerance with checkpoints |

| Complexity | Simpler for static data, less suited for dynamic | Suited for dynamic or always-on data flows |

Apache Flink excels in this domain by providing a robust platform that can handle event-time processing, manage state, and deliver precise results even in highly dynamic computing environments.

Key characteristics of Flink’s stream processing capabilities include:

-

Ability to process millions of events per second

-

Support for complex event processing and window-based computations

-

Fault-tolerant mechanisms ensuring data consistency

Architectural Design and Computational Model

Flink’s unique architecture separates it from other streaming frameworks. It employs a distributed runtime environment that can execute both batch and stream processing workloads with exceptional efficiency. According to O’Reilly’s Stream Processing Guide, Flink achieves this through its sophisticated execution engine that supports precise event-time semantics and provides advanced windowing operations.

The framework supports multiple programming languages and offers comprehensive libraries for machine learning, complex event processing, and graph processing. This flexibility makes Flink an attractive solution for data engineers and architects seeking a versatile streaming platform that can adapt to diverse computational requirements.

While many organizations struggle with real-time data processing, learn more about modern data integration strategies that can complement Flink’s powerful capabilities and transform your data workflow.

Why Apache Flink Matters in Today’s Data-Driven World

In an era of exponential data growth, Apache Flink emerges as a transformative technology that addresses the critical challenges of real-time data processing. Organizations across industries increasingly depend on instantaneous insights, making stream processing frameworks like Flink not just beneficial, but essential for competitive advantage.

Real-Time Decision Making and Business Intelligence

Modern businesses require immediate data analysis to respond swiftly to changing market conditions. Apache Flink enables near-instantaneous processing of massive data streams, allowing companies to make data-driven decisions in milliseconds. From financial trading platforms detecting market anomalies to e-commerce systems personalizing user experiences, Flink provides the computational backbone for intelligent, responsive systems.

Key business advantages of Apache Flink include:

-

Millisecond-level latency for time-critical applications

-

Scalable architecture supporting enterprise-level data volumes

-

Advanced analytics capabilities across multiple domains

Technological Innovation and Industry Applications

According to research presented at the ACM International Conference, Apache Flink demonstrates remarkable versatility across diverse technological domains. Its capabilities extend beyond traditional data processing, enabling sophisticated applications in fields like energy management, artificial intelligence, and Internet of Things (IoT) systems.

The framework’s ability to handle complex computational tasks makes it a preferred solution for cutting-edge technological innovations. Machine learning pipelines, real-time recommendation systems, and predictive maintenance algorithms increasingly rely on Flink’s robust processing capabilities.

Explore our real-time data integration solutions that leverage technologies like Apache Flink to transform how organizations manage and process streaming data. By understanding Flink’s potential, businesses can unlock unprecedented levels of operational efficiency and technological agility.

How Apache Flink Processes Data: An In-Depth Look

Apache Flink represents a sophisticated data processing framework that transforms complex streaming information through a meticulously designed computational approach. Its data processing mechanism goes far beyond traditional methods, enabling organizations to handle massive data volumes with unprecedented precision and efficiency.

Stream Processing Fundamentals

At the heart of Flink’s data processing strategy lies its streaming dataflow architecture. Unlike traditional batch processing systems, Flink treats every data element as part of a continuous stream. This approach allows for real-time computations where data is processed immediately upon arrival, enabling instantaneous insights and decision-making capabilities.

Critical components of Flink’s data processing methodology include:

-

Parallel execution of data transformations

-

Stateful computation capabilities

-

Dynamic scaling of computational resources

-

Fault-tolerant processing mechanisms

Computational Execution Model

According to research on distributed stream processing systems, Flink employs a sophisticated execution model that breaks down complex computational tasks into manageable, parallelizable units. The framework uses a directed acyclic graph (DAG) to represent data processing pipelines, where each node represents a specific computational operation and edges represent data flow between these operations.

Event-time processing stands out as a unique feature of Flink’s computational approach. This mechanism allows the system to process events based on their actual occurrence time, rather than the time they are received. Such capability is crucial for applications requiring precise temporal analysis, such as financial trading systems, IoT sensor networks, and real-time monitoring platforms.

Learn more about advanced stream processing techniques that can help you understand the nuanced capabilities of modern data integration frameworks. By comprehending Flink’s intricate data processing mechanisms, organizations can unlock powerful strategies for managing and extracting value from complex streaming data environments.

Key Features and Components of Apache Flink

Apache Flink represents a comprehensive stream processing framework with a sophisticated architecture designed to handle complex computational challenges. Its robust feature set enables organizations to build powerful, scalable data processing applications across diverse technological domains.

Core Processing Architecture

At the foundation of Flink’s design is its distributed computing model, which allows seamless parallel processing of massive data streams. The framework supports multiple programming interfaces, including DataStream API for stream processing and DataSet API for batch computations, providing developers with flexible tools for handling different data processing scenarios.

Key architectural components include:

-

Distributed runtime environment

-

Stateful stream processing capabilities

-

Advanced windowing mechanisms

-

Exactly-once processing semantics

-

Fault-tolerant checkpointing system

For quick reference, here is a table summarizing the key components and specialized libraries that make up Apache Flink, along with their main purposes.

| Component/Library | Description | Purpose |

| Runtime Environment | Distributed engine for batch and stream jobs | Executes and manages data processing |

| DataStream API | Interface for stream data processing | Real-time transformations and analytics |

| DataSet API | Interface for batch data processing | Handles batch computations |

| FlinkCEP | Complex Event Processing library | Detects patterns in event streams |

| Gelly | Graph processing library | Analyzes graph-structured data |

| FlinkML | Machine learning library | Streaming machine learning algorithms |

| Table API & SQL | Relational querying and data manipulation | Unified batch and stream processing |

Advanced Processing Libraries and Capabilities

According to research on distributed stream processing systems, Flink offers comprehensive libraries that extend its computational capabilities across multiple domains. These specialized libraries enable developers to tackle complex processing requirements without building foundational infrastructure from scratch.

Specialized Flink libraries include:

-

Gelly for graph processing

-

FlinkML for machine learning algorithms

-

Table API and SQL for relational data processing

-

FlinkCEP for complex event processing

The framework’s ability to support both stream and batch processing within a single system makes it a versatile solution for organizations seeking unified data processing platforms. Fault tolerance remains a cornerstone of Flink’s design, with sophisticated checkpointing mechanisms that ensure data consistency and system reliability.

Explore our real-time data integration solutions to understand how advanced stream processing technologies like Apache Flink can transform your data workflows. By leveraging these powerful computational frameworks, organizations can unlock unprecedented insights and operational efficiency.

Real-World Applications and Use Cases of Apache Flink

Apache Flink has emerged as a transformative technology across multiple industries, providing robust solutions for complex data processing challenges. Its versatility enables organizations to build sophisticated applications that require real-time analytics, event processing, and intelligent decision-making capabilities.

Industry-Specific Streaming Solutions

The framework’s powerful stream processing capabilities make it an ideal choice for industries demanding instantaneous data insights. Financial institutions leverage Flink for fraud detection systems, analyzing transactions in milliseconds to identify suspicious activities. In telecommunications, network operators use Flink to monitor network performance, detecting anomalies and preventing service disruptions before they impact customers.

Key industry applications include:

-

Financial fraud detection and risk management

-

Network performance monitoring

-

IoT sensor data processing

-

Predictive maintenance in manufacturing

-

Real-time recommendation systems

Advanced Computational Use Cases

According to comprehensive research on stream processing technologies, Flink supports a wide range of computational scenarios that go beyond traditional data processing. Event-driven applications benefit significantly from Flink’s ability to handle complex event processing, enabling businesses to create responsive systems that react to changing conditions in real time.

Some sophisticated use cases demonstrate Flink’s computational prowess:

-

Machine learning model training on streaming data

-

Complex algorithmic trading platforms

-

Real-time cybersecurity threat detection

-

Dynamic pricing systems

-

Geospatial data analysis

Explore our real-time data integration strategies to understand how advanced technologies like Apache Flink can revolutionize your data processing approach. By embracing these innovative solutions, organizations can transform raw data into actionable intelligence with unprecedented speed and accuracy.

Transform Your Data Streams Into Instant Insights with Streamkap

Traditional batch processing cannot keep up with the real-time demands discussed in What is Apache Flink? Understanding Stream Processing. Struggling with slow, manual ETL and delayed analytics makes it hard to deliver timely insights and responsive business intelligence. Apache Flink’s stream-first approach is powerful, but teams often find the underlying setup challenging, especially when handling sub-second data, event-time processing, and managing changing schemas across various databases.

Experience hands-free, real-time data movement right now. Streamkap delivers continuous low-latency pipelines by seamlessly integrating technologies like Flink and Kafka. Set up reliable change data capture and zero-maintenance streaming ETL from PostgreSQL, MySQL, or MongoDB to destinations such as Snowflake and Databricks—all without writing code. See how our no-code connectors solve the speed and complexity challenges you read about in this article. Modernize your data workflows today and discover why teams trust Streamkap for cost-effective, lightning-fast streaming. If you want to simplify your architecture and stay ahead of business needs, take the next step with Streamkap now.

Frequently Asked Questions

What is Apache Flink and what is it used for?

Apache Flink is a distributed processing framework designed for stateful computations over data streams. It is used to process large volumes of streaming data in real-time with low latency and high throughput, enabling organizations to derive insights and make decisions quickly.

How does Apache Flink handle stream processing?

Apache Flink employs a streaming dataflow architecture, processing each data element as part of a continuous flow. This allows for real-time computations, where data is processed immediately upon arrival, facilitating instant insights and decision-making capabilities.

What are the key features of Apache Flink?

Key features of Apache Flink include a distributed computing model, stateful stream processing, fault-tolerant checkpointing, advanced windowing mechanisms, and support for both batch and stream processing within a single platform.

In which industries is Apache Flink commonly used?

Apache Flink is used across various industries, including finance for fraud detection, telecommunications for network performance monitoring, and manufacturing for predictive maintenance. Its capabilities make it suitable for any sector requiring real-time analytics and event processing.

Recommended