Understanding Real-Time ETL Challenges Explained Clearly

September 3, 2025

Every business wants its data to work harder and faster. Real-time ETL is changing the rules by letting companies process information the moment it is created, and over 60 percent of modern enterprises are shifting towards real-time data pipelines to gain a competitive edge. Most people expect the biggest hurdles to be technical ones, like getting the right tools in place. It turns out the real struggle is keeping data quality high and making sure the flows run smoothly minute by minute.

Table of Contents

- What Is Real-Time ETL And Why Is It Important?

- Key Challenges In Real-Time ETL Processes

- Understanding Data Latency And Its Impact

- How Data Quality Affects Real-Time ETL

- Emerging Solutions For Real-Time ETL Challenges

Quick Summary

| Takeaway | Explanation |
|---|---|
| Real-Time ETL enables instant insights. | This approach allows organizations to analyze data as it is generated, improving decision-making speed. |
| Managing data volume is crucial. | High data flows from sources like IoT increase pressure on infrastructure, necessitating advanced streaming architectures. |
| Data quality ensures reliable transformations. | Maintaining completeness, accuracy, and consistency is essential to prevent errors in real-time ETL processes. |
| Automation enhances data processing efficiency. | Self-healing systems can adapt to changes, reducing manual intervention and boosting resilience in ETL workflows. |
| Latency impacts decision-making effectiveness. | Minimizing delays in data processing is vital for maintaining the relevance of insights in fast-paced environments. |

What is Real-Time ETL and Why is it Important?

Real-Time ETL represents a transformative approach to data integration that enables organizations to process and analyze data instantaneously as it is generated. Unlike traditional batch ETL processes that handle data in large periodic chunks, real-time ETL allows immediate extraction, transformation, and loading of information, providing businesses with up-to-the-moment insights.

Understanding the Core Mechanism

In real-time ETL, data moves through pipelines continuously and seamlessly. When a change occurs in a source system, such as a database update or a new customer record, the information is immediately captured, transformed according to predefined rules, and loaded into target systems. According to research from data integration experts, this approach is particularly crucial in complex and heterogeneous network environments where timely data processing can significantly impact decision-making.
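
The capture, transform, and load steps described above can be sketched in a few lines of Python. This is a minimal illustration, not a production design: the `ChangeEvent` shape, the email-normalization rule, and the in-memory dictionary standing in for a target system are all assumptions made for the example.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ChangeEvent:
    """One captured change from a source system (hypothetical shape)."""
    table: str
    op: str    # "insert", "update", or "delete"
    key: int
    row: dict


def transform(event: ChangeEvent) -> ChangeEvent:
    """Apply a predefined rule: trim and lowercase email addresses."""
    row = dict(event.row)
    if row.get("email") is not None:
        row["email"] = row["email"].strip().lower()
    return ChangeEvent(event.table, event.op, event.key, row)


def run_pipeline(events, target: dict) -> dict:
    """Handle each event as it arrives instead of waiting for a batch."""
    for event in events:
        out = transform(event)
        if out.op == "delete":
            target.pop(out.key, None)
        else:
            # Inserts and updates are both applied as upserts.
            target[out.key] = out.row
    return target


# Assumed sample stream of changes to a "customers" table.
events = [
    ChangeEvent("customers", "insert", 1, {"email": " Ada@Example.COM "}),
    ChangeEvent("customers", "insert", 2, {"email": "bob@example.com"}),
    ChangeEvent("customers", "update", 1, {"email": "ada@new.example.com"}),
    ChangeEvent("customers", "delete", 2, {}),
]
target = run_pipeline(events, {})
```

In a real deployment the `events` list would be replaced by a continuous source such as a change data capture feed, and the dictionary by a warehouse or database writer.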

Business Value and Strategic Importance

Real-Time ETL delivers substantial strategic advantages for modern enterprises. Key benefits include:

- Immediate Decision Making: Organizations can react to data changes instantly, enabling proactive strategies

- Enhanced Operational Efficiency: Reduces delays in data processing and reporting

- Improved Data Accuracy: Minimizes errors associated with batch processing delays

Moreover, industries like finance, healthcare, and e-commerce rely on real-time data integration to maintain a competitive edge. Learn more about the nuances of this approach in our guide to streaming ETL technologies.

By enabling continuous data flow and immediate transformation, real-time ETL empowers organizations to leverage their data assets more effectively, turning raw information into actionable intelligence with unprecedented speed and precision.

Key Challenges in Real-Time ETL Processes

Real-Time ETL processes face numerous complex challenges that can significantly impact data integration efficiency and reliability. While the promise of instantaneous data processing is compelling, organizations must navigate intricate technical and operational hurdles to successfully implement these advanced data workflows.

Data Volume and Velocity Management

One of the most significant challenges in real-time ETL is managing massive data volumes arriving at high velocity. As data integration research indicates, modern enterprises generate rapidly growing volumes of data from diverse sources like IoT devices, social media platforms, and transactional systems. This continuous data stream puts immense pressure on processing infrastructure, demanding high-performance computing resources and sophisticated streaming architectures.

Data Consistency and Quality Assurance

Maintaining data integrity during real-time transformations presents another critical challenge. Key issues include:

- Schema Drift: Unexpected changes in data structure that can break transformation pipelines

- Latency Variations: Inconsistent processing times that might introduce data synchronization problems

- Error Handling: Implementing robust mechanisms to manage and recover from data inconsistencies

Moreover, organizations must develop sophisticated validation techniques to ensure data quality remains high throughout the streaming process. Explore advanced analytics workflow strategies to understand comprehensive data management approaches.
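
One simple validation technique for the schema drift issue listed above is to compare each incoming record against an expected schema and quarantine anything that deviates, rather than letting it break a downstream transformation. The sketch below assumes a hypothetical three-field order schema; the field names and the quarantine approach are illustrative choices, not a prescribed method.

```python
# Hypothetical expected schema for an order stream.
EXPECTED_SCHEMA = {"order_id": int, "amount": float, "currency": str}


def detect_drift(record: dict, expected: dict = EXPECTED_SCHEMA) -> dict:
    """Report fields that are missing, unexpected, or of the wrong type."""
    missing = sorted(expected.keys() - record.keys())
    unexpected = sorted(record.keys() - expected.keys())
    wrong_type = sorted(
        name
        for name, expected_type in expected.items()
        if name in record and not isinstance(record[name], expected_type)
    )
    return {"missing": missing, "unexpected": unexpected, "wrong_type": wrong_type}


def route(records):
    """Send clean records onward and set drifted ones aside for review."""
    accepted, quarantined = [], []
    for record in records:
        drift = detect_drift(record)
        if any(drift.values()):
            quarantined.append((record, drift))
        else:
            accepted.append(record)
    return accepted, quarantined


records = [
    {"order_id": 1, "amount": 9.5, "currency": "USD"},
    # A new "coupon" column appeared and order_id arrived as a string.
    {"order_id": "2", "amount": 3.0, "currency": "EUR", "coupon": "X"},
]
accepted, quarantined = route(records)
```

Quarantining keeps the pipeline running while the drifted records wait for a schema update, which is usually preferable to failing the whole stream on the first unexpected field.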

Infrastructure and Scalability Constraints

Building a real-time ETL infrastructure capable of handling dynamic workloads requires significant technological investment. Companies must design flexible, horizontally scalable systems that can dynamically adjust to fluctuating data processing demands. This involves selecting appropriate streaming technologies, implementing efficient resource allocation strategies, and creating fault-tolerant architectures that can maintain performance under extreme computational stress.
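
A common building block for horizontal scalability is key-based partitioning: each record is assigned to a worker by hashing its key, so capacity grows by adding partitions while all events for one key stay in order on one worker. The following is a rough sketch under that assumption; the function names and the `customer-N` keys are invented for the example. It uses `hashlib` rather than Python's built-in `hash()`, because string hashes from `hash()` are randomized per process and would not give stable assignments.

```python
import hashlib


def partition_for(key: str, num_partitions: int) -> int:
    """Map a record key to a partition using a process-independent hash."""
    digest = hashlib.sha256(key.encode("utf-8")).digest()
    return int.from_bytes(digest[:8], "big") % num_partitions


def assign_partitions(keys, num_partitions: int) -> dict:
    """Group keys by the partition (worker) that should process them."""
    buckets = {p: [] for p in range(num_partitions)}
    for key in keys:
        buckets[partition_for(key, num_partitions)].append(key)
    return buckets


keys = [f"customer-{i}" for i in range(20)]
buckets = assign_partitions(keys, 4)
```

Note that changing `num_partitions` reassigns most keys under this simple modulo scheme, so systems that rescale often tend to use more elaborate strategies such as consistent hashing.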

Understanding Data Latency and Its Impact

Data latency represents the critical time delay between data generation and its availability for processing or analysis. In real-time ETL environments, minimizing this delay is paramount for maintaining the relevance and actionability of information across complex technological ecosystems.

Defining Latency in Data Processing

Latency is more than just a technical metric: it fundamentally determines the effectiveness of data-driven decision making. According to research from data integration experts, latency encompasses the entire journey of data from its origin point through transformation and delivery. The shorter this journey, the more valuable the data becomes for organizational strategies.

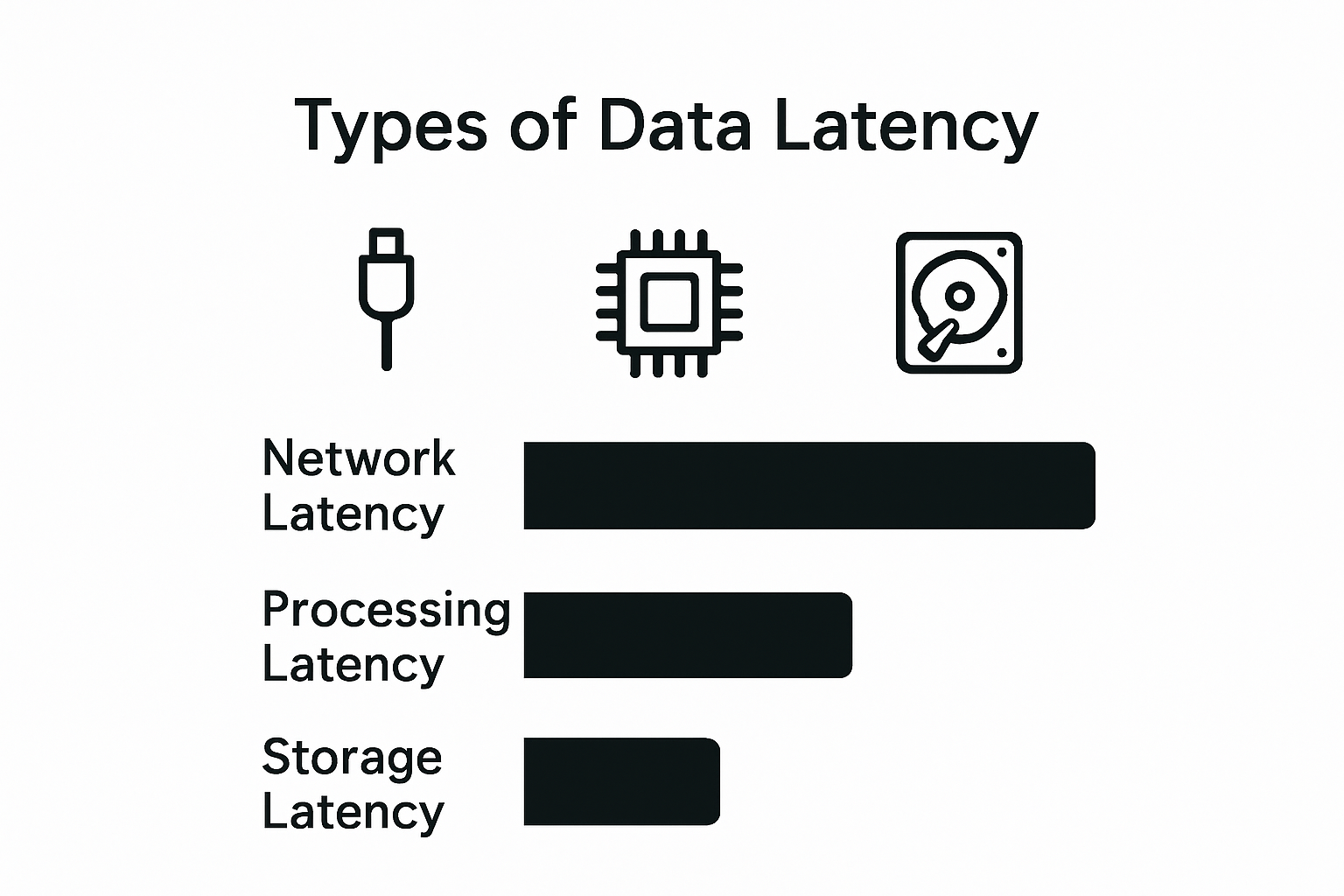

Types and Implications of Latency

Different latency categories significantly impact real-time ETL performance:

- Network Latency: Time required for data transmission across network infrastructure

- Processing Latency: Computational time needed to transform and prepare data

- Storage Latency: Delays associated with reading and writing data to storage systems

Each latency type introduces potential bottlenecks that can dramatically reduce the effectiveness of real-time data integration.

The following table outlines and compares different types of data latency discussed in the article, summarizing their definitions and their potential impact on real-time ETL performance.

| Latency Type | Definition | Impact on Real-Time ETL |
|---|---|---|
| Network Latency | Time required for data transmission across network infrastructure | Delays data arrival for processing |
| Processing Latency | Computational time needed to transform and prepare data | Slows down data readiness for insights |
| Storage Latency | Delays associated with reading and writing data to storage systems | Limits speed at which data can be accessed |
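
To see where time is actually lost, a pipeline can stamp each event at every stage and break the end-to-end delay into the three categories above. The sketch below is one way to do that; the `EventTimestamps` fields and the millisecond values are assumptions for illustration, and it presumes the clocks on the source, pipeline, and storage hosts are synchronized.

```python
from dataclasses import dataclass


@dataclass
class EventTimestamps:
    """Stage timestamps for one event, in milliseconds (hypothetical fields)."""
    created_at: int      # event generated at the source
    received_at: int     # event arrived at the pipeline
    transformed_at: int  # transformation finished
    stored_at: int       # write to the target completed


def latency_breakdown(ts: EventTimestamps) -> dict:
    """Split end-to-end latency into network, processing, and storage parts."""
    return {
        "network": ts.received_at - ts.created_at,
        "processing": ts.transformed_at - ts.received_at,
        "storage": ts.stored_at - ts.transformed_at,
        "end_to_end": ts.stored_at - ts.created_at,
    }


sample = EventTimestamps(
    created_at=1000, received_at=1040, transformed_at=1055, stored_at=1075
)
breakdown = latency_breakdown(sample)
# breakdown == {"network": 40, "processing": 15, "storage": 20, "end_to_end": 75}
```

Tracking the components separately shows which bottleneck to address first; in this made-up sample, network transfer accounts for more than half of the total delay.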

Performance and Business Impact

High latency can render data obsolete before it reaches decision makers. In fast-moving sectors like financial trading or emergency response, even milliseconds of delay can translate into significant operational consequences. Organizations must design robust architectural frameworks that prioritize low-latency data processing, implementing advanced streaming technologies and intelligent caching mechanisms to minimize temporal gaps between data generation and utilization.

How Data Quality Affects Real-Time ETL

Data quality represents a critical cornerstone in real-time ETL processes, directly influencing the reliability, accuracy, and usefulness of data transformations. Poor data quality can undermine entire data integration strategies, leading to flawed decision-making and significant operational risks.

Dimensions of Data Quality

Understanding data quality requires examining multiple interconnected dimensions. According to research from data management experts, comprehensive data quality assessment encompasses several key attributes:

- Completeness: Ensuring all required data elements are present

- Accuracy: Verifying data reflects true values and information

- Consistency: Maintaining uniform data representation across systems

- Timeliness: Delivering data within acceptable time frames

Impact on Real-Time Processing

In real-time ETL environments, data quality challenges can emerge rapidly and unexpectedly. Inconsistent or incomplete data streams can create cascading problems that propagate through entire data pipelines. Explore advanced analytics workflow strategies to understand sophisticated quality management techniques.

Mitigation and Monitoring Strategies

Organizations must implement robust data quality frameworks that proactively identify and remediate potential issues. This involves developing sophisticated validation mechanisms, implementing real-time data profiling tools, and creating adaptive transformation rules that can dynamically handle variations in data structure and content. Continuous monitoring, automated error detection, and immediate corrective actions are essential to maintaining high-quality data streams in real-time ETL processes.

This table summarizes the main dimensions of data quality as described in the article, providing clear definitions to help readers understand the fundamental concepts essential for real-time ETL success.

| Data Quality Dimension | Definition |
|---|---|
| Completeness | All required data elements are present |
| Accuracy | Data reflects true values and information |
| Consistency | Data is represented uniformly across systems |
| Timeliness | Data is delivered within acceptable time frames |
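
The four dimensions can be turned into per-record checks that run inside the stream. The following sketch shows one possible check per dimension; the required fields, the list of canonical country codes, the non-negative amount rule, and the five-second freshness limit are all assumptions chosen for the example rather than recommended thresholds.

```python
from datetime import datetime, timedelta, timezone

# Hypothetical rules for a payments stream.
REQUIRED_FIELDS = ("customer_id", "country", "amount", "event_time")
CANONICAL_COUNTRIES = {"US", "DE", "JP"}


def quality_issues(record: dict, now: datetime,
                   max_age: timedelta = timedelta(seconds=5)) -> list:
    """Return one message per violated data quality dimension."""
    issues = []

    # Completeness: every required field must be present and non-null.
    missing = [f for f in REQUIRED_FIELDS if record.get(f) is None]
    if missing:
        issues.append("completeness: missing " + ", ".join(missing))

    # Accuracy: a simple plausibility rule for the value itself.
    amount = record.get("amount")
    if amount is not None and amount < 0:
        issues.append("accuracy: negative amount")

    # Consistency: values must use the same representation across systems.
    country = record.get("country")
    if country is not None and country not in CANONICAL_COUNTRIES:
        issues.append("consistency: non-canonical country code")

    # Timeliness: the record must arrive within the allowed window.
    event_time = record.get("event_time")
    if event_time is not None and now - event_time > max_age:
        issues.append("timeliness: record older than allowed")

    return issues


now = datetime(2025, 9, 3, 12, 0, 0, tzinfo=timezone.utc)
good = {"customer_id": 7, "country": "US", "amount": 19.99,
        "event_time": now - timedelta(seconds=1)}
bad = {"customer_id": None, "country": "usa", "amount": -5.0,
       "event_time": now - timedelta(minutes=2)}

good_issues = quality_issues(good, now)
bad_issues = quality_issues(bad, now)
```

Passing `now` in as a parameter, rather than reading the clock inside the function, keeps the check deterministic and easy to test.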

Emerging Solutions for Real-Time ETL Challenges

As data complexity grows exponentially, organizations are developing innovative solutions to address real-time ETL challenges. These emerging technologies aim to create more flexible, robust, and efficient data integration frameworks that can adapt to increasingly dynamic technological landscapes.

Advanced Streaming Architectures

Modern real-time ETL solutions are leveraging sophisticated streaming architectures that enable continuous data processing. According to research in data integration technologies, new non-intrusive, reactive architectures like ‘Data Magnet’ demonstrate remarkable capabilities in handling complex data workflows with consistent performance across varying data volumes.

Intelligent Transformation Techniques

Next-generation ETL solutions are incorporating intelligent transformation mechanisms that go beyond traditional processing models:

- Adaptive Rule Engines: Dynamically adjusting transformation rules based on data variations

- Machine Learning Integration: Automatically detecting and correcting data inconsistencies

- Domain-Specific Customization: Enabling non-technical experts to participate in ETL design

Learn more about advanced data synchronization strategies to understand how cutting-edge technologies are revolutionizing data integration approaches.

Automation and Self-Healing Mechanisms

The future of real-time ETL lies in developing self-managing systems that can proactively identify, diagnose, and resolve data processing challenges. These emerging solutions focus on reducing human intervention, minimizing manual configuration, and creating intelligent pipelines that can automatically adapt to changing data structures and processing requirements. By implementing advanced monitoring, predictive error detection, and autonomous correction mechanisms, organizations can build more resilient and efficient real-time data integration ecosystems.
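
A very small instance of the self-healing idea is a processing step that retries transient failures with exponential backoff and moves records that keep failing to a dead-letter list, so one bad record does not stall the stream. The sketch below assumes hypothetical handlers and parameter names; the `sleep` argument is injectable so the example runs without actually waiting.

```python
import time


def process_with_retry(record, handler, dead_letters,
                       max_attempts=3, base_delay=0.1, sleep=time.sleep):
    """Retry a failing handler with exponential backoff, then dead-letter."""
    for attempt in range(1, max_attempts + 1):
        try:
            return handler(record)
        except Exception as exc:
            if attempt == max_attempts:
                # Give up on this record but keep the pipeline running.
                dead_letters.append((record, repr(exc)))
                return None
            # Wait 0.1s, 0.2s, 0.4s, ... between attempts.
            sleep(base_delay * 2 ** (attempt - 1))


calls = {"count": 0}


def flaky_handler(record):
    """Fails twice, then succeeds (simulates a brief target outage)."""
    calls["count"] += 1
    if calls["count"] < 3:
        raise ConnectionError("target unavailable")
    return {"loaded": record}


def broken_handler(record):
    """Always fails (simulates a record that can never be parsed)."""
    raise ValueError("cannot parse record")


delays = []        # records the backoff delays instead of sleeping
dead_letters = []
recovered = process_with_retry("r1", flaky_handler, dead_letters, sleep=delays.append)
failed = process_with_retry("r2", broken_handler, dead_letters, sleep=delays.append)
```

Here the first record recovers on its third attempt, while the second ends up in `dead_letters` for later inspection. Real systems typically add jitter to the delays and distinguish retryable errors from permanent ones instead of catching every exception.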

Transform Real-Time ETL Frustrations into Seamless Streaming Success

Stuck with constant worries about high data latency and inconsistent data quality, or overwhelmed by the pressure to support real-time business decisions? If managing data streaming, unpredictable schema changes, or scaling up infrastructure has left your team struggling to deliver reliable data pipelines, it is time for a new approach.

With Streamkap, you can finally take control. Replace complex batch ETL with automated, sub-second latency data pipelines and enjoy real-time transformations with simple no-code connectors for PostgreSQL, MySQL, MongoDB, and more. Our platform brings agile testing, automated validation, and robust change data capture right into the earliest stages of pipeline development. See how others are building cost-efficient, high-quality data streams with streaming ETL you can trust.

Embrace a future where your data is always ready for analytics and decision-making. Visit Streamkap’s homepage and experience faster, scalable, and stress-free real-time ETL today.

Frequently Asked Questions

What is real-time ETL?

Real-time ETL (Extract, Transform, Load) is a data integration process that enables organizations to capture and process data continuously as it is generated, allowing for immediate insights and decision-making.

What are the key challenges associated with real-time ETL?

Key challenges include managing large data volumes and high velocity, ensuring data consistency and quality, and building scalable infrastructure to meet dynamic processing demands.

How does data latency impact real-time ETL processes?

Data latency refers to the delay between data generation and its availability for analysis. High latency can hinder decision-making by rendering data obsolete before it can be acted upon.

Why is data quality important in real-time ETL?

Data quality is crucial in real-time ETL because poor quality data can lead to inaccurate insights and flawed decision-making, impacting operational effectiveness and strategy.
