
Top 12 Database Replication Tools for 2025

Explore the 12 best database replication tools for real-time synchronization. Compare features, pros, cons, and use cases to find your ideal solution.

In the world of data management, ensuring data is consistent, available, and up-to-date across multiple systems is a critical challenge. Whether you’re building resilient applications, migrating to the cloud, or powering real-time analytics, the right database replication tools are essential for achieving seamless data synchronization. These solutions solve the complex problem of moving data reliably between sources and targets, often with minimal latency and impact on production systems.

This guide is designed to help you navigate the crowded market of data replication software. We cut through the marketing jargon to provide a detailed, practical overview of the leading platforms available today. Instead of generic feature lists, we offer an in-depth analysis of 12 top-tier tools, from established enterprise solutions like Oracle GoldenGate to modern cloud-native services like Streamkap and open-source powerhouses like Debezium. Our goal is to equip you with the insights needed to make an informed decision for your specific technical and business requirements.

For each tool, you will find:

- A concise overview of its core functionality and ideal use cases.

- An honest assessment of its strengths and limitations.

- Practical implementation considerations to be aware of.

- Direct links to the platform and relevant documentation.

This resource is for data engineers, IT managers, and architects who need to select the most effective tool for their data infrastructure. We provide the details necessary to compare solutions and identify the one that best fits your environment, whether you need high availability, disaster recovery, or a foundation for your real-time data pipeline.

1. Streamkap

Streamkap establishes itself as a premier choice among modern database replication tools by delivering high-performance, real-time data movement through an exceptionally user-friendly platform. It masterfully replaces cumbersome traditional batch ETL processes with an event-driven architecture built on advanced Change Data Capture (CDC). This approach ensures data is streamed from source to destination in milliseconds, not minutes or hours, without imposing a performance burden on your production databases. Its core strength lies in abstracting away the immense complexity of managing systems like Apache Kafka and Flink, offering a serverless, no-code experience.

This empowers data teams to build and deploy sophisticated, low-latency pipelines with remarkable speed. Engineers can focus on leveraging fresh data for analytics, AI, and operational intelligence rather than grappling with infrastructure management. Streamkap’s architecture is specifically designed for reliability and scalability, making it a powerful solution for organizations looking to modernize their data stack and gain a competitive edge through real-time insights.

Standout Features and Practical Benefits

- Serverless CDC Platform: Streamkap’s fully managed infrastructure eliminates the need for users to provision, configure, or maintain complex streaming components. This significantly reduces operational overhead and technical debt.

- Automated Schema Handling: The platform automatically detects and propagates schema changes from source to destination. This prevents pipeline failures and ensures data integrity without manual intervention, a critical feature for agile development environments.

- In-Flight Transformations: Users can apply transformations using familiar Python or SQL directly within the data stream. This allows for data cleaning, enrichment, and normalization before it lands in the warehouse, streamlining the analytics pipeline.

- Cost-Effective Architecture: By leveraging an event-driven model, Streamkap delivers data processing at a significantly lower Total Cost of Ownership (TCO), with reports showing up to a 3x cost reduction compared to traditional batch ETL solutions.
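To make the in-flight transformation idea concrete, here is a minimal Python sketch of the kind of per-record function such a pipeline might run. It is not Streamkap's actual API: the `transform` function, the record fields (`email`, `amount_cents`, `is_test`), and the convention that returning `None` drops a record are all assumptions made for illustration.

```python
from typing import Optional


def transform(record: dict) -> Optional[dict]:
    """Clean, filter, and enrich one change record (hypothetical schema)."""
    # Filter: drop test transactions so they never reach the warehouse.
    if record.get("is_test"):
        return None
    cleaned = dict(record)  # copy so the incoming record is not mutated
    # Normalize: trim whitespace and lowercase the email address.
    cleaned["email"] = (record.get("email") or "").strip().lower()
    # Enrich: derive a dollar amount from the stored cents value.
    cleaned["amount_usd"] = record["amount_cents"] / 100
    return cleaned


incoming = [
    {"id": 1, "email": "  Ana@Example.COM ", "amount_cents": 2500},
    {"id": 2, "email": "qa@example.com", "amount_cents": 100, "is_test": True},
]
# Only records that survive the filter are passed on to the destination.
landed = [out for rec in incoming if (out := transform(rec)) is not None]
print(landed)
```

Keeping the function pure (one record in, one record or nothing out) is what makes this style of transformation easy to run inside a stream.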

Use Case: Real-Time Analytics Dashboard

A common application is powering live operational dashboards. A retail company can use Streamkap to replicate sales transaction data from a PostgreSQL production database directly into Snowflake. As new sales occur, the data appears on executive dashboards in sub-second time, enabling immediate inventory adjustments, fraud detection, and dynamic pricing strategies without waiting for nightly batch jobs.

Pros:

- Sub-second data synchronization via high-performance CDC

- User-friendly, no-code interface with automated schema drift handling

- Seamless integration with major destinations like Snowflake and Databricks

- Significantly lower total cost of ownership than legacy ETL

- Excellent customer support with dedicated Slack channels

Cons:

- Optimal use may require initial familiarity with CDC concepts

- Pricing is not listed publicly and requires direct contact for a quote

Website: https://streamkap.com

2. Oracle GoldenGate (Oracle)

Oracle GoldenGate is an enterprise-grade platform specializing in real-time data integration and heterogeneous database replication. As one of the most mature database replication tools on the market, its primary strength lies in high-performance, log-based Change Data Capture (CDC). This allows it to replicate data with sub-second latency, making it ideal for mission-critical systems that require continuous availability and real-time data analytics. The platform is not just a tool but a comprehensive ecosystem.

It supports a vast array of sources and targets, extending far beyond the Oracle stack to include other RDBMS, messaging queues like Kafka, and big data destinations. Oracle offers it both as traditional on-premises software and as a fully managed cloud service, OCI GoldenGate, which significantly reduces the operational burden of configuration and maintenance. For an in-depth analysis of its capabilities, you can explore various resources covering database replication software.

Key Features & Use Cases

- Log-Based CDC: Captures changes directly from transaction logs, minimizing impact on the source database performance. This is crucial for high-throughput OLTP systems.

- Heterogeneous Support: Replicates data between different database vendors (e.g., Oracle to PostgreSQL, SQL Server to Oracle) and modern data platforms.

- OCI GoldenGate: The managed cloud version provides a user-friendly interface for designing, executing, and monitoring replication pipelines, abstracting away complex infrastructure management.

- Veridata Tool: A powerful utility for data validation that compares source and target datasets at scale to ensure consistency and identify discrepancies without downtime.
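The data validation idea in the last bullet can be illustrated with a short Python sketch that compares source and target rows by key and by content hash. This is a generic technique, not Veridata's implementation; the `row_digest` and `compare` helpers and the `id` key column are hypothetical names chosen for the example.

```python
import hashlib
import json


def row_digest(row: dict) -> str:
    """Hash a row's content in a column-order-independent way."""
    canonical = json.dumps(row, sort_keys=True, default=str)
    return hashlib.sha256(canonical.encode("utf-8")).hexdigest()


def compare(source: list, target: list, key: str = "id") -> dict:
    """Report rows missing from, extra in, or different on the target."""
    src = {r[key]: row_digest(r) for r in source}
    tgt = {r[key]: row_digest(r) for r in target}
    return {
        "missing_in_target": sorted(src.keys() - tgt.keys()),
        "extra_in_target": sorted(tgt.keys() - src.keys()),
        "mismatched": sorted(k for k in src.keys() & tgt.keys() if src[k] != tgt[k]),
    }


source_rows = [{"id": 1, "name": "a"}, {"id": 2, "name": "b"}, {"id": 3, "name": "c"}]
target_rows = [{"id": 1, "name": "a"}, {"id": 2, "name": "B"}, {"id": 4, "name": "d"}]
report = compare(source_rows, target_rows)
print(report)
```

Comparing digests instead of full rows keeps the amount of data exchanged between the two sides small, which is one reason hash-based comparison is common for large tables.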

Pros:

- Extremely mature and reliable with extensive enterprise support.

- The managed cloud service simplifies deployment and operations.

- Broad connector library for diverse architectures.

Cons:

- On-premises licensing can be complex and costly.

- Its most advanced features are often optimized for the Oracle ecosystem.

Website: https://www.oracle.com/integration/goldengate/

3. Qlik Replicate (Qlik)

Qlik Replicate is a powerful, universal data replication and ingestion solution known for its simplicity and broad connectivity. It leverages log-based Change Data Capture (CDC) to move data in real-time with minimal impact on source systems. The platform’s core value proposition is its “click-to-replicate” design, which allows data engineers to set up and deploy replication pipelines quickly through an intuitive graphical interface, abstracting away much of the underlying complexity.

It excels in heterogeneous environments, supporting a vast matrix of on-premises databases, cloud data warehouses, data lakes, and streaming platforms. Qlik Replicate is often praised as one of the most accessible enterprise-grade database replication tools for teams that need to deliver data for analytics and BI without extensive manual scripting or deep expertise in every source system. The focus is on automating the entire replication process, from initial full load to continuous incremental updates.

Key Features & Use Cases

- Agentless, Log-Based CDC: Captures data changes directly from transaction logs without installing intrusive software on the source database servers, ensuring low overhead.

- Web Console for Design & Monitoring: A centralized web-based UI allows users to configure, execute, and monitor replication tasks from a single point of control.

- Broad Endpoint Matrix: Supports an extensive list of sources and targets, including all major RDBMS, data warehouses like Snowflake and BigQuery, and streaming systems like Kafka.

- Enterprise Manager: Provides a centralized management console for overseeing and controlling multiple Qlik Replicate servers, ideal for large-scale, distributed deployments.

Pros:

- Extremely straightforward setup and intuitive graphical user interface.

- Strong heterogeneous coverage across a wide variety of data sources and targets.

- Low-impact CDC minimizes performance degradation on production systems.

Cons:

- Pricing is not publicly disclosed and is available only via a custom quote.

- The server component has historically had a Windows-first footprint, which could be a limitation for some Linux-centric environments.

Website: https://www.qlik.com/us/products/qlik-replicate

4. IBM Data Replication (InfoSphere/IBM Data Replication)

IBM Data Replication is a high-volume, low-latency data movement platform rooted in IBM’s extensive enterprise ecosystem. It excels in environments with complex mainframe and distributed systems, offering robust Change Data Capture (CDC) and its high-throughput Q Replication technology. The platform is designed for mission-critical operations where data must be synchronized in near-real-time from sources like Db2 for z/OS, Oracle, and SQL Server to a wide range of targets, including modern data streaming platforms like Kafka.

This makes it one of the essential database replication tools for large organizations looking to modernize legacy data infrastructure without disrupting core operations. Its strength lies in its ability to bridge the gap between traditional systems of record and contemporary analytical or cloud-based applications, ensuring data consistency and availability across the enterprise.

Key Features & Use Cases

- High-Throughput Replication: Utilizes Q Replication, a message-based technology, to achieve extremely high throughput and low latency, ideal for active-active database configurations and disaster recovery.

- Mainframe Integration: Offers premier support for mainframe data sources, particularly Db2 for z/OS, enabling seamless data offloading and integration with distributed systems.

- Kafka Integration: Natively publishes data changes to Kafka topics, empowering real-time analytics, event-driven microservices, and modern data pipelines.

- Centralized Monitoring: Provides operational dashboards and a unified console for monitoring replication health, managing subscriptions, and tracking latency metrics across complex topologies.

Pros:

- Unmatched performance and reliability for Db2 and z/OS environments.

- Deep enterprise support infrastructure and a long history of reliability.

- Excellent for hybrid environments connecting mainframes to cloud or distributed systems.

Cons:

- Complex versioning and product lifecycle can be challenging to manage.

- Pricing is not transparent and requires direct sales engagement.

Website: https://www.ibm.com/products/data-replication

5. AWS Database Migration Service (AWS DMS)

AWS Database Migration Service (AWS DMS) is a fully managed cloud service designed to simplify migrating databases to AWS quickly and securely. While its name highlights migration, one of its core strengths is its capability for continuous data replication using Change Data Capture (CDC). This makes it a powerful and accessible database replication tool, especially for organizations heavily invested in the AWS ecosystem. DMS handles the complex tasks of provisioning, monitoring, and patching replication infrastructure.

It supports homogeneous migrations, like Oracle to Oracle, as well as heterogeneous migrations between different database platforms, such as Oracle to Amazon Aurora. The service offers both provisioned instances for predictable workloads and a serverless option that automatically scales capacity up or down based on demand. This flexibility, combined with its deep integration with other AWS services like S3 and Redshift, makes it a strategic choice for cloud-native data architectures.

Key Features & Use Cases

- Continuous Replication & Consolidation: Supports ongoing CDC to keep sources and targets synchronized for disaster recovery, analytics, or feeding data lakes. It can also consolidate multiple source databases into a single target.

- Serverless Option: Automatically provisions and scales replication resources based on workload, simplifying management and optimizing costs for variable traffic.

- Broad Engine Support: Works with a wide range of sources and targets, including commercial and open-source databases such as SQL Server and PostgreSQL, and AWS services such as RDS, Redshift, S3, and DynamoDB.

- Free Tier: Offers a generous free tier for new users, including up to 750 hours of a single-AZ dms.t2.micro instance per month, making it accessible for testing and small projects.

Pros:

- Fully managed service eliminates the need for server maintenance.

- Seamless integration with other AWS services and networking.

- Cost-effective serverless and free tier options available.

Cons:

- Cost modeling can be complex, balancing provisioned instances versus serverless metering.

- Optimal performance and ease of use are primarily within the AWS cloud ecosystem.

Website: https://aws.amazon.com/dms/pricing/

6. Google Cloud Datastream (GCP)

Google Cloud Datastream is a serverless, easy-to-use Change Data Capture (CDC) and replication service fully integrated into the Google Cloud ecosystem. Its primary function is to simplify the process of synchronizing data from operational databases like Oracle and MySQL directly into Google Cloud destinations such as BigQuery, Cloud SQL, Cloud Storage, and Spanner. As a key component in modern data architectures on GCP, Datastream is designed to power real-time analytics, database migrations, and event-driven applications with minimal latency and operational overhead.

The platform stands out for its serverless nature, which eliminates the need for users to provision or manage any replication-specific infrastructure. It handles both the initial backfill of historical data and the ongoing capture of real-time changes, streaming them reliably to the destination. This makes it an excellent choice among database replication tools for organizations deeply invested in GCP’s analytics and data warehousing services, offering a streamlined path to unlock insights from their operational data.

Key Features & Use Cases

- Serverless Architecture: No servers to provision or manage, allowing teams to focus on data pipelines rather than infrastructure. The service scales automatically to handle data volume changes.

- Real-time CDC and Backfill: Efficiently performs an initial snapshot of existing data and seamlessly transitions to streaming real-time changes from the source database’s transaction log.

- Tight GCP Integration: Natively delivers data to BigQuery, Cloud Storage, and Cloud SQL, with streamlined templates for Dataflow and Data Fusion to enable complex transformations.

- Transparent Pricing: Offers a tiered pricing model with a free tier for initial data backfills. Costs are based on the volume of data processed, with clear regional pricing visibility.
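The backfill-then-stream pattern described above can be sketched in a few lines of Python. This is a conceptual illustration, not how Datastream is implemented: the `position` field (standing in for a log sequence number), the `op` values, and the `id` key are assumptions made for the example.

```python
def backfill_then_stream(snapshot_rows, snapshot_position, change_log):
    """Load a snapshot, then apply only the changes logged after it."""
    # Phase 1: backfill the target from the initial snapshot.
    target = {row["id"]: row for row in snapshot_rows}
    # Phase 2: replay the transaction log in order.
    for change in change_log:
        if change["position"] <= snapshot_position:
            continue  # already reflected in the snapshot, skip it
        if change["op"] == "delete":
            target.pop(change["id"], None)
        else:  # "insert" and "update" both upsert the latest row image
            target[change["id"]] = change["row"]
    return target


snapshot = [{"id": 1, "qty": 5}, {"id": 2, "qty": 1}]
log = [
    {"position": 90, "op": "update", "id": 1, "row": {"id": 1, "qty": 4}},
    {"position": 101, "op": "update", "id": 1, "row": {"id": 1, "qty": 7}},
    {"position": 102, "op": "delete", "id": 2},
    {"position": 103, "op": "insert", "id": 3, "row": {"id": 3, "qty": 9}},
]
replica = backfill_then_stream(snapshot, snapshot_position=100, change_log=log)
print(replica)
```

Recording the log position at which the snapshot was taken is what lets the two phases join without losing or double-applying changes.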

Pros:

- Extremely simple to set up and operate within Google Cloud Platform.

- Optimized for and tightly integrated with BigQuery for analytics workloads.

- Serverless design significantly reduces management overhead.

Cons:

- Source and destination options are limited compared to more platform-agnostic tools.

- Additional costs are incurred for downstream GCP services like BigQuery storage and querying.

Website: https://cloud.google.com/datastream

7. Azure Database Migration Service (Microsoft)

Azure Database Migration Service (DMS) is a fully managed service designed to enable seamless migrations from multiple database sources to Azure data platforms with minimal downtime. While its primary purpose is migration, its online migration capability makes it a powerful tool for continuous data replication during the transition period. It orchestrates the entire migration process, from assessment to the final cutover, making it a cornerstone of Microsoft’s cloud adoption framework.

The service is deeply integrated with the Azure ecosystem, working alongside tools like Azure Migrate for discovery and the Azure SQL Migration extension in Azure Data Studio for a guided experience. DMS supports both offline (one-time) and online (continuous synchronization) migrations from sources like SQL Server, PostgreSQL, MySQL, and MongoDB. This flexibility allows organizations to perform phased cutovers, ensuring business continuity. To better understand its continuous sync functionality, explore Azure SQL Database change data capture methods.

Key Features & Use Cases

- Online Continuous Migrations: Performs an initial load of the source database and then continuously applies subsequent changes from transaction logs, allowing applications to remain online until the final cutover.

- Azure Ecosystem Integration: Works natively with Azure Data Studio and Azure Migrate to provide a unified workflow for assessing, planning, and executing database migrations.

- Heterogeneous Source Support: While optimized for SQL Server to Azure SQL migrations, it also supports moving open-source databases like PostgreSQL and MySQL into their Azure-managed equivalents.

- Tiered Pricing Model: Offers a free Standard tier for offline migrations and a Premium tier that supports the more complex, minimal-downtime online migrations.

Pros:

- Seamless, native integration with the broader Azure cloud ecosystem.

- Online migration mode is excellent for phased cutovers with near-zero downtime.

- The guided experience simplifies complex migration projects.

Cons:

- Purpose-built for migrating into Azure, not for general-purpose, multi-cloud replication.

- Premium tier pricing can be complex, varying by region and instance type.

Website: https://azure.microsoft.com/en-us/products/database-migration

8. Fivetran HVR

Fivetran HVR (High-Volume Replicator) is an enterprise-grade solution designed for high-volume, real-time data replication in complex environments. It specializes in log-based Change Data Capture (CDC) and is particularly well-suited for organizations with strict security or regulatory requirements that necessitate on-premises, hybrid, or VPC deployments. Unlike pure SaaS tools, HVR offers the flexibility to run in self-managed or even fully air-gapped environments, giving organizations complete control over their data pipeline infrastructure.

This platform excels at replicating data from heterogeneous sources, including a wide array of databases and file systems. A key advantage is its integration with the broader Fivetran ecosystem. Customers can leverage HVR for secure, on-premises replication and seamlessly connect to Fivetran’s extensive library of cloud connectors, all managed under a unified consumption-based pricing model based on Monthly Active Rows (MAR). This makes it a powerful option among modern database replication tools for hybrid architectures.

Key Features & Use Cases

- Enterprise CDC: Provides robust, low-latency log-based CDC for diverse sources like Oracle, SQL Server, Db2, and SAP HANA, ensuring minimal performance impact on production systems.

- Flexible Deployment Models: Supports on-premises, hybrid-cloud, VPC, and air-gapped deployments, making it ideal for government, finance, and healthcare industries.

- Unified Pricing Model: Usage is measured via Monthly Active Rows (MAR), combining both HVR and cloud connector consumption into a single, predictable bill.

- Centralized Management: A central hub architecture allows for the design, deployment, and monitoring of replication channels from a single graphical user interface.
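To show how a Monthly Active Rows style metric behaves, the sketch below counts each distinct row once per calendar month, no matter how many times it changes. It assumes MAR is based on distinct primary keys per table per month; the change-record fields (`table`, `pk`, `timestamp`) are hypothetical, and actual billing rules should be checked against Fivetran's documentation.

```python
from collections import defaultdict


def monthly_active_rows(changes):
    """Count distinct (table, primary key) pairs touched in each month."""
    seen = defaultdict(set)
    for change in changes:
        month = change["timestamp"][:7]  # "YYYY-MM" from an ISO 8601 string
        seen[month].add((change["table"], change["pk"]))
    return {month: len(keys) for month, keys in seen.items()}


changes = [
    {"table": "orders", "pk": 1, "timestamp": "2025-01-03T10:00:00"},
    {"table": "orders", "pk": 1, "timestamp": "2025-01-20T09:30:00"},  # same row again
    {"table": "orders", "pk": 2, "timestamp": "2025-01-21T12:00:00"},
    {"table": "customers", "pk": 1, "timestamp": "2025-01-22T08:15:00"},
    {"table": "orders", "pk": 1, "timestamp": "2025-02-01T00:05:00"},
]
mar = monthly_active_rows(changes)
print(mar)
```

Under this assumption, a frequently updated row costs the same as a row updated once, so estimates should be driven by how many distinct rows change rather than by raw change volume.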

Pros:

- Strong fit for regulated, hybrid, or security-conscious environments.

- Integrates with Fivetran’s cloud connectors under a unified pricing structure.

- High-performance replication for large, complex enterprise databases.

Cons:

- Requires more setup and operational effort than fully managed SaaS solutions.

- Pricing model changes, like the 2025 update, require careful review to manage costs.

Website: https://fivetran.com/docs/hvr6

9. Quest SharePlex

Quest SharePlex is a proven and highly reliable database replication tool with a long history of serving large enterprise environments. It specializes in replicating data from Oracle and PostgreSQL databases to a variety of targets, focusing on high availability, zero-downtime migrations, and offload reporting. Its core strength lies in its robust, log-based Change Data Capture (CDC) mechanism that ensures near-real-time data synchronization with minimal impact on source system performance.

Trusted by Fortune 500 companies, SharePlex is often considered a more straightforward and cost-effective alternative to other enterprise-grade solutions. It supports replication to diverse destinations, including other relational databases, modern data warehouses like Snowflake, and streaming platforms such as Kafka. This flexibility makes it a versatile choice for organizations looking to integrate their legacy systems with modern data analytics pipelines or implement comprehensive disaster recovery strategies.

Key Features & Use Cases

- Log-Based CDC: Captures data changes from Oracle and PostgreSQL transaction logs, delivering low-latency replication without adding significant overhead to production databases.

- Active-Active Replication: Supports bi-directional replication configurations with built-in conflict resolution, enabling high availability and load balancing across geographically distributed systems.

- Heterogeneous Targets: Facilitates data movement to various platforms, including Kafka, Snowflake, SQL Server, and Teradata, supporting data integration and analytics initiatives.

- Enterprise Monitoring: Provides a comprehensive console for monitoring replication health, tracking data latency, and managing replication processes to ensure data integrity.
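Conflict resolution in an active-active setup can follow many policies. The sketch below shows one common policy, last-writer-wins with a deterministic tie-break on site name. It is not SharePlex's built-in logic; the `resolve` and `apply_remote` helpers and the `updated_at` and `site` fields are assumptions made for illustration.

```python
def resolve(local: dict, incoming: dict) -> dict:
    """Last-writer-wins: keep the newer version; break ties by site name."""
    local_rank = (local["updated_at"], local["site"])
    incoming_rank = (incoming["updated_at"], incoming["site"])
    return incoming if incoming_rank > local_rank else local


def apply_remote(table: dict, incoming: dict) -> None:
    """Apply a change replicated from another site to the local copy."""
    current = table.get(incoming["id"])
    table[incoming["id"]] = incoming if current is None else resolve(current, incoming)


# Both sites updated row 7 before replication caught up.
site_a = {7: {"id": 7, "status": "shipped", "updated_at": 1700000050, "site": "A"}}
from_b = {"id": 7, "status": "cancelled", "updated_at": 1700000042, "site": "B"}
apply_remote(site_a, from_b)
print(site_a[7]["status"])
```

Because both sites evaluate the same ordering, they converge on the same winner regardless of the order in which the conflicting changes arrive.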

Pros:

- Renowned for its reliability and trusted by major enterprises.

- Generally simpler to install and configure compared to some competitors.

- Strong vendor support and a dedicated user community.

Cons:

- Pricing is typically available by quote and can have nuanced licensing options.

- Historically focused on Oracle and PostgreSQL as primary sources.

Website: https://www.quest.com/products/shareplex/

10. Debezium (open source)

Debezium is a distributed, open-source platform for Change Data Capture (CDC) that has become a de facto standard in modern data architectures. It captures row-level changes in your databases and streams them to consumers, making it an excellent choice among database replication tools for microservices, data warehousing, and real-time analytics pipelines. Its core design philosophy is to be a library of connectors that work seamlessly within existing data streaming ecosystems.

While most commonly deployed with Apache Kafka and Kafka Connect, Debezium offers flexibility through its Debezium Server, allowing for standalone operation that can stream changes to other messaging infrastructures like Amazon Kinesis or Google Cloud Pub/Sub. This adaptability makes it a powerful tool for organizations with the engineering capacity to manage and operate their own data infrastructure. For a deeper understanding of its architecture and how it functions, you can find helpful resources explaining what Debezium is and how it works.

Key Features & Use Cases

- Row-Level Change Streams: Provides detailed, low-latency data events for every insert, update, and delete, ensuring downstream systems have a granular and ordered view of changes.

- Rich Connector Ecosystem: Offers robust, log-based CDC connectors for popular databases including MySQL, PostgreSQL, SQL Server, Oracle, and MongoDB, with strong Kubernetes operator support for cloud-native deployments.

- Flexible Deployment: Operable with Kafka for large-scale, resilient streaming or as a standalone server, which simplifies architecture for smaller use cases or non-Kafka environments.

- Active Community: Backed by a vibrant and active open-source community, ensuring continuous development, a wealth of shared knowledge, and a wide array of community-supported connectors.
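To illustrate what consuming row-level change events looks like, here is a small Python sketch that applies Debezium-style events to an in-memory replica. The `op`, `before`, and `after` fields follow Debezium's change event envelope; the primary key column `id`, the sample rows, and the `apply_event` helper are assumptions made for the example, and a real consumer would read these messages from Kafka or another sink.

```python
import json


def apply_event(table: dict, raw) -> None:
    """Apply one Debezium-style change event (JSON string) to a dict replica."""
    if raw is None:
        return  # tombstone message that follows a delete, nothing to apply
    event = json.loads(raw)
    # With schemas enabled, the change data is nested under "payload".
    payload = event.get("payload", event)
    op = payload["op"]
    if op in ("c", "r", "u"):  # create, snapshot read, update
        row = payload["after"]
        table[row["id"]] = row
    elif op == "d":  # delete: only the "before" image is populated
        table.pop(payload["before"]["id"], None)


events = [
    json.dumps({"op": "c", "before": None, "after": {"id": 1, "email": "a@example.com"}}),
    json.dumps({"op": "u", "before": {"id": 1, "email": "a@example.com"},
                "after": {"id": 1, "email": "b@example.com"}}),
    json.dumps({"op": "c", "before": None, "after": {"id": 2, "email": "c@example.com"}}),
    json.dumps({"op": "d", "before": {"id": 2, "email": "c@example.com"}, "after": None}),
    None,
]
replica = {}
for raw in events:
    apply_event(replica, raw)
print(replica)
```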

Pros:

- Completely free, open-source, and highly extensible.

- Widely adopted with strong community support and documentation.

- Flexible deployment options fit diverse infrastructure needs.

Cons:

- Requires significant engineering effort to deploy, manage, and scale the required infrastructure (e.g., Kafka).

- Official enterprise-grade support is not provided directly and must be obtained through third-party vendors or partners.

Website: https://debezium.io/

11. SymmetricDS (JumpMind)

SymmetricDS is a versatile open-source and commercial platform designed for database synchronization and replication, particularly excelling in multi-site, bi-directional, and occasionally connected scenarios. Its trigger-based Change Data Capture (CDC) mechanism allows it to operate across a vast range of databases and operating systems. This makes it a highly adaptable solution for distributed environments like retail point-of-sale (POS) systems, IoT data aggregation, or multi-office data consolidation where network connectivity may be intermittent.

The platform offers both a community edition under the GPLv3 license and a commercial Pro version from JumpMind, which adds a web-based user interface, enhanced security, and dedicated support. This dual-offering model makes it an accessible entry point for smaller projects while providing a clear upgrade path for enterprise-level deployments. Its flexibility in handling complex network topologies, such as hub-and-spoke or peer-to-peer mesh, sets it apart from many other database replication tools that assume constant connectivity.

Key Features & Use Cases

- Trigger-Based Synchronization: Utilizes database triggers to capture data changes, ensuring broad compatibility across different database platforms without relying on specific transaction log formats.

- Flexible Topologies: Excels at managing complex data flows, including hub-and-spoke, multi-master mesh, and tiered synchronization, making it ideal for distributed enterprises.

- Offline Operation: Designed to handle intermittent network connectivity by queuing data changes and synchronizing automatically when a connection is re-established.

- Open-Source & Commercial Editions: Offers a powerful free version for community use and a Pro version with a web console, enterprise support, and additional features for mission-critical deployments.
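The trigger-based capture and offline queuing described above can be demonstrated with Python's built-in `sqlite3` module. This is a simplified sketch of the general technique, not SymmetricDS's actual schema or protocol: the `sale` and `change_log` tables, the trigger names, and the `drain` helper are all hypothetical.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.executescript("""
CREATE TABLE sale (id INTEGER PRIMARY KEY, amount REAL);
-- Every captured change is queued here until it has been shipped.
CREATE TABLE change_log (
    seq INTEGER PRIMARY KEY AUTOINCREMENT,
    op TEXT, row_id INTEGER, amount REAL
);
CREATE TRIGGER sale_ins AFTER INSERT ON sale BEGIN
    INSERT INTO change_log (op, row_id, amount) VALUES ('I', NEW.id, NEW.amount);
END;
CREATE TRIGGER sale_upd AFTER UPDATE ON sale BEGIN
    INSERT INTO change_log (op, row_id, amount) VALUES ('U', NEW.id, NEW.amount);
END;
CREATE TRIGGER sale_del AFTER DELETE ON sale BEGIN
    INSERT INTO change_log (op, row_id, amount) VALUES ('D', OLD.id, NULL);
END;
""")


def drain(conn, send) -> int:
    """Ship queued changes in order; stop at the first failure so none are lost."""
    rows = conn.execute(
        "SELECT seq, op, row_id, amount FROM change_log ORDER BY seq"
    ).fetchall()
    sent = 0
    for seq, op, row_id, amount in rows:
        if not send(op, row_id, amount):
            break  # link is down: keep this change and the rest queued
        conn.execute("DELETE FROM change_log WHERE seq = ?", (seq,))
        sent += 1
    return sent


conn.execute("INSERT INTO sale VALUES (1, 19.99)")
conn.execute("UPDATE sale SET amount = 24.99 WHERE id = 1")
conn.execute("DELETE FROM sale WHERE id = 1")

received = []
offline_sent = drain(conn, lambda *change: False)  # simulated network outage
online_sent = drain(conn, lambda *change: received.append(change) is None)
print(offline_sent, online_sent, received)
```

The sketch also makes the trade-off from the Cons list visible: every write to `sale` triggers a second write to `change_log` inside the same database, which is where the extra load on the source comes from.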

Pros:

- Excellent for occasionally connected and low-bandwidth environments.

- Highly flexible topology configurations support complex distributed systems.

- Cost-effective with a robust open-source community edition.

Cons:

- Trigger-based CDC can introduce performance overhead on the source database.

- The web-based UI and professional support are limited to the paid Pro version.

Website: https://symmetricds.org/download

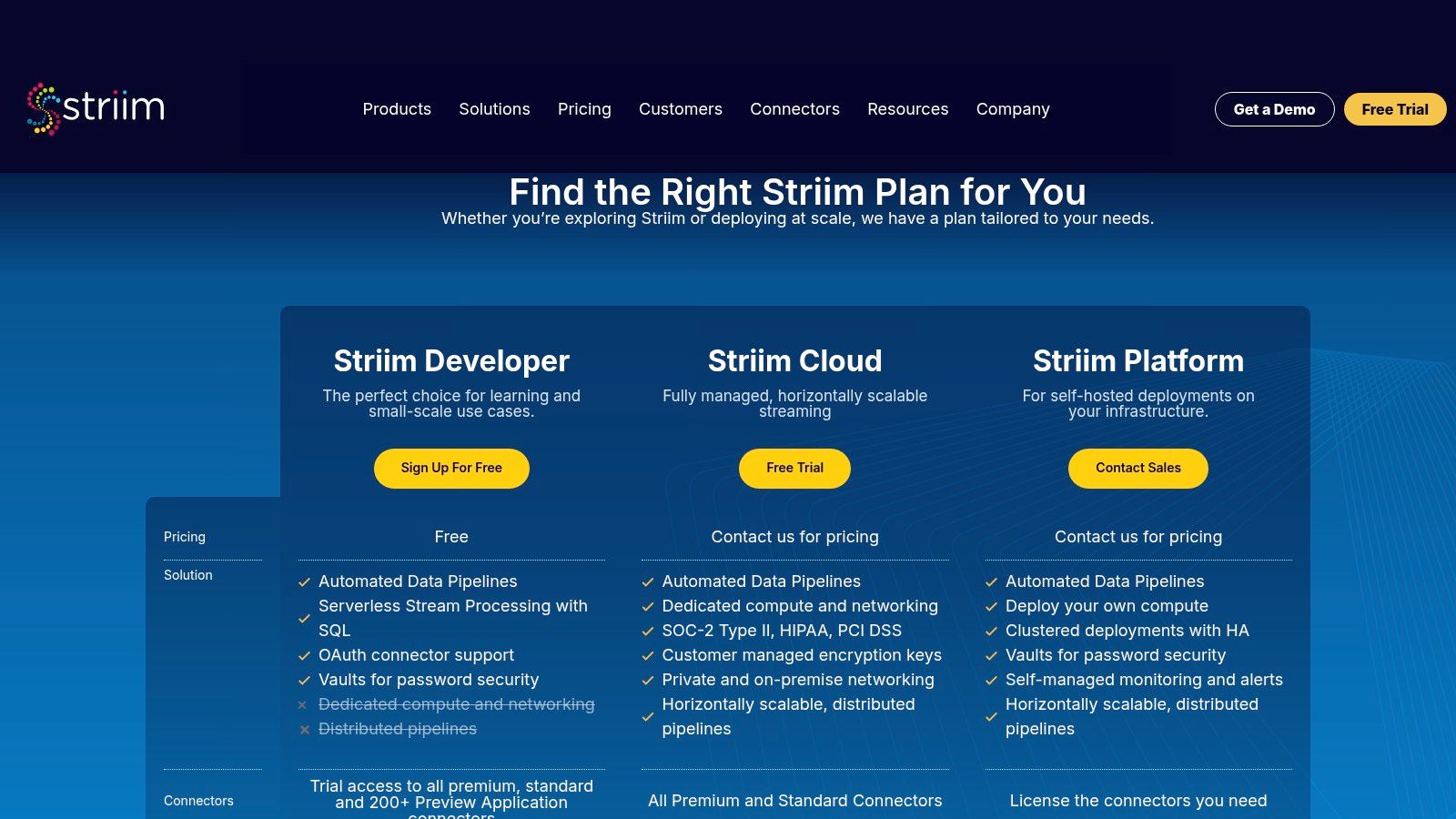

12. Striim

Striim is a real-time streaming data integration and Change Data Capture (CDC) platform designed for modern, high-velocity data architectures. It stands out by unifying data ingestion, in-flight stream processing using SQL, and delivery into a single, cohesive platform. This integrated approach allows users to build sophisticated replication and analytics pipelines that can filter, transform, and enrich data streams before they land in the target system. Striim’s architecture is built for performance and scale, making it a strong contender among database replication tools for complex, real-time scenarios.

The platform is offered as both self-managed software and a fully managed SaaS solution, Striim Cloud, available across AWS, GCP, and Azure. This flexibility caters to different operational preferences, from enterprises needing full control to teams wanting to accelerate deployment. With a comprehensive library of over 100 connectors, Striim supports a wide array of sources and targets, from traditional databases to cloud data warehouses and messaging systems, ensuring broad compatibility within diverse tech stacks.

Key Features & Use Cases

- Streaming SQL: Empowers users to perform real-time data transformations, filtering, and aggregations on data in-motion using a familiar SQL-based language. This is ideal for pre-processing data for real-time analytics dashboards.

- 100+ Connectors: Provides extensive connectivity for heterogeneous replication, supporting sources like Oracle, PostgreSQL, and MySQL and targets like Snowflake, BigQuery, Kafka, and Databricks.

- Managed Cloud Services: Striim Cloud abstracts away infrastructure management, offering a user-friendly, wizard-driven interface for building and monitoring data pipelines with high availability.

- Monitoring and Alerts: Includes built-in dashboards to monitor data pipeline health, latency, and throughput, with configurable alerts to proactively address potential issues.
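The filtering and aggregation that a streaming SQL query expresses can be sketched in plain Python. The example below mimics a continuous query that sums sale amounts per store over fixed one-minute windows. It is not Striim's query language; the `tumbling_totals` function and the event fields (`ts`, `store`, `amount`) are assumptions made for illustration.

```python
from collections import defaultdict


def tumbling_totals(events, window_seconds: int = 60) -> dict:
    """Sum amounts per (window start, store), skipping non-positive amounts."""
    totals = defaultdict(float)
    for event in events:
        if event["amount"] <= 0:  # filter step, like a WHERE clause
            continue
        # Assign the event to its fixed, non-overlapping time window.
        window_start = event["ts"] - event["ts"] % window_seconds
        totals[(window_start, event["store"])] += event["amount"]  # GROUP BY + SUM
    return dict(totals)


stream = [
    {"ts": 5, "store": "nyc", "amount": 10},
    {"ts": 30, "store": "nyc", "amount": 20},
    {"ts": 42, "store": "nyc", "amount": 0},   # filtered out
    {"ts": 65, "store": "nyc", "amount": 5},   # falls into the next window
    {"ts": 70, "store": "sf", "amount": 8},
]
totals = tumbling_totals(stream)
print(totals)
```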

Pros:

- Fast time-to-value with the fully managed cloud option and intuitive UI.

- Flexible, usage-based pricing model that scales with event volume and compute.

- Powerful in-flight stream processing capabilities using SQL.

Cons:

- Costs can escalate quickly with high event volumes and vCPU consumption.

- Complex, advanced pipelines may require specialized expertise for performance tuning.

Website: https://www.striim.com/pricing/

Database Replication Tools Feature Comparison

| Product | Core Features/Technology | User Experience & Quality Metrics | Value Proposition | Target Audience | Price & Licensing |
| --- | --- | --- | --- | --- | --- |
| Streamkap | Sub-second CDC streaming, no-code UI, schema automation | Intuitive interface, low latency, robust monitoring | Up to 4x faster, 3x lower TCO than ETL | Data engineers, CTOs, analysts | Flexible pricing, free trial available |
| Oracle GoldenGate (Oracle) | Log-based CDC, heterogeneous targets, managed OCI service | Mature ecosystem, orchestration UI | Extensive connector support | Enterprise Oracle-centric environments | Complex licensing, quote-based |
| Qlik Replicate (Qlik) | Agentless CDC, web console, broad endpoints | Simple setup, user-friendly UI | Strong heterogeneous database coverage | Enterprises needing broad DB support | Quote-based, no public pricing |
| IBM Data Replication | Q Replication, Kafka integration, mainframe support | Enterprise-grade, operational dashboards | Deep mainframe and Db2 support | Large enterprises with mainframe systems | Pricing via sales |
| AWS Database Migration Service | Serverless CDC, broad DB support, auto scaling | Fully managed, integrates well in AWS ecosystem | No server maintenance, free tier for 12 months | AWS users for migration and replication | Usage-based pricing |
| Google Cloud Datastream (GCP) | Serverless CDC, backfill, GCP integrations | Simple serverless operation, tiered pricing | Optimized for GCP analytics stack | GCP users focusing on real-time analytics | Pay-as-you-go, regional pricing |
| Azure Database Migration Service | Online/offline migrations, Azure integration | Guided migrations, near-zero downtime | Native Azure tool, free standard tier | Azure cloud users migrating databases | Free standard, premium varies |
| Fivetran HVR | Enterprise CDC, air-gapped/on-prem options | Hybrid environment support | Unified pricing with Fivetran connectors | Regulated, hybrid, and on-premises users | Updated pricing model, quote-based |
| Quest SharePlex | Log-based replication, multi-targets | Reliable in large enterprises | Trusted by Fortune 500, strong support | Oracle/PostgreSQL shops, large enterprises | Pricing by quote |
| Debezium (open source) | Row-level CDC, Kafka or standalone | Flexible deployment, community-driven | Free and extensible | Organizations with engineering capacity | Open source, community support |
| SymmetricDS (JumpMind) | Trigger-based bi-directional sync | Web UI (Pro), flexible topology | Cost-effective for distributed/edge | Distributed, multi-site database setups | Community free, commercial Pro paid |
| Striim | CDC & streaming SQL, 100+ connectors | Fast deployment, monitoring dashboards | Scalable pricing, managed cloud option | Enterprises needing streaming data | Usage-based, free developer tier |

Making the Right Choice for Your Data Architecture

Choosing among database replication tools can feel overwhelming, but the right solution depends on your data architecture and business objectives. This guide covered a diverse set of options, from managed cloud services like AWS DMS and Google Cloud Datastream to enterprise platforms such as Oracle GoldenGate and Qlik Replicate. The key takeaway is that there is no single “best” tool, only the tool best suited to your operational reality.

Your decision-making process should be a strategic exercise, not just a technical one. The choice you make will directly impact your organization’s ability to support real-time analytics, maintain high availability, and execute seamless cloud migrations. A misaligned tool can lead to data latency, spiraling costs, and a significant engineering burden, while the right one can unlock transformative business intelligence and operational resilience.

Key Takeaways and Final Considerations

Reflecting on the tools we’ve analyzed, several core themes emerge. The most critical decision point is often the build-versus-buy dilemma. Open-source solutions like Debezium offer flexibility, extensibility, and community support with no license fee, but they demand significant in-house expertise for setup, management, and scaling. In contrast, commercial platforms like Fivetran HVR or Streamkap provide a managed, user-friendly experience that accelerates time-to-value, albeit with associated licensing costs.

When evaluating potential database replication tools, focus on these critical factors:

- Latency and Performance: What are your real-time requirements? Do you need sub-second latency for critical applications, or can you tolerate a few minutes for BI reporting? Tools that use log-based Change Data Capture (CDC) read changes from the database transaction log, so they typically deliver lower latency and place less load on the source than query-based methods that poll tables.

- Source and Target Compatibility: Ensure the tool has robust, well-maintained connectors for your specific source databases (e.g., PostgreSQL, Oracle, SQL Server) and target destinations (e.g., Snowflake, BigQuery, Kafka). Don’t just check a box; investigate the maturity and feature depth of each connector.

- Scalability and Reliability: How will the tool handle spikes in data volume? Does it have built-in fault tolerance and automatic recovery mechanisms? Consider how its architecture will grow alongside your data needs without requiring a complete re-platforming effort.

- Ease of Use and Management Overhead: Who will be managing the pipelines? A tool with a no-code UI and automated schema drift handling, like Striim or Streamkap, empowers a wider range of users and reduces the burden on your data engineering team.

- Total Cost of Ownership (TCO): Look beyond the initial license fee. Factor in the cost of infrastructure, engineering time for maintenance and troubleshooting, and potential data transfer fees associated with cloud-based solutions.
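
The TCO factor in the list above is simple arithmetic, and writing it down makes the comparison between candidates explicit. The following is a minimal sketch, assuming annual figures in a single currency; the `AnnualCosts` class, its field names, and every number are hypothetical placeholders, not vendor quotes.

```python
from dataclasses import dataclass


@dataclass
class AnnualCosts:
    """Hypothetical yearly cost inputs for one candidate tool (same currency throughout)."""
    license_fee: float          # subscription or license cost per year
    infrastructure: float       # servers, brokers, and storage you operate yourself
    engineering_hours: float    # hours per year spent on maintenance and troubleshooting
    hourly_rate: float          # loaded cost of one engineering hour
    data_transfer_gb: float     # GB moved per year across billable boundaries
    transfer_fee_per_gb: float  # cloud transfer fee per GB

    def total(self) -> float:
        """Sum license, infrastructure, engineering time, and data transfer."""
        engineering = self.engineering_hours * self.hourly_rate
        transfer = self.data_transfer_gb * self.transfer_fee_per_gb
        return self.license_fee + self.infrastructure + engineering + transfer


# Placeholder inputs for illustration only.
self_hosted = AnnualCosts(
    license_fee=0, infrastructure=24_000,
    engineering_hours=600, hourly_rate=100,
    data_transfer_gb=50_000, transfer_fee_per_gb=0.02,
)
managed = AnnualCosts(
    license_fee=36_000, infrastructure=0,
    engineering_hours=80, hourly_rate=100,
    data_transfer_gb=50_000, transfer_fee_per_gb=0.02,
)

for name, costs in [("self-hosted", self_hosted), ("managed", managed)]:
    print(f"{name}: {costs.total():,.0f} per year")
```

With these made-up inputs, engineering time dominates the self-hosted total even though the license fee is zero, which is the effect the TCO bullet warns about. Replace the placeholders with your own estimates before drawing any conclusion.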

Your Actionable Next Steps

Armed with this information, your path forward should be clear and methodical. Begin by thoroughly documenting your requirements. Identify your key use cases, whether for disaster recovery, feeding a real-time analytics dashboard, or migrating to a cloud data warehouse.

Next, create a shortlist of two or three tools that align with your core needs. Run a proof-of-concept (POC) with each contender, using a representative dataset and a real-world scenario. This hands-on evaluation is the most effective way to uncover a tool’s true strengths and limitations and to move beyond marketing claims to measured performance. In your POC, pay close attention to the setup experience, the observability features, and how the tool handles edge cases like schema changes or network interruptions. This data-driven approach helps you select a database replication tool that solves today’s challenges and can remain a foundational component of your data stack as it grows.
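
One way to make a POC comparison concrete is to record, for a sample of changes, when each row was committed at the source and when it arrived at the target, then summarize the lag. The sketch below is an illustration under assumptions: it presumes you can capture both timestamps yourself and that the two clocks are synchronized. The function names and sample data are hypothetical and not part of any tool’s API.

```python
from datetime import datetime, timedelta
from statistics import quantiles


def lag_seconds(pairs):
    """Return replication lag in seconds for each (source_commit, target_arrival) pair."""
    return [(arrived - committed).total_seconds() for committed, arrived in pairs]


def summarize(lags):
    """Return the median, 95th percentile, and maximum lag in seconds."""
    cuts = quantiles(lags, n=100, method="inclusive")  # 99 percentile cut points
    return {"p50": cuts[49], "p95": cuts[94], "max": max(lags)}


# Made-up sample: 101 changes whose lag grows from 0.1 s to 10.1 s.
base = datetime(2025, 1, 1, 12, 0, 0)
sample = [(base, base + timedelta(milliseconds=100 * i)) for i in range(1, 102)]

print(summarize(lag_seconds(sample)))
```

Percentiles are used rather than an average because a handful of slow events, for example during a schema change or a network interruption, can hide behind a healthy mean. Running the same measurement against each shortlisted tool gives a like-for-like latency comparison.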

Ready to experience the power of truly real-time, scalable data replication without the complexity? Streamkap offers a serverless platform built on open standards, providing millisecond latency and automated schema evolution for a fraction of the cost. See how our modern approach to database replication tools can transform your data pipelines by starting a free trial of Streamkap today.