<--- Back to all resources

Mastering Change Data Capture MySQL for Real-Time Data

Discover how Change Data Capture MySQL transforms data pipelines. Learn how CDC works, compare methods, and implement best practices for real-time insights.

Change Data Capture in MySQL is a way to track and capture every change made to your data—inserts, updates, and deletes—the moment they happen. Instead of repeatedly querying the entire database to see what’s new, CDC taps into the database’s internal transaction log to stream these changes in real-time, keeping all your downstream systems perfectly in sync.

Understanding Change Data Capture in MySQL

Think of your database like a busy shipping warehouse. The old way of checking inventory involved shutting everything down at night and manually counting every single box on every shelf. It was slow, disruptive, and by the time you finished, the report was already out of date. This is basically how traditional batch processing works—running huge, resource-heavy queries to find what’s changed.

CDC is the modern, smarter way. Instead of a nightly shutdown, you have a live feed from the loading dock. Every time a package comes in or goes out, that event is logged and broadcast instantly. That’s the core of change data capture for MySQL: it gives you a continuous, real-time stream of every single thing that happens to your data.

This isn’t just a small improvement; it’s a fundamental shift from working with periodic snapshots to a live flow of events. You’re no longer dealing with data that’s hours or even days old. You’re making decisions with information that’s current down to the millisecond.

The Core of MySQL CDC: The Binary Log

So, how does MySQL pull this off? The secret lies in a special file called the binary log (or binlog). The best way to think of the binlog is as the database’s indestructible flight recorder. It’s an official, unchangeable transcript of every transaction that has ever occurred.

Before any data modification is even committed, MySQL writes a detailed entry into this log. This record contains everything needed to perfectly reconstruct the event, including:

- The type of change (INSERT, UPDATE, or DELETE).

- The actual data that was modified.

- A precise timestamp of when the change happened.

CDC tools connect directly to this binlog, reading the events as they’re written and forwarding them to other systems. This method is incredibly efficient because it doesn’t put any extra load on your actual database tables. The database is already writing to the binlog for its own internal needs, like replication and recovery. CDC just listens in.

Change Data Capture for MySQL has become a cornerstone for businesses that need real-time data synchronization. It mirrors every insert, update, and delete from a source database to any number of downstream systems with almost no latency.

Having instant access to data changes opens up a world of possibilities for building modern, responsive applications. Industry benchmarks show that CDC can slash data replication times from hours to under five seconds for most transactional systems. That’s a mind-boggling 99%+ reduction in synchronization delay compared to old-school nightly batch jobs.

You can dive deeper by exploring a complete guide to CDC for MySQL.

How the MySQL Binary Log Powers CDC

At the very core of MySQL CDC is the binary log, or binlog. It’s not some optional add-on; it’s the database’s official, unchangeable record of every single data modification. Think of it like an airplane’s black box, diligently logging every INSERT, UPDATE, and DELETE command exactly as it happens and in the correct sequence.

This chronological log is the secret sauce that makes real-time data streaming possible. Instead of constantly hammering your production tables with queries, a CDC tool simply reads this log file. The database is already creating it for its own internal needs like replication and disaster recovery, so tapping into it is incredibly efficient and doesn’t drag down your source system’s performance.

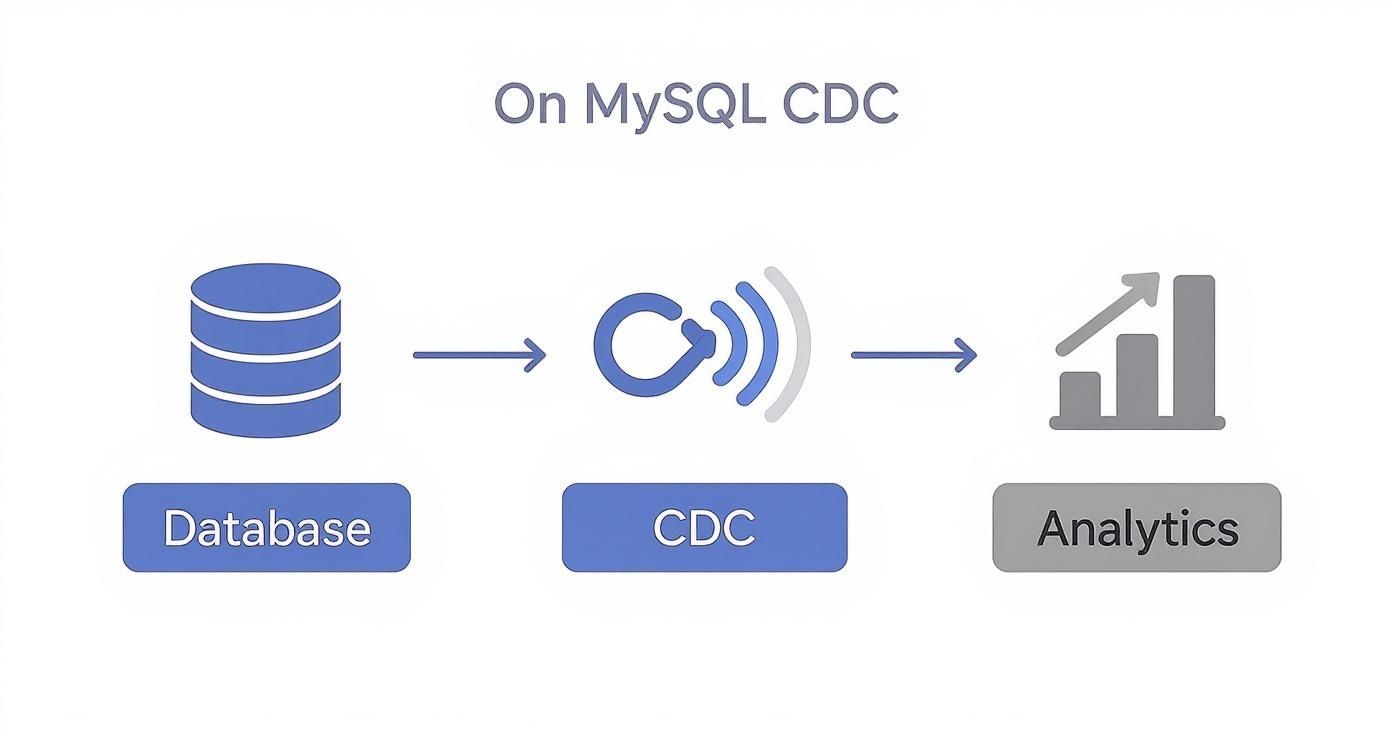

This whole process is about capturing a database event and getting it into a downstream system for analysis, all in near real-time.

As you can see, it’s a direct, three-step journey. Data changes are captured right at the source and streamed out for immediate use, showing just how effective log-based CDC really is.

Choosing the Right Binlog Format

To make change data capture with MySQL work reliably, you have to get the binlog configuration right. MySQL gives you three formats for logging events, but for modern CDC pipelines, only one is the clear winner.

- Statement-Based Replication (SBR): This format logs the exact SQL query that changed the data (e.g.,

UPDATE users SET last_login = NOW() WHERE id = 123). It’s compact, sure, but it’s a landmine for CDC. Why? Because non-deterministic functions likeNOW()orUUID()can generate different results on the source and target, leading to frustrating data inconsistencies. - Mixed-Based Replication (MBR): This is a hybrid where MySQL tries to be smart and decides whether to log the statement or the row change for each event. While it attempts to give you the best of both, its unpredictability makes it a poor choice for CDC. You can’t build a reliable pipeline on guesswork.

- Row-Based Replication (RBR): This is the gold standard for CDC. Instead of the query, RBR logs the actual data changes for each row. It captures the “before” and “after” state of the data, leaving no doubt about what actually changed. This is the only format we recommend for dependable CDC.

With Row-Based Replication, there’s no ambiguity. You get a complete, deterministic picture of every data modification. That’s absolutely critical for guaranteeing data integrity in downstream systems like data warehouses and analytics platforms.

The Lifecycle of a Data Change Event

Let’s walk through what happens to a single change, from the user’s click to the data appearing in your analytics tool. When you see the flow, the whole process clicks into place. It’s remarkably fast.

Here’s the step-by-step breakdown:

- A Transaction Occurs: An application or user runs a command, like an

UPDATEto change a customer’s shipping address. - The Change is Written to the Binlog: Before the transaction is even fully committed, MySQL writes the row-level change event to the binlog file on disk. This makes the record permanent.

- The CDC Connector Reads the Event: Your CDC tool is constantly watching the binlog. It spots the new event and reads it almost instantly.

- The Event is Streamed Downstream: The connector then translates the event into a standard format like JSON or Avro and sends it on its way to a message broker or directly to a destination like Snowflake or BigQuery.

This whole sequence unfolds in milliseconds, which is what enables true real-time data pipelines.

Of course, setting up a stream like this from a managed service can have its own complexities. For anyone looking to get this running smoothly, our guide offers clear steps on how to stream data from AWS RDS MySQL using Streamkap. By using the binlog, you’re plugging into a native, high-performance mechanism that ensures every single change is captured without fail.

Comparing the Top MySQL CDC Approaches

When it comes to change data capture with MySQL, not all methods are created equal. Picking the wrong approach can bog down your database, cause you to lose critical data, and create a tangled mess of maintenance headaches. To get it right, you have to understand the trade-offs between the three main strategies: triggers, queries, and logs.

Each one has its place, but as you’ll see, one method has clearly become the gold standard for any serious, high-performance data architecture. Let’s dig into how they compare on performance impact, data accuracy, and overall complexity.

The Problem with Triggers

On the surface, using database triggers for CDC seems like a no-brainer. Triggers are just stored procedures that fire automatically whenever an INSERT, UPDATE, or DELETE happens on a table. The idea is simple: create a trigger on each table you want to watch, and have it copy the change event into a separate “history” table.

The catch? This approach comes with a massive performance penalty. Triggers run inside the same transaction as the original operation, which means every single write to your database now has to do double the work. This adds a ton of latency and processing overhead directly to your production system.

As your application traffic grows, this extra load can slow your primary database to a crawl. On top of that, trying to manage triggers across hundreds of tables—especially when schemas evolve—is a maintenance nightmare waiting to happen.

The Pitfalls of Query-Based Polling

Another common tactic is query-based CDC, which is really just a fancy term for polling. This method involves repeatedly hitting your source tables with queries to ask, “Hey, what’s changed since I last checked?” You typically have to add a last_updated timestamp or a version number column to your tables to make this work.

The CDC process then runs a query like SELECT * FROM orders WHERE last_updated > 'last_check_timestamp' every few minutes. While it feels less invasive than triggers, it’s riddled with flaws.

- It misses deletes: If a row is deleted, it’s just gone. The query will never see it, and that change is lost forever unless you start building complicated “soft-delete” logic into your application.

- It’s not real-time: Your data is only as fresh as your polling interval. If you check every five minutes, your downstream systems are always working with five-minute-old data. Polling faster just puts more and more strain on the database.

- It creates race conditions: It’s surprisingly tricky to grab every change without either missing some or double-counting others, especially on busy, high-transaction systems.

This approach forces a terrible compromise between data freshness and database performance—a trade-off you really shouldn’t have to make with modern data pipelines.

Why Log-Based CDC Is the Gold Standard

This brings us to log-based CDC, the method that taps into the MySQL binary log (binlog). As we covered earlier, the binlog is the database’s own native, super-efficient transaction journal. Log-based CDC tools simply read this file, which has a near-zero impact on the source database’s performance.

The beauty of log-based CDC is that it’s completely non-invasive. It captures every single change—including deletes—in the correct order and with perfect fidelity, without adding any transactional overhead to your production system.

It elegantly solves all the problems that plague the other methods. Because it’s asynchronous, it doesn’t slow down your application’s write operations. It captures every type of change with 100% accuracy and delivers events in milliseconds, opening the door for true real-time analytics and applications.

The only real challenge used to be the complexity of setting up and managing the connectors that read the binlog. But that’s exactly the problem that modern managed platforms like Streamkap were built to solve, taking all the operational burden off your plate.

A Head-to-Head Comparison

To make the differences perfectly clear, let’s line up the key characteristics of each approach. This table breaks down why log-based CDC has become the go-to choice for almost any scenario involving change data capture in MySQL.

Comparison of MySQL CDC Implementation Methods

This table compares the key characteristics of trigger-based, query-based, and log-based Change Data Capture approaches for MySQL, highlighting performance impact, reliability, and complexity.

MethodPerformance Impact on Source DBData Accuracy & CompletenessImplementation ComplexityBest ForTrigger-BasedHigh. Adds overhead to every transaction, slowing down write performance.Moderate. Can miss changes if triggers fail or are disabled. Does not capture schema changes.High. Requires manual creation and management of triggers for every table. Very brittle.Simple, low-volume auditing tasks.Query-BasedModerate to High. Constant polling adds significant read load on production tables.Low. Easily misses DELETE operations and can lead to data gaps due to polling intervals.Moderate. Requires schema modifications (last_updated columns) and careful query design.Legacy systems where other methods aren’t possible.Log-BasedVery Low. Reads from the binlog, which is an asynchronous and highly efficient process.Very High. Guarantees capture of all changes, including deletes and schema modifications, in order.Low to Moderate. Requires connector setup, but managed platforms automate this process entirely.Any modern, real-time, or high-volume data pipeline.

Ultimately, the choice is clear. For reliable, scalable, and real-time data integration, log-based CDC isn’t just the best option—it’s the only one that truly meets the demands of modern data architectures.

Powerful Use Cases for MySQL CDC

It’s one thing to understand the mechanics of change data capture with MySQL, but it’s another thing entirely to see what it can do in the real world. Now that we’ve covered the technical “how,” let’s get into the compelling “why.” CDC isn’t just an abstract data engineering pattern; it’s a practical solution that opens up a ton of new possibilities for businesses everywhere.

From powering live dashboards to keeping the lights on during a major database migration, MySQL CDC tackles critical challenges that old-school batch processing just can’t touch. These examples show how a real-time data stream goes from being a nice-to-have technical feature to a core part of the business.

Real-Time Analytics and Business Intelligence

Trying to run a modern business on stale data is like driving down a highway using a map that’s updated once a day. You’re making decisions based on what was happening, not what is happening. Opportunities are missed, and you don’t spot problems until hours after they’ve started.

MySQL CDC flips this completely on its head. By streaming every insert, update, and delete straight into a cloud data warehouse like Snowflake, BigQuery, or Databricks, you can build analytics dashboards that are genuinely live.

- Retail and E-commerce: You can watch inventory levels, spot sales trends, and flag fraudulent transactions the second they occur.

- Finance: Risk models and trading dashboards get updated with live market data, leading to much faster and sharper decisions.

- Logistics: Supply chains become more responsive when you can track shipments and inventory movements in real time, reacting instantly to any delays.

This isn’t just a minor speed bump. Businesses that switch to CDC see huge improvements in how quickly they can get insights. In fact, a study of 1,000 global companies found that CDC cut the median time-to-insight from operational data by a massive 87%—dropping from 15 hours down to under 2 hours. This kind of speed is a game-changer for staying competitive. You can read the full research about these CDC findings to learn more.

Zero-Downtime Database Migrations and Upgrades

Ask any data engineer about their least favorite job, and “migrating a production database” will probably be high on the list. It usually means scheduling downtime, a ton of careful planning, and hoping for the best. With CDC, there’s a much safer and saner way to do it.

Instead of a risky, all-at-once migration, you can use CDC to set up a continuous replication stream from the old MySQL database to the new one. The two systems run in parallel, and the CDC pipeline makes sure the new database is a perfect, up-to-the-second mirror of the old one. Once you’re confident it’s all working smoothly, you can flip the switch with absolutely no downtime.

This approach basically de-risks the entire migration. It gives you a chance to thoroughly test and validate the new system using live production data before you make the final cutover. The result is a seamless transition for your apps and your users.

Building Responsive Event-Driven Microservices

Modern applications are increasingly built with microservices—small, independent services that are much more flexible than old monolithic designs. In an event-driven setup, these services communicate by reacting to events instead of calling each other directly. MySQL CDC fits this model perfectly.

When something changes in your database—a new user signs up, an order is placed—CDC captures that change as an event. It then publishes that event to a message broker like Apache Kafka. From there, other microservices can listen for the events they care about and react.

Here’s how it could work for an e-commerce order:

- A new row appears in the

orderstable and is captured by CDC. - The “order placed” event gets published to a Kafka topic.

- The Inventory Service sees the event and updates the stock count.

- The Shipping Service sees it and starts the fulfillment process.

- The Notification Service sees it and sends the customer a confirmation email.

Each service does its job independently, without being tightly coupled to the main database or the other services. This creates a far more resilient, scalable, and maintainable system. It’s why change data capture in MySQL is a foundational piece for building modern, reactive applications.

Best Practices for a Resilient CDC Pipeline

Getting a MySQL change data capture pipeline up and running isn’t just about flipping a switch. To build something that’s reliable and can handle the twists and turns of real-world operations, you need a solid plan. A resilient pipeline is one that’s built from the ground up to anticipate problems, ensuring it’s not just a cool experiment but a core part of your infrastructure.

Think of these best practices as your pre-flight checklist. Following them will help you sidestep the common traps and ensure your data stream stays accurate and healthy, even as your business scales. This is how you move from a prototype to a mission-critical system your team can truly count on for real-time data.

Configure Your MySQL Source Correctly

Everything flows from the source. The stability of your entire CDC pipeline hinges on how your MySQL database is configured. If you get these initial settings wrong, you’re setting yourself up for headaches like data loss or mysterious pipeline failures down the road.

The most critical piece of the puzzle is the binary log.

- Enable the Binlog: First things first, make sure the

log_binparameter is active in your MySQL configuration. This is the master switch that starts recording all data changes. - Set Binlog Format to ROW: As we’ve covered, Row-Based Replication (RBR) is the only way to go for serious CDC. It logs the exact before-and-after picture of every modified row, which is far more reliable than just logging the SQL statement that made the change.

- Assign a Unique Server ID: Every MySQL server involved in replication needs a unique

server_id. This is non-negotiable. It prevents logging conflicts and allows CDC connectors to know exactly which database they’re tracking.

The binlog is the bedrock of your entire CDC setup. By setting the format to ROW, you guarantee that you’re capturing every single modification with perfect fidelity. This is absolutely essential for maintaining data integrity in your downstream systems, whether it’s a data warehouse or a real-time analytics dashboard.

Plan for Schema Evolution Gracefully

Let’s be realistic—databases change. Your application will evolve, and with it, your database schema. Columns get added, data types are tweaked, and tables get renamed. A poorly designed CDC pipeline will shatter the moment an ALTER TABLE command runs, causing downtime and a scramble to fix things.

A resilient pipeline, on the other hand, takes these changes in stride.

Modern CDC tools are smart enough to spot schema changes right in the binlog. They can automatically apply those same changes to your target destination, keeping source and target schemas perfectly in sync without you lifting a finger. This gets even more critical in complex environments like multi-tenant platforms. We dive deeper into this in our guide on MySQL CDC for multi-tenant SaaS platforms.

The need for engineers who understand these systems is exploding. The market for MySQL training services shot up from $664.77 million in 2021 and is expected to hit $900.4 million by 2025. This surge is fueled by companies moving to real-time data, with over 80% of new Fortune 500 data projects now requiring CDC. You can read more about this trend in enterprise data on cognitivemarketresearch.com.

A Simpler Way: MySQL CDC with Modern Data Platforms

Sure, you could build your own change data capture MySQL pipeline from scratch using open-source tools. Many teams have tried. But this path is often paved with unexpected operational headaches. It demands a dedicated team to wrangle connectors, scale infrastructure, and put out fires when the inevitable complexities of real-time streaming pop up.

Suddenly, you’re dealing with manual configurations, constant monitoring, and late-night alerts when a pipeline breaks.

Modern data platforms offer a much smoother ride. They are built specifically to handle the messy, low-level engineering work, freeing you up to focus on what you’ll do with the data, not just how to move it. These managed solutions take care of the entire CDC pipeline, from the first connection to long-term maintenance.

Why a Managed CDC Platform Makes Sense

Think of it like this: you could build your own car to get to work, but it’s much easier to use a ride-sharing service. Instead of sourcing parts, assembling an engine, and learning to be a mechanic, you just get in and go. That’s exactly what a platform like Streamkap does for your data.

The advantages become clear almost immediately:

- Go from Weeks to Minutes: An engineering project that might take weeks to build, test, and stabilize can be set up in just a few minutes. Connecting a MySQL source to a warehouse like Snowflake or a stream like Kafka becomes a simple, UI-driven task.

- Set It and Forget It Operations: Stop worrying about managing Kafka clusters, watching for replication lag, or patching connector updates. The platform handles all the infrastructure under the hood, scaling up or down as your data volume changes.

- Effortless Schema Evolution: What happens when a developer adds a new column to a source table? A DIY pipeline often breaks. A managed platform, however, sees the schema change in the binlog and automatically applies it to the destination. Your data keeps flowing without interruption.

The core idea is simple: managed CDC platforms turn a thorny data engineering project into a reliable, automated utility. This lets your engineers get back to building features that drive the business, rather than being stuck on pipeline plumbing.

This approach gives you enterprise-grade reliability without the sleepless nights. For example, you can see how a MySQL source connector works in a managed environment to understand just how much of the process is handled for you.

By offloading the operational complexity, you get a faster, more resilient, and ultimately more cost-effective way to get your real-time data pipelines up and running.

Your Top MySQL CDC Questions, Answered

When you start digging into change data capture with MySQL, a few practical questions always pop up. It’s one thing to understand the theory, but what happens when you actually flip the switch on a production system? Let’s walk through the common concerns we hear from engineering teams to clear up any confusion.

We’ll cover the real-world impact on performance, how to deal with changing schemas, and the best way to set up your architecture.

What’s the Real Performance Hit from Using the Binlog?

This is usually the first question on everyone’s mind. Will enabling the binary log slow our production database to a crawl?

The short answer is almost always no. The impact is tiny. On any reasonably modern hardware, you’re looking at an overhead of well under 5%. MySQL is already built to do this efficiently because its own replication and recovery features depend on the very same binary log. It’s a core, highly-optimized function.

When you stack that against the alternatives, log-based CDC looks even better. A trigger-based system, for instance, adds synchronous overhead to every single transaction. Under heavy load, that can seriously tank your write performance. Log-based CDC, on the other hand, is asynchronous. It works off to the side, making it the clear winner for efficiency.

How Does CDC Handle Database Schema Changes?

Your database schema isn’t set in stone. It has to evolve as your application adds new features. A huge source of anxiety is worrying that a simple ALTER TABLE command will shatter your entire data pipeline.

A solid CDC pipeline shouldn’t just survive schema changes—it should handle them gracefully. Modern CDC tools are smart enough to spot schema evolution in the binlog and automatically pass those changes downstream.

Think about what happens when you add a new column. A well-built CDC connector will:

- See the

ALTER TABLEevent in the log. - Understand what the change is.

- Automatically apply that same change to the target table in your data warehouse, like adding the new column in Snowflake.

This kind of automation is what keeps your data flowing without interruption. It saves you from the pipeline failures and late-night manual fixes that plague more fragile setups.

Can I Run CDC Off a MySQL Read Replica?

Yes, and you probably should. Pointing your CDC process at a read replica instead of your primary database is a fantastic strategy for isolating workloads.

This setup gives you two huge advantages:

- Zero Impact on the Primary: All the work of reading the binlog and streaming out changes happens on a secondary server. This leaves your primary database with all its resources dedicated to handling application traffic.

- Better Resilience: It creates a safe separation. If anything goes wrong with the CDC connector, your main database won’t even notice.

The only catch is making sure the replica is configured properly. It needs its own unique server_id and, of course, must have the binlog enabled to ensure everything stays in sync.

Ready to build a rock-solid MySQL CDC pipeline without the operational headaches? Streamkap provides a fully managed platform that handles everything from connector deployment to schema evolution, so you can get real-time data flowing in minutes. Find out more and try it for free.