A Practical Guide to S3 Real-Time Data Pipelines

September 16, 2025

Switching to S3 real-time data ingestion is about more than just speeding things up; it's a fundamental shift away from outdated nightly batch jobs. You're moving towards a dynamic, responsive data lake on Amazon S3 where insights pop up in seconds, not hours. This isn't a minor tweak—it's essential for any modern business that relies on having the freshest data at its fingertips.

Why S3 Real-Time Data Is Now a Necessity

Let's be honest, yesterday's data just doesn't cut it anymore. The demand for instant answers has completely reshaped data architecture. Businesses are now competing based on how quickly they can react to new information.

Think about it: preventing fraud as it happens, delivering perfectly timed personalized user experiences, or feeding the latest data into hungry machine learning models—none of this is possible with stale data. This is exactly why building an S3 real-time pipeline has become a strategic imperative, not just a nice-to-have.

The whole idea is to shrink the time it takes for an event—like a customer purchase in your transactional database or a new reading from an IoT sensor—to become available for analysis in your data lake. Traditional ETL processes are notorious for their delays, often pushing data on a 24-hour cycle. By the time it arrives, it's already history. For a solid primer on the concepts, this guide on real-time data processing is a great resource.

The Architectural Shift to Streaming

Moving from delayed batch processing to immediate streaming is a major architectural pivot. Instead of pulling huge, infrequent chunks of data, a real-time system uses Change Data Capture (CDC) to grab every single change the moment it occurs. This is where tools like Streamkap come in, acting as a bridge to stream those changes directly from sources like PostgreSQL or MySQL straight into S3.

This isn't just a minor adjustment; it's a complete change in how you handle data. To really get a feel for the differences, we've put together a guide comparing batch processing and real-time stream processing (https://streamkap.com/blog/batch-processing-vs-real-time-stream-processing) that breaks down the pros and cons of each approach.

To get a better sense of how this works in practice, here's a quick comparison.

Batch Processing vs Real-Time Ingestion

As you can see, the choice isn't just technical—it directly impacts how agile and responsive your business can be. Real-time ingestion unlocks a whole new class of applications that simply aren't feasible with batch methods.

The image below really drives home how central Amazon S3 has become in modern data stacks, serving as the foundation for everything from analytics to AI.

This visual shows just how well S3 plays with a massive ecosystem of services, making it the perfect landing spot for real-time data that needs to be ready for any number of analytical tools.

The sheer scale of S3 is hard to wrap your head around. It stores over 400 trillion objects and fields around 150 million requests per second. That's not just big; it's a testament to its power as a real-time data hub. Plus, with recent additions like S3 Tables with native Apache Iceberg support, query speeds for analytics can get a boost of up to 3x.

By embracing an S3 real-time model, you're not just getting your data faster. You're fundamentally building a smarter, more competitive organization.

Preparing Your Sources and Destinations

Any successful S3 real-time pipeline is built on a solid foundation. Before you even think about connecting components, spending a little time upfront to properly prepare your source database and S3 destination will save you from a world of configuration headaches later on. Think of it as laying the groundwork before building the house—it's the most critical part of the entire setup.

First things first, let's lock down the connection to your Amazon S3 bucket. This means creating a dedicated AWS Identity and Access Management (IAM) role or user specifically for your data ingestion tool, like Streamkap. I've seen it happen too many times: people get tempted to use a root account or an overly permissive user for a quick setup. Don't do it. This is where the Principle of Least Privilege is your best friend.

This security best practice means that any user or service should have only the bare minimum permissions needed to do its job. For writing data to S3, this usually involves specific actions like s3:PutObject, s3:GetObject, and s3:ListBucket, but only for the target bucket. Granting a blanket permission like s3:* is an unnecessary security risk.
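
To make the least-privilege idea concrete, here is a minimal sketch that builds such a policy document in Python. The function name, the Sid labels, and the bucket name your-bucket are placeholders for illustration. Note that s3:ListBucket is granted on the bucket itself, while the object actions are granted on the keys inside it.

```python
import json


def least_privilege_s3_policy(bucket: str, prefix: str = "") -> dict:
    """Build an IAM policy document limited to one bucket (and optional prefix)."""
    bucket_arn = f"arn:aws:s3:::{bucket}"
    # Object-level actions apply to object ARNs (bucket/key), not the bucket itself.
    object_arn = f"{bucket_arn}/{prefix}*"
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                "Sid": "ListTargetBucket",
                "Effect": "Allow",
                "Action": ["s3:ListBucket"],
                "Resource": [bucket_arn],
            },
            {
                "Sid": "ReadWriteObjects",
                "Effect": "Allow",
                "Action": ["s3:PutObject", "s3:GetObject"],
                "Resource": [object_arn],
            },
        ],
    }


# "your-bucket" is a placeholder name.
policy = least_privilege_s3_policy("your-bucket")
print(json.dumps(policy, indent=2))
```

The printed JSON can be pasted into the IAM console as a starting point. Your ingestion tool's documentation may list further actions it needs, so treat this as a baseline rather than a complete policy.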

Configuring Your Source Database for CDC

Once your S3 destination is secure, the spotlight moves to your source database. Real-time data pipelines rely on a technology called Change Data Capture (CDC), which works by reading the database's own transaction logs. For this to work, you have to explicitly enable it, and the process varies a bit depending on which database you're running.

Here’s a quick rundown for some of the most common sources I see:

- For PostgreSQL: You'll need to turn on logical replication. This is typically done by setting wal_level = logical in your postgresql.conf file. If you're on AWS RDS, this is handled through a parameter group. For a complete guide, check out our walkthrough on how to stream data from AWS RDS PostgreSQL to S3.

- For MySQL: The equivalent here is enabling the binary log (binlog). You’ll want to make sure your configuration file has binlog_format = ROW and binlog_row_image = FULL.

- For MongoDB: CDC is accomplished by tapping into the replica set's operation log (oplog). The database user you create for Streamkap will need specific permissions to read from it.
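
As a rough pre-flight check, the PostgreSQL and MySQL settings from the list above can be compared against what your server actually reports. This sketch assumes you have already collected the current values into a dictionary, for example by running SHOW wal_level; on PostgreSQL or SHOW VARIABLES LIKE 'binlog%'; on MySQL. The helper name and the dictionary shape are hypothetical.

```python
# Settings named in the list above; MongoDB is left out because it relies on
# the replica set's oplog rather than a single configuration value.
REQUIRED_SETTINGS = {
    "postgresql": {"wal_level": "logical"},
    "mysql": {"binlog_format": "ROW", "binlog_row_image": "FULL"},
}


def missing_cdc_settings(engine: str, current: dict) -> dict:
    """Return {setting: (expected, actual)} for every setting that is not CDC-ready."""
    problems = {}
    for name, expected in REQUIRED_SETTINGS[engine].items():
        actual = current.get(name)
        # Compare case-insensitively: servers may report 'row' or 'ROW'.
        if actual is None or str(actual).lower() != expected.lower():
            problems[name] = (expected, actual)
    return problems


print(missing_cdc_settings("postgresql", {"wal_level": "replica"}))
print(missing_cdc_settings("mysql", {"binlog_format": "ROW", "binlog_row_image": "FULL"}))
```

An empty result means the settings you checked match what CDC needs. Anything else tells you which value to change before you connect the pipeline.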

Pro Tip: When you're enabling CDC, keep in mind that some configuration changes might require a database restart. Always plan for this and schedule it during a low-traffic maintenance window to avoid any disruption to your live applications. It's a simple step that ensures a smooth and painless transition.

Lastly, you’ll need to create a dedicated database user with just enough privileges to read the replication logs. Just like with the IAM user, apply the Principle of Least Privilege. This user needs read access to the transaction logs and the specific tables you want to stream, but it definitely shouldn't have any write permissions. Getting these source and destination settings right from the start is the key to building a robust and secure S3 real-time pipeline.

With the groundwork laid, it's time to get your hands dirty and build the actual data pipeline. This is where we'll configure the source and destination, effectively telling Streamkap where to get the data and where to put it. We'll walk through the process step-by-step, highlighting the key decisions you'll need to make along the way.

Building your first real-time connector requires a solid grasp of how different systems talk to each other. If you're new to this, this guide on software integration offers a great primer.

Configuring the Source Connector

First up is the source. This is the starting point of your pipeline, where we connect to your database and start listening for changes. The first thing you'll do is plug in the credentials for that dedicated, read-only user you created earlier. Using a separate user is a non-negotiable best practice for security and isolating the pipeline from your production workload.

Next, you get to be selective. You'll specify exactly which schemas and tables Streamkap should watch. You rarely need to stream an entire database. For instance, you might only care about the orders and customers tables from your production schema. By ignoring temporary tables or logs, you keep the pipeline lean and your S3 costs in check.
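
The selection logic is easy to picture as an include list. The sketch below is only an illustration of the idea and is not Streamkap's actual configuration syntax. The table names and the select_tables helper are made up for the example.

```python
from fnmatch import fnmatch


def select_tables(available, include_patterns):
    """Keep only 'schema.table' names matching at least one include pattern."""
    return [t for t in available if any(fnmatch(t, p) for p in include_patterns)]


# Hypothetical tables discovered in the source database.
available = [
    "production.orders",
    "production.customers",
    "production.tmp_import",
    "audit.event_log",
]

selected = select_tables(available, ["production.orders", "production.customers"])
print(selected)
```

Listing tables explicitly, rather than using a broad wildcard such as production.*, keeps temporary tables out of the stream by default.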

A pivotal choice you'll make right away is the snapshot option. This setting determines how the connector handles all the data that already exists in your tables when you first turn it on.

- Initial Snapshot: This is the default and most common choice. It takes a complete, one-time copy of your selected tables and then smoothly transitions to streaming only the new changes (inserts, updates, deletes) as they happen. This is what you want for creating a full historical mirror of your data in S3.

- No Snapshot: If you only need to capture changes from this moment forward and don't care about the historical data, this is the way to go.

- Custom Snapshot: More advanced scenarios might require this, allowing you to snapshot a specific subset of data based on a query.

Getting the snapshot method right is a big deal. An initial snapshot gives you a complete historical record, but be mindful—it can put a heavy load on your database if you have massive tables. I always recommend scheduling that initial sync during a low-traffic window.

Configuring the S3 Destination

Once the source is dialed in, you'll set up the S3 destination. This is all about telling Streamkap how and where to write the data it receives. You'll start by entering your target S3 bucket name and the AWS region it lives in.

Here’s where you make a decision that will have a huge impact on performance and cost down the line: the output format.

While it's tempting to use simple JSON, you'll thank yourself later for choosing a columnar format like Apache Parquet, whether you write plain Parquet files or manage them through a table format such as Apache Iceberg. Columnar formats are built for analytics. They compress data far more efficiently and let query engines like Amazon Athena scan only the columns a query needs. For queries that touch only a few columns of a wide table, this simple choice can slash your query costs by 90% or more.

Finally, you'll define how the data is organized in your S3 bucket, known as a partitioning strategy. Partitioning creates a logical folder structure that makes querying incredibly fast. A classic and highly effective strategy is to partition by date, which looks something like this:

s3://your-bucket/table_name/year=2024/month=11/day=06/

This structure lets Athena completely ignore folders that aren't relevant to a query's date range, drastically cutting down on scan time and cost. For a complete rundown of all the available settings, you can explore the options for an S3 connector on our platform. Nailing these details from the start is the key to building a truly robust pipeline.
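
If you ever need to compute these prefixes yourself, say to check where a given day's files should land, a small helper is enough. This is a minimal sketch: the function name is hypothetical, and your connector may lay out paths differently depending on its settings.

```python
from datetime import date


def partition_prefix(bucket: str, table: str, day: date) -> str:
    """Build a Hive-style, date-partitioned S3 prefix for one table."""
    return (
        f"s3://{bucket}/{table}/"
        f"year={day.year:04d}/month={day.month:02d}/day={day.day:02d}/"
    )


print(partition_prefix("your-bucket", "table_name", date(2024, 11, 6)))
# s3://your-bucket/table_name/year=2024/month=11/day=06/
```

Zero-padding the month and day keeps the prefixes sorted chronologically when you list them.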

Time to Deploy and Validate Your Pipeline

You’ve done the hard work of configuring your source and destination. Now for the satisfying part: going live.

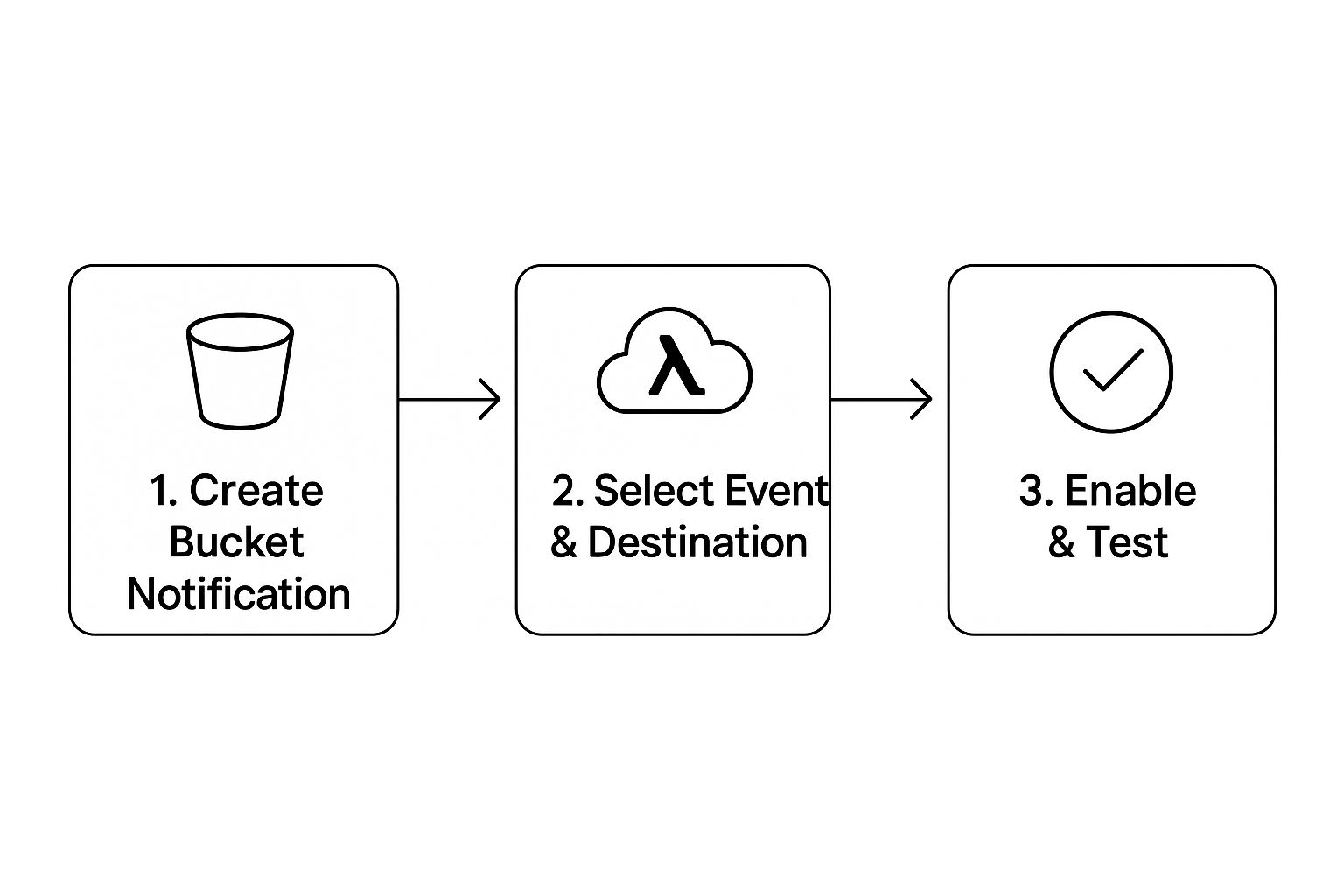

In most modern CDC platforms, all it takes is a click of a "deploy" or "start" button. That simple action breathes life into your configuration, turning your blueprint into a functioning data stream. Almost instantly, change events will begin flowing from your source database straight into your S3 real-time environment.

Keep an Eye on Pipeline Health

Once you hit deploy, your first port of call should be the monitoring dashboard. Think of this as your pipeline's command center, where you can see everything happening under the hood. You'll want to watch a few key metrics right away:

- Latency: How long does it take for a change in your database to show up in S3? For a healthy pipeline, you should see this number stay in the low seconds.

- Throughput: This tells you how much data is moving, usually measured in records or megabytes per second. It’s a great way to gauge the load on your system.

- Connector Health: This is your quick at-a-glance status check. Green is good—it means all the components are communicating and running smoothly.

Watching these vital signs is the quickest way to get immediate feedback and confidence that data is actually moving.

Confirming Data Has Landed in S3

Seeing healthy metrics is one thing, but seeing the actual files is the real proof. Let's head over to the AWS Management Console and pop into the S3 service.

Navigate to the bucket you set up as your destination. You should find a folder structure that mirrors the partitioning scheme you chose during setup. For instance, if you partitioned by date, you'll see folders organized by year, month, and day. Click into the most recent folder, and you should see new data files—most likely in Parquet format—popping up. That's your confirmation that the S3 real-time pipeline is officially working.
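
If you would rather script this check than click through the console, something like the following sketch works. It assumes you pass in an S3 client such as the one returned by boto3.client("s3"). The function name is hypothetical, and the bucket and prefix in the usage comment are placeholders.

```python
def newest_objects(s3_client, bucket: str, prefix: str, limit: int = 5):
    """Return the most recently modified (key, size, last_modified) under a prefix."""
    found = []
    kwargs = {"Bucket": bucket, "Prefix": prefix}
    while True:
        page = s3_client.list_objects_v2(**kwargs)
        for obj in page.get("Contents", []):
            found.append((obj["Key"], obj["Size"], obj["LastModified"]))
        if not page.get("IsTruncated"):
            break
        # More results are available: ask for the next page.
        kwargs["ContinuationToken"] = page["NextContinuationToken"]
    found.sort(key=lambda item: item[2], reverse=True)
    return found[:limit]


# Usage, assuming boto3 is installed and AWS credentials are configured:
#   import boto3
#   for key, size, modified in newest_objects(
#           boto3.client("s3"), "your-bucket", "table_name/year=2024/month=11/day=06/"):
#       print(modified, size, key)
```

Run it a couple of times a minute or so apart. If the newest timestamps keep advancing, files are still landing.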

Here’s a pro tip: AWS recently made this validation process much easier with improvements to Amazon S3 Metadata. Instead of waiting on slow API calls to list objects, you can now use live inventory tables to query object metadata with SQL. It’s a game-changer for getting near real-time visibility as new files land. You can get more details about these advanced S3 features on the AWS blog.

Running a Quick Data Integrity Check

The files are there. Great. But is the data inside correct?

The easiest way to check is with Amazon Athena. Just open the Athena query editor in the AWS console, point it to the database and table associated with your S3 data, and run a quick check.

I always start with something simple like SELECT * FROM your_table LIMIT 10; or a SELECT COUNT(*) FROM your_table;. These commands are perfect for a quick sanity check. They confirm that Athena can not only read the Parquet files but also correctly interpret the schema.

If that query runs without a hitch and you see data, you’ve done it. You have officially validated your entire pipeline, from the source database all the way to a queryable object in S3.

Getting the Most Out of Your Pipeline: Cost and Performance Tweaks

Getting a real-time data pipeline running into S3 is a great first step. But the real goal is to make it efficient—both in terms of query speed and your monthly AWS bill. Just dumping data into an S3 bucket isn't enough; you need to be strategic about how it's stored. This all comes down to a few critical choices you make around file formats, data partitioning, and how you batch your data.

Let’s tackle the biggest one first: your file format. While it's tempting to use something human-readable like JSON, it's an absolute performance killer for analytics at scale. For any S3 real-time destination, your go-to should be a columnar format like Apache Parquet.

The performance difference is staggering. When a query engine like Amazon Athena runs a query, it can be incredibly selective with Parquet. It only scans the specific columns it needs to answer your question. With a row-based format like JSON, it has to read every single byte of every file, even if you only needed data from one column out of a hundred. This single change can easily slash your data scan volume—and your query costs—by 90% or more.
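
A back-of-the-envelope calculation shows where a figure like that comes from. This sketch makes two loud simplifications: every column is assumed to take up the same amount of space, and compression is ignored. The price per terabyte scanned is an illustrative assumption, so check current Athena pricing for your region.

```python
def scanned_bytes(total_bytes: float, columns_total: int, columns_read: int,
                  columnar: bool) -> float:
    """Estimate bytes scanned, assuming all columns are the same size."""
    if not columnar:
        return total_bytes  # row-based files are read in full
    return total_bytes * columns_read / columns_total


TB = 10**12
PRICE_PER_TB_USD = 5.0  # assumed illustrative price per TB scanned

# A 1 TB table with 100 columns, and a query that needs 5 of them.
row_scan = scanned_bytes(1 * TB, 100, 5, columnar=False)
col_scan = scanned_bytes(1 * TB, 100, 5, columnar=True)
reduction = 1 - col_scan / row_scan

print(f"row-based: ${row_scan / TB * PRICE_PER_TB_USD:.2f}")
print(f"columnar:  ${col_scan / TB * PRICE_PER_TB_USD:.2f}")
print(f"reduction: {reduction:.0%}")
```

Under these assumptions the scan volume drops by 95%. Real savings depend on how wide your tables are and how many columns your queries actually touch.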

Smart Data Partitioning Strategies

Next up is how you organize the files inside your S3 bucket. Think of it like a filing cabinet. A single drawer stuffed with files is a mess. A well-partitioned S3 structure, on the other hand, is like a perfectly organized cabinet with labeled folders, making it fast to find exactly what you need.

By far, the most common and effective strategy is partitioning by date. Your S3 paths would look something like this:

s3://your-bucket/table-name/year=2024/month=11/day=28/

When you run a query that filters on a specific date range, Athena is smart enough to use this folder structure to ignore everything else. It doesn't even bother looking inside the folders for other months or years. As your dataset balloons into terabytes or even petabytes, this becomes absolutely essential for keeping query times low and costs in check.
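
To see why this matters, it helps to enumerate which folders a date-filtered query actually has to touch. The sketch below only illustrates the idea of partition pruning and is not how Athena implements it internally. The function name and the bucket path are placeholders.

```python
from datetime import date, timedelta


def prefixes_for_range(table_root: str, start: date, end: date):
    """List the day-level prefixes a query over [start, end] has to read."""
    days = (end - start).days
    return [
        f"{table_root}year={d.year:04d}/month={d.month:02d}/day={d.day:02d}/"
        for d in (start + timedelta(days=i) for i in range(days + 1))
    ]


root = "s3://your-bucket/table-name/"
needed = prefixes_for_range(root, date(2024, 11, 27), date(2024, 11, 28))
print(needed)
```

A two-day filter maps to two prefixes, no matter how many years of history sit next to them in the bucket.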

Expert Tip: Be careful not to over-partition. Partitioning on a field with high cardinality, like user_id, is a classic mistake. You'll create millions of tiny files, which introduces a ton of overhead and can actually make your queries slower. Stick to fields with low cardinality: date, region, or product category are usually safe bets.
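
Some quick arithmetic makes the over-partitioning problem visible. The volumes below are invented for illustration, and the sketch assumes data is spread evenly across partition values, which real data rarely is.

```python
def avg_mb_per_partition(daily_gb: float, distinct_values: int) -> float:
    """Average data per partition per day, assuming an even spread."""
    return daily_gb * 1024 / distinct_values


# Hypothetical table receiving 50 GB per day.
print(avg_mb_per_partition(50, 1))          # partition by date only
print(avg_mb_per_partition(50, 1_000_000))  # also partition by user_id
```

With one partition per day there is plenty of data to write well-sized files. Splitting the same day across a million user IDs leaves roughly 50 KB per partition, which is exactly the tiny-file situation to avoid.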

Finding the Sweet Spot with Batching

"Real-time" doesn't have to mean writing every single event to S3 the microsecond it occurs. Every write to S3 is an API call—a PUT request—and you get charged for each one. If you're writing millions of tiny, individual records every minute, those costs will pile up surprisingly quickly.

This is where batching is your friend. A tool like Streamkap gives you fine-grained control over this. You can configure it to bundle records and write them out as a single file to S3 every 60 seconds, or maybe once the batch size hits 128 MB. This strikes a perfect balance, giving you near real-time data availability while drastically cutting down on API requests and keeping your costs under control.
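
The underlying pattern is simple enough to sketch. The class below is a generic illustration of size-or-time batching and not Streamkap's implementation. The class name, its parameters, and the flush callback are all assumptions made for the example.

```python
import time


class Batcher:
    """Buffer records and flush when the batch is big enough or old enough."""

    def __init__(self, flush, max_bytes=128 * 1024 * 1024, max_age_seconds=60.0,
                 clock=time.monotonic):
        self._flush = flush            # called with the list of buffered records
        self._max_bytes = max_bytes
        self._max_age = max_age_seconds
        self._clock = clock
        self._records = []
        self._size = 0
        self._started = None

    def add(self, record: bytes) -> None:
        if self._started is None:
            self._started = self._clock()  # age is measured from the first record
        self._records.append(record)
        self._size += len(record)
        self.maybe_flush()

    def maybe_flush(self) -> bool:
        """Flush if either threshold is reached; return True if a flush happened."""
        if not self._records:
            return False
        too_big = self._size >= self._max_bytes
        too_old = self._clock() - self._started >= self._max_age
        if too_big or too_old:
            self._flush(self._records)
            self._records, self._size, self._started = [], 0, None
            return True
        return False


# Tiny thresholds so the behavior is visible; each flush stands in for one S3 PUT.
written = []
batcher = Batcher(written.append, max_bytes=10, max_age_seconds=60.0)
batcher.add(b"12345")  # 5 bytes: stays buffered
batcher.add(b"67890")  # 10 bytes: size threshold reached, one batch written
print(len(written), written[0])
```

In a real pipeline something also has to call maybe_flush on a timer, so that a quiet table still gets its data written once the age limit passes.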

Finally, remember that cost management is an ongoing process. Amazon S3 has some fantastic built-in tools for this. S3 Storage Lens, for instance, gives you a deep, organization-wide view of your storage usage and activity trends. It helps you spot inefficiencies and provides actionable recommendations. You can learn more about S3's storage analytics tools to see how they can help you continuously fine-tune your setup.

Got Questions About S3 Real-Time Ingestion?

Whenever you're architecting a new data pipeline, especially a real-time one for S3, a handful of questions always come up. Let's walk through the most common ones I hear from engineering teams and get you the answers you need.

How Do You Handle Schema Changes from the Source?

This is probably the most critical question. What happens when a developer on another team decides to add a new column to a table you’re streaming? In the old days, this would break your pipeline and trigger a late-night alert.

With a modern Change Data Capture (CDC) platform like Streamkap, the answer is simple: you don’t have to do anything. The system automatically detects schema changes at the source—think ALTER TABLE commands—and mirrors those changes downstream.

That new column will just start appearing in your S3 data files. No manual work, no downtime. This automatic schema evolution is a massive win, saving your team from constant maintenance headaches.
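
Conceptually, additive schema evolution boils down to two steps: widen the known column list when a new field shows up, and fill the gap for older rows. The sketch below illustrates that idea only. It is not how Streamkap implements it, and the column names are invented.

```python
def evolve_schema(known_columns, record):
    """Return known columns plus any new ones seen in the record, in order."""
    new = [c for c in record if c not in known_columns]
    return list(known_columns) + new


def conform(record, columns):
    """Project a record onto the current schema, filling gaps with None."""
    return {c: record.get(c) for c in columns}


schema = ["id", "total"]
old_row = {"id": 1, "total": 9.5}
new_row = {"id": 2, "total": 4.0, "coupon_code": "FALL"}  # column added upstream

schema = evolve_schema(schema, new_row)
print(schema)
print(conform(old_row, schema))
```

Rows written before the change simply read as null for the new column, which is also what query engines typically show for Parquet files that predate an added column.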

What’s a Realistic Latency from Database to S3?

"Real-time" is a great marketing term, but in engineering, we know nothing is truly instantaneous. So what's a realistic expectation? For a typical pipeline streaming from a source like PostgreSQL into Amazon S3, you should expect an end-to-end latency of just a few seconds.

Of course, a few things can affect this:

- Database Load: If your source database is under heavy strain, replication can lag.

- Network Conditions: The quality of the connection between your source, the CDC service, and AWS always plays a role.

- File Write Frequency: Your configuration for how often to write files to S3 (e.g., every 60 seconds) is a direct factor.

Even with these variables, data usually lands in S3 and becomes queryable in under a minute. That’s a world away from the hours or even days you'd wait for traditional batch ETL jobs.

A classic mistake I see teams make is trying to achieve sub-second latency by writing tiny, frequent files to S3. This leads to the dreaded "small file problem," which absolutely tanks query performance and racks up costs from all the S3 PUT requests. It's almost always better to accept a slightly higher latency for well-optimized, larger files.
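
You can put rough numbers on this trade-off. The sketch below assumes purely time-based flushing, events arriving evenly within each window, and an illustrative PUT price, so check current S3 pricing before relying on the dollar figures. The streams parameter is a hypothetical stand-in for how many tables or partitions are written in parallel.

```python
PUT_PRICE_PER_1000_USD = 0.005  # assumed illustrative price; check current S3 pricing


def flush_tradeoff(flush_interval_s: float, streams: int = 1):
    """Return (avg added wait in s, files per day, PUT cost per day in USD)."""
    avg_wait = flush_interval_s / 2  # events arrive evenly within a window
    files_per_day = streams * 86_400 / flush_interval_s
    put_cost = files_per_day / 1000 * PUT_PRICE_PER_1000_USD
    return avg_wait, files_per_day, put_cost


# Compare a half-second flush with a 60-second flush across 50 streams.
for interval in (0.5, 60):
    print(interval, flush_tradeoff(interval, streams=50))
```

Under these assumptions, a half-second flush produces 8.64 million files a day, while a 60-second flush produces 72,000 and adds about 30 seconds of average wait. The request bill is the smaller issue. The file count is what hurts query performance.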

How Do I Troubleshoot Connection Problems?

If your data isn't flowing, 99% of the time it's a permissions or networking issue. Before you start digging into complex logs, check the fundamentals.

First, can the CDC service even see your database? Go through your firewall rules, VPC settings, and security groups to make sure the right ports are open and accessible.

Next, look at your credentials. Is the dedicated database user you created actually granted the right permissions for logical replication or binlog access? On the other side, does your AWS IAM user or role have the s3:PutObject permission for the target bucket? Walking through these simple checks will solve the vast majority of setup issues.
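
For the network half of that checklist, a plain TCP probe answers the "is the port even reachable?" question quickly. This is a minimal sketch with a hypothetical function name. It tests reachability only from the machine where you run it, which is not necessarily the network path your CDC service uses.

```python
import socket


def can_connect(host: str, port: int, timeout: float = 3.0) -> bool:
    """Return True if a TCP connection to host:port succeeds within the timeout."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        # Covers refused connections, timeouts, and DNS resolution failures.
        return False
```

For example, can_connect("db.example.internal", 5432) probes PostgreSQL's default port on a placeholder host, and 3306 would be the MySQL equivalent. A False result points you back at firewall rules and security groups before you spend time on credentials.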

Ready to build your own robust, low-latency data pipelines without the headache? Streamkap offers an automated, real-time CDC solution that connects your databases to Amazon S3 in minutes. See how easy it can be at https://streamkap.com.
