How to Stream DynamoDB to ClickHouse with Streamkap

Introduction

In today’s fast-paced business environment, having access to accurate data at the right moment is crucial for making informed decisions.

However, traditional data processing methods often fall short, being both slow and overly complex.

This guide enables developers to implement robust customer behavior analytics and fraud detection using ClickHouse’s powerful analytics engine with real-time data streams from AWS DynamoDB. By establishing a direct pipeline between DynamoDB’s transactional data and ClickHouse’s analytical capabilities, engineering teams can create a high-performance analytics infrastructure that efficiently processes massive volumes of user interactions, purchase patterns, and suspicious transactions—all with minimal latency.

Guide Sections:

| Section | Description |
|---|---|
| Prerequisites | You will need Streamkap, ClickHouse, and AWS accounts |
| Configuring an Existing DynamoDB | For an existing DynamoDB table, you will have to modify its configuration to make it Streamkap-compatible |
| Creating a New ClickHouse Account | This step will help you set up ClickHouse |
| Fetching Credentials from an Existing ClickHouse Destination | For an existing ClickHouse data warehouse, you will have to modify its permissions to allow Streamkap to write data |
| Streamkap Setup | Adding DynamoDB as a source, adding ClickHouse as a destination, and finally connecting them using a pipeline |

Are you ready to capitalize on your data? Join us!

Prerequisites

Make sure you have the following ready so you can follow this guide:

1. Streamkap Account: You must have an active Streamkap account with admin or data admin rights. If you don’t have one yet, sign up here.

2. ClickHouse Account: Ensure you have an active ClickHouse account with admin privileges. If you don’t have one yet, you can sign up here.

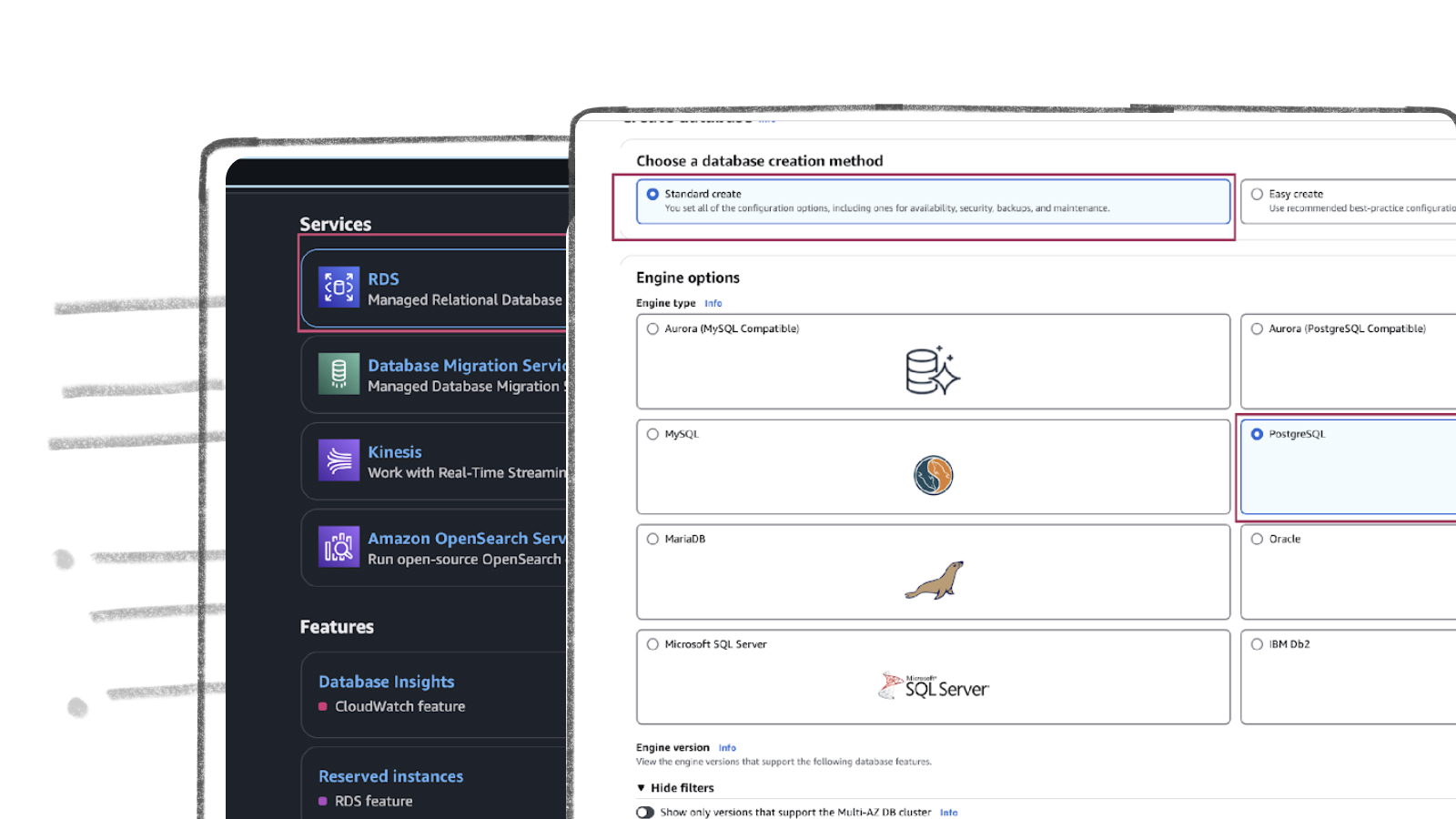

3. AWS Account: To create or configure the DynamoDB table, S3 bucket, and IAM user used in this guide, you need an active AWS account with DynamoDB, S3, and IAM permissions. If you don’t already have one, sign up here.

AWS DynamoDB Setup

AWS DynamoDB is one of the most widely used NoSQL database services, known for its scalability, high availability, and low latency performance. Its ease of use and flexibility make it a go-to solution for handling large amounts of data in production environments. Setting up a DynamoDB instance is quick and straightforward, allowing new users to get started in minutes.

Moreover, if you already have an existing DynamoDB instance, configuring it for integration with Streamkap is a smooth process. In this section, we will explore various methods to set up and configure AWS DynamoDB for seamless compatibility with Streamkap, ensuring optimized performance and reliability.

Configuring an Existing AWS DynamoDB for Streamkap Compatibility

Step 1: Configuring Your DynamoDB Table for Streamkap Compatibility

- Click on the table (e.g. MusicCollection) you would like to configure for Streamkap compatibility.

- Once you’ve chosen the table, navigate to the Exports and streams section to proceed with the next step.

- Under DynamoDB stream details, turn on the DynamoDB stream.

- Select New and Old Images on the DynamoDB Streams setting to capture both the updated and previous versions of the items in your table. This ensures that any changes made to the data are fully captured.

- Navigate to the Backups section and click the Edit button next to Point-in-Time Recovery (PITR). PITR maintains a continuous backup of your table, allowing you to restore data to any moment within the retention window.

- Check Turn on Point-in-Time Recovery and click Save Changes to enable the feature for your DynamoDB table.

Note: If you have multiple tables and want all of them to be compatible with Streamkap, you will need to repeat the above process for each table.
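When many tables need the same two settings, the console steps above can also be scripted. The following is a minimal sketch rather than an official Streamkap procedure: it builds the arguments for boto3's `update_table` and `update_continuous_backups` calls, and the helper names (`stream_settings`, `pitr_settings`, `configure_tables`) are hypothetical.

```python
from typing import Any


def stream_settings(table_name: str) -> dict[str, Any]:
    """Keyword arguments for boto3's DynamoDB ``update_table`` call."""
    return {
        "TableName": table_name,
        "StreamSpecification": {
            "StreamEnabled": True,
            # Capture both the updated and the previous version of each item.
            "StreamViewType": "NEW_AND_OLD_IMAGES",
        },
    }


def pitr_settings(table_name: str) -> dict[str, Any]:
    """Keyword arguments for boto3's ``update_continuous_backups`` call."""
    return {
        "TableName": table_name,
        "PointInTimeRecoverySpecification": {"PointInTimeRecoveryEnabled": True},
    }


def configure_tables(table_names: list[str], region: str) -> None:
    """Apply both settings to every table (needs boto3 and AWS credentials)."""
    import boto3  # imported lazily so the builders above work without it

    client = boto3.client("dynamodb", region_name=region)
    for name in table_names:
        client.update_table(**stream_settings(name))
        client.update_continuous_backups(**pitr_settings(name))
```

Calling `configure_tables(["MusicCollection"], "us-east-1")` would apply the settings to one table. DynamoDB rejects `update_table` when a stream is already enabled on the table, so a production script would check the current state first.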

Step 2: Create an S3 bucket

- Navigate to your AWS Management Console and type “S3” in the search bar.

- Click on “S3” as shown below.

- While you can use an existing S3 bucket for Streamkap integration, creating a dedicated new bucket is recommended. This approach helps organize and isolate your Streamkap data streams. A separate bucket ensures cleaner configuration and better data management without interfering with other workflows.

- Choose General purpose as the bucket type and enter a bucket name (e.g. source-dynamodb-bucket).

- Additionally, make sure to disable ACLs (Access Control Lists) under the Object Ownership section. This ensures that the S3 bucket uses the bucket owner’s permissions and simplifies access management.

- Block all public access. This security best practice keeps your bucket and its contents private and accessible only to authorized users.

- Enable versioning for the S3 bucket to preserve, retrieve, and restore every version of an object in the bucket. This is particularly useful for maintaining historical data, allowing you to recover from accidental deletions or overwrites.

- Select SSE-S3 (Server-Side Encryption with Amazon S3-managed keys) to automatically encrypt your data at rest. This ensures that all objects stored in your S3 bucket are securely encrypted without the need for managing encryption keys.

- Disable the bucket key for SSE-S3, as it is not necessary when using Amazon S3-managed keys. Disabling it simplifies the encryption process while still ensuring your data is securely encrypted.
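The bucket options above map onto a handful of S3 API settings. As a rough sketch (not something the guide itself prescribes), the functions below build the arguments for the corresponding boto3 S3 client calls. The function names are hypothetical, and the mapping of "ACLs disabled" to `BucketOwnerEnforced` and of SSE-S3 to `AES256` follows the S3 API.

```python
from typing import Any


def create_bucket_args(bucket: str, region: str) -> dict[str, Any]:
    """Keyword arguments for boto3's S3 ``create_bucket`` call."""
    args: dict[str, Any] = {"Bucket": bucket}
    if region != "us-east-1":
        # us-east-1 is the default location and must not be passed explicitly.
        args["CreateBucketConfiguration"] = {"LocationConstraint": region}
    return args


def bucket_settings(bucket: str) -> dict[str, dict[str, Any]]:
    """Map boto3 S3 client method names to the arguments for each setting."""
    return {
        # ACLs disabled: the bucket owner owns every object.
        "put_bucket_ownership_controls": {
            "Bucket": bucket,
            "OwnershipControls": {"Rules": [{"ObjectOwnership": "BucketOwnerEnforced"}]},
        },
        "put_public_access_block": {
            "Bucket": bucket,
            "PublicAccessBlockConfiguration": {
                "BlockPublicAcls": True,
                "IgnorePublicAcls": True,
                "BlockPublicPolicy": True,
                "RestrictPublicBuckets": True,
            },
        },
        "put_bucket_versioning": {
            "Bucket": bucket,
            "VersioningConfiguration": {"Status": "Enabled"},
        },
        # SSE-S3 corresponds to the AES256 algorithm; the bucket key stays off.
        "put_bucket_encryption": {
            "Bucket": bucket,
            "ServerSideEncryptionConfiguration": {
                "Rules": [
                    {
                        "ApplyServerSideEncryptionByDefault": {"SSEAlgorithm": "AES256"},
                        "BucketKeyEnabled": False,
                    }
                ]
            },
        },
    }


def create_export_bucket(bucket: str, region: str) -> None:
    """Create and configure the bucket (needs boto3 and AWS credentials)."""
    import boto3  # lazy import keeps the builders usable without boto3

    s3 = boto3.client("s3", region_name=region)
    s3.create_bucket(**create_bucket_args(bucket, region))
    for method, kwargs in bucket_settings(bucket).items():
        getattr(s3, method)(**kwargs)
```

Keeping the settings as plain dictionaries makes them easy to review against the console choices before anything is applied.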

Step 3: Create IAM User and Policy for Streamkap Compatibility

- Navigate to your AWS Management Console and type “IAM” in the search bar.

- Click on “IAM” as shown below.

- After clicking on IAM, navigate to the Policies section.

- Click Create Policy to start creating a new IAM policy for DynamoDB and S3 access.

- To create the IAM policy, use the following configuration. Make sure to replace TableName, AccountID, Region, and the S3 bucket name with your actual values.

- Policy Name: source-dynamodb-bucket-policy

- Description: This policy will be used to configure DynamoDB as a source to Streamkap.

- Navigate to Users on the left-hand menu in the IAM dashboard.

- Click on Create user to start the process of adding a new IAM user.

- Plug in a user name of your choice.

- Leave the option Provide user access to the AWS Management Console unchecked, as the user will only need programmatic access (via access keys) to interact with DynamoDB and S3 for Streamkap integration.

- In the Permissions options, select Attach policies directly.

- From the dropdown, select Customer managed policies.

- Find and select the source-dynamodb-bucket-policy policy you created earlier.

- After selecting the policy, click Next to proceed.

- Review the username and attached source-dynamodb-bucket-policy, then click Create user to finalize the process.

- In the Users section, click on the username that you just created to create the access key.

- Click on Create access key.

- Select Third-party service.

- Click Next.

- Enter a description.

- Download the credentials .csv file for future reference and click on Done to complete the process.
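The policy document referred to in the steps above is not reproduced in this text, so the following is only an illustrative sketch of what such a policy could look like. It assumes the connector needs to read the table's stream, run point-in-time exports, and read and write the export bucket. The action list is an assumption, not Streamkap's published policy, so check the Streamkap documentation for the authoritative version. The function names are hypothetical.

```python
import json


def streamkap_source_policy(table: str, account_id: str, region: str, bucket: str) -> dict:
    """Build an IAM policy document scoped to one table and one bucket."""
    table_arn = f"arn:aws:dynamodb:{region}:{account_id}:table/{table}"
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                # Assumed actions for reading the stream and exporting the table.
                "Sid": "ReadTableAndStream",
                "Effect": "Allow",
                "Action": [
                    "dynamodb:DescribeTable",
                    "dynamodb:DescribeStream",
                    "dynamodb:GetShardIterator",
                    "dynamodb:GetRecords",
                    "dynamodb:ExportTableToPointInTime",
                    "dynamodb:DescribeExport",
                ],
                "Resource": [table_arn, f"{table_arn}/stream/*", f"{table_arn}/export/*"],
            },
            {
                # Assumed actions for writing and reading export files.
                "Sid": "ExportBucketObjects",
                "Effect": "Allow",
                "Action": ["s3:PutObject", "s3:GetObject", "s3:AbortMultipartUpload"],
                "Resource": f"arn:aws:s3:::{bucket}/*",
            },
            {
                "Sid": "ListExportBucket",
                "Effect": "Allow",
                "Action": "s3:ListBucket",
                "Resource": f"arn:aws:s3:::{bucket}",
            },
        ],
    }


def policy_json(table: str, account_id: str, region: str, bucket: str) -> str:
    """Render the policy as JSON text for the IAM console's JSON editor."""
    return json.dumps(streamkap_source_policy(table, account_id, region, bucket), indent=2)
```

For example, `print(policy_json("MusicCollection", "123456789012", "us-east-1", "source-dynamodb-bucket"))` produces text that can be pasted into the JSON tab of the Create policy screen. The account ID shown is a placeholder.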

ClickHouse Warehouse Setup

ClickHouse is a high-performance, open-source columnar database management system designed for online analytical processing (OLAP). Known for its speed and scalability, ClickHouse is often used in data-intensive applications that require real-time analytics on large volumes of data. It allows users to store, process, and analyze vast amounts of (un)structured data quickly and efficiently.

In this section, we will explore how to set up and configure ClickHouse for Streamkap streaming, ensuring seamless integration and optimal data flow for analytics.

Creating a New ClickHouse Account

- Create your ClickHouse account.

- Enter the name of your data warehouse (e.g. Streamkap_datawarehouse).

- Choose AWS as the cloud provider, select the appropriate region, and purpose, and click on Create Service.

- After entering the required details, please wait for the service to be provisioned. This process will take a few seconds.

- In the Getting Started prompt under the Connect Your App section, copy the highlighted password and hostname, then securely store them in a safe location.

- Leave Add Data settings as they are.

- In Configure Idling and IP Filtering section, if you are certain about your use case, select the appropriate option; otherwise, choose I don’t know.

- In the Where Do You Want to Connect section, choose either “Anywhere” or “Specific locations”.

- If you choose the “Specific locations” option, enter the IP address for your Streamkap region and write Streamkap App in the description.

- Finally, click on Submit to proceed.

- Leave Invite Team Members and Explore Integration as default.

- Navigate to the SQL Console in the left-hand pane, select Query, and choose the default database.

- It is advisable to create a dedicated user and role for Streamkap to access your ClickHouse database.

- Run the following SQL code to create a role, assign permissions, create a user, and grant the role to the user. These steps give Streamkap a dedicated ClickHouse user with the permissions it needs to write data.

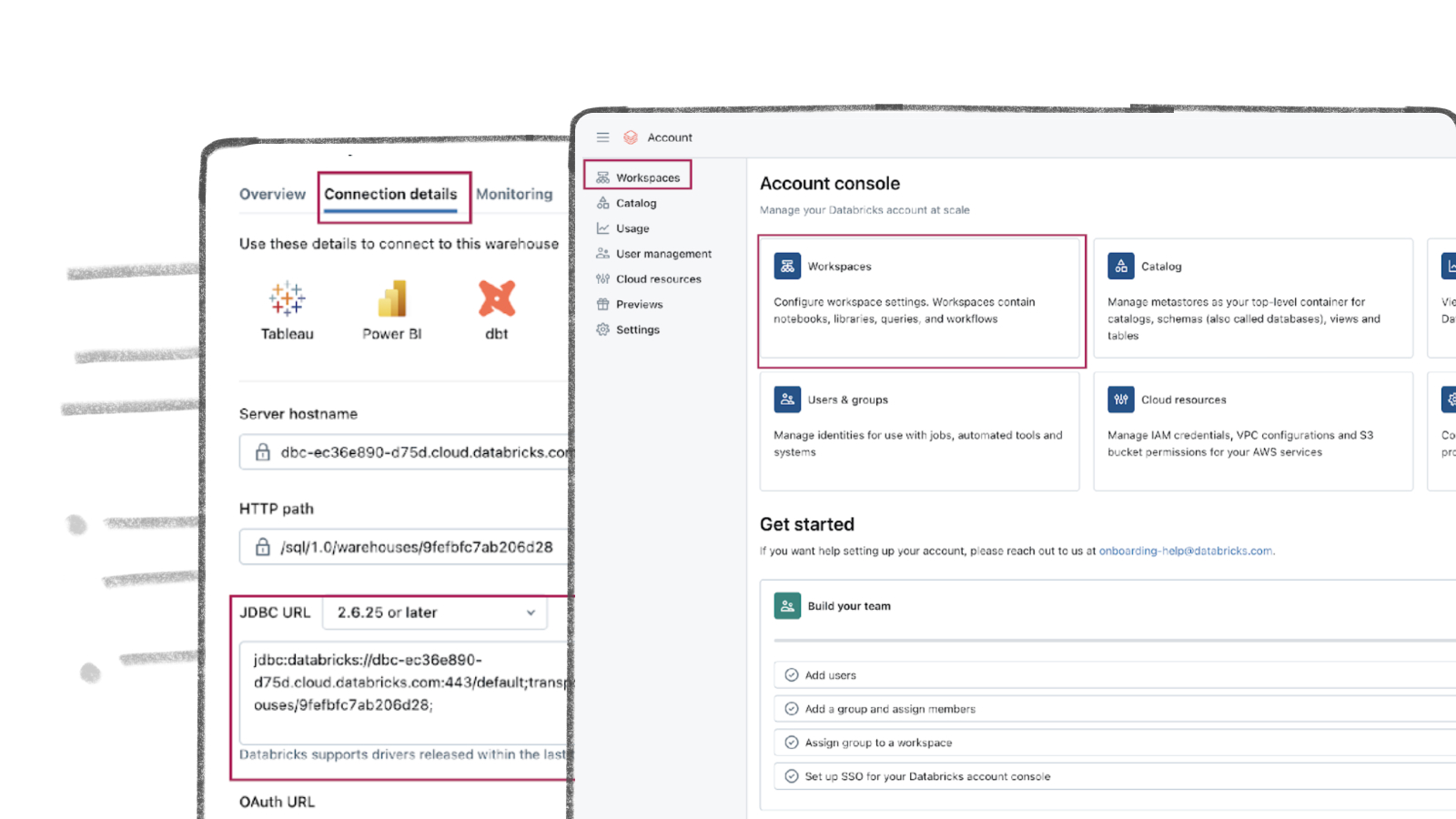
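For readers who cannot see the screenshot, here is a hedged sketch of statements that follow the four steps just described (create a role, grant permissions, create a user, grant the role). It is not the guide's exact SQL: the role name `streamkap_role`, the privilege list, and the `sha256_password` authentication type are assumptions, and the helper `streamkap_access_sql` is hypothetical.

```python
import re

# Only plain identifiers are accepted, so names can be placed in SQL unquoted.
_IDENTIFIER = re.compile(r"[A-Za-z_][A-Za-z0-9_]*")


def streamkap_access_sql(database: str, user: str, role: str, password: str) -> list[str]:
    """Return ClickHouse statements that set up a dedicated role and user."""
    for name in (database, user, role):
        if not _IDENTIFIER.fullmatch(name):
            raise ValueError(f"not a plain identifier: {name!r}")
    # Escape backslashes and single quotes for a ClickHouse string literal.
    quoted = password.replace("\\", "\\\\").replace("'", "\\'")
    return [
        f"CREATE ROLE IF NOT EXISTS {role}",
        # Assumed privilege set for a writer that also manages its own tables.
        f"GRANT SELECT, INSERT, CREATE TABLE, ALTER ON {database}.* TO {role}",
        f"CREATE USER IF NOT EXISTS {user} IDENTIFIED WITH sha256_password BY '{quoted}'",
        f"GRANT {role} TO {user}",
    ]


if __name__ == "__main__":
    for statement in streamkap_access_sql(
        "default", "streamkap_user", "streamkap_role", "change-me"
    ):
        print(statement + ";")
```

Running the script prints statements that can be pasted into the SQL Console one at a time. Replace the placeholder password before use.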

Fetching Credentials from an Existing ClickHouse Destination

In this step, you will retrieve the following details:

- Hostname: The IP address or URL of your ClickHouse instance.

- Port (default: 8443).

- Username: streamkap_user or the username you selected.

- Password: The password for the database user.

- Database: The name of the database you previously entered.

- Log in to your ClickHouse account and click on Connect in the left-side navigation pane.

- Once you click Connect, copy the hostname and password as highlighted below.

- Navigate to the SQL Console on the left-hand pane, select Query, and choose the default database.

- Create a role, assign permissions, create a user, and grant the role to the user. These steps give Streamkap a dedicated ClickHouse user with the permissions it needs to write data.

- It is advisable to create a dedicated user and role for Streamkap to access your ClickHouse database.

- After running this query you will get Username (streamkap_user) and Data Warehouse (streamkap_datawarehouse).

Streamkap Set Up

To connect Streamkap to DynamoDB, we need to ensure that the database is configured to accept traffic from Streamkap by safelisting Streamkap’s IP addresses.

Note: If DynamoDB accepts traffic from anywhere in the world, you can move on to the Configuring AWS DynamoDB for Streamkap Integration section.

Safelisting Streamkap’s IP Address

Streamkap’s dedicated IP addresses are:

| Region | IP Address |
|---|---|
| Oregon (us-west-2) | 52.32.238.100 |
| North Virginia (us-east-1) | 44.214.80.49 |
| Europe Ireland (eu-west-1) | 34.242.118.75 |
| Asia Pacific - Sydney (ap-southeast-2) | 52.62.60.121 |

When signing up, Oregon (us-west-2) is set as the default region. If you require a different region, let us know. For more details about our IP addresses, please visit this link.

- To safelist one of our IPs, make sure the policy assigned to your IAM user or role only allows requests from the Streamkap IP address for your region.

Here is an example of a policy that includes access to Streamkap’s safelisted IP address. Make sure to replace TableName, AccountID, Region, and the S3 bucket name with your actual values.
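In IAM, an IP restriction of this kind is expressed with the `aws:SourceIp` condition key. The sketch below takes an existing policy document and adds that condition for the Streamkap region in use. It is an illustration under assumptions: the helper name `restrict_to_streamkap` is hypothetical, and applying the condition to every statement is a design choice rather than something the guide specifies.

```python
import copy

# Region-to-IP mapping taken from the table of Streamkap IP addresses above.
STREAMKAP_IPS = {
    "us-west-2": "52.32.238.100",
    "us-east-1": "44.214.80.49",
    "eu-west-1": "34.242.118.75",
    "ap-southeast-2": "52.62.60.121",
}


def restrict_to_streamkap(policy: dict, streamkap_region: str) -> dict:
    """Return a copy of ``policy`` whose statements only allow the Streamkap IP."""
    if streamkap_region not in STREAMKAP_IPS:
        raise ValueError(f"no Streamkap IP listed for region {streamkap_region!r}")
    cidr = f"{STREAMKAP_IPS[streamkap_region]}/32"
    restricted = copy.deepcopy(policy)  # leave the caller's document untouched
    for statement in restricted["Statement"]:
        statement.setdefault("Condition", {})["IpAddress"] = {"aws:SourceIp": cidr}
    return restricted
```

Requests that an AWS service makes on the user's behalf can arrive from a different source address, so it is worth re-testing the export step after applying the condition.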

Configuring AWS DynamoDB for Streamkap Integration

If you followed either of the following sections, your AWS DynamoDB is already Streamkap-compatible:

- Setting up a New AWS DynamoDB from Scratch

- Configuring an Existing AWS DynamoDB for Streamkap Compatibility

Adding AWS DynamoDB as a Source Connector

Step 1: Log in to Streamkap

- Log in to Streamkap. This will take you to your dashboard.

Note: You must have admin or data admin privileges to continue with the following steps.

Step 2: Set Up a DynamoDB Source Connector

- In the left-side navigation pane, click on “Connectors”, open the “Sources” tab, and click the “+ Add” button as shown below.

- Enter DynamoDB in the search bar and select it from the list of available services, as depicted below.

You’ll be asked to provide the following details:

- Name: Streamkap_source

- AWS Region: Enter the region you have configured (e.g., us-east-1).

- AWS Access Key ID: Enter the access key from the file you downloaded.

- AWS Secret Key: Enter the secret key from the file you downloaded.

- S3 Export Bucket Name: Enter the name of your S3 bucket (e.g., source-dynamodb-bucket).

After filling in the details, click Next to proceed.

- After specifying the table names to include, click Save to complete the configuration.

- Once you click Save, wait until the status becomes Active as indicated below.
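To avoid copy-paste mistakes when filling in the source form, the access key can be read straight from the downloaded credentials file. This is a small sketch, assuming the CSV uses the column headers `Access key ID` and `Secret access key`. Check the headers in your file, since the helper names and that layout are assumptions here.

```python
import csv
import io


def read_access_key(csv_text: str) -> tuple[str, str]:
    """Return (access_key_id, secret_access_key) from the credentials CSV text."""
    # Strip a leading byte-order mark so the first header matches exactly.
    reader = csv.DictReader(io.StringIO(csv_text.lstrip("\ufeff")))
    row = next(reader)
    return row["Access key ID"].strip(), row["Secret access key"].strip()


def source_form_values(name: str, region: str, csv_text: str, bucket: str) -> dict[str, str]:
    """Collect the values for the DynamoDB source form, keyed by field label."""
    key_id, secret = read_access_key(csv_text)
    return {
        "Name": name,
        "AWS Region": region,
        "AWS Access Key ID": key_id,
        "AWS Secret Key": secret,
        "S3 Export Bucket Name": bucket,
    }
```

A typical call would pass the file contents, for example `source_form_values("Streamkap_source", "us-east-1", open("accessKeys.csv").read(), "source-dynamodb-bucket")`, where the file name is whatever your browser saved. Treat the returned secret like a password and avoid printing it to shared logs.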

Adding a Clickhouse Connector

- To add ClickHouse as a destination, navigate to “Connectors” in the left-side pane, click the “Destinations” tab, and click the “+ Add” button.

- Search for ClickHouse in the search bar and click on ClickHouse.

The settings for Clickhouse are as follows:

- Name: Desired name for destination for Streamkap.

- Hostname: The IP address or URL of your ClickHouse instance (e.g., r5oq46wstt.us-east-1.aws.clickhouse.cloud).

- Port (default: 8443).

- Username (case sensitive): streamkap_user or the username you selected.

- Password: The password for the data warehouse user.

- Database: The name of your ClickHouse database.

After filling in the configuration details, hit Save at the bottom right corner to finalize the setup.

- After clicking Save, the system will display an Active status indicator.
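If the destination does not become Active, it can help to verify the hostname, port, and credentials independently of Streamkap. The sketch below sends `SELECT 1` over ClickHouse's HTTP interface using the `X-ClickHouse-User` and `X-ClickHouse-Key` headers. It assumes port 8443 serves HTTPS, as on ClickHouse Cloud, and the helper names are hypothetical.

```python
import urllib.request


def build_check_request(hostname: str, user: str, password: str,
                        port: int = 8443) -> urllib.request.Request:
    """Build an HTTPS request that runs ``SELECT 1`` as the given user."""
    return urllib.request.Request(
        url=f"https://{hostname}:{port}/",
        data=b"SELECT 1",  # the query travels in the POST body
        headers={"X-ClickHouse-User": user, "X-ClickHouse-Key": password},
        method="POST",
    )


def can_connect(hostname: str, user: str, password: str,
                port: int = 8443, timeout: float = 10.0) -> bool:
    """Return True when the server answers ``SELECT 1`` with ``1``."""
    request = build_check_request(hostname, user, password, port)
    try:
        with urllib.request.urlopen(request, timeout=timeout) as response:
            return response.read().strip() == b"1"
    except OSError:
        # Covers DNS failures, refused connections, timeouts, and HTTP errors.
        return False
```

A call such as `can_connect("r5oq46wstt.us-east-1.aws.clickhouse.cloud", "streamkap_user", "your-password")` returns `False` both for network problems and for rejected credentials, so check the IP filtering settings as well as the password when it fails.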

Adding a Pipeline

- Navigate to “Pipelines” on the left side and click “+Create” to create a pipeline between source and destination.

- Select source and destination and click on “Next” in the bottom right corner.

- Select all schemas or only the tables that you want to transfer.

- Plug in a pipeline name and hit “Save”.

- Once the pipeline is successfully created, it will appear similar to the screenshot below, with its status displayed as Active.

- Log in to your AWS Management Console and type “DynamoDB” in the search bar.

- Click on “DynamoDB” as shown below.

- Go to Explore items on the left pane. Click on the table where you want to add data and click on Create item.

- Add the artist as Taylor Swift and the song title as Love Story, or feel free to choose your own option, then click on Create Item.

- Once we add the above, include multiple data entries as shown below:

- Log in to your ClickHouse account.

- Run the query to view all the data in your table.

- The streamed data appears in your ClickHouse destination as shown below.

By design, your table in the destination will have the following meta columns in addition to the regular columns.

| Column Name | Description |
|---|---|
| _STREAMKAP_SOURCE_TS_MS | Timestamp in milliseconds for the event within the source log |
| _STREAMKAP_TS_MS | Timestamp in milliseconds for the event once Streamkap receives it |
| __DELETED | Indicates whether this event/row has been deleted at source |
| _STREAMKAP_OFFSET | This is an offset value in relation to the logs we process. It can be useful in the debugging of any issues that may arise |
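These meta columns are handy once the rows are in your application. As a small illustration, the helpers below compute the delay between the two timestamps and filter out rows deleted at the source. They assume rows arrive as dictionaries keyed by column name and that `__DELETED` is either a boolean or the text `true`/`false`. Both points are assumptions, so check how your client returns the column.

```python
from typing import Any, Mapping

Row = Mapping[str, Any]


def is_deleted(row: Row) -> bool:
    """True when the source item was deleted (accepts booleans or text flags)."""
    return str(row.get("__DELETED", "false")).strip().lower() in ("true", "1")


def pipeline_lag_ms(row: Row) -> int:
    """Milliseconds between the source event and Streamkap receiving it."""
    return int(row["_STREAMKAP_TS_MS"]) - int(row["_STREAMKAP_SOURCE_TS_MS"])


def live_rows(rows: list[Row]) -> list[Row]:
    """Keep only rows that still exist at the source."""
    return [row for row in rows if not is_deleted(row)]
```

Tracking `pipeline_lag_ms` over time gives a simple view of how far the destination trails the source.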

Setting up a New AWS DynamoDB from Scratch

Note: To create and manage a DynamoDB table compatible with Streamkap, you must have IAM permissions to manage DynamoDB tables, streams, item operations, backups, point-in-time recovery, and S3 exports.

If your permissions are limited, please contact your AWS administrator to request the necessary DynamoDB, S3, and IAM permissions for managing DynamoDB tables and their associated features required for Streamkap integration.

Step 1: Log in to the AWS Management Console

- Log in to your AWS Management Console and type “DynamoDB” in the search bar.

- Click on “DynamoDB” as shown below.

Step 2: Create a new DynamoDB Table

Note: If you already have existing tables, you can skip this step.

- Once you are in the DynamoDB section, navigate to Dashboard or Tables in the left-side navigation menu.

- Choose the region where you want to host your DynamoDB table, ensuring it aligns with your application’s requirements.

Note: To integrate with Streamkap, ensure that the selected region is one of the following:

Oregon (us-west-2)

North Virginia (us-east-1)

Europe Ireland (eu-west-1)

Asia Pacific - Sydney (ap-southeast-2)

- Once you have selected the region, click Create table as shown below.

- In the Table Details field, enter a name of your choice (e.g., MusicCollection). Set the Partition Key (e.g., Artist) and Sort Key (e.g., Song Title) according to your preference, specify their Type as String, and leave the remaining table settings as default. Finally, click Create table.

- After creating the table, wait until its status changes to Active. Once active, click on the MusicCollection table to continue.
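The same table can be described in code, which is useful for repeatable test environments. The sketch below builds the arguments for boto3's `create_table` and `put_item` calls for a table with string partition and sort keys. The on-demand billing mode is an assumption (the console defaults may differ), and the function names are hypothetical.

```python
from typing import Any


def create_table_args(table: str, partition_key: str, sort_key: str) -> dict[str, Any]:
    """Keyword arguments for boto3's DynamoDB ``create_table`` call."""
    return {
        "TableName": table,
        "AttributeDefinitions": [
            {"AttributeName": partition_key, "AttributeType": "S"},  # S = String
            {"AttributeName": sort_key, "AttributeType": "S"},
        ],
        "KeySchema": [
            {"AttributeName": partition_key, "KeyType": "HASH"},  # partition key
            {"AttributeName": sort_key, "KeyType": "RANGE"},  # sort key
        ],
        # Assumed billing mode; it avoids choosing provisioned capacity here.
        "BillingMode": "PAY_PER_REQUEST",
    }


def put_item_args(table: str, item: dict[str, str]) -> dict[str, Any]:
    """Keyword arguments for ``put_item`` with every attribute stored as a string."""
    return {
        "TableName": table,
        "Item": {name: {"S": value} for name, value in item.items()},
    }
```

With boto3 installed and credentials configured, `boto3.client("dynamodb", region_name="us-east-1").create_table(**create_table_args("MusicCollection", "Artist", "SongTitle"))` would create the example table, and `put_item_args` can later produce a test item such as Taylor Swift's Love Story.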

Step 3: Configuring Your DynamoDB Table for Streamkap Compatibility

- Click on the table that you wish to configure for Streamkap compatibility.

- Once you’ve opened the table, navigate to the Exports and streams section to proceed with the next steps.

- Under DynamoDB stream details, turn on the DynamoDB stream.

- Select New and Old Images on the DynamoDB Streams setting to capture both the updated and previous versions of the items in your table. This ensures that any changes made to the data are fully captured.

- Navigate to the Backups section and click the Edit button next to Point-in-Time Recovery (PITR). PITR maintains a continuous backup of your table, allowing you to restore data to any moment within the retention window.

- Check Turn on Point-in-Time Recovery and click Save changes to enable the feature for your DynamoDB table.

Note: If you have multiple tables and want all of them to be compatible with Streamkap, you will need to repeat the above process for each table.

Step 4: Create an S3 bucket

- Navigate to your AWS Management Console and type “S3” on the search bar.

- Click on “S3” as shown below.

- While you can use an existing S3 bucket for Streamkap integration, creating a dedicated new bucket is recommended. This approach helps organize and isolate your Streamkap data streams. A separate bucket ensures cleaner configuration and better data management without interfering with other workflows.

- Choose General purpose as the bucket type and enter a bucket name (e.g. source-dynamodb-bucket).

- Additionally, make sure to disable ACLs (Access Control Lists) under the Object Ownership section. This ensures that the S3 bucket uses the bucket owner’s permissions and simplifies access management.

- Block all public access. This security best practice keeps your bucket and its contents private and accessible only to authorized users.

- Enable versioning for the S3 bucket to preserve, retrieve, and restore every version of an object in the bucket. This is particularly useful for maintaining historical data, allowing you to recover from accidental deletions or overwrites.

- Select SSE-S3 (Server-Side Encryption with Amazon S3-managed keys) to automatically encrypt your data at rest. This ensures that all objects stored in your S3 bucket are securely encrypted without the need for managing encryption keys.

- Disable the bucket key for SSE-S3, as it is not necessary when using Amazon S3-managed keys. Disabling it simplifies the encryption process while still ensuring your data is securely encrypted.

Step 5: Create IAM User and Policy for Streamkap Compatibility

- Navigate to your AWS Management Console and type “IAM” in the search bar.

- Click on “IAM” as shown below.

- After clicking on IAM, navigate to the Policies section.

- Click Create Policy to start creating a new IAM policy for DynamoDB and S3 access.

To create the IAM policy, use the following configuration. Make sure to replace TableName, AccountID, Region, and the S3 bucket name with your actual values.

- Policy Name: source-dynamodb-bucket-policy

- Description: This policy will be used to configure DynamoDB as a source to Streamkap.

- Navigate to Users on the left-hand side menu in the IAM dashboard.

- Click on Create user to start the process of adding a new IAM user.

- Plug in a user name of your choice.

- Leave the option Provide user access to the AWS Management Console unchecked, as the user will only need programmatic access (via access keys) to interact with DynamoDB and S3 for Streamkap integration.

- In the Permissions options, select Attach policies directly.

- From the dropdown, select Customer managed policies.

- Find and select the source-dynamodb-bucket-policy policy you created earlier.

- After selecting the policy, click Next to proceed.

- Review the username and attached source-dynamodb-bucket-policy, then click Create user to finalize the process.

- In the Users section, click on the username that you just created to create an access key.

- Click on Create access key.

- Select Third-party service.

- Click Next.

- Enter a description.

- Download the credentials .csv file for future reference and click on Done to complete the process.

What’s Next?

Thank you for reading this guide. If you have other sources and destinations to connect in near real-time check out the following guides.

For more information on connectors please visit here
