How to Build Data Pipelines From Scratch

September 29, 2025

At its core, a data pipeline is all about moving data from point A to point B. You extract it from a source, maybe transform it so it’s actually useful, and then load it into a destination like a data warehouse. This whole process is what we know as ETL (Extract, Transform, Load)—or its more modern cousin, ELT (Extract, Load, Transform). It’s the plumbing that makes everything from analytics to machine learning possible.

Why Modern Data Pipelines Are So Important

Forget the dry, technical definitions for a moment and think about the real-world impact. Data pipelines have completely changed. They’ve gone from clunky, overnight batch jobs to the real-time nervous system that keeps a modern business running. This isn't just some background IT function anymore; it's a strategic asset.

Think of an e-commerce site using live inventory data to stop overselling during a huge flash sale. Or a bank flagging a fraudulent transaction in milliseconds instead of hours. These aren't hypotheticals—they are happening right now, powered by fast, efficient data pipelines.

The Shift from Batch to Real-Time

In the past, data was processed in big chunks, usually overnight. That worked just fine when business decisions could wait until the next morning. Today, that kind of delay is a massive competitive liability. Everyone wants insights now, and that requires a complete shift in how we handle data movement.

This is where truly understanding https://streamkap.com/blog/what-is-real-time-data-en makes all the difference. It’s about reacting to events the moment they occur, enabling you to make smarter decisions right in the heat of the moment.

Building a data pipeline isn't just an IT task; it's a strategic investment in agility and operational intelligence. The ability to act on fresh, reliable data is what separates market leaders from the rest.

A Market Driven by Necessity

You don't have to take my word for it—just look at the numbers. The global market for data pipeline tools was valued at around USD 12.09 billion and is expected to explode to USD 48.33 billion by 2030. That kind of growth tells you everything you need to know: companies are betting big on data-driven operations.

Learning how to build these pipelines has become a critical business skill. It’s what allows you to turn a flood of raw information into something you can actually act on. The core principles of Data and Automation are fundamental here, and this guide will walk you through exactly how to build a modern, real-time pipeline that starts delivering value from day one.

Designing Your Data Pipeline Architecture

Before you write a single line of code, you need a blueprint. Think of it like building a house—you wouldn't start hammering without a plan. A solid data pipeline architecture is that plan, and getting it right upfront is what separates a successful project from one that's constantly breaking or failing to deliver.

So, where do you start? The most common mistake I see is teams jumping straight into the tech. You need to begin with the business question.

Forget vague goals like "we want better marketing insights." That’s not actionable. A real goal sounds something like this: "We need to understand our customer lifetime value by combining purchase history from our Shopify database with their support ticket history from Zendesk."

Now we're talking. That single sentence tells you exactly what you need. You've identified your data sources (a production database like PostgreSQL for Shopify and a SaaS API for Zendesk) and the specific outcome you’re driving towards. This clarity is everything.

Choosing Your Processing Model

With your sources and goals locked in, the next big architectural decision is how you'll process the data. This choice dictates how fast data flows through your pipeline and, ultimately, how fresh your insights are. It's a critical decision that influences your infrastructure costs, complexity, and what you can actually achieve.

You've got three main flavors to choose from, each with its own pros and cons.

- Batch Processing: This is the old-school, tried-and-true method. Data is gathered up and processed in large chunks on a set schedule—think daily or hourly. It’s perfect for heavy-lifting jobs where immediate results aren't necessary.

- Micro-Batch Processing: A happy medium. Instead of waiting hours, you process data in small, frequent batches, maybe every few minutes. It gives you a near real-time feel without the full complexity of true stream processing.

- Real-Time Streaming: Here, you're processing data event-by-event, as it happens. This is for situations where you need to react in milliseconds, not minutes or hours.

I've seen teams over-engineer things by choosing real-time streaming when simple batch processing would have done the job just fine. On the flip side, trying to detect credit card fraud with a nightly batch job is a recipe for disaster. The business need must drive the technical choice, not the other way around.

Choosing Your Data Processing Model

To really nail down which model fits your needs, it helps to see them side-by-side. Each one represents a different trade-off between speed, complexity, and the kinds of problems it can solve. Getting this wrong can lead to missed opportunities or bloated, expensive infrastructure.

Here's a straightforward comparison to help you decide.

Making the right choice here is fundamental to building a pipeline that not only works but also scales and adapts to future needs.

These processing models are also at the heart of larger architectural patterns. To go a level deeper, you might want to explore https://streamkap.com/blog/the-evolution-from-lambda-to-kappa-architecture-a-comprehensive-guide, which explains how modern systems are designed around these core concepts.

Ingesting Data in Real-Time with Change Data Capture

Alright, you’ve got your architectural blueprint. Now for the fun part: making the data flow. The old way of doing things—running massive batch queries on a schedule—just doesn’t cut it anymore. That approach hammers your production databases, drags down performance, and, worst of all, delivers stale, outdated information.

Modern data pipelines need a much smarter, more elegant solution. We need something that's gentle on our source systems while delivering insights the very moment they’re born.

This is exactly what Change Data Capture (CDC) was built for. Instead of repeatedly scraping an entire database table, CDC hooks directly into the database's transaction log. Think of this log as the database's private journal, where it records every single change to ensure its own integrity. By reading this journal, CDC tools capture every INSERT, UPDATE, and DELETE at the row level, as it happens.

The result? A continuous, low-impact stream of data changes. This isn't just an improvement; it's a fundamental shift in how we build pipelines for real-time applications.

How CDC Works in the Real World

Let's make this tangible. Imagine you're running a SaaS platform and need to populate a customer 360 dashboard to track user activity. Your production database is PostgreSQL, and you want to run analytics in Snowflake.

Every time a user logs in, finishes a tutorial, or updates their profile, a new row gets written to your user_activity table. With a traditional batch job, you might not see that activity until the next morning. But with CDC, the instant that transaction is committed to the PostgreSQL write-ahead log (WAL), it’s captured and already on its way downstream.

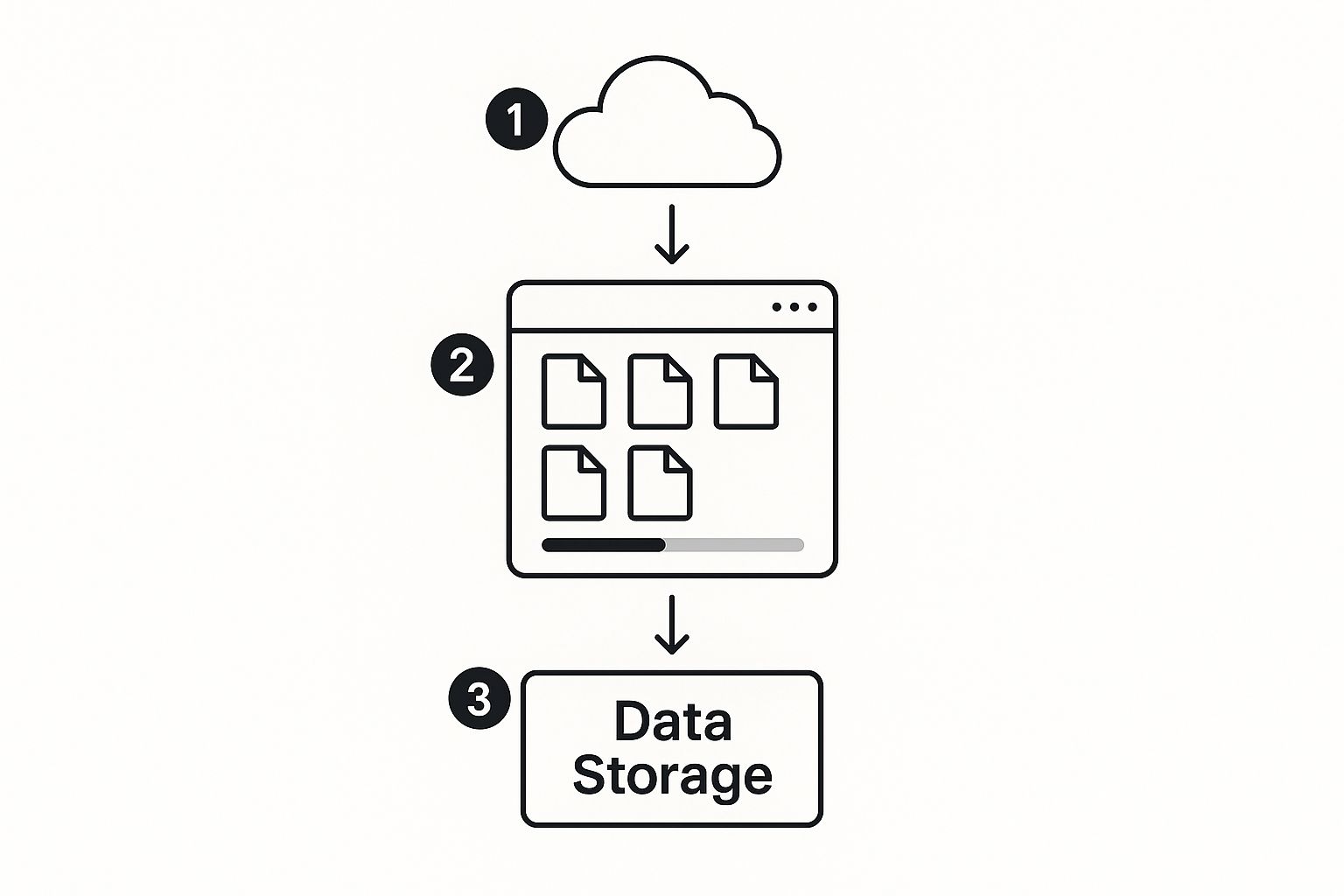

This infographic gives a great high-level view of how data travels from source to storage in a modern pipeline.

This process ensures your data warehouse or lakehouse is always a near-perfect mirror of your source systems, with just milliseconds of delay.

Setting Up a CDC Connector with Streamkap

Putting CDC into practice is surprisingly simple when you use a managed platform like Streamkap. You get to skip the headache of managing complex infrastructure like Kafka or Flink and just focus on configuring the connection.

Here’s a quick rundown of what that looks like:

- Turn on Logical Replication: First, you’ll need to enable this feature in your source database (like PostgreSQL). It's a one-time setup that gives external tools permission to stream changes from the transaction log. It does require specific user permissions, so make sure you have those handy.

- Configure the Source Connector: Inside Streamkap, you’ll simply provide the credentials for your database. The platform handles the rest, connecting securely and starting to read the log file from the precise point you tell it to, ensuring zero data is missed.

- Define Your Destination: Next, you point Streamkap to your destination—in our case, Snowflake. You'll enter your credentials and tell it which database and schema the incoming data should land in.

- Flip the Switch: Once everything is configured, you just activate the pipeline. Streamkap takes care of the initial snapshot of your existing data and then seamlessly switches over to streaming all the real-time changes as they occur.

For a deeper look at how CDC stacks up against older methods, check out this guide on Change Data Capture for streaming ETL. The real magic of a platform like this is how it automates the tricky parts, like handling schema drift, ensuring fault tolerance, and guaranteeing exactly-once processing.

The true power of CDC lies in its incredible efficiency. Because it only moves what has changed, you dramatically cut the load on your source systems and slash the amount of data flying across the network. It's the difference between sending a quick text update versus mailing a completely new, updated copy of a book.

This efficient, real-time approach is becoming the standard. The global data pipeline market is currently valued at around USD 10.01 billion and is projected to explode to USD 43.61 billion by 2032, growing at a CAGR of 19.9%. It’s clear that getting data from one system to another for immediate use is no longer a luxury—it’s a core business requirement.

The Real Work Starts in the Warehouse

Getting your data into a powerful warehouse like Snowflake or Google BigQuery is a huge win, but it's really just the first step. That raw data, streaming in from all your sources, is full of potential. But on its own, it’s often a messy, inconsistent jumble that isn't ready for your analysts or machine learning models.

This is where the magic happens. We need to transform that raw material into clean, reliable, and genuinely insightful data assets. The modern way to do this is with an approach called ELT (Extract, Load, Transform).

Why We Load Before We Transform

If you’ve been in the data world for a while, you’re probably familiar with the older ETL process, where all the heavy lifting and transformations happened before the data ever touched the warehouse. ELT flips that model on its head. We get the data loaded first, and then we worry about transforming it.

This seemingly small change is a game-changer. It means you can leverage the immense computational horsepower of your cloud data warehouse to do the transformations. These platforms are absolute beasts, designed for chewing through massive datasets, making them the perfect place for this kind of work.

It also creates a clean separation of duties. You use a specialized tool like Streamkap for what it does best—rock-solid, real-time data ingestion. Then, you use a tool like dbt inside the warehouse to focus entirely on building robust, modular transformations. This makes your entire pipeline faster, more resilient, and way easier to manage.

Real-World Transformations You’ll Actually Build

So, what does "transformation" actually mean in practice? Let's get out of the clouds and talk about what you'll be doing day-to-day. It’s all about taking those raw tables and turning them into something that can power a BI dashboard, feed a machine learning model, or run an operational report.

Here are a few common scenarios you'll run into constantly:

- Standardizing Messy Data: You’ve got customer addresses coming from three different systems. One uses "St.", another writes out "Street", and the third abbreviates it to "Str." A transformation job can clean that up, standardizing everything into a single, consistent format. Simple, but critical.

- Creating a 360-Degree Customer View: Your customer orders live in your production database, but their support tickets are in a separate system like Zendesk. A transformation can join these two sources on a

customer_id, giving you a single, enriched table that paints a complete picture of each customer's journey. - Pre-Aggregating Data for Fast Dashboards: No one wants to wait five minutes for a dashboard to load. A common and highly effective transformation is to take raw transaction data and roll it up into daily, weekly, or monthly summaries. Your BI tools can then query these much smaller, pre-calculated tables and load dashboards in seconds.

I’ve seen teams spend weeks trying to perfect their data transformations in-flight, only to have a requirement change and force them to start over. With ELT, that pain disappears. The raw data is always there, ready for you to experiment and build new models on top of it.

Think of your raw data as crude oil—it has a ton of potential but you can't put it directly into your car. The transformation process is the refinery, turning it into the high-octane gasoline that actually powers your business. This is the final, crucial step that turns a simple data pipeline into a true strategic asset.

Keeping Your Pipeline Healthy: Monitoring and Optimization

Getting your real-time data pipeline up and running is a huge win, but the job isn't done. Think of it like a living system—it needs ongoing attention to perform at its best. If you just set it and forget it, you're opening the door to silent data failures, creeping latency, and performance bottlenecks that will slowly chip away at the trust your team has in the data.

This is more critical than ever as data volumes continue to explode. We're seeing an incredible surge in real-time data from sources like the Internet of Things (IoT), with devices expected to top 30.9 billion by 2025. This massive influx, combined with rising cloud costs, means a scalable and reliable pipeline isn't just nice to have; it's essential. You can dig deeper into these trends by checking out the full research on data pipeline tools.

The bottom line? You have to treat your pipeline like a product that requires continuous improvement.

What to Watch: The Metrics That Really Matter

Good monitoring isn't about tracking everything; it's about tracking the right things. Focusing on a handful of core metrics will give you a clear, immediate sense of your pipeline's health without overwhelming you with noise.

Here’s where I always start:

- Data Latency: How long does it take for a record to get from A to B? This is the ultimate test of a "real-time" pipeline. If latency starts climbing, the value of the data plummets. I recommend setting up alerts for when latency creeps past an acceptable threshold for your use case, say, anything over five minutes.

- Throughput: What volume of data is moving through the pipe? I look at this in terms of records per second or megabytes per minute. A sudden dip is a classic sign of trouble—maybe a source system is lagging, or there's a bottleneck somewhere downstream.

- Error Rate: How many records are failing to process? This is your most obvious red flag. A spike in the error rate points directly to problems like data corruption, unexpected schema changes, or simple connectivity issues.

A Quick Tip from Experience: Don't just log these numbers in a file somewhere. Get them onto a dashboard. Using a tool like Grafana or Datadog turns abstract data points into a visual health check, making it incredibly easy to spot a negative trend before it becomes a full-blown crisis.

Smart Tweaks for Better Performance

Once you can see what's happening, you can start making things better. Optimization isn't about massive, risky overhauls. It's about making small, intelligent adjustments that deliver significant gains.

For example, partitioning your data in the destination warehouse is a game-changer. Simply organizing tables by date or a frequently queried ID can make queries run orders of magnitude faster.

Another trick I rely on is building intelligent retry logic into the pipeline. Instead of letting a transient network blip cause a total failure, you build in a mechanism that automatically tries the operation again a few times. This one small feature adds a ton of resilience and saves you from being paged in the middle of the night for a problem that would have fixed itself. That’s how you build a robust pipeline that lasts.

When you first start building data pipelines, a few questions always seem to come up. I’ve seen engineering teams grapple with these same challenges time and time again, so let's walk through the most common ones.

Getting these fundamentals straight from the beginning can save you from a world of headaches and costly rework down the line. It's all about building a system that actually solves your business problems, not just moves data around.

ETL or ELT? Which One Do I Choose?

This is the big architectural question that everyone asks. The truth is, the right answer really depends on your specific goals and the tech you have available.

Traditional ETL (Extract, Transform, Load) is what many of us started with. It's a solid choice when you have highly structured data and rigid compliance rules that demand data be cleaned before it ever touches your warehouse.

But these days, the modern ELT (Extract, Load, Transform) approach gives you so much more flexibility. You dump the raw data straight into a beast of a cloud data warehouse like Snowflake or BigQuery, then use its incredible processing power to run all your transformations. This is a far better fit for the high-volume, messy data streams we see today and lets you pivot quickly when business needs change.

How Do I Deal With Schema Changes?

Ah, schema drift—the silent killer of data pipelines. Someone adds a column or changes a data type in a source database, and suddenly, your entire downstream process shatters. If you're not ready for it, you're stuck with a tedious, reactive fire drill to fix everything.

This is exactly why modern Change Data Capture (CDC) platforms have become so indispensable.

A robust pipeline shouldn't just move data; it must adapt to it. Automated schema drift handling is a non-negotiable feature for any serious real-time data operation. It turns a potential crisis into a non-event.

Tools like Streamkap are built to solve this exact problem. They automatically spot schema changes at the source and push those updates to the destination without anyone needing to lift a finger. This means your data keeps flowing, even during routine database updates, saving your engineers countless hours of frustration.

What Are the Real Costs I Should Expect?

To manage your budget, you need to know where the money is actually going. When it comes to data pipelines, the costs typically break down into three main buckets:

- Infrastructure: This is the raw cost of your cloud compute and storage. Think about your AWS EC2 instances, S3 buckets, and your Snowflake compute credits.

- Tooling: These are the subscription fees for any platforms you're using. This could be your ingestion tool, a transformation service, or your monitoring platform.

- People: This is the big one, and it's the cost most people forget. It’s the salary hours your engineers spend building, babysitting, and fixing the pipeline.

This is where managed services really shine. They often deliver a massive return on investment simply by slashing the "people" cost, automating the most complex and time-consuming parts of the job.

Ready to build resilient, real-time data pipelines without the complexity? Streamkap uses CDC to stream data from your databases to your warehouse in milliseconds, with automated schema handling and fault tolerance built-in. See how Streamkap can simplify your data architecture.