A Guide to Database Replication Software

September 26, 2025

Ever wonder how major websites and applications stay online 24/7, even when things go wrong behind the scenes? A huge part of that magic is database replication.

At its simplest, database replication software is a smart, real-time photocopier for your data. It works tirelessly in the background to create and maintain live, synchronized copies of your database on different servers. This ensures your data is always available and up-to-date, making it the backbone of any serious high-availability system or disaster recovery plan.

Why Database Replication Is Essential

Picture your main database as the star performer on a single stage. If the lights go out on that stage, the show's over. Database replication, however, sets up several identical stages, each one perfectly in sync and ready to take the spotlight. If one stage fails, another one picks up the performance instantly. Your audience—the users—never even knows there was a problem.

This constant synchronization is what keeps modern applications ticking. It’s a safety net that protects against everything from a single server crash to a regional power outage. By keeping duplicate copies of data, businesses keep their services online, which protects revenue and, just as importantly, customer trust.

A key technology that makes this real-time sync possible is Change Data Capture (CDC). It’s the mechanism that identifies and captures changes as they happen. You can dive deeper into how this works in our guide to SQL change data capture.

This diagram gives you a great visual of the basic idea: a primary database (often called a Master) sends its updates to one or more replicas (Slaves).

This fundamental master-slave model is the starting point for building systems that are not only resilient but also faster, since you can spread the workload of read requests across multiple servers.

The Growing Demand for Replication

You don't just have to take our word for it—the market numbers tell the same story. The global database replication software market is currently valued at around USD 5.6 billion and is expected to more than double, hitting USD 12.3 billion by 2033.

This explosive growth is being fueled by the massive shift to cloud computing and the absolute necessity of keeping data consistent across geographically distributed systems.

At its core, database replication software is an insurance policy for your data. It transforms your infrastructure from a single point of failure into a resilient, distributed network capable of weathering unexpected disruptions.

So, what does this all mean for your business? It boils down to a few critical advantages:

- High Availability: This is the big one. It keeps your applications running around the clock by automatically failing over to a replica server if the primary one goes down. No more 3 a.m. panic calls.

- Improved Performance: By offloading read-heavy queries to the replica databases, you take a massive load off your primary server. This frees it up to handle the all-important write operations, making everything feel snappier for your users.

- Disaster Recovery: If your primary data center is hit by a flood, fire, or other catastrophe, having an up-to-date copy of your data sitting safely somewhere else is a business-saver.

A Look at the Core Replication Architectures

Before you can pick the right database replication software, you have to get a handle on the blueprints they're built on. These blueprints, or architectures, are really just the rules that govern how data moves between your main database and its copies. They have a massive impact on everything from system speed to how reliable your data is.

Let's break down the three most common models. Think of them like different ways a team might communicate—each has its own protocol for who gets to talk and who has to listen. Getting this right is fundamental to building a data environment that actually works for you.

The Master-Slave Model

This is the most common and probably the easiest to understand. The master-slave model works like a lead singer and their backup vocalists.

The lead singer—the master database—is the only one creating new melodies. In tech terms, it's the only one that can handle write operations (like adding or changing data).

The backup vocalists—the slave databases—just listen and repeat what the lead sings. They can't add their own lyrics. In the same way, slave databases get a continuous stream of changes from the master and just apply them. This setup is fantastic for spreading out the read workload. Your users can "listen" to any of the backup singers without ever distracting the lead, which keeps things running smoothly.

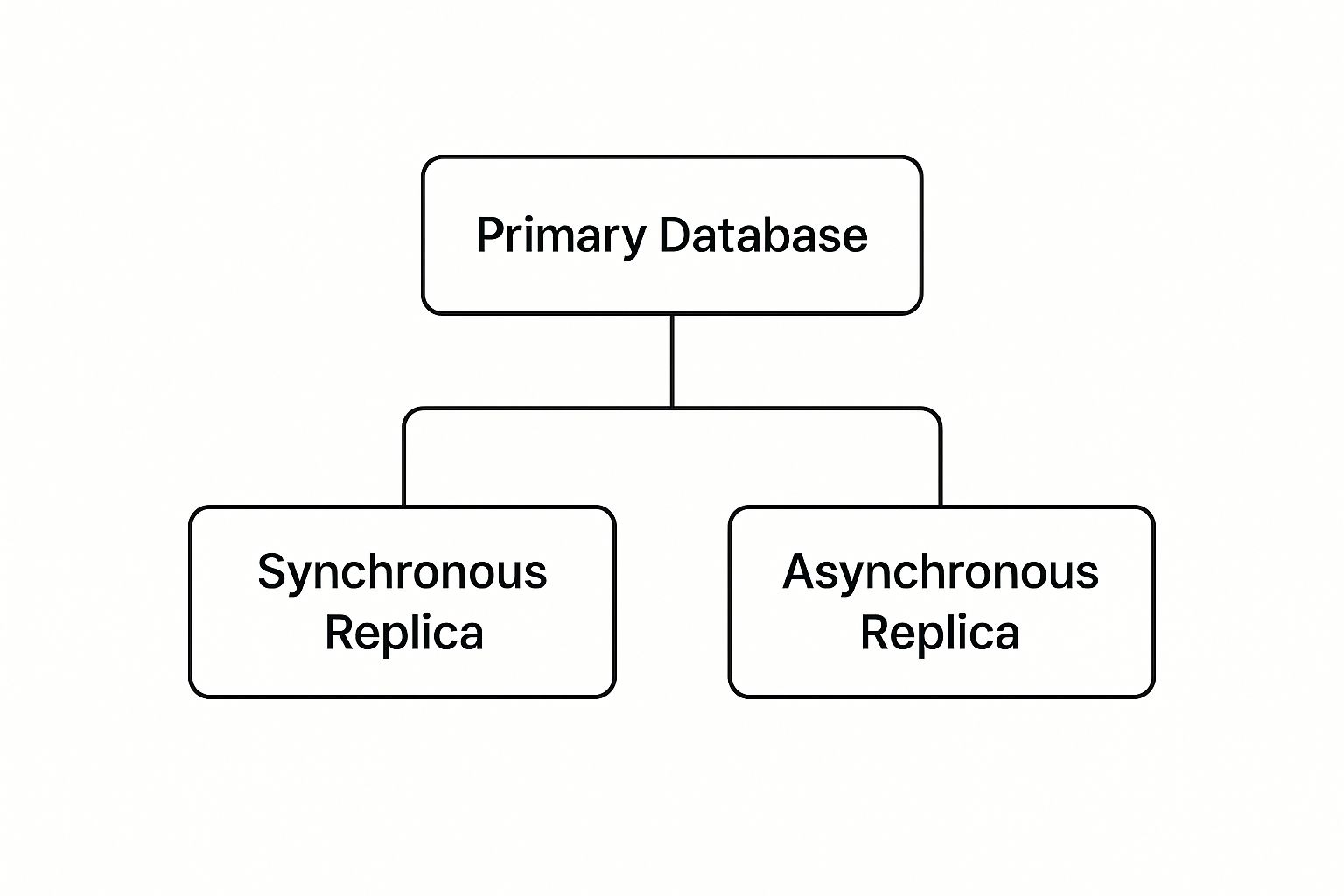

This image below illustrates how the primary database pushes updates out to its replicas. This can happen instantly or with a small, intentional delay.

You can see the difference between synchronous replicas, which confirm the change right away, and asynchronous ones, which catch up when they can. Deciding between those two is a major fork in the road for any replication strategy.

The Master-Master Model

Next up is the master-master model. Imagine two authors co-writing a book. Both have full authority to add new chapters, rewrite paragraphs, and make edits wherever they see fit.

In this scenario, both databases can accept write operations and are responsible for syncing their changes with each other. This is great for write availability; if one author goes on vacation, the other can keep the project moving forward without a hitch.

The real headache with a master-master setup is what happens when both authors try to edit the same sentence at the same time. Who wins? This is where you need smart replication software that can spot these conflicts and resolve them automatically before your data becomes a mess.

The Peer-to-Peer Model

Finally, we have the peer-to-peer model, which is completely decentralized. Picture a brainstorming session around a big table. Everyone has an equal voice, anyone can throw out an idea, and the group works together to keep the conversation coherent.

Every database node in this network is an equal—a peer. Each one can read and write data, and they all communicate constantly to stay in sync.

It's by far the most complex architecture to manage, but it’s also incredibly resilient. If one person leaves the table, the discussion just carries on. You’ll often see this model in globally distributed systems where you absolutely cannot afford downtime and need lightning-fast responses everywhere. The challenges in managing such complex, real-time data flows have led to some big shifts in thinking. To see a parallel, check out this guide on the evolution from Lambda to Kappa architecture, which tackles similar problems in data processing.

Comparing Key Replication Architectures

Choosing the right model really boils down to your specific needs for scalability, availability, and simplicity. This table lays out the core differences to help guide your decision.

Ultimately, the master-slave model offers simplicity, master-master provides write availability at the cost of complexity, and peer-to-peer delivers the ultimate in resilience for the most demanding applications.

Choosing Between Synchronous and Asynchronous Replication

When you're setting up database replication, one of the first and most important decisions you'll make is whether to go with a synchronous or asynchronous approach. This isn't just a minor technical setting; it's a fundamental choice that strikes a balance between how consistent your data is and how fast your application feels to users.

The main difference really boils down to timing. Think of it as a live phone call versus a text message. Each one has its place, but they work in completely different ways.

The Guarantee of Synchronous Replication

Synchronous replication is your live phone call. When a change is made to the main database, everything pauses. The system waits for an explicit "got it" confirmation from every single replica before it calls the transaction complete. The primary server essentially puts the whole process on hold until it's sure all copies are perfectly in sync.

This method gives you the strongest possible guarantee of data integrity. Because the application can't move forward until every replica acknowledges the change, you can achieve zero data loss—what's known in the industry as a zero Recovery Point Objective (RPO). If your primary server suddenly goes offline, you can be 100% confident that one of the replicas has an exact, up-to-the-millisecond copy ready to take over.

But that guarantee has a price: latency. That waiting period, even if it's just a fraction of a second, adds a delay to every write operation. For applications that need to feel instantaneous, this can be a dealbreaker.

Synchronous replication is the only option for systems where every single transaction is critical. We're talking about financial processing, e-commerce checkouts, and inventory management—anywhere a lost transaction could cause major problems.

The Speed of Asynchronous Replication

Now, let's look at the text message approach: asynchronous replication. The primary database sends the update to its replicas and immediately moves on to the next task. It doesn't wait for a reply. It simply fires off the data and trusts that the replicas will process it shortly.

This method is all about speed and performance. By not waiting for confirmation, the primary database keeps application latency to an absolute minimum. This is perfect for high-traffic systems where a snappy user experience is the top priority. From the user's perspective, things just happen faster.

The trade-off, of course, is a small but real risk of data loss. If the primary database fails right after it sends an update but before a replica has had a chance to apply it, that data could be lost for good. This creates a brief window where your data might not be perfectly consistent across all copies.

- Best for Performance-Critical Apps: This is the go-to for social media feeds, analytics dashboards, and content platforms where a slight data lag is an acceptable price for speed.

- Geographically Distributed Replicas: It’s also the only practical choice when your replicas are spread out across the globe. The network lag would make a synchronous setup painfully slow.

So, how do you choose? It all comes down to what your business can and cannot tolerate. If losing even one transaction is out of the question, you need synchronous replication. If your application's success hinges on maximum speed and can handle a tiny bit of data lag, then asynchronous is the clear winner.

What to Look For in Top-Tier Replication Software

Picking the right database replication tool can feel like a maze, but you can cut through the noise by zeroing in on a few critical features. It's not just about making a simple copy of your data. The best tools offer a whole suite of capabilities that keep your data consistent, give you a clear view of what’s happening, and elegantly handle the messiness of modern data systems.

Think of it like choosing a car. Sure, any car gets you from A to B. But a great one comes with a state-of-the-art navigation system, advanced safety features, and a dashboard that tells you exactly how it's performing. The same goes for replication software; the premium options turn a basic copy-paste job into a rock-solid, automated, and secure part of your infrastructure.

For any business that relies on its data for high availability, sharp analytics, or a solid disaster recovery plan, these features aren't just nice-to-haves—they're deal-breakers.

Broad Database and Platform Support

First and foremost, you need a tool that can play nicely with all the different databases you use. This is often called heterogeneous replication, and it's a huge deal. Your company might be running MySQL for its website, SQL Server for its back-office systems, and PostgreSQL for its analytics team. A flexible tool can move data between all of them without breaking a sweat.

This kind of versatility is your ticket to avoiding vendor lock-in and making sure your data strategy can evolve. It means you can funnel data from all your different operational systems into a central data warehouse without having to write a mountain of custom, brittle scripts.

While big names like IBM and Oracle hold a massive chunk of the market—over 40% combined—the rest is filled with specialized companies pushing the envelope on things like cloud integration and multi-platform support. This gives you more choice than ever. If you want to dig deeper, you can discover more insights about the replication software market on MarketReportAnalytics.com.

Real-Time Data Capture and Synchronization

In today's world, waiting for a nightly batch job to update your data is a non-starter. The best replication software uses a technique called Change Data Capture (CDC) to spot and copy changes the instant they happen. This means your replica databases and analytics dashboards are always working with information that's fresh out of the oven.

This real-time capability is essential for a few key reasons:

- Live Dashboards: It feeds your business intelligence tools with up-to-the-second data so you can make decisions based on what's happening right now.

- Instant Failover: It ensures your backup replica is a perfect mirror of your primary, ready to take over immediately if the main database goes down.

- Event-Driven Systems: It can trigger other processes across your company the moment a specific event happens in the database.

A replication tool without real-time CDC is like a news feed that only shows you yesterday's headlines. You get the information, but it's too late to act on it.

Automated Conflict Resolution

When you have a setup where multiple databases can be written to at the same time (like in a master-master or peer-to-peer architecture), data conflicts are bound to happen. Imagine two people in different offices trying to update the same customer record at the exact same moment. Without a smart way to handle that, your data can become a corrupted, inconsistent mess.

This is where the leading replication tools really shine. They come with built-in logic to automatically detect and resolve these conflicts. You can set up rules to decide which change wins—maybe the one with the latest timestamp, or the one from a specific office, or based on some other business rule. This keeps your data clean and trustworthy without anyone having to jump in and fix things by hand. For any distributed system, this is an absolute must-have.

How Database Replication Solves Real Business Problems

Database replication isn't just a technical neat-trick; it’s a core business strategy that tackles some of the toughest, highest-stakes problems modern companies face. By creating and maintaining live copies of your data, you can build incredibly resilient and high-performance systems that have a direct impact on your bottom line and customer loyalty.

Let's break down how this technology works in the real world. From making sure a website never crashes during a massive sales event to speeding up content delivery for users on the other side of the planet, replication is the unsung hero keeping businesses running smoothly.

Achieving Unbreakable Uptime

Picture an e-commerce site on Black Friday. Millions of users are trying to check out at once, and even a single minute of downtime could mean a fortune in lost sales and shattered customer trust. This is where replication truly proves its worth.

Using a master-slave or master-master setup, the company can keep a "hot standby" replica humming along in the background. If the main database server buckles under the pressure, traffic is instantly and automatically rerouted to the fully synchronized replica. From the customer's perspective, nothing ever went wrong. The shopping cart works, the sale goes through, and the business keeps running.

This is why one of the biggest drivers for replication is business continuity. It's a cornerstone of any solid disaster recovery plan, turning a potential catastrophe into a minor hiccup. Check out these disaster recovery plan examples to see how it fits into a larger strategy.

The core value here is simple: replication transforms your database from a single point of failure into a highly available system, safeguarding revenue and reputation during critical business moments.

Enhancing Global User Experience

Now, think about a global media company streaming video to viewers everywhere. If a user in Tokyo has to pull data from a server in New York, they're going to face frustrating lag and buffering. Nobody likes that. Replication solves this problem elegantly by distributing read-only copies of the database to servers around the world.

The company can set up replica databases in data centers across Asia, Europe, and North America. When someone wants to watch a video, their request is automatically sent to the server closest to them. This dramatically cuts down latency, leading to faster load times and a much smoother viewing experience—essential for keeping a global audience hooked.

This need for snappy, responsive applications worldwide is a huge reason the data replication market, valued at USD 5.2 billion, is expected to more than double to USD 11.8 billion by 2032.

Enabling Modern Data Strategies

Beyond uptime and speed, replication also opens the door to other crucial business initiatives without messing with your live systems.

- Zero-Downtime Migrations: In the past, moving to the cloud or upgrading a database meant scheduling a painful maintenance window. With replication, you simply sync your live production database to the new one. When you're ready, you flip the switch. The entire migration happens instantly, with zero interruption to your users.

- Real-Time Analytics: Firing off complex analytical queries against your live production database can slow it to a crawl, frustrating your customers. The solution? Replicate that data to a separate analytics warehouse. Your data science team gets to work with fresh, up-to-the-minute information without ever affecting your application's performance.

Common Questions About Database Replication

As you start digging into database replication software, a few questions tend to pop up again and again. It's a powerful concept, but it's also easy to get lost in the technical jargon or mix it up with other data management tasks. Getting these common points cleared up is the first step toward making a smart decision and building a solid data architecture.

Let's walk through some of the most frequent questions and give you some clear, straightforward answers. Nailing down these distinctions will help you see exactly where replication fits into your big-picture strategy for data availability, performance, and disaster recovery.

What Is the Difference Between Database Replication and a Backup?

This is probably the most common point of confusion. People often think replication and backups are the same thing, but they solve completely different problems. Here’s a simple way to think about it: replication is all about business continuity, while a backup is for disaster recovery.

A backup is just a snapshot of your data at a specific moment. It’s like taking a photo of your database every night at midnight. If something truly terrible happens—say, a rogue script corrupts your data or you get hit with ransomware—you can restore your system from that last known good photo. The catch? The restore process can be slow, and you'll lose every single piece of data created since that snapshot was taken.

Replication, on the other hand, is a live, continuously updated copy of your database. It’s less like a photo and more like a live video stream. If your main database goes down, you can failover to the replica almost instantly with little to no data loss. This keeps your applications up and running, which is what business continuity is all about.

Key Takeaway: Backups are your "go back in time" button for major data loss events. Replication is your "keep the lights on" switch, letting you failover to a live, up-to-date copy in real-time.

How Does Replication Affect Primary Database Performance?

The performance hit from replication really boils down to which method you use: synchronous or asynchronous.

With asynchronous replication, the impact on your primary database is usually very low. It works by sending data changes to the replica and then immediately moving on without waiting for a confirmation. This approach puts your application's speed first, making it perfect for high-traffic systems where a tiny bit of data lag is an acceptable trade-off.

Synchronous replication, however, can introduce noticeable latency. Because it has to wait for a "got it" signal from every replica before finalizing a transaction, it adds a small delay to every single write operation. This guarantees zero data loss, but it can definitely slow down your application.

The good news is that modern replication software is pretty smart about this. Many tools minimize the overhead by reading changes directly from the database’s transaction logs instead of constantly querying the active tables. This technique, called log-based Change Data Capture (CDC), is much less intrusive and dramatically reduces the performance drag on your source system.

Can I Replicate Data Between Different Database Types?

Yes, you absolutely can! This is called heterogeneous replication, and it’s one of the most powerful features of modern replication tools. It lets you move data between totally different database systems—like from an on-premise Oracle database to a cloud-based PostgreSQL instance, or from MySQL to a data warehouse like Snowflake.

This capability is a game-changer for a few key reasons:

- Data Warehousing: You can pull data from all your different operational databases into one central place for analytics.

- Cloud Migration: It’s the secret to migrating from old-school, on-premise databases to the cloud without any downtime.

- Microservices: Different teams can pick the best database for their specific service, but you can still keep all the data in sync across the entire organization.

Pulling this off usually requires a tool that can handle data transformations on the fly, because data types and schemas can be wildly different from one database to another. Tools built for real-time data movement, especially those based on Debezium, are great for this kind of work. To get a better handle on the tech, take a look at our guide on what Debezium is and how it works.

Ready to implement real-time, low-latency data replication without the complexity? Streamkap uses Change Data Capture to move data from databases like MySQL, Postgres, and SQL Server to your data warehouse in seconds, not hours. See how you can replace brittle batch ETL jobs and build a truly event-driven architecture. Start your free trial today!