A Guide to Change Data Capture in SQL Server

Explore our complete guide on Change Data Capture in SQL Server. Learn how CDC works, how to configure it, and real-world use cases for real-time data.

Change Data Capture in SQL Server is a built-in feature that records every insert, update, and delete action applied to a table. Think of it as a detailed transaction log for your data, capturing the what, how, and when of every change. This historical data is then made available for other systems without getting in the way of your database's performance.

The Power of Tracking Data History

Imagine having a dedicated court stenographer for your database, meticulously recording every single modification the moment it happens. That's pretty much what Change Data Capture (CDC) in SQL Server does. It’s not just about knowing the current state of your data; it’s about understanding its entire journey over time. This gives you a reliable, ordered stream of changes without bogging down your primary database operations.

Before CDC came along, developers had to get creative with clumsy, inefficient methods like triggers or timestamp columns to track changes. These approaches often backfired, slowing down performance, locking up tables, and creating a tangled mess of dependencies. CDC, on the other hand, works asynchronously in the background. It simply reads the database's transaction log—the same journal SQL Server uses for recovery—to spot and record changes. This means the impact on your production workload is almost unnoticeable.

Why Is Capturing Changes Important?

Knowing your data's history is absolutely critical for modern applications. Without a solid way to track modifications, businesses miss out on key insights and end up fighting with data consistency issues across different systems. Change Data Capture solves these problems by unlocking several important capabilities:

- Real-Time Data Synchronization: Keep data warehouses, caches, and search indexes perfectly in sync with your operational database.

- Robust Auditing and Compliance: Maintain a complete, verifiable history of all data modifications, which is a lifesaver for meeting regulatory requirements.

- Event-Driven Architectures: Feed changes into messaging systems like Apache Kafka to kick off downstream processes and build responsive microservices.

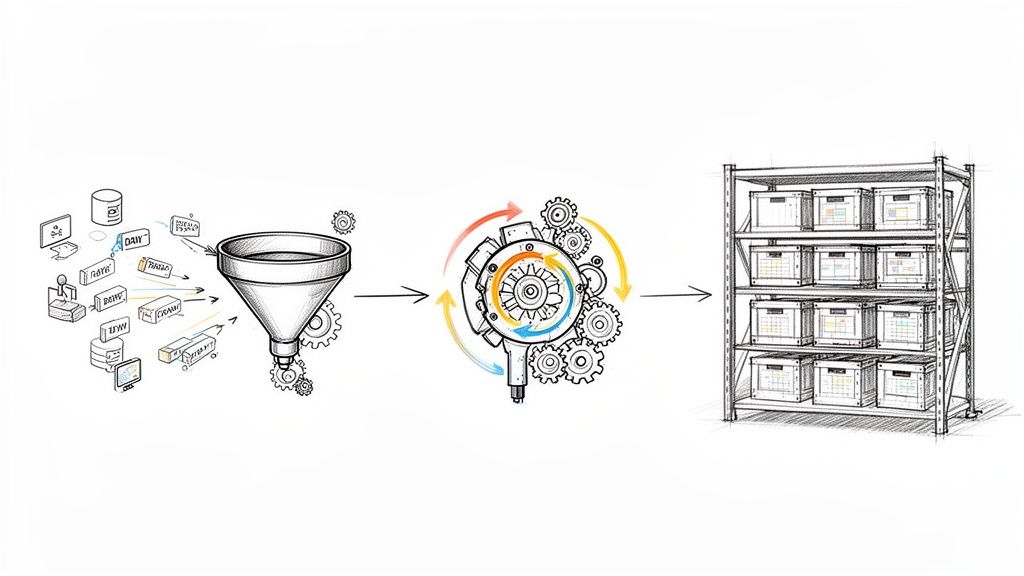

- Simplified Data Integration: Say goodbye to nightly batch ETL (Extract, Transform, Load) jobs by streaming data changes as soon as they happen.

At its core, Change Data Capture turns your database from a static repository into a dynamic source of events. It allows other applications to react instantly to data changes, paving the way for more intelligent and integrated systems.

Here's a quick look at the key features that make CDC so effective:

SQL Server Change Data Capture Key Features

This table summarizes the core functionalities and benefits that SQL Server CDC provides for modern data management.

FeatureDescriptionPrimary BenefitAsynchronous CaptureReads changes from the transaction log after they are committed, operating independently of the source transaction.Minimal Performance Impact on the production database, avoiding latency and contention.Ordered Change StreamDelivers change data in the same order that the transactions were committed to the source database.Transactional Consistency for downstream systems, ensuring data integrity.DML Change TrackingCaptures INSERT, UPDATE, and DELETE operations, providing both the old and new values for updates.Complete Data History for auditing, replication, and historical analysis.Schema Evolution SupportAutomatically handles DDL changes (like adding or dropping a column) to tracked source tables without interrupting the capture process.Resilience and Low Maintenance in evolving application environments.Flexible ConsumptionExposes change data through table-valued functions (TVFs), allowing consumers to query for changes within specific time ranges.Easy Integration with a wide range of tools and custom applications.

These features work together to provide a robust and low-impact solution for tracking data modifications right at the source.

A Foundational Technology for Modern Data

The adoption of CDC has exploded right alongside the rise in data volumes and the big shift from old-school batch processing to real-time data streaming. Microsoft first introduced it in SQL Server 2008, and it has since become a cornerstone for companies needing immediate data integration and analytics.

Its inclusion in Azure SQL Database and Managed Instances just goes to show how central change data capture in sql server has become, especially in scalable cloud setups. You can learn more about the growth of the SQL Server transformation market on futuremarketinsights.com. This whole evolution points to a much broader need for systems that can handle data with both speed and accuracy.

How SQL Server CDC Works Behind the Scenes

To really get a feel for what makes change data capture in SQL Server so effective, you have to pop the hood and see what’s going on. It’s not magic—it's a clever, asynchronous process designed to work alongside the database's normal operations without getting in the way. Think of it as a silent, reliable observer.

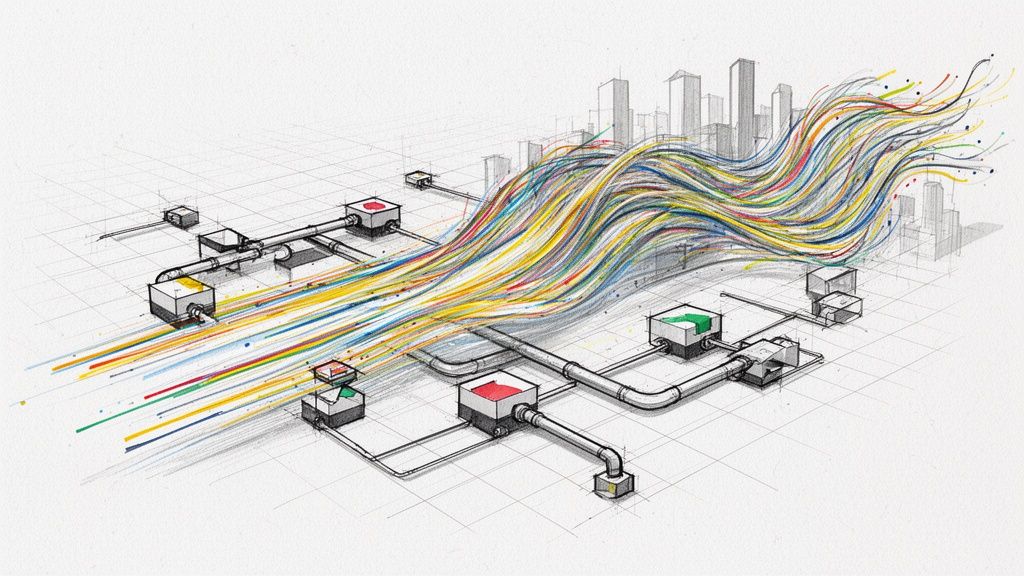

The whole operation hinges on the SQL Server transaction log. This log is basically the database's diary, where every single transaction—every insert, update, and delete—gets written down before it even touches the actual data files. CDC smartly taps into this existing system, using the transaction log as the definitive source for every change that happens.

This approach is incredibly efficient. Instead of bogging down tables with triggers or constantly querying them to see what's new, CDC simply reads from a log that SQL Server already meticulously maintains for its own recovery and consistency needs. That’s the secret to its low-overhead design.

The Capture and Cleanup Jobs

Once you flip the switch and enable CDC on a table, SQL Server automatically creates two essential SQL Server Agent jobs. These jobs are the workhorses that manage the whole process.

- The Capture Job: This job's sole purpose is to scan the transaction log. When it spots a committed transaction for a table you're tracking, it pulls out the change data. It then formats this data and neatly stores it in a special change table. This new table mirrors your original table's structure but adds extra metadata columns to describe the change.

- The Cleanup Job: A complete history of changes is great, but you can't just let it grow forever. The cleanup job runs on a schedule to clear out old data from the change tables, based on a retention policy you set. This keeps things tidy and prevents the change tables from ballooning in size.

These two jobs are a tag team, creating a self-managing system that tracks and maintains a history of every modification.

This visual breaks down the flow: a transaction occurs, the capture process reads it from the log, and the change is then stored in its own dedicated table, ready to be queried.

This entire process is decoupled from the original transaction. That’s a critical detail, as it means the source database can keep humming along without performance hits.

Understanding Log Sequence Numbers

So, how does CDC keep everything in perfect order? The answer is Log Sequence Numbers (LSNs). You can think of an LSN as a unique, ever-increasing serial number stamped on every single record in the transaction log. When the capture job processes a change, it also records the LSN tied to that transaction.

By using LSNs, CDC guarantees that changes are delivered to downstream systems in the exact same order they were committed to the database. This chronological integrity is vital for maintaining transactional consistency in data warehouses, caches, and other replicas.

When it's time to query the CDC data, you use LSN ranges to ask for all the changes that happened between "point A" and "point B." It's a precise and foolproof way to consume the stream of changes without ever missing an update or processing a duplicate.

It's this combination of reading the log asynchronously, using dedicated agent jobs, and ordering everything with LSNs that creates such a robust and efficient architecture. This behind-the-scenes engineering is what makes change data capture in SQL Server a rock-solid foundation for building modern, real-time data pipelines.

Getting CDC Up and Running in Your Database

Putting change data capture in SQL Server into action is pretty straightforward, but you have to do it in the right order. It’s a two-step dance: first, you switch CDC on for the entire database, and then you tell it which specific tables to watch. This lets you be very precise, so you're only tracking changes that matter and not creating unnecessary overhead.

Before you even start, there's one non-negotiable prerequisite: SQL Server Agent must be running. Think of the Agent as the engine that drives CDC. It's what runs the capture and cleanup jobs in the background. If it's not running, CDC is dead in the water—no changes get pulled from the transaction log, and nothing gets written to your change tables.

Step 1: Flip the Switch on the Database

First things first, you need to enable CDC at the database level. This command doesn't start tracking your tables just yet. Instead, it lays the groundwork by creating the necessary system tables, schemas, and other metadata that CDC needs to operate. It’s like prepping the kitchen before you start cooking.

You'll use a simple T-SQL stored procedure, sys.sp_cdc_enable_db, for this. Keep in mind, you'll need sysadmin rights to run it.

-- Make sure you're in the right database

USE YourDatabaseName;

GO

-- Run the stored procedure to turn on CDC

EXEC sys.sp_cdc_enable_db;

GO

Once you run this, SQL Server creates a cdc schema and a few system tables like cdc.change_tables and cdc.captured_columns. These tables are where CDC will keep track of which tables you've enabled for tracking. This one-time setup is the foundation for everything that follows.

Step 2: Target a Specific Table

Now that the database is ready, you can get granular and pick the exact tables you want to monitor. This is where you tell SQL Server, "Hey, watch this table for me." To do this, you'll use another stored procedure, sys.sp_cdc_enable_table. This is the command that actually creates the change table for your source table and sets up the specific capture and cleanup jobs for it.

This procedure has a few key parameters you'll need to define:

- @source_schema: The schema your table lives in (e.g., 'dbo').

- @source_name: The name of the table you want to track.

- @role_name: A database role that gates access to the change data. Only members of this role (and sysadmins) can see the change table. If you set this to

NULL, anyone can query it. - @supports_net_changes: If you set this to

1, it creates an extra function that makes it easier to query for just the net changes, which can be a real time-saver.

Let's say we want to track an Orders table inside a Sales schema. Here’s what that would look like:

EXEC sys.sp_cdc_enable_table

@source_schema = N'Sales',

@source_name = N'Orders',

@role_name = N'CDCAccessRole',

@supports_net_changes = 1;

GO

Pro Tip: I always recommend setting a specific @role_name instead of leaving it NULL. It's a simple way to build a security fence around your change data, making sure only authorized people or applications can get their hands on what could be sensitive historical information.

Step 3: Check Your Work

After running the commands, it's always smart to double-check that everything is configured correctly. If it worked, you should see a few new objects pop up automatically.

Here's what to look for to confirm your setup is good to go:

- A New Change Table: In your system tables, you should find a new table named

cdc.YourSchema_YourTable_CT. In our example, this would becdc.Sales_Orders_CT. - New SQL Server Agent Jobs: Fire up SQL Server Agent and expand the "Jobs" folder. You'll find two new jobs named something like

cdc.YourDatabaseName_captureandcdc.YourDatabaseName_cleanup.

Seeing these components means your change data capture in SQL Server setup is live. The capture job is now actively scanning the transaction log, and any inserts, updates, or deletes on the Sales.Orders table will be faithfully recorded in its change table.

If you're operating in the cloud, the process is very similar. We've put together a detailed walkthrough in our guide to Azure SQL Database Change Data Capture.

How to Query and Use Your Change Data

Once you have change data capture in SQL Server up and running, you’ve basically created a time machine for your data. So, how do you use it? The trick is that you don't query your original source table. Instead, you'll tap directly into the special change tables that CDC generates, using a few handy functions to translate the raw log of inserts, updates, and deletes into useful information.

The real key to unlocking this data is getting familiar with the metadata columns CDC automatically bolts onto every change table. Think of these columns as your guide—they tell the story of how each row has evolved over time, giving you context that goes way beyond just the final data values.

Interpreting the Change Table Metadata

Every single change table (which you'll find named like cdc.schema_table_CT) comes with a standard set of metadata columns. Getting a handle on these is the first step to making any sense of your captured data.

__$start_lsn: This is the Log Sequence Number (LSN) from the transaction that caused the change. It's a unique marker that pinpoints the exact moment the change was committed to the database.__$end_lsn: This is reserved for future use and will almost always beNULL.__$seqval: If multiple changes happen within the same transaction, this column puts them in the correct order.__$operation: This is arguably the most important one. It tells you exactly what kind of change happened.__$update_mask: ForUPDATEoperations, this is a bitmask that cleverly shows you exactly which columns were changed.

The __$operation column is your main navigation tool, using simple codes to spell out the action:

- 1 = Delete: The row was deleted.

- 2 = Insert: A new row was created.

- 3 = Update (Old Values): This is a snapshot of the row's data before the update.

- 4 = Update (New Values): And this is what the row looked like after the update.

This before-and-after picture for every single modification is incredibly powerful. It's exactly what you need for detailed auditing or recreating a sequence of events.

Using CDC Functions to Retrieve Changes

Thankfully, you don't have to write complex queries against the _CT tables and wrangle LSNs by hand. SQL Server gives us a couple of powerful table-valued functions that do the heavy lifting for you: cdc.fn_cdc_get_all_changes_<capture_instance> and cdc.fn_cdc_get_net_changes_<capture_instance>.

These functions are your go-to tools for pulling change data. get_all_changes gives you the full, unabridged history, while get_net_changes just gives you the final, summarized version of what changed.

Choosing which one to use really boils down to what you're trying to achieve. Do you need a granular, step-by-step audit trail? Or just an efficient summary of what a row looks like now?

To help you decide, here’s a quick comparison of the two main functions for querying CDC data.

Choosing the Right CDC Query Function

This table breaks down when to use cdc.fn_cdc_get_all_changes versus cdc.fn_cdc_get_net_changes, helping you pick the right tool for the job.

Function TypeBest ForExample Use CasePerformance ConsiderationAll ChangesDetailed auditing, streaming every single event to a message queue, or replicating a perfect, transaction-by-transaction history.You're feeding a real-time fraud detection system where every intermediate step of a transaction could be significant.Can return many rows for a single source row if it was updated multiple times in the query window.Net ChangesSynchronizing a data warehouse, updating a search index, or refreshing a cache where only the final state of the row matters.You're updating a customer dimension table in a data warehouse. You only care about their latest address, not the five previous ones.Much more efficient, as it collapses multiple updates into a single "net change" row for each source row.

Once you get comfortable with these query functions, you can build incredibly robust data pipelines. You can feed data warehouses, keep search indexes fresh, or trigger alerts in other applications—all by turning the raw output of change data capture in SQL Server into a powerful, actionable asset.

Real-World Use Cases for Change Data Capture

Knowing how change data capture in SQL Server works is one thing, but seeing it solve real-world problems is where the lightbulb really goes on. Companies in just about every industry are using CDC to build faster, more accurate, and more resilient data pipelines. It's the secret sauce behind modern architectures that can't afford to wait for stale data.

From keeping a web of microservices in sync to feeding live analytics dashboards, the applications are incredibly practical. Let's dig into a few of the most common ways businesses are putting CDC to work right now.

Real-Time Analytics and Business Intelligence

Probably the most popular use for CDC is fueling business intelligence platforms. A classic application is powering Business Intelligence and Power BI tools, where having the latest data is non-negotiable for making sound decisions. Instead of relying on slow, overnight batch jobs to refresh a data warehouse, CDC streams changes as they occur.

What this means for the business is that sales dashboards, financial reports, and operational metrics are a true reflection of what's happening now. A retailer, for example, can watch sales performance by the minute, giving managers the power to react instantly to a hot-selling product or a sudden dip in inventory.

Data Synchronization and Microservices

In modern microservice architectures, you often have several distinct services that all need a consistent view of the same data. Trying to keep everything in sync can quickly become a nightmare. This is where CDC offers a surprisingly elegant solution, broadcasting changes from the source database for other services to consume.

Picture an e-commerce platform with separate services for inventory, shipping, and customer notifications. When a customer places an order, CDC captures that change in the Orders table. This single event can then trigger a cascade of actions:

- The inventory service grabs the event and immediately decrements the stock count.

- The shipping service sees the new order and kicks off the fulfillment workflow.

- The notification service uses it to send the customer a confirmation email.

This event-driven pattern keeps all your services loosely coupled but perfectly in sync, without building brittle, direct integrations between them.

Auditing and Compliance

For businesses in finance or healthcare, keeping a detailed, verifiable history of every data change isn't just a "nice-to-have"—it's a strict legal requirement. CDC essentially functions as a perfect, built-in audit log right out of the box.

By capturing every INSERT, UPDATE, and DELETE, along with crucial metadata like who made the change and when, an organization can easily reconstruct the entire history of any record. This creates an undeniable trail for compliance auditors and security teams.

A bank, for instance, can leverage CDC to track every single change to a customer's account balance, providing a rock-solid ledger for regulatory reporting and fraud investigations. If you're curious about building this kind of pipeline, you can see a practical example in our guide on how to stream data from RDS SQL Server.

Driven by this intense need for real-time data, the global market for CDC tools is growing fast. The shift to the cloud and the sheer volume of data being generated are pouring fuel on the fire. With some projections suggesting that real-time data will make up 30% of all data by 2025, CDC is moving from a niche tool to a must-have for any competitive business.

Best Practices and Common Pitfalls to Avoid

Getting change data capture in SQL Server up and running is one thing. Keeping it humming along smoothly in a live production environment? That's a whole different ballgame. It really comes down to thoughtful planning to make sure your CDC process stays stable, efficient, and doesn't morph into a maintenance nightmare.

These aren't just theoretical tips; they're hard-won lessons from the field. By thinking ahead about storage, monitoring, and inevitable schema changes, you can dodge the most common bullets and build a data pipeline you can actually rely on.

Proactively Manage Storage and Retention

One of the classic mistakes I see is letting the change tables grow wild. If you don't have a solid retention policy, these tables will just keep expanding, gobbling up disk space, slowing down your server, and bloating your storage bill. The default cleanup job is there to help, but you can't just set it and forget it.

- Set a Realistic Retention Period: Be honest about how long you really need to keep change data. Figure out the longest recovery window your downstream systems might need and set the cleanup job accordingly. A 72-hour window is a pretty common and sensible place to start.

- Monitor Disk Usage: Keep a close watch on the filegroup where your change tables live. If you see sudden, unexpected growth, it’s a big red flag that the cleanup job might be failing or just can't keep up.

- Consider a Separate Filegroup: For your busiest tables, it's often a smart move to put their change tables on a completely separate filegroup. This can really help with I/O performance and makes managing the storage much more straightforward.

Monitor Latency and Job Health

A CDC pipeline is all about speed. If the capture job starts lagging, the delay between a change happening in the source and it appearing downstream can stretch out, which kind of defeats the whole purpose of having a near real-time stream.

A healthy CDC implementation is a monitored one. You absolutely have to keep tabs on the SQL Server Agent jobs and the capture latency. If you don't, you're flying blind, and silent failures are almost guaranteed.

Make it a habit to check the status of the capture and cleanup jobs in SQL Server Agent. For a deeper look, you can query the sys.dm_cdc_log_scan_sessions dynamic management view to see exactly how far the capture process is trailing the live transaction log. Setting up alerts for high latency or failed jobs isn't just a good idea—it's essential.

For a less hands-on approach, you might want to check out our guide on how to implement change data capture without complexity.

Plan for Schema Changes and Initial Loads

Handling schema changes on a tracked table is a huge operational hurdle. CDC won't magically propagate your ALTER TABLE statements. The reality is you have to manually disable CDC on the table, make your schema change, and then re-enable it. This creates a whole new capture instance and means you have to coordinate carefully with anyone consuming that data.

And please, don't forget about the initial data load. When you first enable CDC, it only captures what happens from that point forward. You still need a plan to get the full snapshot of existing data into your target system. Overlooking this initial synchronization is a surprisingly common misstep that can bring a project to a screeching halt.

Common Questions About SQL Server CDC

When you start digging into change data capture in SQL Server, a few practical questions almost always come up, especially around performance and day-to-day management. Let's tackle some of the most common ones so you can go in with your eyes open.

How Much of a Performance Hit Does CDC Cause?

You'll be happy to hear the performance impact is usually pretty small. This is because CDC is designed to work asynchronously. It doesn't interfere with your live transactions; instead, it reads changes from the transaction log after they've already been committed.

The main resource cost comes from the capture job itself, which uses some CPU and I/O to scan the log and write to the change tables. For systems with a massive number of transactions, you’ll want to keep an eye on capture latency and make sure your disk system can keep up with the extra writes.

What Happens When I Need to Change a Table’s Schema?

This is a big one. CDC does not automatically handle schema changes like adding or dropping a column. If you need to make a DDL change, you have to follow a specific process.

The steps are:

- Disable CDC on the specific table.

- Make your schema change (e.g.,

ALTER TABLE). - Re-enable CDC on that same table.

This creates a brand new capture instance and a fresh change table with the new column structure. It's a crucial operational detail you need to plan for, ideally during a maintenance window, to prevent breaking any downstream data pipelines that expect the old schema.

Don't treat schema evolution as an afterthought. It's a core part of managing any CDC setup. Forgetting this manual process is a classic mistake that can bring your data pipelines to a halt.

Is CDC Included in Every Version of SQL Server?

No, Change Data Capture is considered a premium feature. You’ll find it in the Enterprise, Developer, and Evaluation editions of SQL Server. It is not available in the Standard or Express editions.

The good news for cloud users is that this powerful feature comes standard with both Azure SQL Database and Azure SQL Managed Instance, making it a go-to choice for modern cloud architectures.

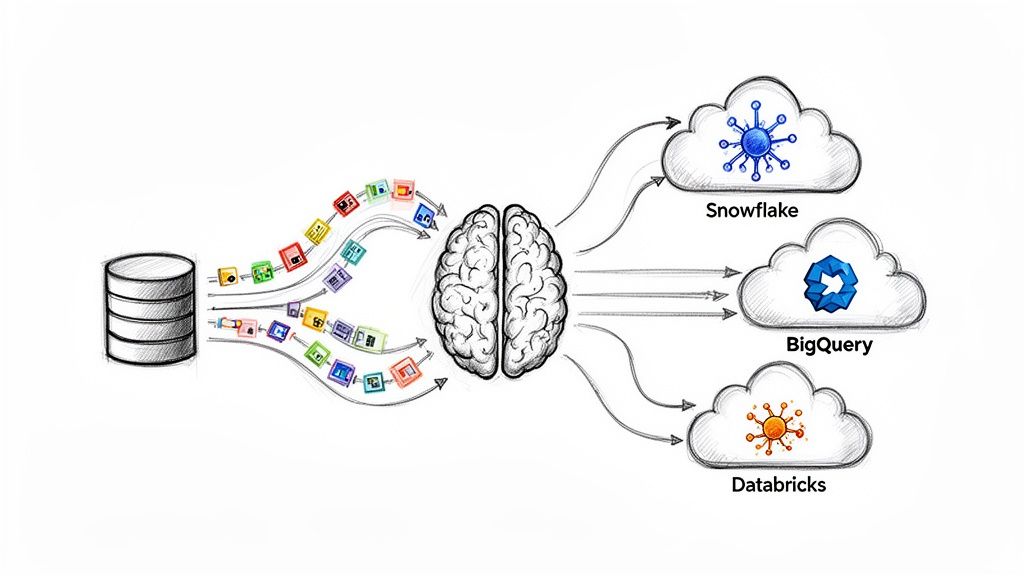

Ready to build real-time data pipelines without the operational headaches? Streamkap uses CDC to stream data from sources like SQL Server to destinations like Snowflake and Databricks in minutes, not months. Start your free trial today and see how easy real-time can be. Learn more at https://streamkap.com.