Zero Ops Data Streaming

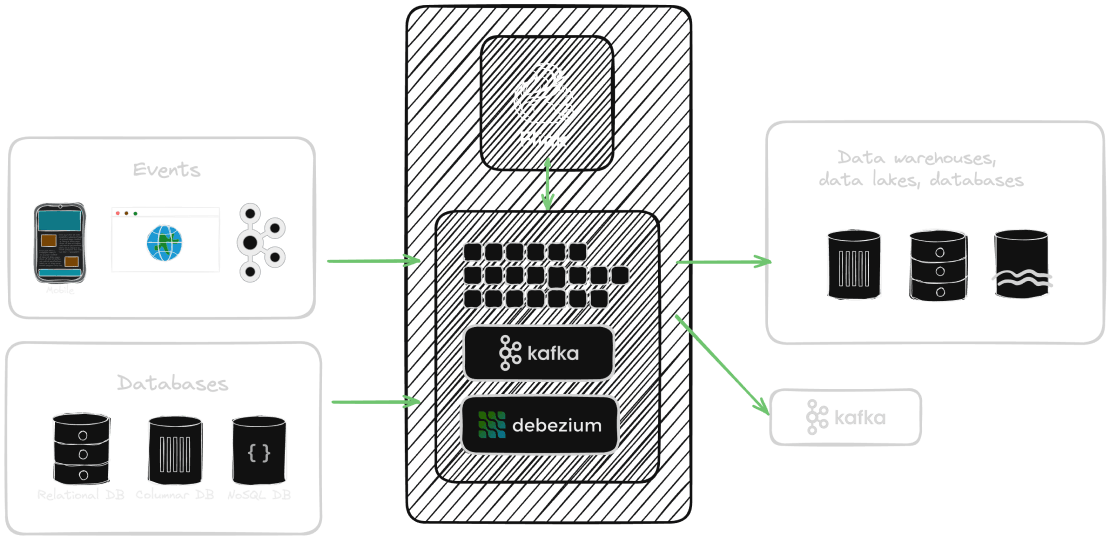

Streamkap is the fastest way to build streaming applications

Power real-time AI & analytics with sub-second CDC & stream processing.

Kafka & Flink without the headaches

Trusted by data teams at

CAPABILITIES

Everything You Need for Real-Time Data

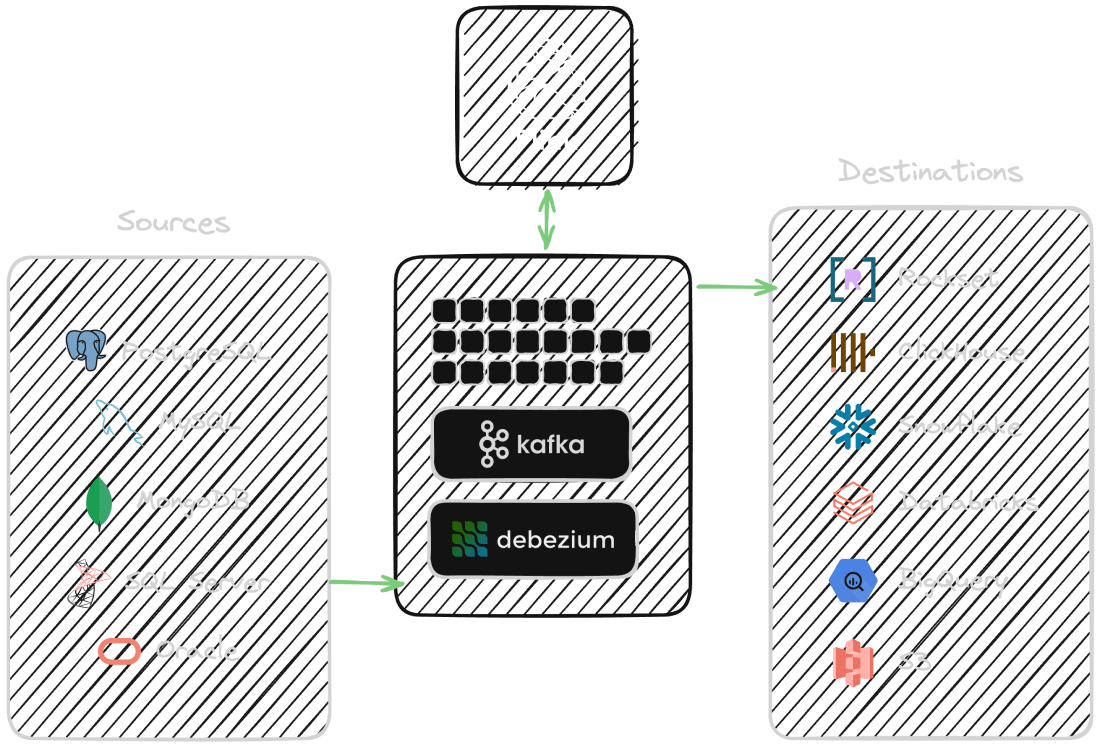

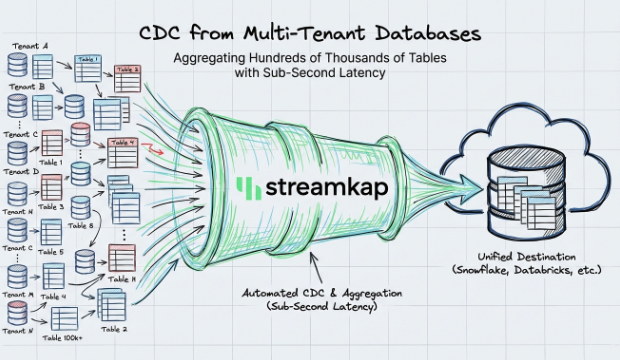

Change Data Capture

Capture every database change in real time with log-based CDC: no polling and minimal impact on the source.

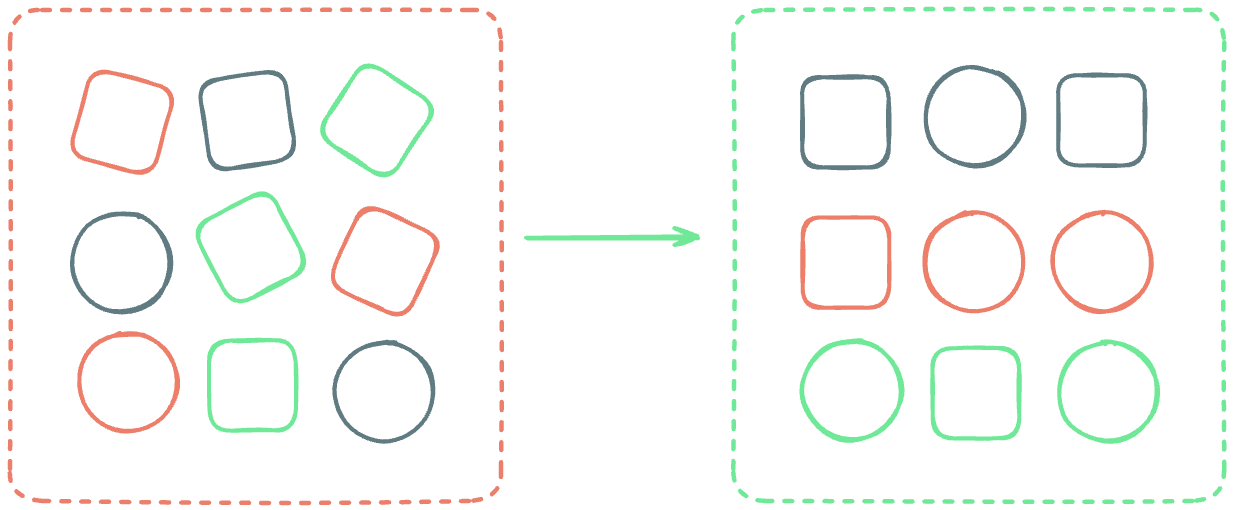

Stream Processing

Transform, filter, and enrich data in-flight with SQL, Python, or JavaScript.

Monitoring & Alerts

Full observability with metrics, lag tracking, and custom alerts, so you can run in production with confidence.

Enterprise Security

SOC 2, HIPAA, GDPR, and PCI-DSS compliant. Deploy in your cloud or ours with full control over your data.

Enterprise Scale

Proven at scale with millions of tables and a multi-tenant architecture. One platform that grows with you.