<--- Back to all resources

What is data latency: what is data latency and its impact on your systems

Uncover what is data latency, its causes, and practical steps to measure and reduce it for faster, more reliable performance.

In the simplest terms, data latency is the delay between an action and a reaction in a digital system. It’s the time it takes for a piece of data to get from point A to point B, ready for use. Think of it as the digital equivalent of waiting.

Understanding Data Latency

Let’s use a real-world analogy. Say you order a package online. The total time from you hitting the ‘buy’ button to the package landing on your doorstep is the latency. This isn’t one single delay; it’s a collection of them—the time it takes the warehouse to process your order, the time to package it, and the actual transit time in the delivery truck.

Data works the same way. The total latency is the sum of all the little delays it hits along its journey. That journey could be from a sensor on a factory floor to a cloud database, from a user’s click to a website loading, or from a transaction in your database to it appearing on an executive’s analytics dashboard.

The Key Components of Latency

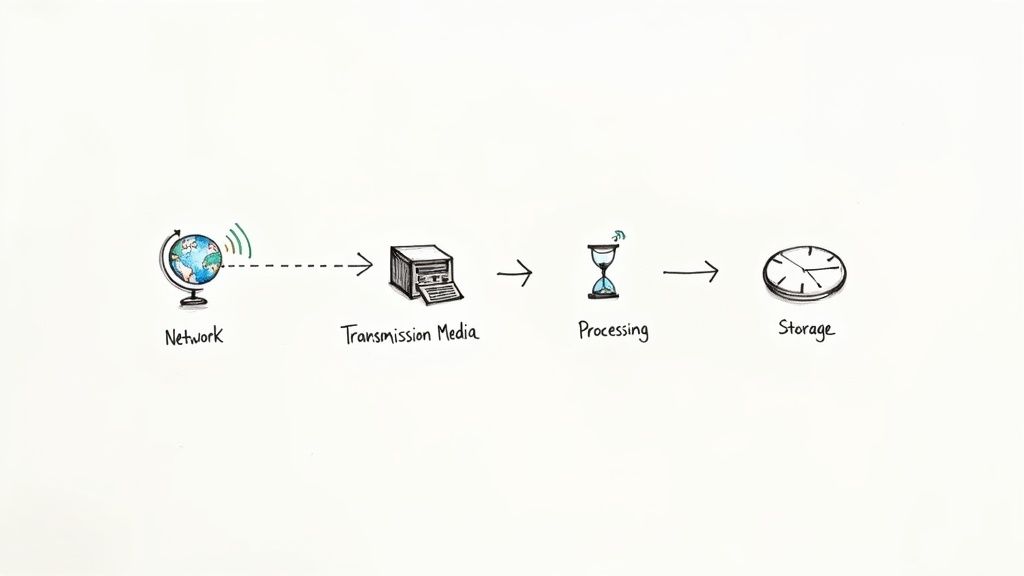

Every data transfer is a multi-step process, and each step introduces a small delay. When you add them all up, you get the total latency that users or systems experience. It’s helpful to break these delays down into their core components.

The table below dissects the main culprits behind data latency, using our package delivery analogy to make each concept a bit clearer.

The Core Components of Data Latency

Latency ComponentDescriptionAnalogy (Package Delivery)**Distance (Propagation Delay)**The time it takes for a signal to travel the physical distance through cables or air. Even at the speed of light, this is a factor over long distances.The time your package spends physically on the road or in the air traveling from the warehouse to your city.**Processing (Nodal Delay)**The “thinking time” required by devices like routers, servers, and switches to handle the data, such as routing it or running a calculation.The time it takes for the warehouse to find your item, package it, and load it onto the correct truck.**Transmission (Serialization Delay)**The time needed to push all the bits of a data packet onto the network link. Bigger data packets take longer to send, just like a long train takes time to leave the station.The time it takes to load all the boxes onto the delivery truck. A truck with more packages takes longer to load.

Each of these components plays a role, and a bottleneck in any one of them can significantly slow down the entire process.

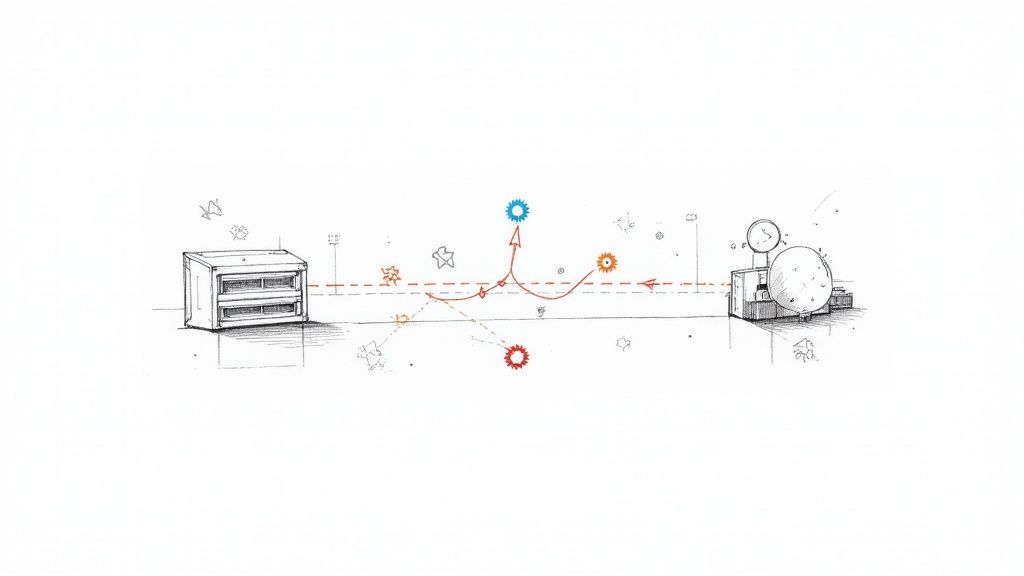

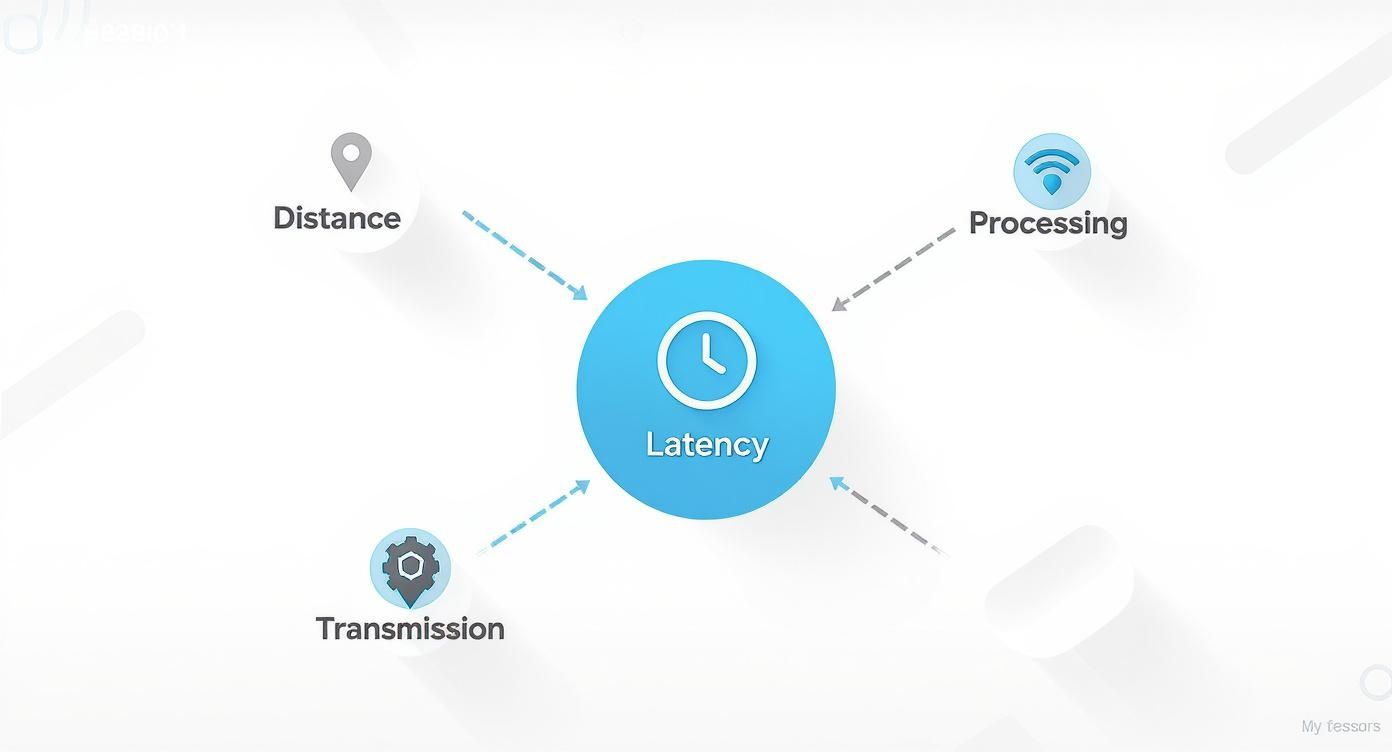

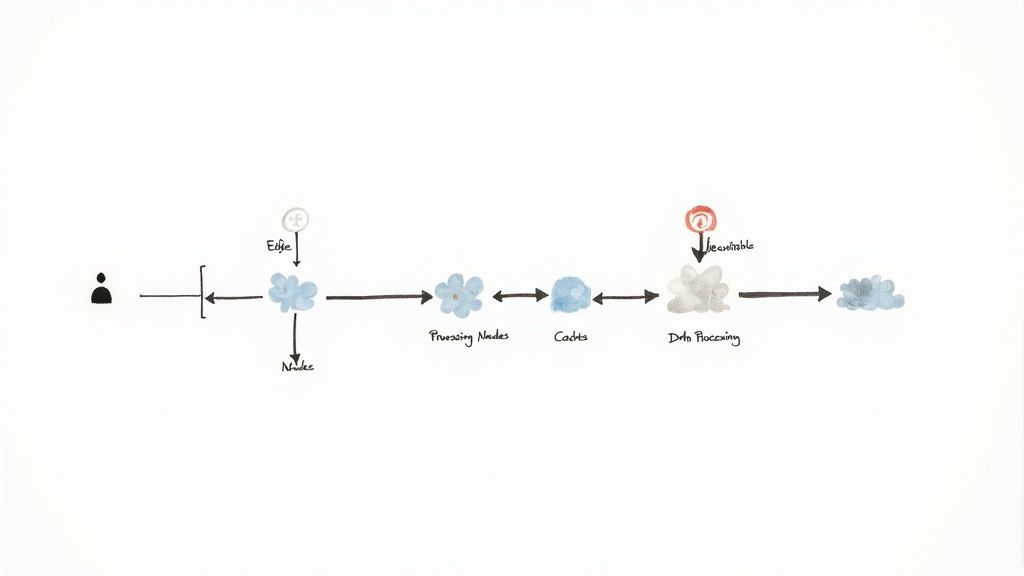

The diagram below gives you a good visual of how these factors—physical distance, processing power, and the sheer volume of data—all contribute to the total delay.

As you can see, latency isn’t just about how fast your internet connection is. It’s a complex issue where delays can come from the physical journey, the network itself, or the hardware doing the work.

Why Latency Matters Today

In today’s world, the obsession with minimizing these delays is at an all-time high. With technologies like AI, IoT, and 5G networks becoming mainstream, the demand for instantaneous data is no longer a luxury—it’s a fundamental requirement.

This pressure is fueling massive growth. The global data center market, the very heart of data processing, is expected to jump from $418.2 billion in 2025 to $691.6 billion by 2030, largely driven by this relentless pursuit of speed. To get a better sense of how companies are tackling this, you can explore the concepts behind real-time data streaming.

Ultimately, this trend sends a clear message: fast data is the new currency. It’s what separates market leaders from the competition and unlocks the next wave of innovation.

The Four Main Causes of High Data Latency

It’s one thing to know that data latency is a cumulative delay; it’s another to know exactly where those delays are coming from. High latency isn’t some single, mysterious force—it’s the sum of specific bottlenecks that slow down data on its journey. Once you can pinpoint these culprits, you can start to get a handle on performance.

Let’s break down the four primary sources of those frustrating delays that bog down your systems.

1. Network and Propagation Delay

At its core, the most fundamental cause of latency is just plain physics. Data moves at nearly the speed of light, but when you’re sending it across continents, even that incredible speed introduces a noticeable delay. This is called propagation delay.

Think about a video call with someone in Tokyo while you’re in New York. That slight, perceptible lag? That’s not a “slow” internet connection. It’s the time it takes for the signal to physically travel thousands of miles through undersea fiber-optic cables.

This delay gets worse with every device—like a router or switch—the data has to pass through. Each one of these “hops” is like a quick stoplight on a cross-country road trip. A single stop doesn’t seem like much, but they add up fast and can seriously extend your total travel time.

2. Transmission Media

Not all data highways are built the same. The physical medium carrying your data plays a massive role in how fast it gets from point A to point B.

It’s the difference between taking a high-speed bullet train versus the local bus. They’ll both get you there eventually, but one is a whole lot more efficient.

- Fiber-Optic Cables: These are the bullet trains of the data world. They use pulses of light to send information at incredible speeds with very little signal loss, even over huge distances.

- Copper Cables (Ethernet): The workhorse of many networks. They’re reliable and common but are slower than fiber and can be affected by electronic interference, especially over longer stretches.

- Wireless (Wi-Fi, 4G/5G): Super convenient, but often the slowest and least reliable option. Walls, weather, and other radio signals can easily interfere, all of which adds to latency.

Simply put, the choice of transmission medium is a critical factor. Upgrading from an old technology to a newer one, like fiber, can slash this type of delay.

Key Takeaway: The physical distance and the medium data travels through are the foundational, and often unchangeable, sources of latency. The best you can do is reduce the number of network hops and use the fastest medium available to you.

3. Processing Delay

Once your data packet finally arrives at its destination server, its journey isn’t over. The server has to actually think about the request, process it, and figure out a response. This “thinking time” is known as processing delay.

Picture a busy chef in a restaurant kitchen. Even if all the fresh ingredients (the data) arrive instantly, the chef still needs time to prep and cook the dish (process the request). If the kitchen is understaffed or suddenly gets slammed with a dozen complex orders, a backlog forms, and every single customer waits longer.

In the same way, a server’s CPU, memory, and current workload dictate how fast it can handle incoming data. An old, underpowered, or overloaded server will introduce significant processing delays.

4. Storage Delay

Finally, many requests need the system to fetch data from a storage device, like a hard disk drive (HDD) or a solid-state drive (SSD). The time it takes for the system to read or write that data is the storage delay.

Think of this as the warehouse retrieval step.

Requesting data from a modern SSD is like grabbing an item from a well-organized shelf right next to the packing station—it’s incredibly fast. But pulling data from an older, mechanical HDD is like sending someone to the back of a huge, messy warehouse to find one specific box. The physical movement of the drive’s read/write heads takes real time, making it a classic latency bottleneck.

This is a major reason why systems relying on slow, periodic data pulls often struggle with performance. To see how modern approaches get around this, our guide on batch vs. stream processing digs into alternatives that minimize these storage-related delays.

How to Measure and Benchmark Data Latency

You can’t fix what you can’t measure. That old saying is especially true for data latency. Moving from a vague feeling of “slowness” to hard numbers is the only way to build faster, more responsive systems. Once you start quantifying latency, you can finally diagnose problems, set realistic performance goals, and actually see the impact of your optimizations.

The process is a bit like being a detective. You use specific metrics and tools to follow your data’s journey, uncovering precisely where and why delays are cropping up. By establishing a clear performance baseline, you can stop guessing and start making informed improvements.

Key Metrics for Measuring Latency

To get the full picture of your system’s performance, you need to look at more than just one number. Different metrics tell different parts of the story, each shedding light on a specific aspect of the delay.

Here are a few of the most important ones:

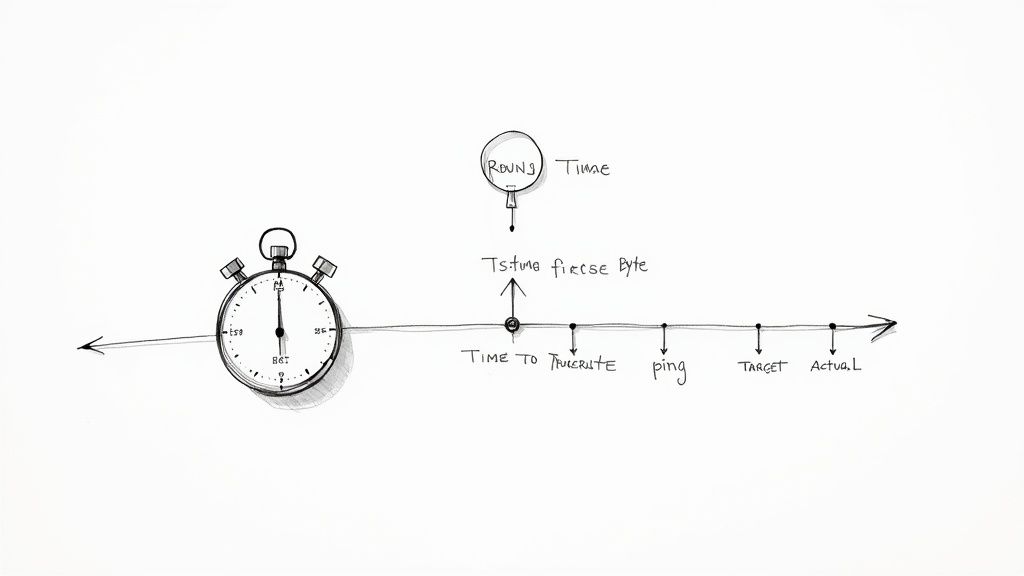

- Round-Trip Time (RTT): This is the classic. It measures the total time it takes for a data packet to travel from a source to a destination and back again. RTT is your fundamental health check for network connectivity, as it includes all the propagation, transmission, and processing delays along the way.

- Time to First Byte (TTFB): This one is all about server responsiveness. TTFB measures the time from when a client sends a request to when it receives the very first byte of the response. If you have a high TTFB, it’s a strong signal that the problem lies on the server side—think slow database queries or overloaded application logic.

- End-to-End Latency: This is the metric your users actually care about. It measures the total time from an initial action (like a click) to the final outcome (like a page fully loading or a dashboard updating). It captures the entire perceived delay from start to finish.

Think of these metrics as your primary tools for turning abstract complaints about speed into a set of actionable numbers.

What ‘Good’ Latency Looks Like: While it always depends on the use case, a common benchmark for web applications is a TTFB under 200 milliseconds. For real-time applications like video calls, an RTT below 150 ms is crucial to avoid that awkward lag. Ultimately, the best benchmark is the one defined by your users’ expectations.

Common Latency Metrics and Their Use Cases

Choosing the right metric depends entirely on what you’re trying to achieve. The table below breaks down which metric is best suited for different business needs.

MetricWhat It MeasuresIdeal Use Case Example**Round-Trip Time (RTT)**The total delay for a signal to go from source to destination and back.Checking network health and stability for an online gaming platform.**Time to First Byte (TTFB)**The time until a client receives the first byte of a server’s response.Optimizing the server-side performance of an e-commerce website to reduce cart abandonment.End-to-End LatencyThe total time from user action to the final visible outcome.Measuring the true user experience for a financial trading application where every millisecond counts.Packet LossThe percentage of data packets that fail to reach their destination.Ensuring reliable video streaming quality for a media company.

By focusing on the metric that aligns with your goals—whether it’s a snappy user interface or a rock-solid network—you can direct your optimization efforts where they’ll have the biggest impact.

Tools for Diagnosing Latency Bottlenecks

Once you have your metrics, you need the right tools to find the source of the delays. These are the go-to diagnostics for tracing a data packet’s path and identifying the slow points.

- Ping: This is the simplest tool in your kit.

pingsends a tiny data packet to a server and measures the Round-Trip Time. It’s a quick and dirty way to check basic network connectivity. A high or inconsistent ping time is an immediate red flag. - Traceroute (or tracert): This tool takes things a step further.

Traceroutemaps out the entire journey a data packet takes, showing you every “hop” (like a router or switch) it passes through. It reports the latency at each hop, making it invaluable for pinpointing exactly where a slowdown is happening along the network path.

Setting Performance Benchmarks

Measuring latency is just the first step—benchmarking is where it gets strategic. A benchmark is your standard, the point of reference you use to define what “good” looks like for your specific application.

To set a solid benchmark, you need to monitor your key latency metrics over time to understand your system’s normal operating performance. This baseline is critical. It helps you instantly spot when something goes wrong, diagnose performance drops after a new code deployment, and set clear, achievable goals for improvement.

The pressure to meet these benchmarks is only growing. As of Q1 2025, the average global data center vacancy rate dropped to a razor-thin 6.6%, a huge year-over-year decline. This squeeze shows that demand for low-latency access is quickly outpacing supply, pushing companies toward innovations like edge computing, which can slash latency from hundreds of milliseconds down to single digits. You can dig into these trends in CBRE’s recent global data center trends report.

What High Latency Actually Costs Your Business

So, why does a delay of a few milliseconds even matter? It might sound like a problem for engineers to solve in a server room, but the truth is, data latency creates a ripple effect that touches every corner of a business. It’s not just an IT headache; it’s a direct drag on revenue, customer happiness, and operational efficiency.

Think about it from a human perspective. We can perceive a delay of just 400 milliseconds—literally the blink of an eye. That’s all it takes for an experience to feel “off” and for frustration to set in. When your systems lag, customers walk away, opportunities vanish, and critical business operations can grind to a halt. Suddenly, that abstract technical metric has very real, C-suite-level consequences.

In E-commerce, a Slowdown Kills Sales

Nowhere is the pain of high latency felt more acutely than in online retail. Today’s shoppers have zero patience for a slow experience. If a page takes an extra second to load or a search query hangs, they’re gone.

This isn’t a small thing; it’s a direct hit to your bottom line. Study after study confirms that even a one-second delay can tank conversion rates. Frustrated shoppers abandon their carts and head straight to a competitor—and they probably won’t be back.

- Abandoned Carts: A clunky, slow checkout is one of the top reasons people leave without buying. High latency during inventory checks or payment processing gives them just enough time to second-guess their purchase.

- Bad User Experience: When product searches are sluggish and images load one by one, it makes your brand look unprofessional and erodes customer trust.

- Failed Personalization: Those real-time recommendation engines need fresh, low-latency data to work their magic. Stale data means irrelevant suggestions, and a massive sales opportunity is completely missed.

In Finance, Milliseconds Can Mean Millions

In the high-stakes world of high-frequency trading (HFT), latency isn’t just a metric—it’s everything. Financial firms invest astronomical sums on specialized hardware and infrastructure just to shave off a few microseconds. A delay that’s completely invisible to a human can mean the difference between a massively profitable trade and a huge loss.

For a high-frequency trading firm, a 1-millisecond advantage can be worth as much as $100 million per year. This is the clearest example of how time literally is money. The first algorithm to see and react to a market event wins.

But it’s not just about trading. Think about fraud detection. To stop bad actors, you need to analyze transactions the instant they happen. A delay of just a few seconds is all a fraudulent transaction needs to clear, resulting in direct financial losses and seriously damaging your customers’ trust.

In SaaS and IoT, It Leads to Frustration and Churn

The consequences of latency are just as severe in other industries. For any Software-as-a-Service (SaaS) company, a responsive and snappy UI is table stakes. If your collaborative tool, CRM, or analytics dashboard feels slow, users will get frustrated and eventually leave for a faster competitor.

This need for speed is also exploding in the industrial sector with the rise of the Internet of Things (IoT). By 2025, an estimated 21.1 billion connected IoT devices will be out in the world, all streaming data that needs to be processed instantly for things like factory automation or smart city management. The value here is enormous; 64% of IT leaders in the Asia-Pacific region already report seeing at least a five-fold ROI from their investments in streaming data—an entire approach built to defeat latency. You can learn more about the growth rates of real-time data integration on Integrate.io.

Effective Strategies for Reducing Data Latency

Knowing what causes data latency is one thing, but knowing how to fight it is what gives you a real competitive edge. The good news is there are plenty of battle-tested strategies to minimize these delays. The most effective game plan is a three-front attack, tackling latency across your core infrastructure, application architecture, and data processing methods.

Think of it like running a world-class delivery service. You can invest in faster trucks (infrastructure), map out smarter routes (architecture), and completely overhaul how packages are sorted at the warehouse (data processing). By improving all three, you don’t just speed things up a little—you fundamentally change how quickly you can operate.

Optimizing Your Infrastructure

The physical journey your data takes is often the biggest latency hog. The goal here is simple: shorten the distance. If you can bring your data and computation closer to your users, you can wipe out a huge chunk of that travel time.

- Content Delivery Networks (CDNs): A CDN is essentially a network of servers scattered around the globe. It stores copies of your static content—images, videos, stylesheets—in locations physically closer to your users. When a user requests that content, it’s served from the nearest server, not your main one, drastically cutting down load times.

- Edge Computing: This takes the CDN idea and kicks it up a notch. Instead of just storing content, edge computing moves actual processing power to the network’s “edge.” This is a game-changer for things like IoT and mobile apps, where processing data locally avoids the long round trip to a central cloud server, making near-instant responses possible. Deploying services in environments like AWS Local Zones can get you into single-digit millisecond territory.

Refining Your Application Architecture

You can have the best hardware in the world, but a clunky application or data architecture will still create frustrating bottlenecks. The focus here is on making your code and data access patterns as clean and efficient as possible.

Smart architecture is all about avoiding unnecessary work. By caching common requests and picking the right communication protocols, you lighten the load on your core systems and speed up every single interaction.

Key Insight: Architectural optimization is about being smarter, not just faster. A good caching strategy can eliminate 90% or more of redundant database queries, freeing up precious resources and slashing delays for the queries that actually matter.

Here are a few high-impact architectural tweaks:

- Implement Caching: Store frequently accessed data in a super-fast memory layer like Redis or Memcached. This stops your app from hammering a slower database for the same information over and over again.

- Optimize Code and Queries: Bad code and inefficient database queries are sneaky culprits behind processing delays. Profile your application to pinpoint slow functions, and take the time to optimize your SQL queries by adding indexes or rewriting complex joins.

- Choose Efficient Protocols: Use modern, lightweight protocols for data transfer. For APIs, especially for communication between your own microservices, something like gRPC can be way faster than a traditional REST/JSON setup.

Modernizing Data Processing Techniques

For any application that deals with a lot of data, how you move and process it is everything. The old way of doing things—collecting data into big batches and processing them periodically—is inherently slow. The modern approach is to process data continuously, as it arrives.

This is where today’s biggest gains against latency are being made. Moving from batch jobs to real-time streams is a fundamental shift. For a deeper look at this, our guide on how to reduce latency offers even more detailed strategies.

Two key technologies are driving this change:

- Change Data Capture (CDC): Instead of constantly running heavy queries on your database to see what’s new, CDC taps directly into the database’s transaction log. It captures every single change—every insert, update, and delete—as a real-time event. It’s incredibly efficient, barely touches the source database, and delivers a constant stream of changes with sub-second latency.

- Stream Processing: Once CDC gives you that real-time stream, you need a way to work with it on the fly. Stream processing engines like Apache Flink let you run complex calculations, transformations, and enrichments as the data flows past. This gets rid of the need to store everything first and process it later, paving the way for true real-time analytics and fraud detection.

By pairing CDC with stream processing, you can build event-driven systems that react to new information instantly. This modern data stack breaks free from the limitations of batch ETL, creating pipelines where the gap between a business event and seeing it on a dashboard is measured in seconds, not hours.

Why Low Latency Is Your Competitive Edge

We’ve covered a lot of ground, from what data latency actually is to the nitty-gritty of how to fight it. If there’s one key takeaway, it’s this: our tolerance for delays is shrinking to zero. Managing latency isn’t just a technical chore anymore; it’s a core part of staying relevant and competitive.

The ability to get information where it needs to go, right now, is what sets the winners apart. Think about it—technologies like AI and real-time analytics are becoming table stakes. In that environment, high latency is more than an inconvenience; it’s a liability. It slows down critical insights, frustrates customers, and leaves dangerous blind spots in your operations. A delay of just a few hundred milliseconds can be the difference between catching fraud and losing money, or between a happy customer and a lost sale.

Embracing a Real-Time Future

To keep up, businesses have to move away from the old-school model of processing data in slow, clunky batches. The future is all about modern, low-latency pipelines that process information as it happens.

This shift requires investing in the right infrastructure and techniques. The goal is to build a faster, more responsive organization, and that often comes down to a few key areas:

- Edge Computing and CDNs: These are all about closing the distance, bringing data physically closer to your users to cut down travel time.

- Change Data Capture (CDC): This lets you grab data from your source systems the very instant it changes, eliminating the wait.

- Stream Processing: This is the magic that lets you analyze and act on data while it’s still moving, paving the way for immediate, intelligent decisions.

Investing in a low-latency architecture isn’t just an IT project. It’s a direct investment in your customer experience, your operational efficiency, and your ability to out-innovate the competition.

At the end of the day, getting a handle on data latency is essential. The strategies we’ve discussed are a roadmap. By building modern data pipelines with tools like Streamkap—which uses CDC to deliver data with sub-second latency—you’re not just fixing a technical problem. You’re building the foundation for a smarter, faster, and more resilient business that’s ready for whatever comes next.

Frequently Asked Questions About Data Latency

When you dive into data performance, a few common questions always seem to pop up. Let’s walk through some of the most frequent ones to clear up the confusion and give you practical answers.

What Is the Difference Between Latency and Bandwidth?

It’s incredibly common to mix up latency and bandwidth, but they represent two totally different things. The easiest way to get it straight is with a simple highway analogy.

Think of bandwidth as the number of lanes on the highway. A massive, ten-lane superhighway can handle a ton more cars at once than a little two-lane country road. It’s all about capacity.

Latency, on the other hand, is the speed limit. It’s how fast one single car can travel from Point A to Point B. You could have a ten-lane highway (huge bandwidth), but if the speed limit is 20 MPH or there’s a massive traffic jam (high latency), that one car is still going to take forever to arrive. Both are critical, but they solve different problems.

What Is Considered Good or Low Latency?

This is the classic “it depends” answer, but it’s true. There’s no single number that defines “good” latency because it’s completely tied to the specific application. What’s fantastic for one use case can be a total disaster for another.

A few examples really bring this to life:

- Competitive Online Gaming: In a fast-twitch game, every millisecond is a potential advantage. Latency under 50ms is considered great. Anything more, and you’re dealing with frustrating lag.

- Smooth Video Calls: For a conversation to feel natural, you need the delay to be short enough that you aren’t constantly talking over each other. Anything under 150ms usually feels seamless.

- Responsive Websites: For a website to feel snappy and keep users engaged, the goal is a response time under 200ms.

- Overnight Data Analytics: On the flip side, a massive data processing job that runs while everyone is asleep can have a latency of several hours, and that’s perfectly acceptable.

The Bottom Line: “Low latency” isn’t a fixed target. It’s a goal defined entirely by user expectations and the demands of your application.

How Does Edge Computing Reduce Latency?

Edge computing is a smart way to tackle one of the biggest sources of latency: physical distance. It works by completely rethinking where your data gets processed.

Imagine how a retail giant like Amazon delivers packages. Instead of shipping every order from one gigantic warehouse in the middle of the country, they have smaller distribution centers scattered everywhere. This gets the products much closer to you, the customer, drastically cutting down on shipping time.

Edge computing does the exact same thing, but with data. It moves the processing power closer to where the data is actually being generated—out at the “edge” of the network. By doing this, you shrink the distance the data has to travel to a centralized cloud server and back. Since distance is a primary factor in propagation delay, the result is a much faster, more responsive experience.

Ready to eliminate the latency holding your business back? Streamkap uses real-time CDC to create ultra-low-latency data pipelines, ensuring your insights are always instant. See how Streamkap can help you build for a real-time world.