
10 Real-World Event-Driven Architecture Examples Transforming Industries in 2025

Explore 10 detailed event-driven architecture examples from finance, e-commerce, IoT, and more. Learn how real-time data streaming unlocks new capabilities.

In a world that demands instant responses, traditional batch-processing data systems are falling behind. Delays are no longer acceptable, whether it’s in detecting fraud, updating inventory, or personalizing user content. The solution is a paradigm shift to event-driven architecture (EDA), a design pattern where systems react to ‘events’ - like a new order, a sensor reading, or a user click - as they happen.

This approach allows businesses to build highly responsive, scalable, and resilient applications that process information in real-time. But what does this look like in practice? Simply knowing the theory isn’t enough; understanding its application is what delivers real value.

In this article, we’ll dive into 10 detailed event-driven architecture examples across industries, from e-commerce and finance to IoT and healthcare. For each one, we break down how the architecture is constructed, why it succeeds, and which tactics you can apply directly to your own systems and strategic planning.

1. Real-Time Payment Processing and Fraud Detection

Financial institutions like Stripe and PayPal use event-driven architecture to process transactions and detect fraud in near real-time. Instead of relying on slow, periodic batch processing, this model treats every payment attempt, authorization, or settlement as a discrete “event.” These events are published to a central event stream, like Apache Kafka, the moment they occur.

Multiple downstream services subscribe to this stream simultaneously. A payment processing service acts on the event to move funds, while a separate fraud detection service uses machine learning models to analyze the same event against historical patterns and risk profiles. This parallel, decoupled processing allows for instantaneous decisions, enabling systems to approve or deny a transaction in milliseconds. The architecture ensures high throughput and resilience while creating a comprehensive, immutable audit trail of every transaction.
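As a rough illustration of the fan-out described above, the following in-memory sketch stands in for a real event stream. The `EventBus` class, the `payments` topic name, and the amount-based risk rule are hypothetical and exist only to show two services reacting to the same event independently.

```python
from collections import defaultdict
from typing import Any, Callable, Dict, List

Event = Dict[str, Any]


class EventBus:
    """Toy in-memory stand-in for an event stream (not a Kafka client)."""

    def __init__(self) -> None:
        self._subscribers: Dict[str, List[Callable[[Event], None]]] = defaultdict(list)

    def subscribe(self, topic: str, handler: Callable[[Event], None]) -> None:
        self._subscribers[topic].append(handler)

    def publish(self, topic: str, event: Event) -> None:
        # Every subscriber gets the same event; the publisher knows none of them.
        for handler in self._subscribers[topic]:
            handler(event)


settled: List[str] = []
flagged: List[str] = []


def payment_service(event: Event) -> None:
    settled.append(event["payment_id"])


def fraud_service(event: Event) -> None:
    # Hypothetical threshold rule standing in for an ML model score.
    if event["amount"] > 10_000:
        flagged.append(event["payment_id"])


bus = EventBus()
bus.subscribe("payments", payment_service)
bus.subscribe("payments", fraud_service)
bus.publish("payments", {"payment_id": "p-1", "amount": 25.0})
bus.publish("payments", {"payment_id": "p-2", "amount": 50_000.0})
```

Here the handlers run synchronously inside `publish`; a real broker would deliver events asynchronously and let each consumer track its own position in the stream.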

Strategic Breakdown

- Why it’s effective: This approach decouples critical functions. The payment gateway doesn’t need to wait for a fraud score; it simply consumes the result when it becomes available. This enhances system responsiveness and scalability.

- When to use it: Ideal for high-volume, low-latency environments where immediate feedback is crucial, such as e-commerce checkouts, point-of-sale terminals, and peer-to-peer transfers. If your system requires instant transaction validation, this is a prime use case. For more details on the fundamentals, you can explore this overview of what event-driven architecture is.

Actionable Takeaways

- Use Change Data Capture (CDC): Capture transaction events directly from the source database logs to minimize latency and ensure data consistency.

- Implement Idempotency: Design consumers with event deduplication logic to safely handle network retries without causing duplicate processing.

- Cache Reference Data: Keep customer profiles or risk data in a fast, in-memory cache for rapid event enrichment during fraud analysis.
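The idempotency takeaway can be sketched as a consumer that remembers which event IDs it has already applied. This is a minimal sketch: the `event_id`, `account`, and `amount_cents` field names are assumptions, and the in-memory set would need to be a durable store in a real system.

```python
from typing import Dict, Set


class IdempotentConsumer:
    """Applies each event at most once, keyed by its event ID."""

    def __init__(self) -> None:
        self._seen: Set[str] = set()  # assumption: a durable store in production
        self.balances: Dict[str, int] = {}

    def handle(self, event: dict) -> bool:
        """Apply the event; return False if it was a duplicate delivery."""
        if event["event_id"] in self._seen:
            return False
        account = event["account"]
        self.balances[account] = self.balances.get(account, 0) + event["amount_cents"]
        self._seen.add(event["event_id"])
        return True
```

If the broker redelivers the same event after a network retry, the second call to `handle` returns `False` and the balance is left unchanged.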

2. E-Commerce Order Fulfillment and Inventory Management

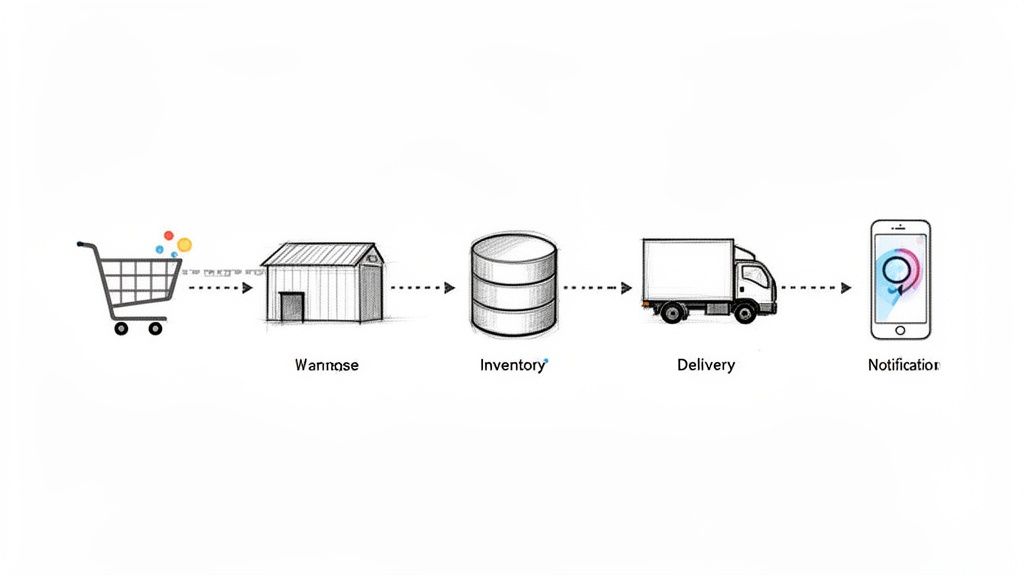

E-commerce giants like Amazon and Shopify use event-driven architecture to orchestrate complex order fulfillment and inventory workflows. When a customer places an order, the system emits an “OrderPlaced” event. This single event triggers a cascade of independent processes: the inventory service decrements stock levels, the shipping service generates a label, and the notifications service sends a confirmation email to the customer.

This decoupled model allows each service to scale and evolve independently. For instance, the inventory system can handle Black Friday traffic spikes without affecting the notification service. Each step in the fulfillment journey, from payment confirmation to shipment and delivery, is a separate event that pushes the order through its lifecycle. This creates a resilient, auditable, and highly scalable system capable of managing millions of orders simultaneously without system-wide bottlenecks.
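One way to picture an order moving through its lifecycle is as a fold over its event log, where the current status is always derived from the events seen so far. In this sketch, only `OrderPlaced` comes from the text above; the other event names and the status labels are hypothetical.

```python
from typing import Dict, Iterable

# Hypothetical mapping from event type to the status it produces.
TRANSITIONS = {
    "OrderPlaced": "placed",
    "PaymentConfirmed": "paid",
    "OrderShipped": "shipped",
    "OrderDelivered": "delivered",
    "OrderCancelled": "cancelled",
}


def rebuild_status(events: Iterable[dict]) -> Dict[str, str]:
    """Replay events in order and return the latest status per order."""
    status: Dict[str, str] = {}
    for event in events:
        status[event["order_id"]] = TRANSITIONS[event["type"]]
    return status


log = [
    {"order_id": "order-1", "type": "OrderPlaced"},
    {"order_id": "order-2", "type": "OrderPlaced"},
    {"order_id": "order-1", "type": "PaymentConfirmed"},
    {"order_id": "order-2", "type": "OrderCancelled"},
    {"order_id": "order-1", "type": "OrderShipped"},
]
current = rebuild_status(log)
```

Because the log is never modified, the same replay can be rerun at any time to rebuild state or to debug how an order reached its current status.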

Strategic Breakdown

- Why it’s effective: This architecture creates a resilient workflow where the failure of one component, like label generation, doesn’t halt the entire order process. Events can be retried or routed to a dead-letter queue for manual intervention, ensuring no order is lost. To effectively manage complex regulations and minimize manual intervention, many businesses are adopting automated shipping compliance solutions within their e-commerce platforms.

- When to use it: Perfect for systems with multi-step business processes involving multiple, distinct domains (e.g., inventory, shipping, notifications). It is especially valuable in high-volume retail environments where system responsiveness and operational reliability are critical.

Actionable Takeaways

- Use Event Sourcing: Persist the full sequence of events for each order to create an immutable audit trail, which simplifies debugging and rebuilding state.

- Implement Compensating Transactions: For actions like order cancellations, publish a “Compensation” event that triggers downstream services to reverse their original actions (e.g., restock inventory).

- Partition by Order ID: Process events for the same order sequentially by using the order ID as a partition key. This prevents race conditions, such as a shipping event being processed before a payment confirmation event. For background on real-time data capture, see this overview of what Change Data Capture is.
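To make the partition-key idea concrete, the sketch below hashes the order ID to choose a partition, so that all events for one order land in the same ordered queue. The partition count and the use of SHA-256 are assumptions for illustration; real brokers apply their own hash function to the key.

```python
import hashlib
from typing import Dict, List, Tuple

NUM_PARTITIONS = 6  # hypothetical partition count


def partition_for(order_id: str, num_partitions: int = NUM_PARTITIONS) -> int:
    """Map an order ID to a stable partition index."""
    digest = hashlib.sha256(order_id.encode("utf-8")).digest()
    return int.from_bytes(digest[:8], "big") % num_partitions


partitions: Dict[int, List[Tuple[str, str]]] = {p: [] for p in range(NUM_PARTITIONS)}
incoming = [
    ("order-42", "PaymentConfirmed"),
    ("order-7", "PaymentConfirmed"),
    ("order-42", "OrderShipped"),
]
for order_id, event_type in incoming:
    # Same key, same partition: per-order publish order is preserved.
    partitions[partition_for(order_id)].append((order_id, event_type))
```

A single consumer reading one partition then sees `PaymentConfirmed` before `OrderShipped` for `order-42`, regardless of what happens to other orders.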

3. Real-Time User Activity Analytics and Personalization

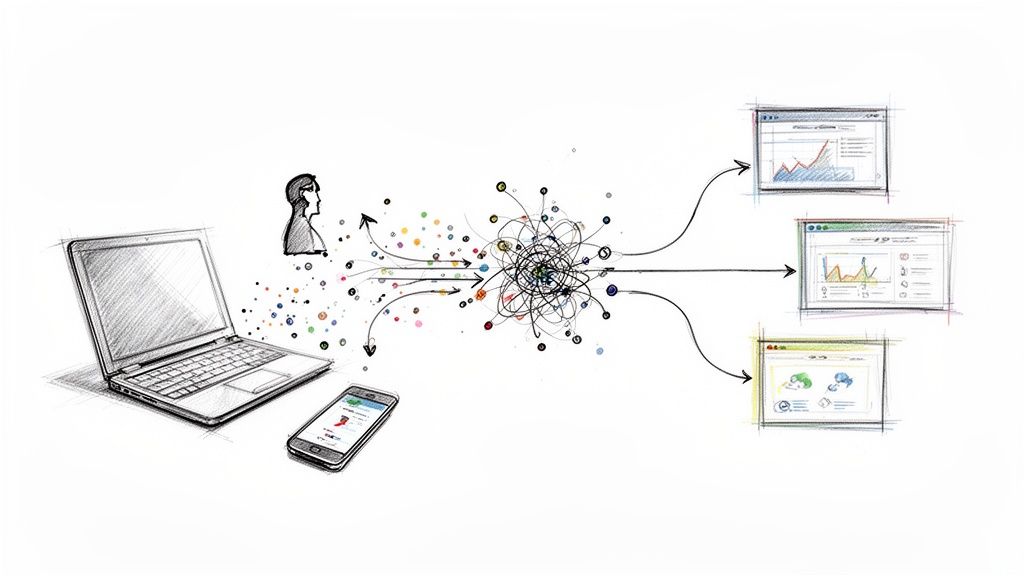

Digital platforms like Netflix and Spotify use event-driven architecture to provide hyper-personalized user experiences. Every user interaction, from a mouse click or video view to a song skip, is captured as a discrete event. These events are published in real-time to an event bus, where multiple downstream services can consume them independently to drive immediate actions.

This approach allows for parallel processing where one service updates a user’s behavioral profile, another feeds the data into a machine learning model to refine recommendations, and a third populates a live analytics dashboard. By decoupling these functions, platforms can instantly adapt to user behavior, serving dynamic content or personalized offers without waiting for slow batch jobs. The result is a highly engaging and responsive user experience built on a continuous stream of interaction data.

Strategic Breakdown

- Why it’s effective: The architecture separates the high-volume ingestion of user actions from the complex logic of personalization and analytics. This allows each component to scale independently, ensuring that a spike in user activity doesn’t cripple the recommendation engine or business intelligence tools.

- When to use it: Ideal for any digital product where real-time personalization is a key differentiator, such as media streaming services, e-commerce sites, and content platforms. If your goal is to react to user behavior as it happens, this is a powerful model.

Actionable Takeaways

- Enrich Events Early: Add contextual data (like user segment, device type, or location) to events as they are ingested to simplify downstream processing for consumers.

- Use Separate Streams: Isolate different types of user actions (e.g., “clicks” vs. “purchases”) into separate event streams or topics to allow services to subscribe only to the data they need.

- Plan for Late Data: Design systems to handle events that arrive out-of-order or with a delay, a common issue with mobile users who may have intermittent connectivity.
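One common way to plan for late data is to buffer events briefly and release them in event-time order once a watermark has passed. The sketch below is a simplified version of that idea; the `ReorderBuffer` name, integer timestamps, and the fixed `allowed_lateness` are assumptions, and stream processors offer richer built-in equivalents.

```python
import heapq
from typing import List, Tuple

TimedEvent = Tuple[int, str]  # (event_time_seconds, event_name)


class ReorderBuffer:
    """Releases events in event-time order once the watermark passes them."""

    def __init__(self, allowed_lateness: int) -> None:
        self.allowed_lateness = allowed_lateness
        self._heap: List[TimedEvent] = []
        self._max_event_time = 0
        self.late: List[TimedEvent] = []  # events too old to reorder

    def add(self, event_time: int, name: str) -> List[TimedEvent]:
        """Buffer one event and return any events that are now safe to emit."""
        if event_time < self._max_event_time - self.allowed_lateness:
            self.late.append((event_time, name))  # behind the watermark
            return []
        heapq.heappush(self._heap, (event_time, name))
        self._max_event_time = max(self._max_event_time, event_time)
        watermark = self._max_event_time - self.allowed_lateness
        ready: List[TimedEvent] = []
        while self._heap and self._heap[0][0] <= watermark:
            ready.append(heapq.heappop(self._heap))
        return ready
```

Events that arrive behind the watermark are not dropped silently; they are collected in `late` so a separate path can decide whether to correct earlier results.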

4. IoT Sensor Data Processing and Predictive Maintenance

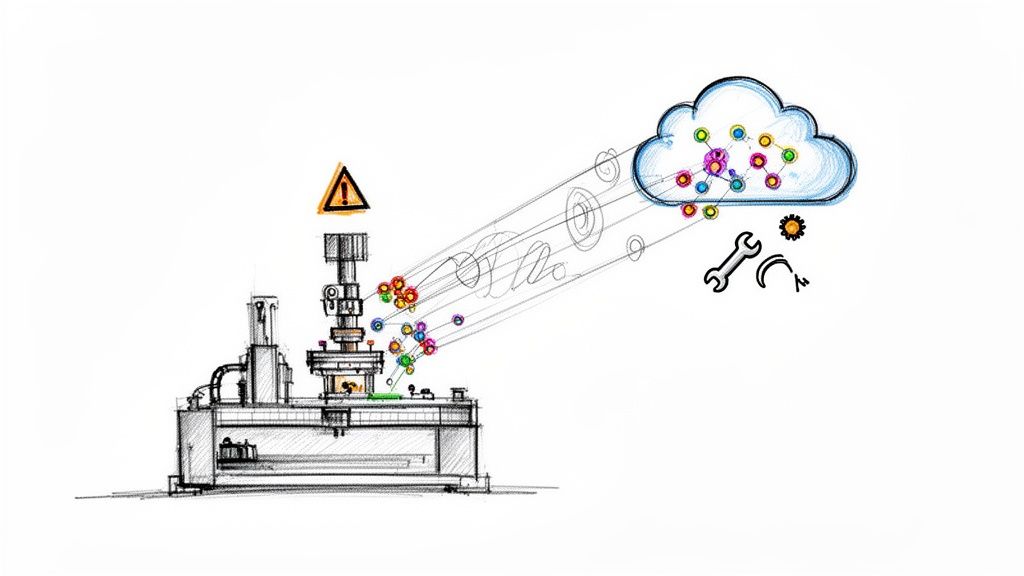

Industrial giants like Siemens and GE Digital use event-driven architecture to power their predictive maintenance platforms. In advanced manufacturing, equipment sensors monitoring temperature, vibration, and pressure can generate millions of events every second. Instead of polling for status updates, each sensor reading is published as an event, typically over a lightweight messaging protocol like MQTT or into a high-throughput streaming platform like Kafka.

This continuous stream of events is consumed by various microservices. One service might archive raw data, while another feeds the events into a real-time analytics engine. This engine compares incoming data against historical patterns and machine learning models to detect anomalies that signal potential equipment failure. This architecture enables companies to shift from reactive or scheduled maintenance to a proactive, predictive model, significantly reducing downtime and operational costs by addressing issues before they become critical.

Strategic Breakdown

- Why it’s effective: The system decouples data ingestion from analysis. This allows for massive scalability to handle data from thousands of sensors simultaneously. It also enables different analytical models to process the same data stream for different purposes, such as real-time alerting and long-term trend analysis.

- When to use it: This model is essential for any Industrial IoT (IIoT) application where equipment uptime is critical. Use it in manufacturing, energy, and logistics to monitor high-value assets, prevent catastrophic failures, and optimize maintenance schedules based on actual equipment health. For more on this topic, see this guide to data stream processing.

Actionable Takeaways

- Leverage Edge Processing: Filter, aggregate, and analyze non-critical sensor data at the edge (close to the source) to reduce network bandwidth and latency before sending key events to the central platform.

- Use Time-Windowed Aggregations: Analyze events within specific time windows (e.g., one minute or five minutes) to identify meaningful trends and smooth out noisy sensor readings, making anomaly detection more accurate.

- Correlate Multi-Sensor Data: Implement stream joins to combine data from different sensors on the same piece of equipment (e.g., temperature and vibration) to create a more holistic view and uncover complex failure patterns.
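The time-windowed aggregation takeaway can be sketched as a tumbling window that averages readings per fixed interval. This is a simplified batch version over a list; the function name and the `(timestamp, value)` tuple layout are assumptions, and a stream processor would compute the same result incrementally.

```python
from collections import defaultdict
from statistics import mean
from typing import Dict, Iterable, List, Tuple


def tumbling_window_means(
    readings: Iterable[Tuple[int, float]], window_seconds: int
) -> Dict[int, float]:
    """Average sensor values per fixed, non-overlapping time window.

    Keys of the result are window start times in seconds.
    """
    buckets: Dict[int, List[float]] = defaultdict(list)
    for timestamp, value in readings:
        window_start = (timestamp // window_seconds) * window_seconds
        buckets[window_start].append(value)
    return {start: mean(values) for start, values in sorted(buckets.items())}


# Hypothetical temperature readings: (seconds since start, degrees).
readings = [(0, 70.0), (30, 72.0), (61, 90.0), (119, 94.0)]
per_minute = tumbling_window_means(readings, window_seconds=60)
```

Comparing each window's mean against a baseline, rather than each raw reading, is one way to keep a single noisy sample from raising a false alarm.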

5. Banking and Financial Services Event-Driven Systems

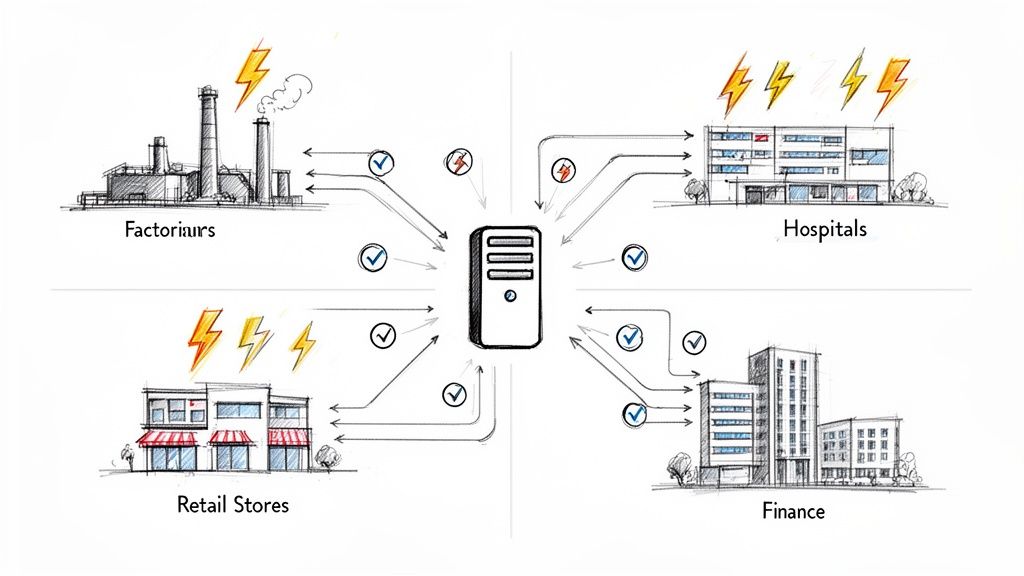

Modern banking and fintech companies like JPMorgan Chase and Wise use event-driven systems for core operations, from transactions to regulatory compliance. Each customer action, such as a deposit, withdrawal, or loan application, is treated as an event. These events are published to a central message bus, triggering a cascade of decoupled processes across different systems. For example, a single transaction event can simultaneously update a customer’s balance, trigger a compliance check, and log the action for auditing.

This model allows financial institutions to move away from nightly batch jobs, providing customers with real-time account information and instant notifications. The architecture is crucial for maintaining data consistency across a complex ecosystem of deposit systems, lending platforms, and fraud detection engines. By treating every operation as an immutable event, banks create a robust and verifiable audit trail, which is essential for meeting strict regulatory requirements and ensuring data integrity.

Strategic Breakdown

- Why it’s effective: This architecture provides the agility needed to compete with modern fintechs. It decouples legacy systems from new services, allowing for faster innovation and deployment. Real-time processing enhances the customer experience and strengthens regulatory compliance.

- When to use it: It’s ideal for any core banking function requiring real-time updates and strong transactional integrity. Use it for account management, payment processing, loan origination, and compliance monitoring where immediate, consistent data across all systems is non-negotiable.

Actionable Takeaways

- Implement Event Versioning: As financial products and regulations evolve, introduce new event schemas with version numbers to ensure backward compatibility and prevent breaking changes in downstream services.

- Design for Exactly-Once Semantics: Use transactional outboxes to publish each event reliably and idempotent consumers to absorb redeliveries, so that critical financial events take effect exactly once, preventing duplicate charges or missed transactions.

- Maintain Separate Compliance Streams: Create dedicated event streams for regulatory and audit-related events. This isolates compliance monitoring from core business logic, simplifying reporting and reducing risk.
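The transactional outbox mentioned above can be sketched with SQLite from the standard library: the balance update and the event row are written in one database transaction, and a separate relay publishes pending rows. Table names, the `DepositMade` event, and its `version` field are hypothetical.

```python
import json
import sqlite3
from typing import Callable

conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE accounts (id TEXT PRIMARY KEY, balance_cents INTEGER NOT NULL)")
conn.execute(
    "CREATE TABLE outbox (id INTEGER PRIMARY KEY AUTOINCREMENT, "
    "payload TEXT NOT NULL, published INTEGER NOT NULL DEFAULT 0)"
)
conn.execute("INSERT INTO accounts VALUES ('acct-1', 1000)")
conn.commit()


def deposit(account_id: str, amount_cents: int) -> None:
    # State change and event row share ONE transaction: both commit or neither.
    with conn:
        conn.execute(
            "UPDATE accounts SET balance_cents = balance_cents + ? WHERE id = ?",
            (amount_cents, account_id),
        )
        payload = json.dumps(
            {"type": "DepositMade", "version": 1,
             "account": account_id, "amount_cents": amount_cents}
        )
        conn.execute("INSERT INTO outbox (payload) VALUES (?)", (payload,))


def relay_once(publish: Callable[[dict], None]) -> int:
    """Publish pending outbox rows in order, mark them, and return the count."""
    rows = conn.execute(
        "SELECT id, payload FROM outbox WHERE published = 0 ORDER BY id"
    ).fetchall()
    for row_id, payload in rows:
        publish(json.loads(payload))
        with conn:
            conn.execute("UPDATE outbox SET published = 1 WHERE id = ?", (row_id,))
    return len(rows)
```

If the relay crashes after publishing but before marking a row, that event is sent again on the next run, which is why this pattern is usually paired with idempotent consumers.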

6. Real-Time Recommendation Systems and Content Delivery

Streaming platforms like Netflix and content providers such as TikTok use event-driven architecture to power their highly personalized recommendation engines. Instead of calculating recommendations in slow, overnight batches, every user interaction is treated as an event. A click, a search, a “like,” or time spent on a video generates an event that is published to a real-time data pipeline.

Multiple services subscribe to this event stream. A model training service might use these signals to update a user’s preference profile, while a content delivery service uses the event to immediately surface a new, more relevant piece of content. This creates a powerful feedback loop where user behavior continuously refines the system’s output in real time. The architecture allows these platforms to process billions of daily interactions to keep users engaged with fresh, dynamic suggestions.

Strategic Breakdown

- Why it’s effective: This architecture enables a highly responsive and adaptive user experience. The system doesn’t wait for periodic updates; it reacts instantly to user signals, making recommendations feel immediate and intelligent. This decoupling of event capture from recommendation logic allows for immense scalability and experimentation.

- When to use it: Ideal for any platform where user engagement is driven by personalized content discovery, such as media streaming, e-commerce product suggestions, or news feed curation. If your goal is to create a dynamic feedback loop that continuously learns from user behavior, this is one of the most powerful event-driven architecture examples.

Actionable Takeaways

- Implement a Multi-Stage Funnel: Use a series of event-driven services to filter content, starting with broad candidate generation and narrowing down to fine-tuned, personalized ranking.

- Utilize a Feature Store: Maintain a low-latency feature store that consumers can query to enrich user interaction events with historical data for more accurate, context-aware recommendations.

- Create Feedback Event Streams: Design specific event streams to capture user responses to recommendations (e.g., “recommendation_accepted,” “recommendation_ignored”) to measure and improve model performance.
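A feedback stream like the one in the last takeaway might be consumed as follows. The event types match the examples above, while the `model` field and the idea of comparing model versions are assumptions added for illustration.

```python
from collections import Counter
from typing import Dict, Iterable

FEEDBACK_TYPES = ("recommendation_accepted", "recommendation_ignored")


def acceptance_rates(events: Iterable[dict]) -> Dict[str, float]:
    """Return the share of accepted recommendations per model version."""
    shown: Counter = Counter()
    accepted: Counter = Counter()
    for event in events:
        if event["type"] not in FEEDBACK_TYPES:
            continue  # ignore unrelated events on the stream
        shown[event["model"]] += 1
        if event["type"] == "recommendation_accepted":
            accepted[event["model"]] += 1
    return {model: accepted[model] / shown[model] for model in shown}


feedback = [
    {"type": "recommendation_accepted", "model": "v1"},
    {"type": "recommendation_ignored", "model": "v1"},
    {"type": "recommendation_ignored", "model": "v1"},
    {"type": "recommendation_accepted", "model": "v1"},
    {"type": "recommendation_accepted", "model": "v2"},
    {"type": "page_view", "model": "v2"},
]
rates = acceptance_rates(feedback)
```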

7. Supply Chain Visibility and Logistics Optimization

Global logistics leaders like FedEx and Amazon rely on event-driven architecture to manage the immense complexity of their supply chains. Every significant action, from a package leaving a warehouse to a truck passing a checkpoint or a shipment clearing customs, generates an event. These events are published to event streams, allowing disparate systems to react in real time.

This constant flow of data creates end-to-end visibility. An inventory management system can subscribe to “warehouse departure” events to update stock levels, while a customer notification service listens for “delivery status change” events to send alerts. Simultaneously, a route optimization engine consumes location-based events from vehicles to dynamically adjust delivery paths based on live traffic data and weather conditions.

Strategic Breakdown

- Why it’s effective: The architecture decouples operational components. The package tracking system doesn’t need direct integration with the routing engine; they simply share a common event stream. This allows for massive scale, flexibility, and the independent evolution of each service.

- When to use it: This model is essential for any business managing complex, multi-stage physical or digital supply chains. It’s ideal for scenarios requiring real-time tracking, dynamic resource allocation, and proactive notifications for logistics, manufacturing, and global trade.

Actionable Takeaways

- Design Granular Event Schemas: Define specific event types for different transportation modes (e.g., AirCargoLoaded, OceanVesselDeparted) to enable precise, context-aware processing.

- Handle Late-Arriving Data: Implement logic to manage out-of-order or delayed location updates, which are common in logistics due to connectivity issues, ensuring the system state remains accurate.

- Use Geospatial Windowing: Process location-based events in real-time streams to detect when a shipment enters or exits a specific geographic area (geofence), triggering automated warehouse check-ins or customs alerts.
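The geofence idea can be sketched with a simple rectangular boundary and a per-shipment record of whether it was last seen inside. Real geofences are usually polygons or radii; the `Geofence` class, the coordinates, and the `entered`/`exited` labels here are hypothetical.

```python
from dataclasses import dataclass
from typing import Dict, Iterable, List, Tuple


@dataclass(frozen=True)
class Geofence:
    """Rectangular boundary, a simplification of real geofence shapes."""
    name: str
    min_lat: float
    max_lat: float
    min_lon: float
    max_lon: float

    def contains(self, lat: float, lon: float) -> bool:
        return (self.min_lat <= lat <= self.max_lat
                and self.min_lon <= lon <= self.max_lon)


def detect_transitions(
    fence: Geofence, updates: Iterable[Tuple[str, float, float]]
) -> List[Tuple[str, str]]:
    """Emit (shipment_id, 'entered' | 'exited') when a shipment crosses the fence."""
    inside: Dict[str, bool] = {}
    transitions: List[Tuple[str, str]] = []
    for shipment_id, lat, lon in updates:
        now_inside = fence.contains(lat, lon)
        was_inside = inside.get(shipment_id, False)
        if now_inside and not was_inside:
            transitions.append((shipment_id, "entered"))
        elif was_inside and not now_inside:
            transitions.append((shipment_id, "exited"))
        inside[shipment_id] = now_inside
    return transitions
```

Only boundary crossings produce output, so a truck idling inside the fence does not flood downstream services with repeated check-in events.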

8. Healthcare Patient Monitoring and Clinical Alerts

Healthcare technology companies like Philips Healthcare and Epic Systems use event-driven architecture to monitor patient vital signs, medication administration, and lab results in real-time. This model treats each data point, such as a heartbeat from a monitor or a new lab result, as a distinct event. These events are published to dedicated streams where they can be processed and correlated immediately.

These monitoring systems increasingly draw on consumer devices as well, such as smartwatches with ECG capability, for real-time data analysis and immediate clinical alerts. When a clinically significant event or a combination of events indicates potential patient deterioration, an alert is automatically triggered and routed to the appropriate care team. This ensures that interventions happen at the earliest possible moment, moving from a reactive to a proactive care model.

Strategic Breakdown

- Why it’s effective: This architecture decouples data sources from clinical decision-support systems. A patient monitor simply emits data events, unaware of the various systems that consume them, such as alerting, charting, and analytics. This allows for rapid innovation and integration of new monitoring technologies.

- When to use it: This approach is critical for intensive care units (ICUs), remote patient monitoring programs, and hospital-wide systems where timely response to clinical changes is paramount. It is essential for any scenario requiring immediate action based on complex, multi-source patient data.

Actionable Takeaways

- Enforce Strict Security: Implement end-to-end encryption and stringent access controls for all event data to comply with HIPAA and protect sensitive patient information.

- Use Clinical Rule Engines: Collaborate with medical staff to define domain-specific rules. A rule engine can consume events and apply this clinical logic to identify significant patterns and trigger alerts.

- Design for High Availability: In healthcare, system downtime can have severe consequences. Implement fault-tolerant infrastructure with redundancy and automated failover to ensure the monitoring and alerting services are always operational.
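A rule engine of the kind described above can be sketched as a list of named predicates evaluated against each patient's latest readings. The rule names, metric names, and every threshold below are placeholders for illustration only and are not clinical guidance.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List

Vitals = Dict[str, float]


@dataclass(frozen=True)
class Rule:
    name: str
    predicate: Callable[[Vitals], bool]


# Placeholder thresholds for illustration only; NOT clinical guidance.
RULES = [
    Rule("low_spo2", lambda v: v.get("spo2", 100.0) < 90.0),
    Rule(
        "tachycardia_with_low_bp",
        lambda v: v.get("heart_rate", 0.0) > 120.0
        and v.get("systolic_bp", 999.0) < 90.0,
    ),
]


class RuleEngine:
    """Keeps the latest vitals per patient and evaluates rules on each event."""

    def __init__(self, rules: List[Rule]) -> None:
        self.rules = rules
        self.latest: Dict[str, Vitals] = {}

    def on_event(self, patient_id: str, metric: str, value: float) -> List[str]:
        """Merge one reading into the patient's state; return names of fired rules."""
        vitals = self.latest.setdefault(patient_id, {})
        vitals[metric] = value
        return [rule.name for rule in self.rules if rule.predicate(vitals)]
```

Because the engine keeps the latest value of each metric, a rule can combine readings that arrive as separate events, such as a heart rate followed later by a blood pressure reading.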

9. Real-Time Advertising and Marketing Campaign Optimization

Digital advertising platforms like Google Ads and The Trade Desk rely on event-driven architecture to manage billions of daily ad impressions, clicks, and conversions. Every user interaction generates an event, which is published to an event stream. These events are consumed by multiple services in real-time to power ad bidding algorithms, update campaign performance dashboards, and trigger personalized marketing actions.

This model enables advertisers to react instantly to user behavior. An event like a user adding an item to a cart can trigger a retargeting ad to be served moments later. This decoupled, parallel processing allows ad platforms to adjust bidding strategies, allocate budgets to high-performing campaigns, and pause underperforming ads automatically, optimizing for ROI within minutes instead of hours or days.

Strategic Breakdown

- Why it’s effective: The architecture separates event collection from event processing. This allows for massive ingestion scalability while enabling different services, such as real-time bidding, analytics, and fraud detection, to operate on the same data stream independently and without delay.

- When to use it: This approach is essential for any system requiring immediate response to user engagement, such as programmatic advertising, real-time personalization, and affiliate marketing. It is ideal for environments where campaign performance data must be fresh to make timely, data-driven decisions.

Actionable Takeaways

- Use Windowed Aggregations: Implement time-windowed aggregations (e.g., 5-minute or hourly windows) on event streams to calculate key performance metrics like click-through rates and conversion counts in near real-time.

- Design Flexible Event Schemas: Create event schemas that can easily accommodate new attribution models, device types, or interaction data without requiring a full system overhaul.

- Create Topic-Specific Streams: Separate events into different streams or topics based on campaign type, region, or event type (e.g., impressions, clicks) to simplify consumer logic and improve data routing efficiency.
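Topic-specific streams usually come down to a routing function that derives a topic name from fields on the event. The `ads.<region>.<type>s` naming scheme and the field names below are assumptions chosen for this sketch, not a convention of any particular platform.

```python
from collections import defaultdict
from typing import Dict, List


def topic_for(event: dict) -> str:
    """Derive a topic name such as 'ads.eu.clicks' from event fields."""
    region = str(event.get("region", "unknown")).lower()
    event_type = str(event["type"]).lower()
    return f"ads.{region}.{event_type}s"


def route(events: List[dict]) -> Dict[str, List[dict]]:
    """Group events by destination topic, preserving arrival order."""
    topics: Dict[str, List[dict]] = defaultdict(list)
    for event in events:
        topics[topic_for(event)].append(event)
    return dict(topics)


ad_events = [
    {"type": "impression", "region": "EU", "campaign": "c-1"},
    {"type": "click", "region": "EU", "campaign": "c-1"},
    {"type": "impression", "region": "US", "campaign": "c-2"},
    {"type": "click", "campaign": "c-3"},
]
routed = route(ad_events)
```

A dashboard that only needs European clicks can then subscribe to a single topic instead of filtering the full firehose.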

10. Cloud Infrastructure Monitoring and Auto-Scaling

Cloud providers like AWS and observability platforms like Datadog rely on event-driven architecture to manage dynamic, large-scale infrastructure. Instead of polling servers for their status, every log entry, CPU utilization metric, or network packet is treated as a discrete event. These events are published to high-throughput message brokers, creating real-time streams of system health data.

Specialized services subscribe to these streams to perform specific tasks. An alerting service listens for error patterns or threshold breaches, triggering notifications. Simultaneously, an auto-scaling service consumes performance metrics like CPU load or request latency. When these metrics cross predefined thresholds, this service emits a command event, such as “add-instance,” to automatically adjust resource allocation. This creates self-healing, elastic infrastructure that adapts to workload changes without human intervention.

Strategic Breakdown

- Why it’s effective: This model decouples monitoring from response. The service generating metrics doesn’t need to know how scaling decisions are made. This allows for complex, rule-based automation that can react to thousands of system events per second, ensuring high availability and cost-efficiency.

- When to use it: Essential for managing modern cloud-native applications, serverless functions, and containerized environments like Kubernetes. It is the go-to approach for any system that requires high uptime and must handle unpredictable traffic spikes without performance degradation or over-provisioning.

Actionable Takeaways

- Correlate Events: Use stream processing to join different event streams, such as application logs and infrastructure metrics, to gain contextual insights for faster root cause analysis.

- Prevent Cascading Failures: Design auto-scaling consumers with circuit breakers or rate limiters to prevent a single faulty event from triggering a massive, system-wide scaling reaction.

- Use Event Sampling: For extremely high-volume metrics, implement intelligent sampling at the source to reduce data volume while preserving the statistical significance needed for monitoring.
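To illustrate how an auto-scaling consumer can avoid overreacting, the sketch below emits an "add-instance" command when CPU crosses a threshold but enforces a cooldown between scale-ups, a simple form of rate limiting. The class name, threshold, and cooldown values are hypothetical.

```python
from typing import Optional


class AutoScaler:
    """Turns CPU metric events into scale-up commands, with a cooldown."""

    def __init__(self, cpu_threshold: float, cooldown_seconds: int) -> None:
        self.cpu_threshold = cpu_threshold
        self.cooldown_seconds = cooldown_seconds
        self._last_scale_at: Optional[int] = None

    def on_metric(self, timestamp: int, cpu_percent: float) -> Optional[dict]:
        """Return an 'add-instance' command event, or None if no action is taken."""
        if cpu_percent < self.cpu_threshold:
            return None
        in_cooldown = (
            self._last_scale_at is not None
            and timestamp - self._last_scale_at < self.cooldown_seconds
        )
        if in_cooldown:
            return None  # suppress repeated scale-ups while capacity catches up
        self._last_scale_at = timestamp
        return {"type": "add-instance", "at": timestamp}
```

Without the cooldown, a burst of high-CPU readings from the same incident would each trigger a new instance, which is the cascading reaction the takeaway above warns about.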

10 Event-Driven Architecture Use Cases Comparison

| Use case | Implementation complexity | Resource requirements | Expected outcomes | Ideal use cases | Key advantages |
|---|---|---|---|---|---|
| Real-Time Payment Processing and Fraud Detection | Very high: sub-ms latency, stateful processing, ML inference | Very high: low-latency infra, GPUs/CPUs for ML, secure networks | Instant approvals/blocks, reduced fraud, full audit trails | Payment gateways, high-volume fintech, fraud-sensitive services | Real-time fraud prevention, improved UX, scalable transaction processing |
| E-Commerce Order Fulfillment and Inventory Management | High: distributed transactions, choreography, event sourcing | Moderate to high: integration with warehouses, message brokers, databases | Accurate inventory, faster fulfillment, end-to-end order traceability | Online retailers, multi-warehouse fulfillment, omnichannel commerce | Decoupled services, real-time inventory sync, easy channel expansion |
| Real-Time User Activity Analytics and Personalization | Medium to high: event capture, enrichment, online ML | High: storage for events, feature stores, model serving infra | Personalized UX, faster insights, higher engagement and retention | Streaming platforms, e-commerce personalization, analytics teams | Live personalization, rapid A/B testing, better user understanding |
| IoT Sensor Data Processing and Predictive Maintenance | High: edge processing, time-series windows, domain-specific models | Very high: high ingest throughput, edge compute, long-term storage | Early anomaly detection, reduced downtime, optimized maintenance schedules | Manufacturing, industrial IoT, equipment monitoring | Prevents failures, improves OEE, enables predictive maintenance |
| Banking and Financial Services Event-Driven Systems | Very high: strict consistency, compliance, secure auditing | High: encrypted messaging, reliable delivery, audit stores | Real-time compliance, consistent account state, faster reconciliations | Banks, fintechs, regulatory reporting systems | Strong auditability, compliance-ready, improved fraud controls |
| Real-Time Recommendation Systems and Content Delivery | High: continuous model updates, feature pipelines, low latency | High: model training compute, feature stores, low-latency serving | Increased content consumption, better discovery, dynamic ranking | Streaming services, content platforms, e-commerce recommendations | Continuous feedback loop, improved personalization, higher engagement |
| Supply Chain Visibility and Logistics Optimization | High: multi-party integration, geospatial windowing, late-arriving data | Moderate to high: location data streams, optimization engines, partner integrations | End-to-end visibility, optimized routes, proactive delay detection | Carriers, 3PLs, large shippers, multimodal logistics | Real-time tracking, route optimization, improved partner coordination |
| Healthcare Patient Monitoring and Clinical Alerts | Very high: clinical validation, high availability, strict controls | High: HIPAA-grade security, redundant infra, audit logging | Faster clinical response, fewer adverse events, improved outcomes | Hospitals, critical care, remote patient monitoring | Early detection, patient safety, regulatory-compliant audit trails |
| Real-Time Advertising and Marketing Campaign Optimization | High: attribution, deduplication, dynamic bidding systems | Very high: extreme event throughput, bidding engines, real-time aggregations | Improved ROI, faster campaign iteration, better targeting | Ad networks, DSPs, large-scale marketing platforms | Immediate campaign optimization, reduced wasted spend, cross-device correlation |
| Cloud Infrastructure Monitoring and Auto-Scaling | Medium to high: metric aggregation, incident correlation, autoscale policies | High: metrics/logs storage, alerting systems, orchestration tools | Self-healing infrastructure, improved uptime, cost-optimized scaling | Cloud providers, large SaaS, platform/DevOps teams | Automatic scaling, faster incident response, operational cost savings |

Putting Event-Driven Insights into Action

Across these diverse event-driven architecture examples, a unifying theme emerges. From instantaneous fraud detection in financial services to dynamic inventory updates in e-commerce, the strategic advantage lies in acting on data the moment it is created. These systems are not merely technical implementations; they represent a fundamental business shift from a reactive, batch-oriented mindset to a proactive, real-time operational model.

The journey we’ve taken through industries like supply chain logistics, healthcare, and digital marketing reveals that event-driven architecture is the key to building resilient, scalable, and highly responsive systems. It allows organizations to decouple services, enabling independent evolution and deployment, which directly translates to increased business agility and a faster time-to-market for new features. The ability to react to a customer’s click, a sensor’s reading, or a market fluctuation in milliseconds is no longer a luxury but a competitive necessity.

Synthesizing the Core Takeaways

As you consider implementing these patterns, it’s crucial to distill the strategic lessons from the examples we’ve analyzed. The success of these architectures hinges on more than just technology; it’s about a strategic approach to data and system design.

Here are the most critical takeaways to guide your implementation:

- Focus on Business Outcomes: The most successful event-driven systems are directly tied to a specific business goal. Whether it’s enhancing customer personalization, preventing fraud, or optimizing supply chain efficiency, a clear objective should drive your architectural decisions.

- Embrace Asynchronous Communication: Decoupling services is the cornerstone of EDA. By communicating asynchronously through events, you build systems that are more resilient to individual component failures and can scale specific services independently based on demand.

- Master Data Consistency: In a distributed, event-driven world, maintaining data consistency can be challenging. Techniques like Change Data Capture (CDC) are invaluable for ensuring that event streams accurately reflect the state of your source systems, providing a reliable foundation for all downstream applications.

- Prioritize a Robust Event Backbone: The success of your entire architecture depends on the reliability and scalability of your event streaming platform. Investing in a solid foundation, like managed Kafka, prevents performance bottlenecks and operational headaches as your event volume grows.

Your Next Steps Toward an Event-Driven Future

Moving from theory to practice requires a clear, actionable plan. The event-driven architecture examples we’ve detailed provide a blueprint, but your journey will be unique. Start small by identifying a single, high-impact business process that can be re-architected. This could be a customer notification system, a real-time analytics dashboard, or an inventory management process.

By focusing on one domain, you can build expertise, demonstrate value quickly, and create a reusable pattern that can be replicated across your organization. The key is to shift from large, monolithic projects to an incremental, evolutionary approach. This strategy de-risks the transition and allows your team to learn and adapt as they go, ensuring a smoother and more successful adoption of event-driven principles. Embracing this paradigm is not just about modernizing your technology stack; it’s about building an organization that can sense and respond to its environment in real time, creating a lasting competitive edge.

Ready to build your own real-time, event-driven systems without the complexity of managing the underlying infrastructure? Streamkap provides a fully managed platform powered by Kafka and Flink, using lossless Change Data Capture (CDC) to stream data from your databases in real time. Start building a more responsive and intelligent architecture today by exploring what Streamkap can do for your organization.